Big Wizards Discover What Some Autonomy Users Knew 30 Years Ago. Remarkable, Is It Not?

April 14, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

What happens if one assembles a corpus, feeds it into a smart software system, and turns it on after some tuning and optimizing for search or a related process like indexing? After a few months, the precision and recall of the system degrade. What’s the fix? Easy. Assemble a corpus. Feed it into the smart software system. Turn it on after some tuning and optimizing. The approach works and would keep the Autonomy neuro linguistic programming system working quite well.

Not only was Autonomy ahead of the information retrieval game in the late 1990s, I have made the case that its approach was one of the enablers for the smart software in use today at outfits like BAE Systems.

There were a couple of drawbacks with the Autonomy approach. The principal one was the expensive and time intensive job of assembling a training corpus. The narrower the domain, the easier this was. The broader the domain — for instance, general business information — the more resource intensive the work became.

The second drawback was that as new content was fed into the black box, the internals recalibrated to accommodate new words and phrases. Because the initial training set did not know about these words and phrases, the precision and recall from the point of view of the user would degrade. From the engineering point of view, the Autonomy system was behaving in a known, predictable manner. The drawback was that users did not understand what I call “drift”, and the licensees’ accountants did not want to pay for the periodic and time consuming retraining.
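For readers who want the “drift” idea in concrete terms, here is a minimal Python sketch of how one might watch precision and recall slide and decide it is time to retrain. It is not Autonomy's method. The names `Scores`, `score`, and `needs_retraining`, the document IDs, and the 0.10 tolerance are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    precision: float
    recall: float


def score(retrieved: set[str], relevant: set[str]) -> Scores:
    """Precision and recall for one query, given sets of document IDs."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return Scores(precision, recall)


def needs_retraining(baseline: Scores, current: Scores,
                     tolerance: float = 0.10) -> bool:
    """Flag drift when precision or recall falls more than `tolerance`
    below the baseline. The tolerance is an assumed value."""
    return (baseline.precision - current.precision > tolerance
            or baseline.recall - current.recall > tolerance)


# Hypothetical data: the documents a subject matter expert judged relevant.
relevant = {"d1", "d2", "d3", "d4", "d5"}

# Right after training, the system returns four documents, all relevant.
baseline = score({"d1", "d2", "d3", "d4"}, relevant)

# Months later, new words and phrases have crept in and the same query
# returns two relevant documents and two irrelevant ones.
current = score({"d1", "d2", "d9", "d10"}, relevant)

print(baseline, current, needs_retraining(baseline, current))
```

In this made-up case both numbers fall well past the tolerance, so the check says retrain. The hard part in real life is not the arithmetic; it is paying someone to keep the list of relevant documents current.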

What’s changed since the late 1990s? First, there are methods — not entirely satisfactory from my point of view — like the Snorkel-type approach. A system is trained once and then it uses methods that do retraining without expensive subject matter experts and massive time investments. The second method is the use of ChatGPT-type approaches which get trained on large volumes of content, not the comparatively small training sets feasible decades ago.

Are there “drift” issues with today’s whiz bang methods?

Yep. For supporting evidence, navigate to “91% of ML Models Degrade in Time.” The write up reports that big brains at “MIT, Harvard, The University of Monterrey, and other top institutions” learned about model degradation. On one hand, that’s good news. A bit of accuracy about magic software is helpful. On the other hand, the failure of big brain institutions to note the problem and then look into it is troubling. I am not going to discuss why experts don’t know what high profile advanced systems actually do. I have done that elsewhere in my monographs and articles.

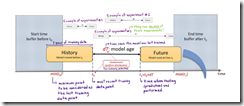

I found this “explanatory diagram” in the write up interesting:

What was the authors’ conclusion other than not knowing what was common knowledge among Autonomy-type system users in the 1990s?

You need to retrain the model! You need to embrace low cost Snorkel-type methods for building training data! You have to know what subject matter experts know even though SMEs are an endangered species!

I am glad I am old and heading into what Dylan Thomas called “that good night.” Why? The “drift” is just one obvious characteristic. There are other, more sinister issues just around the corner.

Stephen E Arnold, April 14, 2023