Digital Reasoning

A Followup Interview with Tim Estes


Digital Reasoning lit up our radar earlier this year. The company’s work for one of the high-profile agencies in the US government was well received. The company’s technology delivered actionable information, PowerPoint-ready graphics, and blistering performance. We were curious and interviewed Tim Estes, the chief executive officer and founder of Digital Reasoning. You can read the full text of that February 2010 interview at http://www.arnoldit.com/search-wizards-speak/digital-reasoning.html. In February 2010, Mr. Estes told me:


On December 6, 2010, the company rolled out its most ambitious upgrade since the firm’s founding in 2000. The firm’s flagship product is Synthesys, and Version 3.0 delivers data fusion and advanced analytics on a par with such vendors as i2 Ltd. and Palantir. With i2 and Palantir involved in a complex legal matter, Digital Reasoning has developed its technology in a way that delivers rock solid stability, support for standards, and original methods for processing structured and unstructured information.

I talked with Mr. Estes about Synthesys Version 3.0 in late November 2010. The full text of my interview with him appears below. Additional information about Digital Reasoning appears in our companion Web logs, Beyond Search and IntelTrax.

Tim, thanks for taking the time to talk with me. What’s Synthesys Version 3 provide to your clients?

Synthesys V3.0 provides a horizontally scalable solution for entity identification, resolution, and analysis from unstructured and structured data behind the firewall. Our customers are primarily in the defense and intelligence market at this point so we have focused on an architecture that is pure software and can run on a variety of server architectures.

What about scaling to handle “big data”?

Great question. Synthesys delivers a “no-compromise” solution for a scalable and robust analytic system giving the customer a truly entity-centric enterprise view. We integrate Cassandra and Hadoop seamlessly as a backend. In fact, some of our customers are probably running some of the largest Cassandra and Hadoop systems in the world right now.

What are some of the new features in Synthesys Version 3?

As far as features go, there are a lot of them. Synthesys Version 3.0 is the culmination of nearly three years of development. Let me highlight several. First, we’ve enhanced and improved the core language processing in dramatic ways. For example, there is more robustness against noisy and dirty data. And we have provided better analytics quality. We have also integrated fully with Hadoop for horizontal scale. As you know, we generally run Cloudera’s distribution CDH. Hadoop is outstanding for the type of analytic task where the model can be local to the data for execution. At that level, we probably have one of the most flexible and scalable text processing architectures on the market today.

Can you elaborate on the analytics?

On analytics, we are a pretty unique animal. First, we get all of the unstructured and structured data into its elemental form and index it. We think this approach is pretty cool. Imagine having real time queries of all of your data based on the relationships and entities in it. In that scenario, we then move to global analytics. Synthesys then starts finding concepts in the data and resolves entities using our patented unsupervised algorithms.

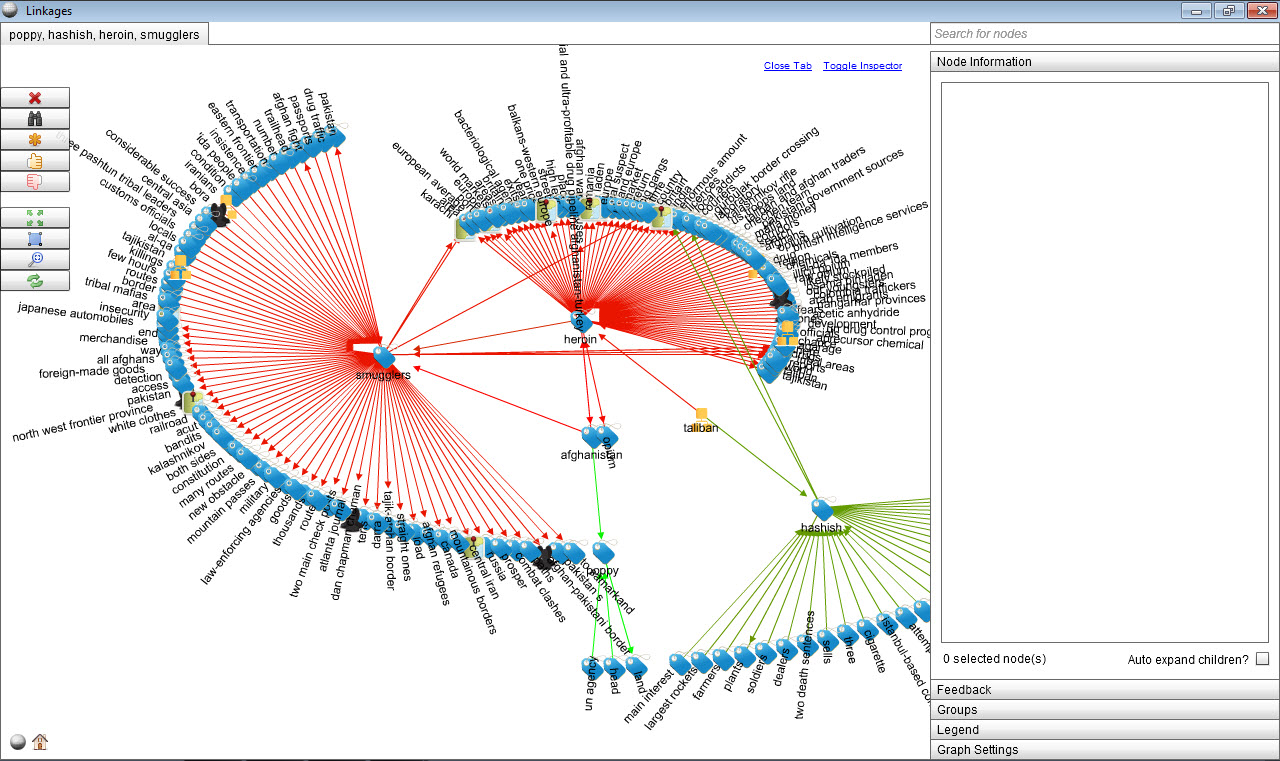

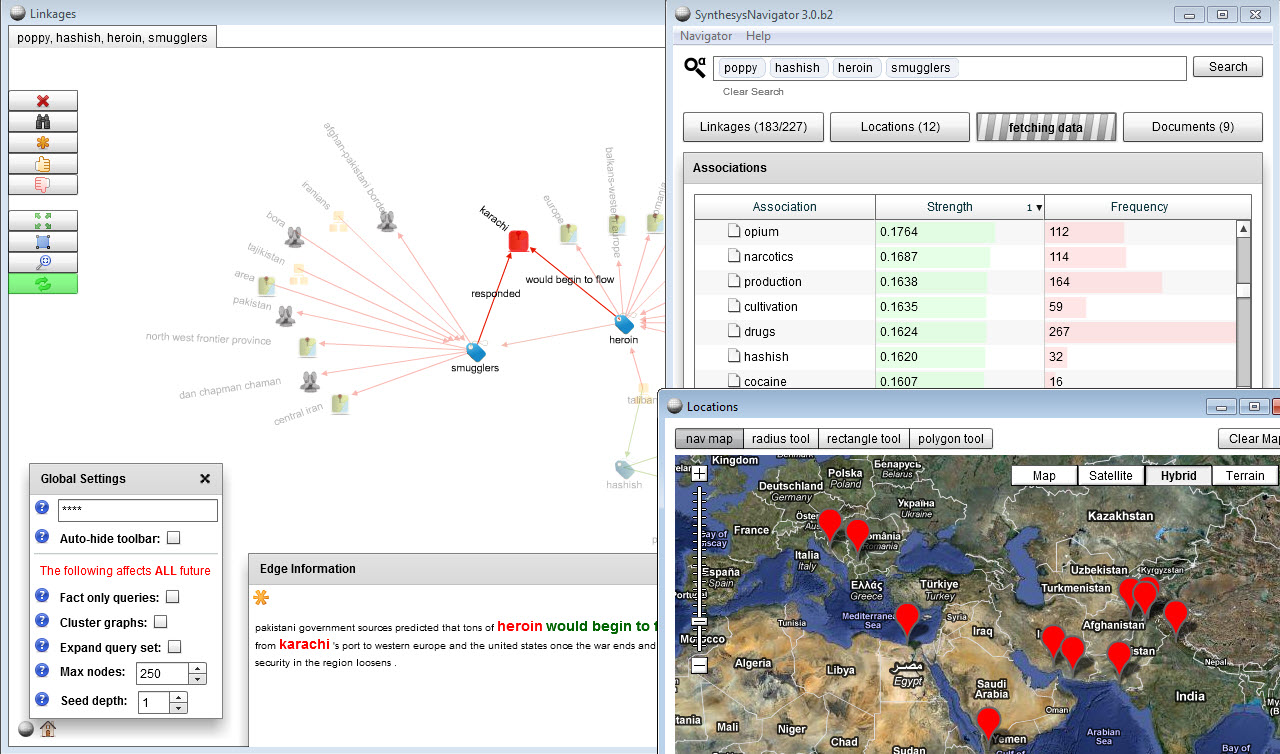

© Digital Reasoning, 2010

Stephen, making this method scale well is something we are particularly proud of. We exercised Hadoop and then found ways to squeeze additional mileage from it as well.

This point is key, however, because concept discovery and resolution are essential to summarization, downstream trending, and inference.

Are there changes in the query component?

On the query side we bring together an MQL-style query language that brings semantic queries to the general web developer.

MQL? Is that the Metaweb Query Language that was designed to follow REST conventions while providing easy semantic queries for end users and third-party apps?

Yes. Digital Reasoning adapted this as closely as possible for our query standard called KBQL (Knowledge Base Query Language). Metaweb was acquired by Google in Summer 2010.

What’s your use of MQL?

We use it to tie into our powerful Link Analysis engine to generate semantic graphs (such as those that Palantir and i2 Analyst’s Notebook have done from well-curated structured data).

Can you give me an example?

Sure, these graphs can range from as simple as “What locations are tied to this person?” to “What are the people tied to these organizations that are linked within a given timeframe or given spatial area?” What used to require massive investments in analyst time to read and distill is now generated directly and in seconds.
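To make the first question concrete, here is a minimal sketch of an MQL-style "query by example" in Python. It is not KBQL, whose syntax the interview does not show. The triple store, the relation names, the people and organizations, and the `answer` function are all invented for illustration. The idea borrowed from MQL is that the caller fills in what is known and leaves an empty list where an answer is wanted.

```python
# Hypothetical link store: (subject, relation, object) triples.
# All names and relations are made up for this sketch.
TRIPLES = [
    ("John Doe", "located_in", "Cairo"),
    ("John Doe", "located_in", "Amman"),
    ("John Doe", "member_of", "Acme Trading"),
    ("Jane Roe", "member_of", "Acme Trading"),
    ("Jane Roe", "located_in", "Beirut"),
]


def answer(query):
    """Fill in the empty-list slots of a query-by-example dict.

    "name" identifies the entity; any key whose value is [] is a
    question of the form "which objects are linked by this relation?"
    """
    name = query["name"]
    result = dict(query)
    for relation, value in query.items():
        if value == []:
            result[relation] = sorted(
                obj for subj, rel, obj in TRIPLES
                if subj == name and rel == relation
            )
    return result


# "What locations are tied to this person?"
print(answer({"name": "John Doe", "located_in": []}))
# prints {'name': 'John Doe', 'located_in': ['Amman', 'Cairo']}
```

A production system would presumably run such a query against a distributed index rather than a Python list, but the shape of the request and response is the point here.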

This transforms the analyst workflow and revolutionizes productivity far beyond what the traditional entity extractors and visualization and workflow tools have done today. We are seeing strong demand from many market sectors for this capability.

Synthesys Version 3.0 provides an honest and real way to address information overload. Synthesys delivers real productivity which goes beyond human augmentation tools.


Are you offering a cloud option?

Soon. We believe there is a broad market of people who are not going to move their data into the cloud (just like our current customers can’t move their data outside of their protected networks).

Synthesys is an on-premises solution, but it has been designed for the private cloud/cluster. We run on Amazon’s EC2 for most of our scale testing. We do have significant experience with that architecture as an option. So the cloud option will be available soon.

Tim, would you back up and refresh my memory about your firm and its clients?

Digital Reasoning is a small but rapidly growing firm that focuses on solving the problem of understanding structured and unstructured data at scale.

The principal markets we serve are the defense and intelligence sectors, which we estimate to be approximately $500 million at this time.

Why defense and intel?

There is a huge need and very limited product answers. Sure, there is a lot of hype (“actionable intelligence,” “big data analytics,” “Platform for Analytics,” etc.), but the reality is often quite different, and the smart buyers and program managers in this market know it.

Users want something that works on their existing data, not simply a demonstration on clean, structured data. Real world data causes most data fusion systems to choke or bog down.

Furthermore, the defense and intel community want solutions that analysts get real value out of and solutions that won’t break at scale. Most previous solutions fail on one or more of these dimensions.

Are there non-government markets for Synthesys technology?

Yes. We are getting a good bit of interest from companies that need what I call “big data analytics” for financial services, legal eDiscovery, health care, and media tasks.

Would you remind me about your background?

My background is ten years of entrepreneurship with this company, and my academic background focused on philosophy of language and semiotics. But I have been working on Synthesys since the wrap up of my third year in school. I have blended my programming and math skills with what is, I suppose, a pretty short academic background. A lot of the background ideas are in our US patent 7,249,117, “Knowledge Discovery Agent System and Method.” But that patent, which I wrote in 2002, was just the beginning. In fact, Synthesys Version 3.0 is really the sixth or seventh generation of the technology that our company has worked through.

That's helpful. Now help me understand the new analytic functions you have included in Synthesys 3. What are some of the new analytics in Version 3?

Absolutely. Synthesys V3.0 is really about three new things: first, entity analysis and resolution on structured and unstructured data; second, link analysis; and third, a fully distributed architecture.

Let’s start with entity analysis. That’s people, places, things, proper nouns, and the like, right?

Yes, and entity analysis is huge. We can have a solid 80 percent or higher solution to transforming tons of unstructured data into entities in relationship (regardless of whether they are specifically categorized or extracted). We know from our clients that this level of entity identification is transformative. Most systems struggle to hit 60 percent and we consistently top 80 percent.

Does this mean that in a corpus you identify most of the entities in unstructured text automatically, at scale, and in real-time content flows?

Yes.

And Synthesys is strict about not throwing away entities or relationships that we can’t categorize immediately. We don’t see traditional extract-transform-load methods as much of an answer to real world problems.

Our view is that analytics is about turning data into a rich pool of features that can be modeled at multiple levels to create real knowledge summarization. Our approach saves time as a result of that leveling up or summarization method. We see this as the only real answer to information overload.

What about relationships among entities?

Our new approach to analytics is married with our link analysis method. We can find linkages between an entity and everything else it is really connected to in the data.

Lots of vendors provide link analysis. Correct?

True, but we can pivot around that very fast. One of the big things we’ve done with Synthesys Version 3 is to implement a compelling graph generation capability on the Cassandra distributed infrastructure. With this approach, we can generate, manage, and rank very large graphs. These graphs can be far bigger than an end user can handle. So that’s where the ranking comes in, the “big picture” that makes sense of a real world “big data” problem.
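The "generate, then rank" idea can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not Digital Reasoning's method: it treats co-occurrence in a document as a link, uses the co-occurrence count as the link weight, and returns only the top few links for one entity. The function names and sample entities are hypothetical, and everything runs in memory rather than on Cassandra.

```python
from collections import Counter
from itertools import combinations


def build_link_counts(documents):
    """Count how often each pair of entities appears in the same document."""
    counts = Counter()
    for entities in documents:
        # Sort so each pair has one canonical ordering.
        for a, b in combinations(sorted(set(entities)), 2):
            counts[(a, b)] += 1
    return counts


def top_links(counts, entity, k=3):
    """Return the k strongest links for one entity, strongest first."""
    scored = []
    for (a, b), n in counts.items():
        if entity == a:
            scored.append((b, n))
        elif entity == b:
            scored.append((a, n))
    # Highest count first; ties broken alphabetically for stable output.
    return sorted(scored, key=lambda item: (-item[1], item[0]))[:k]


# Each inner list is the set of entities found in one document.
docs = [
    ["Person A", "Org X", "City 1"],
    ["Person A", "Org X"],
    ["Person A", "City 2"],
    ["Person B", "Org X"],
]
counts = build_link_counts(docs)
print(top_links(counts, "Person A", k=2))
# prints [('Org X', 2), ('City 1', 1)]
```

The full graph may hold far more links than a user can read; ranking decides which handful reach the screen.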

Isn’t scaling the Achilles’ heel, though? Oracle scales but the client needs hundreds of thousands of dollars for licenses and hardware, so scaling is great in a PowerPoint, but not so good for the Oracle or IBM DB2 shop.

I agree, and our clients want a different, affordable, and reliable approach. Our third component in Synthesys Version 3 is the distributed architecture.

As you well know, the truth is that most other entity resolvers and semantic search capabilities just can’t scale. The ones that appear to scale generally can’t “move”; that is, these solutions are cloud-based and arrive with flashy marketing collateral that glosses over the problems associated with migrating data and the management tasks required by the architecture these solutions run on.

Right. The limitations of Google discussed by the TopHatMonocle blog are an example.

Exactly. Digital Reasoning wanted to get this right with the release of Synthesys V3.0.

We now have software that can scale and move for the semantic and entity resolution problems. Synthesys 3 is not a research lab or demo type product. Synthesys Version 3 is production-ready. We are now deploying Version 3 into critical mission applications.

Without getting into too much of the engineering, what did you do?

I have to simplify. We adopted Hadoop for most of our distributed processing. Thanks to Mike Olson and the team at Cloudera, I had the privilege of speaking at Hadoop World about what we are doing with Hadoop.

And for storage?

For storage, we looked at several options (HBase, Cassandra, and Voldemort, among others). After our analysis, we chose Cassandra for several reasons in mid 2009. We think that was definitely the right choice for what we are doing. The Riptano team, which leads Cassandra, has been great to work with. As a hybrid user, we keep an eye out for the best and feel privileged to work closely with these talented teams.

For us, the successful utilization of these two technologies in Synthesys means we are horizontally scalable with the backing of two cutting edge massive analytics technologies that also have a strong Open Source following. Synthesys has made a “scale out” model viable for rich entity analytics and link analysis. We think our approach is innovative, particularly in an off-the-shelf offering.

What specific problems will these and the analytic functions address? For instance, can you process social content such as the Twitter firehose with more than 8,000 tweets a second?

Yes. Given enough hardware and a backbone and pipe, that’s not as big a deal as it sounds. For one thing, a tweet is about one to two sentences as a maximum. If we are processing a few documents a second and the average five kilobyte document has about 30 to 40 sentences, that would mean that a 200 to 400 node cluster should in theory be able to handle the Twitter firehose. I think the issue would be the pipes and the networking and scheduling of the analytic jobs. We have several clients asking us about exactly this type of Synthesys Version 3 application.
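The arithmetic behind that estimate can be checked with a back-of-envelope calculation. The sketch below assumes that the "few documents a second" figure is a per-node rate and takes the conservative end of it (one document per second per node); the interview does not state either point, and the function name and parameters are invented. Both workloads are converted to sentences per second so they can be compared.

```python
import math


def nodes_needed(tweets_per_sec, sentences_per_tweet,
                 docs_per_sec_per_node, sentences_per_doc):
    """Estimate cluster size by comparing sentence throughput."""
    demand = tweets_per_sec * sentences_per_tweet          # sentences/sec arriving
    per_node = docs_per_sec_per_node * sentences_per_doc   # sentences/sec one node handles
    return math.ceil(demand / per_node)


# 8,000 tweets/sec, 1 to 2 sentences per tweet, 40 sentences per document,
# and an ASSUMED rate of 1 document per second per node.
low = nodes_needed(8000, 1, 1, 40)
high = nodes_needed(8000, 2, 1, 40)
print(low, high)
# prints 200 400
```

Under those assumptions the result matches the 200 to 400 node range quoted above. A faster per-node rate would shrink the cluster proportionally, which is why the network and job scheduling, not raw processing, are flagged as the likely bottleneck.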

Is there an API for Synthesys Version 3?

Yes, there are APIs for client developers to use Synthesys services in support of their applications (KBQL, REST, and Java).

But what about the real world use cases for Synthesys Version 3?

The main features I mentioned like entities and relationships allow the software to do a lot of the heavy lifting of figuring out all of the facts and relationships in the data and linking them. For example, in a specific financial deal, Synthesys 3 can identify the who and the what, map the connections, and deliver the key insights. The system resolves those different entities where it can so as to show a more summarized view.

This is the way to address analysts and decision makers having too much data to read. Intelligence analysts, marketing professionals, and lawyers – each of these groups has a similar problem that Synthesys Version 3 solves.

Instead of clicking on links and scanning documents for information, Synthesys Version 3 moves the user from reading a ranked or filtered set of documents to a direct visual set of facts and relationships that are all linked back to the key contexts in documents or databases. One click and the user has the exact fact. Days and hours become minutes and seconds.

Synthesys, if I understand you, handles big data and reduces the cycle time throughout the process. Am I stating this correctly?

Yes, that’s what Synthesys delivers. While there are a lot of other things Synthesys Version 3 can do, we have learned that our system can chop 70 to 90 percent of the time required using more traditional methods in the analytic process.

I know that there are many analytic tools available from large firms like IBM SPSS and smaller specialists like Megaputer. Where does your system fit into this competitive landscape?

We both know that there are a lot of tools and technologies in the market today. In order to evaluate those offerings there are four key questions to consider:

- Do you have to pre-model your data; for example, train frames for extraction, map them to an ontology, etc.?

- Does the system scale?

- Does the system handle real world structured and unstructured data?

- Does the system integrate with mainstream technologies for “big data” infrastructure?

The notion of building the model from the data versus through massive knowledge engineering and consulting is the first major piece.

If the services investment is substantially greater than the software, then that’s pretty suspect. We can’t “Mechanical Turk” our way out of the information overload problem. We aren’t creating enough people with the requisite information technology skills.

One of the founding ideas of Digital Reasoning is to focus on turning services into software instead of software into services. Many of the firms providing data fusion and related services are focusing on selling consulting and engineering services, not delivering software that can be easily deployed and used.

Our approach at Digital Reasoning is to deliver software, which goes counter to some of the conventional wisdom. Think about what’s happening with the existing vendors’ methods. Users have to process more and more data. Then the budgets are exhausted, the systems don’t deliver, and the client gets farther and farther behind understanding the data, both in terms of real time and historical perspectives.

What about scale?

I think I’ve already covered that relatively well. Not many of the offerings that can handle structured and unstructured data at an entity level really scale at all. Most of today’s solutions work in silos and on set scale data. Vendors have layered on machine learning and statistical capabilities. But the scaling problem has, for the most part, been pushed aside. Synthesys Version 3 scales.

Structured data are important but the problem is the unstructured content. What is Synthesys Version 3’s angle on email, Web content, Facebook posts, and general office content?

You have identified the key problem in many organizations. The key thing is to make sure it really handles unstructured data. Sticking data into Lucene and providing keyword search is not really analytics. That exercise just makes unstructured information retrievable via a laundry list.

Lucene is solid, but it wasn’t designed for entity analytics. Solr has brought it further along, but that’s all metadata stuff. At some point, you have to get into the hard problems to see what’s really going on inside your data.

Synthesys acquires, processes, and makes structured and unstructured data available for the functions I have described. As you have said, Digital Reasoning is one of the companies that is going “beyond search”.

Thanks. Rip-and-replace is just not an option in many organizations today. But can I integrate Synthesys Version 3 into what I already have?

Our architecture makes this possible. Most vendors’ products are tagged with an “open source” label. I suggest that organizations do some investigating to see if these “open” systems can really fit into the mainstream of what’s going on with Big Data.

As I said, for Digital Reasoning, we use Hadoop and NoSQL. We think Cassandra is definitely one of the NoSQL options with significant momentum. By picking the open architecture infrastructure, Synthesys is riding a big wave and getting the most out of it.

The other players – for instance, EMC Greenplum, IBM Netezza, and Oracle Exadata – in the space have their role but mostly in big data warehousing and standard querying. Our interest is in the application space; specifically, answering questions like “What can you learn from your data with an off the shelf system (where you don’t have to do custom algorithms for your enterprise and all of the expensive lessons learned that come with that)?”

We think a lot of those problems are common and underserved; for example, pulling out all of the facts from unstructured data at scale, linking them, and then resolving the entities. That’s where we fit. Right now we are pretty unique in that positioning and we can

Technically, we are partnered with Riptano and Cloudera in taking on some of these large scale deployment issues. In the Defense and Intelligence Space we are partnered with several firms and hold a lot of good relationships there. Most of the service integrators don’t get into building products like ours, so the pairing is a good fit.

There is a great deal of buzz about Palantir. I see the company as delivering "eye candy". Is Digital Reasoning delivering digital cotton candy or industrial strength capabilities?

There is the old saying that the great thing about “the demo” is that it sells. The problem is that the demo doesn’t scale and the results in practice rarely look that pretty.

Like big hat, no cattle?

Yep, that works for quite a few data fusion companies.

But our approach is different. I’d like to think we are bringing a real, maintainable architecture that will scale to the problem.

The realness of it is to recognize that we can’t have humans substantially in the loop on the data ingest, index, and first pass analysis process. It’s strictly a matter of scale. No one has enough developers to write custom parsers, and often no one understands the data well enough to make an a priori ontology that will hold up in real world use.

A lot of the work around Palantir is based on the notion that a different work flow process and tools can create a dramatic enhancement in productivity. In my experience, without solving the hard problem of understanding the data algorithmically, work flow and other management activities add to costs and time.

Many vendors tiptoe around the time and cost issues. Demos work because they are like a Dancing with the Stars routine. There is a spot light, a short performance time, and lots of distractions. The stage craft passes for reality. Reality is different.

What about a use case? I know you may not be able to mention your government clients by name.

One key use case that is unique to Digital Reasoning is our use of entity analytics to determine key related terms or semantic synonyms without having an a priori alias list.

Let me see if I understand you. You can identify an alternate name for a person of interest on the fly, right?

Yes. When we present our Synthesys technology, we ingest some unclassified information from an open source. For example, we can pull in information from countries located northeast of Cairo, Egypt.

We then let Synthesys generate a list of entities and aliases. We can do a number of things, including aliases of particular individuals. We handle name variants based on the patterns in the data, not string similarity.
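One way to picture matching on "patterns in the data, not string similarity" is to compare the contexts in which two names appear. The sketch below is a generic distributional-similarity illustration, not the patented Synthesys algorithm, which the interview does not describe. The names, sentences, and function names are all invented. Two names that share no characters can still score as likely aliases if they keep showing up alongside the same words.

```python
import math
from collections import Counter


def context_vector(name, sentences):
    """Count the words that share a sentence with `name`."""
    vec = Counter()
    for tokens in sentences:
        if name in tokens:
            vec.update(t for t in tokens if t != name)
    return vec


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[word] for word, count in u.items())
    norm = (math.sqrt(sum(c * c for c in u.values()))
            * math.sqrt(sum(c * c for c in v.values())))
    return dot / norm if norm else 0.0


# Invented, pre-tokenized sentences. "Falcon" is a made-up nickname that
# looks nothing like "Halloran" as a string.
sentences = [
    ["Halloran", "met", "supplier", "in", "port"],
    ["Falcon", "met", "supplier", "in", "port"],
    ["Halloran", "wired", "funds", "to", "supplier"],
    ["Falcon", "wired", "funds", "to", "supplier"],
    ["Mercer", "bought", "bread", "in", "market"],
]

halloran = context_vector("Halloran", sentences)
falcon = context_vector("Falcon", sentences)
mercer = context_vector("Mercer", sentences)

print(cosine(halloran, falcon) > cosine(halloran, mercer))
# prints True
```

A string-similarity measure would never pair "Halloran" with "Falcon"; the shared contexts do. Real data is far noisier, so a score like this would be one signal among several rather than a verdict.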

So you are going beyond key words?

Right. Way beyond key words. We can process real world data and generate outputs that an analyst or other professional can use “right now.”

This relatively simple analytic use case is immensely valuable to pick up key links and guard against false negatives. And it’s far more than many of the companies such as Attensity, i2, Palantir, Inxight, among others, can do. Most systems need humans up and down the work process who have to tag and sort data.

Synthesys just outputs the entities and the aliases with whatever other Synthesys elements the client requires.

Most of the "intelligence" services and products I review are difficult to operate. In fact, some like SAS and SPSS require a trained and dedicated programmer to get even a simple change to a standing report. What have you done with Synthesys 3 to address usability?

The key component of usability is understanding who the user is and what the role of the system is in a larger architecture. For instance, the kinds of users of Synthesys are rank-and-file professionals, including administrators, application developers, and analysts.

Administrators need easy-to-use tools for basic tasks like monitoring data loading and intuitive, more advanced tools for harder things like manipulating an alias report.

Synthesys provides an intuitive graphical user interface for most functions. A user can load data and watch the progress of the task. There’s strong logging for monitoring the usage of the system by clients over time.

Administrators also have diagnostic tools like our “KBQL Sandbox” to test queries against the backend. There’s a graphical interface for this activity.

How much training is required by a user?

Users can access the system with little or no recourse to our provided training lessons. For those who want to perform certain operations, such as creating custom categories for entity extraction, we have a walk through plus documentation.

We designed our system for analysts, not computer science majors. We have incorporated powerful supervised learning technology to provide the information that’s needed.

The system also has a clean and simple XML Schema Development service for mapping structured data into its limited high level constructs. Administrators write simple XSLTs or edit existing XSLTs to get their data into the system.

And XSLT is?

Oh, sorry. Extensible Stylesheet Language or XSL and the “T” means transformation.

Thanks. Are you a platform?

Yes. And we are the common entity analytics layer for the organization. For the most part, Synthesys really isn’t an application. It’s a platform for applications that are entity-oriented. It’s also able to take input from other engines and applications. Synthesys is what I call an “entity blackboard.”

Would you give me an example?

Sure, some customers want to use a third party entity extractor or NLPs, and that’s fine with us. The blackboard effect is cool for advanced applications that want to generate custom ensemble algorithms to do better ranking and prediction.

What is the typical deployment time from the moment a person signs a deal with Digital Reasoning until the system is outputting actionable intelligence?

We generally have the system up in a week. The amount of time until analytic results are available is a function of the amount of data being processed and the hardware resources being used. Normally, we can read millions of documents and records in hours or days on modest hardware. Then a few days of user training with the client leads right to actionable results.

Most competitive systems do not take into account entities in unstructured data without a lot of manual effort up front to train or set up. I have heard that some well known systems take six months or more to set up and tune. We’re quite agile.

Would you walk me through the life cycle of a deployment in a commercial enterprise?

Once an enterprise has decided to deploy Synthesys, one of our engineers assists with the deployment onto an in-house system. This is typically seven or more systems made up of single or dual quad-core blades. The number of blades is related to the volume and scale of the data. We mostly run on Linux though we also use/support Windows and OS X. We install Hadoop (a vanilla version or Cloudera’s CDH3) and Cassandra. With these components installed, we then deploy Synthesys. We configure content intake and the various analytic jobs to the cluster.

In most cases, these steps take one to three days, depending on the client’s specific requirements. Also, we might work with the client to assist getting their data into Synthesys (though many write and maintain their own XSLTs with only a few pointers from us).

Once the data are available, we bulk load historical content. We work with the client to set up the update schedule. We try to get as close to real time as the data permit. Once the data have been ingested and analyzed, results are accessible via the Synthesys Navigator. For some clients, we assist consultatively with the applications and reports.

When you look forward nine to 12 months, what will be the impact of open source software on proprietary systems? Do you have an open source component in Synthesys 3?

Since the founding of Digital Reasoning, I’ve said that someday all software will learn, and the rest will be commoditized. I see that more and more every year. The largest systems for Big Data Analytics are increasingly running Open Source. Most of the NoSQL solutions are Open Source. We are committed to Open Source both on the backend side and working closely to recommend and communicate with leaders on the Cassandra and Hadoop projects along with other projects that we are looking at including. We are also considering Open Sourcing components of our system that can foster better adoption and development – particularly in the analytic tool space. Most of our proprietary components are in analytics algorithms that we’ve had internally in various versions for years and years. We invent new ones too, but our protected space is in the algorithmic techniques and tunings used to make sense of data.

If a reader wants more information about your new version of Synthesys 3, where should that person look?

Our Web site at www.digitalreasoning.com is a good resource. We have more detailed information on Synthesys V3.0 as well as a copy of a technical overview white paper on Synthesys. If they are looking for a deeper dialogue, they can write us at info@digitalreasoning.com, and we can start a conversation.

ArnoldIT Comment

Digital Reasoning Systems (www.digitalreasoning.com) solves the problem of information overload by providing the tools people need to understand relationships between entities in vast amounts of unstructured and structured data.

Digital Reasoning builds data analytic solutions based on a distinctive mathematical approach to understanding natural language. The value of Digital Reasoning is not only the ability to leverage an organization’s existing knowledge base, but also to reveal critical hidden information and relationships that may not have been apparent during manual or other automated analytic efforts. Synthesys™ is a registered trademark of Digital Reasoning Systems, Inc.

Stephen E. Arnold, December 7, 2010