Hot Neuron

An Interview with Dr. Bill Dimm

Bryn Mawr is a suburb of Philadelphia. In the midst of gracious homes and enticing restaurants, Hot Neuron, a specialist firm in information retrieval technologies and services, develops next-generation content processing systems. Founded in 2000, the company's focus is on developing innovative methods and algorithms that help people find and organize information that will make their companies more productive. Dr. Bill Dimm holds a degree in theoretical elementary particle physics from Cornell University, which distinguishes him from his counterparts at some of the companies working in search and content processing. Prior to setting up his own firm, Dr. Dimm worked in the interest rate derivatives research group at BNP/Cooper Neff, Inc. doing mathematical modeling and computer programming. Beyond Search spoke with Dr. Dimm in his office in Bryn Mawr on March 21, 2010.

What's a "hot neuron"?

After finishing my Ph.D. in theoretical elementary particle physics at Cornell, I got a job doing derivative securities research (applied math and computer programming) for a large bank. As the Internet bubble was inflating, I felt like I was missing out on all of the fun, so I left my job to start Hot Neuron. My intention was to build a software company that would focus on inventing novel algorithms and mathematical models, rather than developing cookie cutter software. That was the motivation for the name. Brain cells are neurons, so I thought about calling the company Hyper Neuron to indicate that we're a company that thinks a lot. A squatter already had the domain name, so I went with Hot Neuron. Crazy company names were all the rage back then.

Why search and content processing? Some of the people I have interviewed think theoretical physics is easier than content processing.

When contemplating business ideas, the first step for me has always been to try to think of things that would be useful to people, and the second step is to consider whether it is something that fits well with my interests and abilities. When I left my old job, I had two ideas in mind for my business; one was related to derivative securities, and the other had no specific connection to my expertise. After doing more research, I ended up abandoning both of them. Along the way, I was spending a lot of time monitoring the websites of all of the Internet business publications (there used to be so many!) to stay on top of the industry. It occurred to me that it would be really useful if people could find all of the new articles on a topic from one location instead of hopping from site to site, which was the inspiration for launching MagPortal.com back in 2000.

Will you give me more detail about your MagPortal.com service?

My original plan for MagPortal.com was to monitor the articles users clicked on and automatically recommend other articles that might interest them. That struck me as an interesting intellectual puzzle to work on. Unfortunately, a big firestorm broke out over privacy issues at the time, thanks to DoubleClick. Thinking that people might get upset by having their reading habits monitored, I scaled the idea back to putting a “similar articles” link next to each article.

How does the math function in this service?

The mathematical model I cooked up to identify similar articles worked better than anything I found in books or academic journals, and I planned to turn it into a stand-alone product someday, but I really wasn't sure what people would use it for. Several years later, I got a call from a law firm that had spent the last two years hunting unsuccessfully for software that could cluster the millions of documents they needed to review during the discovery phase of litigation. They read about the similar articles algorithm on our corporate site and asked if it was something we could do. That was the inspiration for our Clustify software. The “similar articles” algorithm was just a tiny part of the innovation necessary to build something as powerful and flexible as Clustify, so the road from that phone call to the final product was a pretty long one. But, the need for such a product was clear, and it was a case where a smarter algorithm could make a world of difference, so I thought it was worth pursuing.

How can a licensee implement the MagPortal.com functions on one's Web site?

Our MagPortal.com feed clients can easily embed an article listing or search engine output anywhere on the page they want. Actually mixing the data about individual articles with other data would be considerably harder, since different sources tend to have different output fields, sorting conventions, etc.

What are the salient features of the system you have developed?

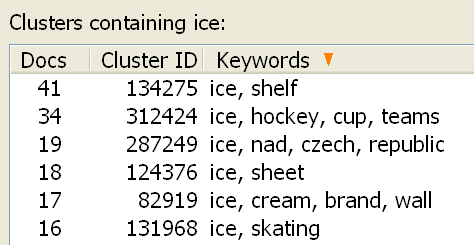

Clustify analyzes the text of your documents and groups related documents together into clusters. Each cluster is labeled with a few keywords to tell you what it is about, providing an overview of what the document set is about, and allowing you to browse the clusters by keyword in a hierarchical fashion. The aim is to help the user more efficiently and consistently categorize documents, since he or she can categorize an entire cluster or a whole group of clusters with a single mouse click. Our approach to forming clusters is shaped by that goal. We use a modified agglomerative algorithm to ensure that the most similar documents get clustered together, and we allow the user to specify how similar documents must be in order to appear in the same cluster. By choosing a high similarity cutoff, the user can be confident that it is safe to categorize all documents in the cluster the same way. Clustify can also do automatic categorization by taking documents that have already been categorized, finding similar documents, and putting them in the same categories.
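To make the idea of a similarity cutoff concrete, here is a minimal sketch of a textbook agglomerative clustering loop that stops merging once the best available merge falls below a user-chosen cutoff. It is not Clustify's algorithm, which Dr. Dimm describes as a modified and much faster approach. The bag-of-words vectors, the cosine measure, the complete-linkage rule, and all function names are assumptions chosen for illustration.

```python
import math
from collections import Counter
from itertools import combinations


def vectorize(text):
    """Turn a document into a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster(docs, cutoff):
    """Naive complete-linkage agglomerative clustering with a cutoff.

    Returns clusters as lists of document indices. Under complete
    linkage, every pair of documents inside a cluster has similarity
    of at least `cutoff`.
    """
    vectors = [vectorize(d) for d in docs]
    clusters = [[i] for i in range(len(docs))]

    def linkage(c1, c2):
        # Similarity of the least similar pair across the two clusters.
        return min(cosine(vectors[i], vectors[j]) for i in c1 for j in c2)

    while len(clusters) > 1:
        # Find the most similar pair of clusters (x < y always holds).
        best_sim, x, y = max(
            (linkage(clusters[x], clusters[y]), x, y)
            for x, y in combinations(range(len(clusters)), 2)
        )
        if best_sim < cutoff:
            break  # no remaining merge satisfies the cutoff
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]
    return [sorted(c) for c in clusters]
```

Raising `cutoff` yields more, tighter clusters; lowering it yields fewer, looser ones. That is the trade-off behind the remark that a high cutoff makes it safe to categorize a whole cluster at once.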

What differentiates your approach from others that are available to prospective licensees?

A critical differentiating feature is that Clustify can handle large document sets. If you are doing manual categorization, and you trust the software enough to choose categories by reviewing only one document in the cluster, the amount of labor required is proportional to the number of clusters. Without clustering, it would be proportional to the number of documents. So, the number of documents per cluster is a measure of how much clustering leverages your manual labor. Typically, the number of documents per cluster increases with the size of the document set, so clustering has the greatest benefit for large document sets. Software that can't handle large document sets fails when it is needed most, and when the largest benefit is available.

Could I use your system for eDiscovery or a similar activity where I don't know exactly what's in the corpus?

That's a good question. Yes. Another important feature, especially for e-discovery, is that Clustify allows you to choose what you mean by “related” documents. It does both conceptual clustering (grouping documents that are about the same topic), and near-duplicate detection (identifying documents that contain chunks of identical text). Most e-discovery tools do one or the other, requiring the user to buy and learn multiple pieces of software, and deal with different inputs and outputs. With Clustify, you simply flip an option to switch between conceptual clustering and near-dupe, and cluster browsing, categorization, I/O, etc. all work in the exact same way.

There are a ton of small things that can make a big difference in whether or not clustering software works for a particular application. For example, Clustify is purely mathematical with no dictionary or thesaurus, so it can adapt to specialized document sets or (some) foreign languages. It has a really good algorithm for deciding which words are important to pay attention to, and which ones should be ignored, but it also gives you the flexibility to override it selectively or completely. It allows you to boost the weight of selected words, so you can force clusters to form around topics that are important to you. The output files are very simple, so you can easily import them into other systems. It is very easy to run a calculation with default parameters in the Clustify GUI and get a good result, but you also have the option to tweak several parameters if you want more control. And, you can skip the GUI completely, invoking Clustify in an automated way from a script or other program.
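The difference between the two meanings of “related” can be illustrated with two crude similarity measures. This is only a sketch under stated assumptions: word shingles with Jaccard overlap stand in for near-duplicate detection, and vocabulary overlap stands in for conceptual similarity. Neither is claimed to be what Clustify computes, and the function names and the `mode` switch are hypothetical.

```python
def shingles(text, k=3):
    """Set of overlapping k-word sequences (shingles) in a document."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard overlap of two sets; 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_dup_similarity(a, b, k=3):
    """High only when the documents share runs of identical text."""
    return jaccard(shingles(a, k), shingles(b, k))


def conceptual_similarity(a, b):
    """Crude topical overlap: compares vocabularies, ignoring word order."""
    return jaccard(set(a.lower().split()), set(b.lower().split()))


def similarity(a, b, mode="conceptual"):
    """Hypothetical single entry point with a switchable notion of 'related'."""
    if mode == "near_dup":
        return near_dup_similarity(a, b)
    if mode == "conceptual":
        return conceptual_similarity(a, b)
    raise ValueError(f"unknown mode: {mode}")
```

Two documents with the same vocabulary in a different order score high in the conceptual mode and zero in the near-duplicate mode, while a contract with one word changed scores high in both. Because both modes return a number on the same scale, the clustering and categorization steps downstream can stay the same whichever mode is selected.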

What's the content processing speed of your system?

It's very easy to be misleading about “throughput” numbers. If you use a standard agglomerative clustering algorithm from a textbook, you will be comparing every document to every other document to figure out which documents are most similar to each other. So, the calculation time is proportional to the number of documents squared. Clustering a million documents would take 100 times longer than clustering 100,000 documents, so a throughput number based on 100,000 documents will be ten times larger than one based on a million documents. Clustify scales better than that, but it still isn't meaningful to quote a rate without specifying details about the document set.

A few years ago, we downloaded Wikipedia, extracted the text from the bodies of the articles, and removed the junk articles (stub articles, etc.), leaving 1.3 million articles with a total of 3.5 GB of text. On a fairly typical desktop computer (2.0 GHz CPU, 7200 rpm SATA drive), Clustify can do conceptual clustering of that data set in 20 minutes on Linux or 50 minutes on Windows. It consumes only 750 MB of memory to do that calculation. The only limit on the number of documents is the amount of available memory. We estimate that a calculation that takes an hour or two for Clustify would take about a year for the standard agglomerative algorithm.
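The scaling argument for the textbook algorithm can be checked with a few lines of arithmetic. The sketch below assumes only that running time is proportional to the number of document pairs compared. The function names are illustrative, and the figures describe the standard all-pairs approach, not Clustify.

```python
def pairwise_comparisons(n_docs):
    """Number of distinct document pairs an all-pairs algorithm compares."""
    return n_docs * (n_docs - 1) // 2


def time_ratio(n_small, n_large):
    """How many times longer the larger set takes, assuming time ~ pairs."""
    return pairwise_comparisons(n_large) / pairwise_comparisons(n_small)


def throughput_ratio(n_small, n_large):
    """Documents-per-unit-time on the small set relative to the large set."""
    return time_ratio(n_small, n_large) * n_small / n_large


small, large = 100_000, 1_000_000
print(f"time ratio:       {time_ratio(small, large):.2f}")
print(f"throughput ratio: {throughput_ratio(small, large):.2f}")
```

Ten times as many documents means roughly 100 times as much work, so a documents-per-minute figure measured on the smaller set looks about ten times better than one measured on the larger set.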

That's impressive. What's the licensing approach you offer your customers?

We have two licensing models. One option is the traditional “purchase” model, where you pay a bunch of money up front for a site license that you can use forever (pricing depends on the type and size of the company, with law firm pricing starting at $40,000 and e-discovery service provider pricing starting at $80,000). The other option, which is better for companies with smaller data sets, is to pay based on the amount of data they process, with no up-front fee.

Isn't the search and content processing sector crowded? I track about 300 firms and there are more contacting me each week.

Well, the amount of data and the number of customers for such products and services is always increasing, too. For companies that do original research and adapt their products to their customers' needs (like us, of course), there is a fair amount of opportunity for differentiation–customers really need to try the products and see what works in their situation. The companies that just pull an algorithm out of a book or mimic another product will be left competing on price. Of course, I wouldn't mind having fewer competitors ;-)

Can you highlight a function that catches the attention of some of your prospects?

Customers react to different features and functions. The needs are often quite particular. But our biggest focus at the moment is integrating with more systems used by law firms in order to expand our potential customer base (law firms aren't our only market, but they are constantly processing large, new data sets, so they are a big market for us). Longer term, we'll be adding language detection, so it will automatically cluster different languages separately. We also plan to add multi-threading (as fast as Clustify currently is, it only utilizes one processing core).

Do you support other vendors’ systems or are you a stand-alone solution?

Absolutely. We pride ourselves on our ability to mesh with what the customer is now using. As a stand-alone product, Clustify lets you launch the GUI and simply point it at a directory containing a bunch of documents you want to cluster. It can also be used with e-discovery document management tools where it can talk to the database to fetch document content, export cluster information as additional database fields, and pass tag (categorization) data back and forth. Clustify can also be run without the GUI, so clients can launch it in an automated way from a script or other program, and grab the output and pull it into another system without user intervention.

What's your view on semantics and natural language processing? Are these technologies ready for prime time?

I'm not an expert on the state of NLP, so I can't really say if it is “ready for prime time.” I will say that such tools can be invaluable in some circumstances where the desired information simply doesn't relate to a simple set of keywords, but they also add an extra layer of complexity where errors can occur. If a user enters a few keywords into a standard search engine, he or she understands where the result comes from–it is the set of all documents containing the desired words. If the quality of the result is poor, he or she can add or subtract search terms to move toward a better result. With semantic or NLP search, it's harder for the user to know what he or she is really getting. When it works it can work great, but when a strange result occurs it can be hard to know where it came from or how to improve it.

A number of vendors have shown me very fancy interfaces. The interfaces take center stage and the information within the interface gets pushed to the background. Are we entering an era of eye candy instead of results that are relevant to the user?

I hope not, but it's not really up to me, sadly. Our focus with Clustify has been to enable people to get work done as quickly and simply as possible. Making a simple user interface that allows people to do everything they need to do is quite a bit of work. We display cluster data as simple lists that the user can navigate with the mouse or the keyboard. It is so simple that people can learn to use it in a matter of minutes, and it works well even for very large data sets. Users can categorize documents using keyboard shortcuts without ever touching the mouse, which helps them get work done quickly. Some competitors have created elaborate user interfaces with circles drawn all over the screen. It takes a significant amount of training for people to learn such tools. How much does pushing the mouse around to click on circles slow the user down? Can you really work with large data sets in systems like that? Hopefully, customers think about how a tool will help them get work done, instead of being swept away by pretty pictures in a brochure.

What is it that you think people are looking for from semantic technology?

That really depends on which people you are talking about. If you are talking about search experts and power users, they are probably looking for richer interfaces, and would appreciate having more controls, more filters, and more options. But, the average user just wants to get whatever they are looking for with as little work as possible. If someone wants to find articles about business, they can type “business articles” into Google and get a perfectly good answer. To have wide appeal, semantic technology needs to be that simple. Better content needs to happen automatically, without the user needing to do a lot of extra clicking or scrolling to find the right thing. A richer interface needs to be so simple to use that people don't have to spend any time learning it.

What are the hot trends in search for the next 12 to 24 months?

Hot trends come and go so quickly that I don't think they should be of much interest to someone who is looking to build a company (rather than just looking to cash out quickly). The long-term trend is that the amount of information is increasing and tools to cope with that will be more and more important. I'm focused on improving Clustify to meet our customers' needs.

Where can people get more information?

I suggest those who want information navigate to the Clustify web site. We also provide information at Hot Neuron.

ArnoldIT Comment

Hot Neuron's system generates useful results. The company lacks the profile that Microsoft has for its search and content processing systems, but big does not mean better. The Hot Neuron systems respond quickly and deliver useful results. An organization seeking a method for clustering content and adding value to existing information systems will benefit from a test drive of the Hot Neuron system. We were impressed.

Debra E. Johnson, March 23, 2010