Are Experts Misunderstanding Google Indexing?

April 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.


Google is not perfect. More and more people are learning that the mystics of Mountain View are working hard every day to deliver revenue. In order to produce more money and profit, one must use Rust to become twice as wonderful as a programmer who labors to make C++ sit up, bark, and roll over. This dispersal of the cloud of unknowing obfuscating the magic of the Google can be helpful. What’s puzzling to me is that what Google does catches people by surprise. For example, consider the “real” news presented in “Google Books Is Indexing AI-Generated Garbage.” The main idea strikes me as:

But one unintended outcome of Google Books indexing AI-generated text is its possible future inclusion in Google Ngram viewer. Google Ngram viewer is a search tool that charts the frequencies of words or phrases over the years in published books scanned by Google dating back to 1500 and up to 2019, the most recent update to the Google Books corpora. Google said that none of the AI-generated books I flagged are currently informing Ngram viewer results.

Thanks, Microsoft Copilot. I enjoyed learning that security is a team activity. Good enough again.
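Why would a few junk books matter to Ngram Viewer? Its charts are, roughly, the share of all n-grams in a year's books that match a phrase. The Python sketch below is a toy model built on that assumption: whitespace tokenization, no smoothing, and a made-up two-book corpus. It is not Google's pipeline, only an illustration of how one padded book can move the line.

```python
from collections import Counter


def relative_frequency(books, phrase):
    """Toy Ngram-style metric: per year, matching n-grams / all n-grams.

    `books` is a list of (year, text) pairs. Tokenization is a plain
    lowercase whitespace split; Google's real tokenization, corpus
    filtering, and smoothing are not modeled here.
    """
    target_tokens = phrase.lower().split()
    n = len(target_tokens)
    target = " ".join(target_tokens)
    hits = Counter()    # matching n-grams per year
    totals = Counter()  # all n-grams per year
    for year, text in books:
        tokens = text.lower().split()
        grams = [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        totals[year] += len(grams)
        hits[year] += grams.count(target)
    # Skip years whose books were too short to yield any n-gram of length n.
    return {year: hits[year] / totals[year] for year in sorted(totals) if totals[year]}


corpus = [
    (2019, "we study the data"),                    # stand-in for a human-written book
    (2019, "let us delve deeper and delve again"),  # stand-in for padded AI filler
]
print(relative_frequency(corpus[:1], "delve"))
print(relative_frequency(corpus, "delve"))
```

With only the first book, "delve" scores 0.0 for 2019. Add the filler book and the same year jumps to 2/11, about 0.18. A real corpus is vastly larger, so the effect per book is tiny, but the direction is the same.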

Indexing lousy content has been the core function of Google’s Web search system for decades. Search engine optimization generates information almost guaranteed to drag down how higher-value content is handled. If the flagship provides the navigation system to other ships in the fleet, won’t those vessels crash into bridges?

Remediating Google’s approach to indexing requires several basic steps. (I have in various ways shared these ideas with the estimable Google over the years. Guess what? No one cared or understood, and the Googlers who did understand did not want to increase overhead costs.) So what are these steps? I shall share them:

- Establish an editorial policy for content. Yep, this means that a system and method or systems and methods are needed to determine what content gets indexed.

- Explain the editorial policy and what a person or entity must do to get content processed and indexed by the Google, YouTube, Gemini, or whatever the mystics in Mountain View conjure into existence.

- Include metadata with each content object so one knows the index date, the content object creation date, and similar information.

- Operate in a consistent, professional manner over time. The “gee, we just killed that” is not part of the process. Sorry, mystics.
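The metadata step is easy to picture as a record attached to every indexed object. The Python dataclass below is a hypothetical illustration; the field names (`created`, `indexed`, `source_type`, `policy_version`) and the example.com URL are assumptions, not a schema Google publishes.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ContentRecord:
    """Hypothetical metadata attached to each indexed content object."""

    url: str
    created: Optional[date]              # creation date of the object, if known
    indexed: date                        # date the indexer processed the object
    source_type: str = "unknown"         # e.g. "book", "web page", "video"
    policy_version: str = "unspecified"  # editorial policy version that admitted it

    def indexing_lag_days(self) -> Optional[int]:
        """Days between creation and indexing, or None if creation is unknown."""
        if self.created is None:
            return None
        return (self.indexed - self.created).days


record = ContentRecord(
    url="https://example.com/some-book",
    created=date(2024, 1, 1),
    indexed=date(2024, 4, 12),
    source_type="book",
    policy_version="v1",
)
print(record.indexing_lag_days())
```

For the sample record, `indexing_lag_days()` returns 102. A record with `created=None` returns `None`, which is itself a signal: content of unknown provenance is what an editorial policy would want to flag before indexing.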

Let me offer several observations:

- Google, like any alleged monopoly, faces significant management challenges. Moving information within such an enterprise is difficult. For an organization with a Foosball culture, the task may be a bit outside the wheelhouse of most young people and individuals who are engineers, not presidents of fraternities or sororities.

- The organization is under stress. First, the pressure is financial because controlling the cost of the plumbing is a reasonably difficult undertaking. Second, there is technical pressure. Google itself made clear that it was in Red Alert mode and keeps adding flashing lights with each and every misstep the firm’s wizards make. These missteps range from contentious relationships with mere governments to individual staff members who grumble via internal emails, angry public utterances by Googlers, and behavior observed at conferences. Body language does speak sometimes.

- The approach to smart software is remarkable. Individuals in the UK pontificate. The Mountain View crowd reassures and smiles — a lot. (Personally I find those big, happy looks a bit tiresome, but that’s a dinobaby for you.)

Net net: The write up does not address the issue that Google happily exploits. The company lacks the mental rigor that setting and applying editorial policies requires. SEO is good enough to index. Therefore, fake books are certainly A-OK for now.

Stephen E Arnold, April 12, 2024

AI Will Take Jobs for Sure: Money Talks, Humans Walk

April 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.


Report Shows Managers Eager to Replace or Devalue Workers with AI Tools

Bosses have had it with the worker-favorable labor market that emerged from the pandemic. Fortunately, there is a new option that is happy to be exploited. We learn from TechSpot that a recent “Survey Reveals Almost Half of All Managers Aim to Replace Workers with AI, Could Use It to Lower Wages.” The report is by Beautiful.ai, which did its best to spin the results as a trend toward collaboration, not pink slips. Nevertheless, the numbers seem to back up worker concerns. Writer Rog Thubron summarizes:

“A report by Beautiful.ai, which makes AI-powered presentation software, surveyed over 3,000 managers about AI tools in the workplace, how they’re being implemented, and what impact they believe these technologies will have. The headline takeaway is that 41% of managers said they are hoping that they can replace employees with cheaper AI tools in 2024. … The rest of the survey’s results are just as depressing for worried workers: 48% of managers said their businesses would benefit financially if they could replace a large number of employees with AI tools; 40% said they believe multiple employees could be replaced by AI tools and the team would operate well without them; 45% said they view AI as an opportunity to lower salaries of employees because less human-powered work is needed; and 12% said they are using AI in hopes to downsize and save money on worker salaries. It’s no surprise that 62% of managers said that their employees fear that AI tools will eventually cost them their jobs. Furthermore, 66% of managers said their employees fear that AI tools will make them less valuable at work in 2024.”

Managers themselves are not immune to the threat: Half of them said they worry their pay will decrease, and 64% believe AI tools do their jobs better than experienced humans do. At least they are realistic. Beautiful.ai stresses another statistic: 60% of respondents who are already using AI tools see them as augmenting, not threatening, jobs. The firm also emphasizes that the number of managers who hope to replace employees with AI decreased “significantly” since last year’s survey. Progress?

Cynthia Murrell, April 12, 2024

Tennessee Sends a Hunk of Burnin’ Love to AI Deep Fakery

April 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.


Leave it to the state that houses Music City. NPR reports, “Tennessee Becomes the First State to Protect Musicians and Other Artists Against AI.” Courts have demonstrated that existing copyright laws are inadequate in the face of generative AI. This update to the state’s existing law is named the Ensuring Likeness Voice and Image Security Act, or ELVIS Act for short. Clever. Reporter Rebecca Rosman writes:

“Tennessee made history on Thursday, becoming the first U.S. state to sign off on legislation to protect musicians from unauthorized artificial intelligence impersonation. ‘Tennessee is the music capital of the world, & we’re leading the nation with historic protections for TN artists & songwriters against emerging AI technology,’ Gov. Bill Lee announced on social media. While the old law protected an artist’s name, photograph or likeness, the new legislation includes AI-specific protections. Once the law takes effect on July 1, people will be prohibited from using AI to mimic an artist’s voice without permission.”

Prominent artists and music industry groups have helped push the bill since it was introduced in January. Flanked by musicians and state representatives, Governor Bill Lee theatrically signed it into law on stage at the famous Robert’s Western World. But what now? In its write-up, “TN Gov. Lee Signs ELVIS Act Into Law in Honky-Tonk, Protects Musicians from AI Abuses,” The Tennessean briefly notes:

“The ELVIS Act adds artist’s voices to the state’s current Protection of Personal Rights law and can be criminally enforced by district attorneys as a Class A misdemeanor. Artists—and anyone else with exclusive licenses, like labels and distribution groups—can sue civilly for damages.”

While much of the music industry is located in and around Nashville, we imagine most AI mimicry does not take place within Tennessee. It is tricky to sue someone located elsewhere under state law. Perhaps this legislation’s primary value is as an example to lawmakers in other states and, ultimately, at the federal level. Will others be inspired to follow the Volunteer State’s example?

Cynthia Murrell, April 11, 2024

Has Google Aligned Its AI Messaging for the AI Circus?

April 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I followed the announcements at the Google shindig Cloud Next. My goodness, Google’s Code Red has produced quite a few new announcements. However, I want to ask a simple question: “Has Google organized its AI acts under one tent?” You can wallow in the Google AI news because Techmeme on April 10, 2024, has a carnival midway of information.

I want to focus on one facet: The enterprise transformation underway. Google wants to cope with Microsoft’s pushing AI into the enterprise, into the Manhattan chatbot, and into the government. One example of what Google envisions is what Google calls “genAI agents.” Explaining scripts with smarts requires a diagram. Here’s one, courtesy of Constellation Research:

Look at the diagram. The “customer”, which is the organization, is at the center of a Googley world: plumbing, models, and a “platform.” Surrounding this core with the customer at the center are scripts with smarts. These will do customer functions. This customer, of course, is the customer of the real customer, the organization. The genAI agents will do employee functions, creative functions, data functions, code functions, and security functions. The only missing function is the “paying Google function,” but that is baked into the genAI approach.

If one accepts the myriad announcements as the “as is” world of Google AI, the Cloud Next conference will have done its job. If you did not get the memo, you may see the Googley diagram as the work of enthusiastic marketers and the quantumly supreme lingo as more evidence that Code Red has been one output of the Code Red initiative.

I want to call attention, however, to the information in the allegedly accurate “Google DeepMind’s CEO Reportedly Thinks It’ll Be Tough to Catch Up with OpenAI’s Sora.” The write up states:

Google DeepMind CEO may think OpenAI’s text-to-video generator, Sora, has an edge. Demis Hassabis told a colleague it’d be hard for Google to draw level with Sora … The Information reported. His comments come as Big Tech firms compete in an AI race to build rival products.

Am I to believe the genAI system can deliver what enterprises, government organizations, and non governmental entities want: Ways to cut costs and operate in a smarter way?

If I tell myself, “Believe Google’s Cloud Next statements,” then Amazon, IBM, Microsoft, OpenAI, and others should fold their tents, put their animals back on the train, and head to another city in Kansas.

If I tell myself, “Google is not delivering and one cannot believe the company which sells ads and outputs weird images of ethnically interesting historical characters,” then the advertising company is a bit disjointed.

Several observations:

- The YouTube content processing issues are an indication that Google is making interesting decisions which may have significant legal consequences related to copyright.

- The senior managers who are directly at odds in their enthusiasm for Google’s AI capabilities need to get into the same book and preferably read from the same page.

- The assertions appear to be marketing which is less effective than Microsoft’s at this time.

Net net: The circus has some tired acts. The Sundar and Prabhakar Show seemed a bit tired. The acts were better than those featured on The Gong Show but not as scintillating as performances on The Masked Singer. But what about search? Oh, it’s great. And that circus train. Is it powered by steam?

Stephen E Arnold, April 9, 2024


Meta Warns Limiting US AI Sharing Diminishes Influence

April 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.


Limiting tech information is one way organizations and governments prevent bad actors from using it for harmful purposes. Whether restricting the information is good or bad is a topic for debate, but big tech leaders don’t want limitations. Yahoo Finance reports on what Meta thinks about the issue: “Meta Says Limits On Sharing AI Technology May Dim US Influence.”

Nick Clegg, Meta Platforms’ policy chief, told the US government that preventing tech companies from sharing AI technology publicly (aka open source) would damage America’s influence on AI development. Clegg’s statement alludes to “if you don’t let us play, we can’t make the rules.” In more politically correct and also true words, Clegg argued that a more “restrictive approach” would mean other nations’ tech could become the “global norm.” It sounds like the old imperial vs. metric measurements argument.

Open source code is fundamentally for advancing new technology. Many big tech companies want to guard their proprietary code so they can exploit it for profits. Others, like Clegg, appear to want global industry influence for higher revenue margins and to encourage new developments.

“Meta’s argument for keeping the technology open may resonate with the current presidential administration and Congress. For years, efforts to pass legislation that restricts technology companies’ business practices have all died in Congress, including bills meant to protect children on social media, to limit tech giants from unfairly boosting their own products, and to safeguard users’ data online.

“But other bills aimed at protecting American business interests have had more success, including the Chips and Science Act, passed in 2022 to support US chipmakers while addressing national security concerns around semiconductor manufacturing. Another bill targeting Chinese tech giant ByteDance Ltd. and its popular social network, TikTok, is awaiting a vote in the Senate after passing in the House earlier this month.”

Restricting technology sounds like the argument about controlling misinformation. False information does harm society, but it raises the question, “What is to be considered harmful?” Another similarity is the use of a gun or car. Cars and guns are essential and dangerous tools in modern society, but in the wrong hands they’re deadly weapons.

Whitney Grace, April 10, 2024

Perplexed at Perplexity? It Is Just the Need for Money. Relax.

April 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.


“Gen-AI Search Engine Perplexity Has a Plan to Sell Ads” makes it clear that the dynamic world of wildly over-hyped smart software is somewhat fluid. Pivoting from “No, never” to “Yes, absolutely” might catch some by surprise. But this dinobaby is ready for AI’s morphability. Artificial intelligence means something to the person using the term. There may be zero correlation between that meaning and the meaning of AI in the minds of other people. Absent the Vulcan mind meld, people have to adapt. Morphability is important.

The dinobaby analyst is totally confused. First, say one thing. Then, do the opposite. Thanks, MSFT Copilot. Close enough. How’s that AI reorganization going?

I am thinking about AI because Perplexity told Adweek that, despite obtaining $73 million in Series B funding, the company will start selling ads. This is no big deal for Google, which slips unmarked ads into its short video streams. But Perplexity was not supposed to sell ads. Yeah, well, that’s no longer an operative concept.

The write up says:

Perplexity also links sources in the response while suggesting related questions users might want to ask. These related questions, which account for 40% of Perplexity’s queries, are where the company will start introducing native ads, by letting brands influence these questions,

Sounds rock solid, but I think that the ads will have a bit of morphability; that is, when big bucks are at stake, those ads are going to go many places. With an alleged 10 million monthly active users, some advertisers will want those ads shoved down the throat of anything that looks like a human or bot with buying power.

Advertisers care about “brand safety.” But those selling ads care about selling ads. That’s why exciting ads turn up in quite interesting places.

I have a slight distrust for pivoters. But that’s just an old dinobaby, an easily confused dinobaby at that.

Stephen E Arnold, April 5, 2024

Nah, AI Is for Little People Too. Ho Ho Ho

April 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I like the idea that smart software is open. Anyone can download software and fire up that old laptop. Magic just happens. The reality is that smart software is going to involve some big outfits and big bucks when serious applications or use cases are deployed. How do I know this? Well, I read “Microsoft and OpenAI Reportedly Building $100 Billion Secret Supercomputer to Train Advanced AI.” The number $100 billion is not the $6 trillion bandied about by Sam AI-Man a few weeks ago. It does, however, make Amazon’s paltry $3 billion look like chump change. And where does that leave the AI start ups, the AI open source champions, and the plain vanilla big-smile venture folks? The answer is, “Ponying up some bucks to get that AI to take flight.”

Thanks, MSFT Copilot. Stick to your policies.

The write up states:

… the dynamic duo are working on a $100 billion — that’s "billion" with a "b," meaning a sum exceeding many countries’ gross domestic products — on a hush-hush supercomputer designed to train powerful new AI.

The write up asks a question some folks with AI sparkling in their eyes cannot answer; to wit:

Needless to say, that’s a mammoth investment. As such, it shines an even brighter spotlight on a looming question for the still-nascent AI industry: how’s the whole thing going to pay for itself?

But I know the answer: With other people’s money and possibly costs distributed across many customers.

Observations are warranted:

- The cost of smart software is likely to be an issue for everyone. I don’t think “free” is the same as forever.

- Mistral wants to build smaller language models, but Microsoft has “invested” in that outfit as well. If necessary, some creative end runs around an acquisition may be needed because MSFT may want to take Mistral off the AI chess board.

- What’s the cost of the electricity to operate what $100 billion can purchase? How about a nifty thorium reactor?

Net net: Okay, Google, what is your move now that MSFT has again captured the headlines?

Stephen E Arnold, April 5, 2024

Yeah, Stability at Stability AI: Will Flame Outs Light Up the Bubble?

April 4, 2024

This essay is the work of a dumb dinobaby. No smart software required.


I read “Inside the $1 Billion Love Affair between Stability AI’s Complicated Founder and Tech Investors Coatue and Lightspeed—And How It Turned Bitter within Months.” Interesting but, from my point of view, not surprising. High school science club members, particularly those who preserve some of their teeny bopper ethos into alleged adulthood, can be interesting people. And at work, exciting may be a suitable word. The write up’s main idea is that the wizard “left home in his pajamas.” Well, that’s a good summary of where Stability AI is.

The high school science club finds itself at odds with a mere school principal. The science club student knows that if the principal were capable, he would not be a mere principal. Thanks, MSFT Copilot. Were your senior managers in a high school science club?

The write up points out that Stability was the progenitor of Stable Diffusion, the art generator. I noticed the psycho-babbly terms stability and stable. Did you? Did the investors? Did the employees? Answer: Hey, there’s money to be made.

I noted this statement in the article:

The collaborative relationship between the investors and the promising startup gradually morphed into something more akin to that of a parent and an unruly child as the extent of internal turmoil and lack of clear direction at Stability became apparent, and even increased as Stability used its funding to expand its ranks.

Yep, high school management methods: “Don’t tell me what to do. I am smarter than you, Mr. Assistant Principal. You need me on the Quick Recall team, so go away,” echo in my mind in an Ezoic AI voice.

The write up continued the tale of mismanagement and adolescent angst, quoting the founder of Stability AI:

“Nobody tells you how hard it is to be a CEO and there are better CEOs than me to scale a business,” Mostaque said. “I am not sure anyone else would have been able to build and grow the research team to build the best and most widely used models out there and I’m very proud of the team there. I look forward to moving onto the next problem to handle and hopefully move the needle.”

I interpreted this as, “I did not know that calcium carbide in the lab sink drain could explode when it came in contact with water and the gas was ignited, Mr. Principal.”

And, finally, let me point out this statement:

Though Stability AI’s models can still generate images of space unicorns and Lego burgers, music, and videos, the company’s chances of long-term success are nothing like they once appeared. “It’s definitely not gonna make me rich,” the investor says.

Several observations:

- Stability may presage the future for other high-flying and low-performing AI outfits. Why? Because teen management skills are problematic in a so-so economic environment.

- AI is everywhere, and its value now derives from having something that solves a problem people will pay to have ameliorated. Shiny stuff fresh from the lab won’t make stakeholders happy.

- Discipline, particularly in high school science club members, may not be what a dinobaby like me would call rigorous. Sloppiness produces a mess and lost opportunities.

Net net: Ask about a potential employer’s high school science club memories.

Stephen E Arnold, April 4, 2024

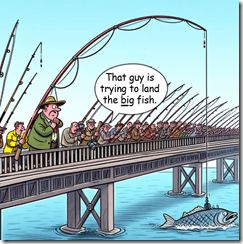

Angling to Land the Big Google Fish: A Humblebrag Quest to Be CEO?

April 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.


My goodness, the staff and alums of DeepMind have been in the news. Wherever there are big bucks or big buzz opportunities, one will find the DeepMind marketing machinery. Consider “Can Demis Hassabis Save Google?” The headline has two messages for me. The first is that a “real” journalist thinks that Google is in big trouble. Big trouble translates to stakeholder discontent. That discontent means it is time to roll in a new Top Dog. I love poohbahing. But is the Google really in trouble? Sure, it was aced by the Microsoft-OpenAI play not too long ago. And the Softies have moved forward with the Mistral deal and the mysterious Inflection deal. But the Google has money, market share, and might. Jake Paul can say he wants the Mike Tyson death stare. But that’s an opinion until Mr. Tyson hits Mr. Paul in the face.

The second message in the headline is that one of the DeepMind tribe can take over Google, defeat Microsoft, generate new revenues, avoid regulatory purgatory, and dodge the pain of its swinging door approach to online advertising revenue generation; that is, people pay to get in, people pay to get out, and soon will have to subscribe to watch those entering and exiting the company’s advertising machine.

Thanks, MSFT Copilot. Nice fish.

What are the points of the essay which caught my attention other than the headline for those clued in to the Silicon Valley approach to “real” news? Let me highlight a few points.

First, here’s a quote from the write up:

Late on chatbots, rife with naming confusing, and with an embarrassing image generation fiasco just in the rearview mirror, the path forward won’t be simple. But Hassabis has a chance to fix it. To those who known him, have worked alongside him, and still do — all of whom I’ve spoken with for this story — Hassabis just might be the perfect person for the job. “We’re very good at inventing new breakthroughs,” Hassabis tells me. “I think we’ll be the ones at the forefront of doing that again in the future.”

Is the past a predictor of future success? More than lab-to-Android is going to be required. But the evaluation of the “good at inventing new breakthroughs” is an assertion. Google has been in the me-too business for a long time. The company sees itself as a modern Bell Labs and PARC. I think that the company’s perception of itself, its culture, and the comments of its senior executives suggest that the derivative nature of Google is neither remembered nor considered. It’s just “we’re very good.” Sure, “we” are.

Second, I noted this statement:

Ironically, a breakthrough within Google — called the transformer model — led to the real leap. OpenAI used transformers to build its GPT models, which eventually powered ChatGPT. Its generative ‘large language’ models employed a form of training called “self-supervised learning,” focused on predicting patterns, and not understanding their environments, as AlphaGo did. OpenAI’s generative models were clueless about the physical world they inhabited, making them a dubious path toward human level intelligence, but would still become extremely powerful. Within DeepMind, generative models weren’t taken seriously enough, according to those inside, perhaps because they didn’t align with Hassabis’s AGI priority, and weren’t close to reinforcement learning. Whatever the rationale, DeepMind fell behind in a key area.

Google figured something out and then did nothing with the “insight.” There were research papers and chatter. But OpenAI (powered in part by Sam AI-Man) took the Google invention and used it to carpet bomb, mine, and set on fire Google’s presumed lead in anything related to search, retrieval, and smart software. The aftermath of the Microsoft OpenAI PR coup is a continuing story of rehabilitation. From what I have seen, Google needs more time to get its aging body parts working again. The ad machine produces money, but the company reels from management issue to management issue with alarming frequency. Biased models complement spats with employees. Silicon Valley chutzpah causes neurological spasms among US and EU regulators. Something is broken, and I am not sure a person from inside the company has the perspective, knowledge, and management skills to fix an increasingly peculiar outfit. (Yes, I am thinking of ethnically-incorrect German soldiers loyal to a certain entity on Google’s list of questionable words and phrases.)

And, lastly, let’s look at this statement in the essay:

Many of those who know Hassabis pine for him to become the next CEO, saying so in their conversations with me. But they may have to hold their breath. “I haven’t heard that myself,” Hassabis says after I bring up the CEO talk. He instantly points to how busy he is with research, how much invention is just ahead, and how much he wants to be part of it. Perhaps, given the stakes, that’s right where Google needs him. “I can do management,” he says, ”but it’s not my passion. Put it that way. I always try to optimize for the research and the science.”

I wonder why the author of the essay does not query Jeff Dean, the former head of a big AI unit in Mother Google’s inner sanctum, about Mr. Hassabis. How about querying Mr. Hassabis’ co-founder of DeepMind about Mr. Hassabis’ temperament and decision-making method? What about chasing down former employees of DeepMind and getting those wizards’ perspective on what DeepMind can and cannot accomplish?

Net net: Somewhere in the little-understood universe of big technology, there is an invisible hand pointing at DeepMind and making sure the company appears in scientific publications, the trade press, peer reviewed journals, and LinkedIn funded content. Determining what’s self-delusion, fact, and PR wordsmithing is quite difficult.

Google may need some help. To be frank, I am not sure anyone in the Google starting lineup can do the job. I am also not certain that a blue chip consulting firm can do much either. Google, after a quarter century of zero effective regulation, has become larger than most government agencies. Its institutional mythos creates dozens of delusional Ulysses who cannot separate fantasies of the lotus eaters from the gritty reality of the company as one of the contributors to the problems facing youth, smaller businesses, governments, and cultural norms.

Google is Googley. It will resist change.

Stephen E Arnold, April 3, 2024

India: AI, We Go This Way, Then We Go That Way

April 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.


In early March 2024, India said it would require all AI-related projects still in development to receive governmental approval before they were released to the public. India’s Ministry of Electronics and Information Technology stated it wanted to notify the public of AI technology’s fallacies and its unreliability. The intent was to label all AI technology with a “consent popup” that informed users of potential errors and defects. The ministry also wanted to mark potentially harmful AI content, such as deepfakes, with a label or unique identifier.

The Register explains that it didn’t take long for the South Asian country to rescind the plan: “India Quickly Unwinds Requirement For Government Approval Of AIs.” The ministry issued an update that removed the requirement for government approval, but it did add more obligations to label potentially harmful content:

“Among the new requirements for Indian AI operations are labelling deepfakes, preventing bias in models, and informing users of models’ limitations. AI shops are also to avoid production and sharing of illegal content, and must inform users of consequences that could flow from using AI to create illegal material.”

Minister of State for Entrepreneurship, Skill Development, Electronics, and Technology Rajeev Chandrasekhar provided context for the government’s initial plan for approval. He explained it was intended only for big technology companies. Smaller companies and startups wouldn’t have needed the approval. Chandrasekhar is recognized for his support of boosting India’s burgeoning technology industry.

Whitney Grace, April 3, 2024