Cyber Security: A Modest Reminder about Reality

April 11, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

If I participated in every webinar to which I am invited, I would have no time for eating, sleeping, and showing up at the gym to pretend I am working out like a scholarship-chasing football player. I like the food and snooze stuff. The gym? Yeah, it is better than a visit to my “real” doctor. (Mine has comic book art on the walls of the office. No diplomas. Did I tell you I live in rural Kentucky, where comic books are considered literature?)

I read what to me was a grim article titled “Classified US Documents on Ukraine War Leaked: Report.” The publisher was Al Jazeera, and I suppose the editor could have slapped a more tantalizing title and subtitle on the article. (The information, according to Al Jazeera, first appeared in the New York Times. Okay, I won’t comment on this factoid.)

Here’s the paragraph which caught my attention:

There was no explanation as to how the plans were obtained.

Two points come to my mind:

- Smart software and analytic tools appear to be unable to pinpoint the who, when, and where of the documents’ origin. Some vendors assert that their real-time systems can deliver this type of information. Maybe? But maybe not?

- The Fancy Dan cyber tools, whether infused with Bayesian goodness or just recycled machine learning, are not helping out with the questions about who, what, and where either.

If the information emerges in the near future, I will be pleased. My hunch is that cyber is a magic word for marketers and individuals looking for a high-pay, red-hot career.

The reality is that either disinformation or insiders make these cyber marketing assertions ring like a bell made of depleted uranium.

Stephen E Arnold, April 11, 2023

Google and Consistency: Hobgoblin? Nah, Basic High School Management Method

April 11, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read with some amusement the story “Google Backtracks on a Business-Disrupting Limitation to Its Drive Storage Service.” The write up explains:

Google recently decided to impose a new “surprise” limitation to Drive, making business customers unable to create an unlimited number of files on the service.

Was there a warning? An option for customers? Nah. The management methods of the Google do not consider these facets of a decision. Is there a management procedure? That’s a question?

The write up reports:

as Mountain View [Google management] finally confirmed that the file cap actually was a “safeguard” designed to prevent possible misuse of Drive in a way that could “impact the stability and safety of the system.”

Safeguards are good. Customer focused and feel goody.

The write up then states without any critical comment:

the weekend provided enough feedback from dissatisfied users that Google had to reverse its decision.

Thus, a decision was made, users complained, and someone at Google actually looked at the mess and made a decision to reverse the file limit.

How long did this take? About 48 hours.

Does this signal that Google is customer centric? Nope.

Does this decision illustrate a deliberate management method? Nope.

Is this the Code Red operating environment in action? Yep.

I have to dash. I hear the high school class change bell ringing. No high school science club meeting tomorrow.

Stephen E Arnold, April 11, 2023

Sequoia on AI: Is The Essay an Example of What Informed Analysis Will Be in the Future?

April 10, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read an essay produced by the famed investment outfit Sequoia. Its title: “Generative AI: A Creative New World.” The write up contains buzzwords, charts, a modern version of a list, and this fascinating statement:

This piece was co-written with GPT-3. GPT-3 did not spit out the entire article, but it was responsible for combating writer’s block, generating entire sentences and paragraphs of text, and brainstorming different use cases for generative AI. Writing this piece with GPT-3 was a nice taste of the human-computer co-creation interactions that may form the new normal. We also generated illustrations for this post with Midjourney, which was SO MUCH FUN!

I loved the capital letters and the exclamation mark. Does smart software do that in its outputs?

I noted one other passage which caught my attention; to wit:

The best Generative AI companies can generate a sustainable competitive advantage by executing relentlessly on the flywheel between user engagement/data and model performance.

I understand “relentlessly.” To be honest, I don’t know about a “sustainable competitive advantage” or user engagement/data model performance. I do understand the Amazon flywheel, but my understanding is that it is slowing and maybe wobbling a bit.

My take on the passage in purple as in purple prose is that “best” AI depends not on accuracy, lack of bias, or transparency. Success comes from users and how well the system performs. “Perform” is ambiguous. My hunch is that the Sequoia smart software (only version 3) and the super smart Sequoia humanoids were struggling to express why a venture firm is having “fun” with a bit of B-school teaming — money.

The word “money” does not appear in the write up. The phrase “economic value” appears twice in the introduction to the essay. No reference to “payoff.” No reference to “exit strategy.” No use of the word “financial.”

Interesting. Exactly how does a money-centric firm write about smart software without focusing on the financial upside in a quite interesting economic environment?

I know why smart software misses the boat. It’s good with deterministic answers for which enough information is available to train the model to produce what seems like coherent answers. Maybe the smart software used by Sequoia was not clued in to the reports about Sequoia’s explanations of its winners and losers? Maybe the version of the smart software is not up to the tough subject on which the Sequoia MBAs sought guidance?

On the other hand, maybe Sequoia did not think through what should be included in a write up by a financial firm interested in generating big payoffs for itself and its partners.

Either way. The essay seems like a class project which is “good enough.” The creative new world lacks the force that through the green fuse drives the cash.

Stephen E Arnold, April 10, 2023

The Google: A Big, Fat, and Code Red Addled Target for Squabbling Legal Eagles

April 10, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

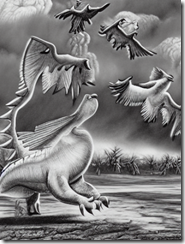

Does the idea of a confused Google waving its tiny arms at pesky legal eagles seem possible? The Google is not just an online advertising leader; it is a magnet for attorneys, solicitors, and the aforementioned legal eagles.

The dinosaur – legal eagle image is the product of the really smart and intuitive ScribbledDiffusion.com system.

“Rival Lawsuits Vie to Represent UK Publishers in Class-action Claim against Google” states:

The claimants in both those cases argue that Google has engaged in anti-competitive behavior through its control of each part of the market for display advertising. The trillion-dollar company provides technology to both advertisers and publishers (through products such as Google Adsense and Doubleclick for Publishers) and runs AdX, an ad exchange that mediates advertising auctions.

Imagine two different lawsuits with flocks of squabbling lawyers. Poor Google. The company is dealing with the downstream consequences of Microsoft’s brilliant marketing play. The company has called into question the techno-wizardry of the online advertising outfit. Plus, it has rippled through its management processes. The already wonky approach to PR and HR are juicy targets for critics and some aggrieved employees.

How will Google respond? My concern is that Google’s senior management is becoming less capable than it was in the pre-Microsoft-at-Davos era. The Google is not going away, but its recent behaviors, like changing file limits, dumping employees, and apparent confusion about what to do now that Messrs. Brin and Page have returned to Starfleet command, are not reassuring.

A real Bard said:

So quick bright things come to confusion. (Midsummer Night’s Dream, which should not be read aloud in a sophomore high school English class. Right, Bottom?)

I am not sure what Google’s Bard would say. I am reluctant to use the system since my son asked it, “Which city is better: Memphis, Tennessee, or Barcelona, Spain?” Bard pointed out that Memphis was a soccer player who liked Barcelona.

What is the risk of this UK spat between lawyers getting resolved? Maybe 90 percent. What’s the likelihood Google will be hit with another fine? Maybe 95 percent. Being under siege and equipped with arthritic management hands at the controls of an ageing starship are liabilities in my opinion.

Stephen E Arnold, April 10, 2023

AI Is Not the Only System That Hallucinates

April 7, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I personally love it when software goes off the deep end. From the early days of “Fatal Error” to the more interesting outputs of a black box AI system, the digital comedy road show delights me.

“The Call to Halt ‘Dangerous’ AI Research Ignores a Simple Truth” reminds me that it is not just software which is subject to synapse wonkiness. Consider this statement from the Wired Magazine story:

… there is no magic button that anyone can press that would halt “dangerous” AI research while allowing only the “safe” kind.

Yep, no magic button. No kidding. We have decades of experience with US big technology companies’ behavior to make clear exactly the trajectory of new methods.

I love this statement from Wired Magazine no less:

Instead of halting research, we need to improve transparency and accountability while developing guidelines around the deployment of AI systems. Policy, research, and user-led initiatives along these lines have existed for decades in different sectors, and we already have concrete proposals to work with to address the present risks of AI.

Wired was one of the cheerleaders when it fired up its unreadable pink text with orange headlines in 1993 as I recall. The cheerleading was loud and repetitive.

I would suggest that “simple truth” is in short supply. In my experience, big technology savvy companies will do whatever they can do to corner a market and generate as much money as possible. Lock in, monopolistic behavior, collusion, and other useful tools are available.

Nice try, Wired. Transparency is good to consider, but big outfits are not in the let-the-sun-shine-in game.

Stephen E Arnold, April 7, 2023

Who Does AI? Academia? Nope. Government Research Centers? Nope. Who Then?

April 7, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Smart software is the domain of commercial enterprises. How would these questions be answered in China? Differently I would suggest.

“AI Is Entering an Era of Corporate Control” cites a report from Stanford University (an institution whose president did some alleged Fancy Dancing in research data) to substantiate the observation. I noted this passage:

The AI Index states that, for many years, academia led the way in developing state-of-the-art AI systems, but industry has now firmly taken over. “In 2022, there were 32 significant industry-produced machine learning models compared to just three produced by academia…

Interesting. Innovation, however, seems to have drained from the Ivory Towers (now in the student loan business) and Federal research labs (now in the business of marketing their achievements to obtain more US government funding). These two slices of smart people are not performing when it comes to smart software.

The source article does not dwell on these innovation laggards. Instead I learn that AI investment is decreasing and that running AI models kills whales and snail darters.

For me, the main issue is, “Why is there a paucity of smart software in US universities and national laboratories? Heck, let’s toss in DARPA too.” I think it is easy to point to the commercial moves of OpenAI, the marketing of Microsoft, and the foibles of the Sundar and Prabhakar Comedy Show. In fact, the role of big companies is obvious. Was a research report needed? A tweet would have handled the topic for me.

I wonder what structural friction is inhibiting universities and outfits like LANL, ORNL, and Sandia, among others.

Stephen E Arnold, April 7, 2023

Digital Addiction Game Plan: Get Those Kiddies When Young

April 6, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I enjoy research which provides roadmaps for confused digital Hummer drivers. The Hummer weighs more than four tons and costs about the same as one GMLRS rocket. Digital weapons are more effective and less expensive. One does give up a bit of shock and awe, however. Life is full of trade offs.

The information in “Teens on Screens: Life Online for Children and Young Adults Revealed” is interesting. The analytics wizards have figured out how to hook a young person on zippy new media. I noted this insight:

Children are gravitating to ‘dramatic’ online videos which appear designed to maximize stimulation but require minimal effort and focus…

How does one craft a magnetic video?

Gossip, conflict, controversy, extreme challenges and high stakes – often involving large sums of money – are recurring themes. ‘Commentary’ and ‘reaction’ video formats, particularly those stirring up rivalry between influencers while encouraging viewers to pick sides, were also appealing to participants. These videos, popularized by the likes of Mr Beast, Infinite and JackSucksAtStuff, are often short-form, with a distinct, stimulating, editing style, designed to create maximum dramatic effect. This involves heavy use of choppy, ‘jump-cut’ edits, rapidly changing camera angles, special effects, animations and fast-paced speech.

One interesting item in the article’s summary of the research concerned “split screening.” The term means that one watches more than one short-form video at the same time. (As a dinobaby, I have to work hard to get one thing done. Two things simultaneously? Ho ho ho.)

What can an enterprising person interested in weaponizing information do? Here are some ideas:

- Undermine certain values

- Present shaped information

- Take time from less exciting pursuits like homework and reading books

- Displace self-esteem building experiences.

Who cares? Advertisers, those hostile to the interests of the US, groomers, and probably several other cohorts.

I have to stop now. I need to watch multiple TikToks.

Stephen E Arnold, April 6, 2023

Google: Traffic in Kings Cross? Not So Hot

April 6, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I saw a picture of a sign held by a Googler (maybe a Xoogler or a Xoogler to be?) with the message:

Google layoffs. Hostile. Unnecessary. Brutal. Unfair.

Another Google PR/HR moment upon which the management team can surf… or drown? (One must consider different outcomes, mustn’t one?)

I did a small bit of online sleuthing and discovered what may be a “real” news story about the traffic hassles in King’s Cross this morning (April 4, 2023). “Unite Google Workers Strike Outside London HQ over Alleged Appalling Treatment” reports:

Google workers have been reduced to tears by fears of being made redundant, a union representative told a London rally… Others clutched placards with messages such as “Being evil is not a strategy” and “R.I.P Google culture 1998 – 2023”.

Google’s wizardly management team allegedly said:

Google said it has been “constructively engaging and listening to employees”.

I want to highlight a quite spectacular statement, which — for all I know — could have been generated by Google’s smart software which has allegedly been infused with some ChatGPT goodness:

It [the union for aspiring Xooglers] also alleges that employees with disabilities are being told to get a doctor’s note if they want a colleague to attend their meetings and “even then, union representation is still prohibited”.

Let me put this in context. Google is dealing with what I call the Stapler Affair. Plus, it continues to struggle against the stream of marketing goodness flowing from Redmond, seat of the new online advertising pretender to Google’s throne. The company continues to flail at assorted legal eagles bringing good tidings of great joy to lawyers billing for the cornucopia of lawsuits aimed at the Google.

My goodness. Now Google has created a bit of ill will for London sidewalk, bus, and roadway users. Does this sound like a desirable outcome? Maybe for Google senior management, not those trying to be happy at King’s Cross.

Stephen E Arnold, April 6, 2023

Google, Does Quantum Supremacy Imply That Former Staff Grouse in Public?

April 5, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I am not sure if this story is spot on. I am writing about “Report: A Google AI Researcher Resigned after Learning Google’s Bard Uses Data from ChatGPT.” I am skeptical because today is All Fools’ Day. Being careful is sometimes a useful policy. An exception might be when a certain online advertising company is losing bigly to the marketing tactics of [a] Microsoft, the AI in Word and Azure Security outfit, [b] OpenAI and its little language model that could, and [c] Midjourney which just rolled out its own camera with a chip called Bionzicle. (Is this perhaps pronounced “bio-cycle” like washing machine cycle or “bion zickle” like bio pickle? I go with the pickle sound; it seems appropriate.)

The cited article reports as actual factual real news:

ChatGPT AI is often accused of leveraging “stolen” data from websites and artists to build its AI models, but this is the first time another AI firm has been accused of stealing from ChatGPT. ChatGPT is powering Bing Chat search features, owing to an exclusive contract between Microsoft and OpenAI. It’s something of a major coup, given that Bing leap-frogged long-time search powerhouse Google in adding AI to its setup first, leading to a dip in Google’s share price.

This is im port’ANT as the word is pronounced on a certain podcast.

More interesting to me is that recycled Silicon Valley type real news verifies this remarkable assertion as the knowledge output of a PROM’ inANT researcher, allegedly named Jacob Devlin. Mr. Devlin has found his future at – wait for it – OpenAI. Wasn’t OpenAI the company that wanted to do good and save the planet and then discovered Microsoft backing, thirsty trapped AI investors, and the American way of wealth?

Net net: I wish I could say, April’s fool, but I can’t. I have an unsubstantiated hunch that Google’s governance relies on the whims of high school science club members arguing about what pizza topping to order after winning the local math competition. Did the team cheat? My goodness no. The team has an ethical compass modeled on the triangulations of William McCloundy or I.O.U. O’Brian, the fellow who sold the Brooklyn Bridge in the early 20th century.

Stephen E Arnold, April 5, 2023

Gotcha, Googzilla: Bing Channels GoTo, Overture, and Yahoo with Smart Software

April 5, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “That Was Fast! Microsoft Slips Ads into AI-Powered Bing Chat.” Not exactly a surprise? No, nope. Microsoft now understands that offering those who want to put a message in front of eyeballs generates money. Google is the poster child of Madison Avenue on steroids.

The write up says:

We are also exploring additional capabilities for publishers including our more than 7,500 Microsoft Start partner brands. We recently met with some of our partners to begin exploring ideas and to get feedback on how we can continue to distribute content in a way that is meaningful in traffic and revenue for our partners.

Just 7,500? Why not more? Do you think Microsoft will follow the Google playbook, just enhanced with the catnip of smart software? If you respond, “yes,” you are on the monetization supersonic jet. Buckle up.

Here are my predictions based on what little I know about Google’s “legacy”:

- Money talks; therefore, the ad filtering system will be compromised by those with access to getting ads into the “system”. (Do you believe that software and human filtering systems are perfect? I have a bridge to sell you.)

- The content will be warped by ads. This is the gravity principle: Get too close to big money and the good intentions get sucked into the advertisers’ universe. Maybe it is roses and Pepsi Cola in the black hole, but I know it will not contain good intentions with mustard.

- The notion of a balanced output, objectivity, or content selected by a smart algorithm will be fiddled. How do I know? I would point to the importance of payoffs in 1950s rock and roll radio and the advertising business. How about a week on a yacht? Okay, I will send details. No strings, of course.

- And guard rails? Yep, keep content that makes advertisers, particularly big advertisers, happy. Block or suppress content that makes advertisers, particularly big advertisers, unhappy.

Do I have other predictions? Oh, yes. Why not formulate your own ideas after reading “BingBang: AAD Misconfiguration Led to Bing.com Results Manipulation and Account Takeover.” Bingo!

Net net: Microsoft has an opportunity to become the new Google. What could go wrong?

Stephen E Arnold, April 5, 2023