Silicon Valley Streetbeefs

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

If you are not familiar with Streetbeefs, I suggest you watch the “Top 5 Boxing Knock Outs” on YouTube. Don’t forget to explore the articles about Streetbeefs in the About section of the producer’s Community page. Useful information appears on the main Web site too.

So what does Streetbeefs do? The organization’s tag line explains:

Guns down. Gloves up.

If that is not sufficiently clear, the organization offers:

This org is for fighters and friends of STREETBEEFS! Everyone who fights for Streetbeefs does so to solve a dispute, or for pure sport… NOONE IS PAID TO FIGHT HERE… We also have many dedicated volunteers who help with reffing, security, etc..but if you’re joining the group looking for a paid position please know we’re not hiring. This is a brotherhood/sisterhood of like minded people who love fighting, fitness, and who hate gun violence. Our goal is to build a large community of people dedicated to stopping street violence and who can depend on each other like family. This organization is filled with tough people…but its also filled with the most giving people around..Streetbeefs members support each other to the fullest.

I read “Elon Musk Is Still Insisting He’s Down to Fight Mark Zuckerberg: ‘Any Place, Any Time, Any Rules.’” This article makes clear that Mark (Senator, thank you for that question) Zuckerberg and Elon (over promise and under deliver) Musk want to fight one another. The article says (I cannot believe I read this, by the way):

Elon Musk is impressed with Meta’s latest AI model, but he’s still raring for a bout in the ring with Mark Zuckerberg. “I’ll fight Zuckerberg any place, any time, any rules,” Musk told reporters in Washington on Wednesday [July 24, 2024].

My thought is that Mr. Musk should ring up Sunshine Trask, who is the group manager for fighter signups at Streetbeefs. Trask can coordinate the fight plus the undercard with the two testosterone-charged technology giants. With most fights held in makeshift rings outside, Golden Gate Park might be a suitable venue for the event. Another possibility is to rope off the street near Philz Coffee in Sunnyvale and hold the “beef” in Plaza del Sol.

Both Mr. Musk and Mr. Zuckerberg can meet with Scarface, the principal figure at Streetbeefs, and get a sense of how the show will go down, what the rules are, and why videographers are in the ring with the fighters.

If these titans of technology want to fight, why not bring their idea of manly valor to a group with considerable experience handling individuals of diverse character?

Perhaps the “winner” of this truly bizarre dust-up between the most macho of the Sillycon Valley superstars would ask Scarface to go a few rounds to test the victor’s skills and valor. My hunch is that talking with Scarface and his colleagues might inform Messrs. Musk and Zuckerberg of the brilliance, maturity, and excitement of fighting for real.

On the other hand, Scarface might demonstrate his street and business acumen by saying, “You guys are adults?”

Stephen E Arnold, July 26, 2024

If Math Is Running Out of Problems, Will AI Help Out the Humans?

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “Math Is Running Out of Problems.” The write up appeared in Medium, and when I clicked I was not asked to join, pay, or turn a cartwheel. (Does Medium think 80-year-old dinobabies can turn cartwheels? The answer is, “Hey, doofus, if you want to read Medium articles, pay up.”)

Thanks, MSFT Copilot. Good enough, just like smart security software.

I worked through the free essay, which is a reprise of an earlier essay on the topic of running out of math problems. The reason few cared about the topic is that most people cannot make change. Thinking about a world without math problems is an intellectual task which takes time from scamming the elderly, doom scrolling, generating synthetic information, or watching reruns of I Love Lucy.

The main point of the essay in my opinion is:

…take a look at any undergraduate text in mathematics. How many of them will mention recent research in mathematics from the last couple decades? I’ve never seen it.

“New” and “math problems” form an oxymoron.

I think the author is correct. As specialization becomes more desirable to a person, leaving the rest of the world behind is a consequence. But the issue arises in other disciplines. Consider artificial intelligence. That jazzy phrase embraces a number of mathematical premises, but it boils down to a few chestnuts, roasted, seasoned, and mixed with some interesting ethanols. (How about that wild and crazy Reverend Thomas Bayes?)

My view is that as the apparent pace of information flow erodes social and cultural structures, the quest for “new” pushes a frantic individual to come up with a novelty. The problem with a novelty is that it takes one’s eye off the ball and ultimately the game itself. The present state of affairs in math was evident decades ago.

What’s interesting is that this issue is not new. In the early 1980s, Dialog Information Services hosted a mathematics database called MATHFILE. The person representing MATHFILE (now called MathSciNet) told me in 1981:

We are having a difficult time finding people to review increasingly narrow and highly specialized papers about an almost unknown area of mathematics.

Flash forward to 2024. The problem is now getting attention, and no one seems to care?

Several observations:

- Like smart software, maybe humans are running out of high-value information? Chasing ever smaller mathematical “insights” may be a reminder that humans and their vaunted creativity have limits, hard limits.

- If the premise of the paper is correct, the issue should be evident in other fields as well. I would suggest the creation of a “me too” index. The idea is that for a period of history, one can calculate how many knock-off ideas grab the coattails of an innovation. My hunch is that the state of most modern technical insight is high on the me too index. No, I am not counting “original” TikTok-type information objects.

- The fragmentation which seems apparent to me in mathematics and that interesting field of mathematical physics mirrors the fragmentation of certain cultural precepts; for example, ethical behavior. Why is everything “so bad”? The answer is, “Specialization.”

Net net: The pursuit of the ever more specialized insight hastens the erosion of larger ideas and cultural knowledge. We have come a long way in four decades. The direction is clear. It is not just a math problem. It is a now problem and it is pervasive. I want a hat that says, “I’m glad I’m old.”

Stephen E Arnold, July 26, 2024

How Can Creatives Survive AI Disruptions?

July 26, 2024

What does the AI takeover mean for creative workers? No one really knows. But there is no shortage of opinions on how to prepare. Art and design magazine Creative Boom polled its readers and shares some of their insights in, “Where the Creative Industry Is Heading, and How to Survive the Next 15 Years.” Some point out creatives have had to adapt to disruptive technologies before, like digital photography, Photoshop, and Adobe Illustrator. But is generative AI in another ballpark? Perhaps.

Several creatives emphasize, well, creativity. They insist human creativity is something AI can never master (though I would not be so sure). They advise fellow artists and designers to focus on what makes them human rather than on mastering the tech. Writer Tom May states:

“It’s now less about your technical skills and more about your ideas in terms of concepts, branding and strategy. In other words, as technology automates more aspects of our creative work, our human-centric skills will become increasingly valuable. The ability to understand and connect with people on an emotional level, think critically, solve complex problems, and generate truly original ideas are skills that AI cannot easily replicate. ‘As a designer, I believe we need to think about the future regarding how we can be more human and design in a more human way,’ says designer Hugo Carvalho. ‘It’s like how Art Nouveau was a reaction to the loss of expression that came after the first industrial revolution. Similarly, this new era will be a reaction to AI and the loss of focus in human-to-human design, connections, and relationships.’”

How optimistic. The write-up advises perennial tactics like continuous learning, developing a strong personal style, networking, and taking risks. May also reminds readers not to sideline ethical considerations as they navigate AI upheaval. Accessibility, sustainability, cultural sensitivity, and social impact should remain priorities. Interesting. Is empathy the real factor that sets humans apart from algorithms?

Cynthia Murrell, July 26, 2024

Google and Third-Party Cookies: The Writing Is on the Financial Projection Worksheet

July 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have been amused by some of the write ups about Google’s third-party cookie matter. Google is the king of the jungle when it comes to saying one thing and doing another. Let’s put some wood behind social media. Let’s make that Dodgeball thing take off. Let’s make that AI-enhanced search deliver more user joy. Now we are in third-party cookie revisionism. Even Famous Amos has gone back to its “original” recipe after the new and improved Famous Amos chips tanked big time. Google does not want to wait to watch ad and data sale-related revenue fall. The Google is changing its formulation before the numbers arrive.

“Google No Longer Plans to Eliminate Third-Party Cookies in Chrome” explains:

Google announced its cookie updates in a blog post shared today, where the company said that it instead plans to focus on user choice.

What percentage of Google users alter default choices? Don’t bother to guess. The number is very, very few. The one-click away baloney is a fabrication, an obfuscation. I have technical support which makes our systems as secure as possible given the resources an 80-year-old dinobaby has. But check out those in the rest home / warehouse for the soon to die. I would wager one US dollar that absolutely zero individuals will opt out of third-party cookies. Most of those in Happy Trail Ending Elder Care Facility cannot eat cookies. Opting out? Give me a break.

The MacRumors write up continues:

Back in 2020, Google claimed that it would phase out support for third-party cookies in Chrome by 2022, a timeline that was pushed back multiple times due to complaints from advertisers and regulatory issues. Google has been working on a Privacy Sandbox to find ways to improve privacy while still delivering info to advertisers, but third-party cookies will now be sticking around so as not to impact publishers and advertisers.

The Apple-centric online publication notes that UK regulators will check out Google’s posture. Believe me, Googzilla sits up straight when advertising revenue is projected to tank. Losing click data which can be relicensed, repurposed, and re-whatever is not something the competitive beastie enjoys.

MacRumors is not anti-Google. Hey, Google pays Apple big bucks to be “there” despite Safari. Here’s the online publication’s moment of hope:

Google does not plan to stop working on its Privacy Sandbox APIs, and the company says they will improve over time so that developers will have a privacy preserving alternative to cookies. Additional privacy controls, such as IP Protection, will be added to Chrome’s Incognito mode.

Correct. Google does not plan. Google outputs based on current situational awareness. That’s why Google 2020 has zero impact on Google 2024.

Three observations which will pain some folks:

- Google AI search and other services are under a microscope. I find the decision one which may increase scrutiny, not decrease regulators’ interest in the Google. Google made a decision which generates revenue but may increase legal expenses.

- No matter how much money swizzles at each quarter’s end, Google’s business model may be more brittle than the revenue and profit figures suggest. Google is pumping billions into self-driving cars and doing an about face on third-party cookies? The new Google puzzles me because search seems to be in the background.

- Google’s management is delivering revenues and profit, so the wizardly leaders are not going anywhere like some of Google’s AI initiatives.

Net net: After 25 years, the Google still baffles me. Time to head for Philz Coffee.

Stephen E Arnold, July 25, 2024

The Simple Fix: Door Dash Some Diversity in AI

July 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “There’s a Simple Answer to the AI Bias Conundrum: More Diversity.” I have read some amazing online write ups in the last few days, but this essay really stopped me in my tracks. Let’s begin with an anecdote from 1973. A half century ago I worked at a nuclear consulting firm which became part of Halliburton Industries. You know Halliburton. Dick Cheney. Ringing a bell?

One of my first tasks for the senior vice president who hired me was to assist the firm in identifying minorities in American universities about to graduate with a PhD in nuclear engineering. I am a Type A, and I set about making telephone calls, doing site visits, and working with our special librarian Dominique Doré, who had had a similar job when she worked in France for a nuclear outfit in that country. I chugged along and identified two possibles. Both were at the US Naval Academy, at different stages of their academic careers. Neither would be available for a commercial job until completing military service. So I failed, right?

Not even the clever wolf can put on a simple costume and become something he is not. Is this a trope for a diversity issue? Thanks, OpenAI. Good enough because I got tired of being told, “Inappropriate prompt.”

Nope. The project was designed to train me to identify high-value individuals in PhD programs. I learned three things:

- Nuclear engineers with PhDs in the early 1970s comprised a small percentage of those with the prerequisites to become nuclear engineers. (I won’t go into details, but you can think in terms of mathematics, physics, and something like biology because radiation can ruin one’s life in a jiffy.)

- The US Navy, the University of California-Berkeley, and a handful of other universities with PhD programs in nuclear engineering were scouting promising high school students in order to convince them to enter the universities’ or the US government’s programs.

- The demand for nuclear engineers (forget female, minority, or non-US citizen engineers) was high. The competition was intense. My now-deceased friend Dr. Jim Terwilliger from Virginia Tech told me that he received job offers every week, including one from an outfit in the then Soviet Union. The demand was worldwide, yet the pool of qualified individuals graduating with a PhD seemed to be limited to six to 10 in the US, 20 in France, and a dozen in what was then called “the Far East.”

Everyone wanted the PhDs in nuclear engineering. Diversity simply did not exist. The top dog at Halliburton 50 years ago told me, “We need more nuclear engineers. It is just not simple.”

Now I read “There’s a Simple Answer to the AI Bias Conundrum: More Diversity.” Okay, easy to say. Why not try to deliver? Believe me, if the US Navy, Halliburton, and a dumb pony like myself could not figure out how to equip a person with the skills and capabilities required to fool around with nuclear material, how will a group of technology wizards in Silicon Valley with oodles of cash just do what’s simple? The answer is, “It will take structural change, time, and an educational system that is similar to that which was provided a half century ago.”

The reality is that people without training, motivation, education, and incentives will not produce the content outputs at a scale needed to recalibrate those wondrous smart software knowledge spaces and probabilistic-centric smart software systems.

Here’s a passage from the write up which caught my attention:

Given the rapid race for profits and the tendrils of bias rooted in our digital libraries and lived experiences, it’s unlikely we’ll ever fully vanquish it from our AI innovation. But that can’t mean inaction or ignorance is acceptable. More diversity in STEM and more diversity of talent intimately involved in the AI process will undoubtedly mean more accurate, inclusive models — and that’s something we will all benefit from.

Okay, what’s the plan? Who is going to take the lead? What’s the timeline? Who will do the work to address the educational and psychological factors? Simple, right? Words, words, words.

Stephen E Arnold, July 25, 2024

Why Is Anyone Surprised That AI Is Biased?

July 25, 2024

Let’s go over this one last time, all right? Algorithms are biased against specific groups.

Why are they biased? They’re biased because the training data sets contain limited information about diversity.

What types of diversity? There’s a range, but it usually involves race, sex, and socioeconomic status.

How does this happen? It usually happens, not because the designers are racist or whatever, but from blind ignorance. They don’t see outside their technology boxes so their focus is limited.

But they can be racist, sexist, etc? Yes, they’re human and have their personal prejudices. Those can be consciously or inadvertently programmed into a data set.

How can this be fixed? Get larger, cleaner data sets that are more reflective of actual populations.

Did you miss any minority groups? Unfortunately yes, and it happens to be an oldie but a goodie: disabled folks. Stephen Downes writes that “ChatGPT Shows Hiring Bias Against People With Disabilities.” Downes commented on an article from Futurity that describes how a doctoral student at the University of Washington studied the way ChatGPT ranks resumes of abled vs. disabled people.

The test found that when ChatGPT was asked to rank resumes, those that included references to a disability were ranked lower. This part is questionable because the article doesn’t state the prompt given to ChatGPT. When the generative text AI was told to be less “ableist,” some of the “disabled” resumes ranked higher. The article then goes into a valid yet overplayed argument about diversity and inclusion. No solutions were provided.

Downes asked questions that also beg for solutions:

“This is a problem, obviously. But in assessing issues of this type, two additional questions need to be asked: first, how does the AI performance compare with human performance? After all, it is very likely the AI is drawing on actual human discrimination when it learns how to assess applications. And second, how much easier is it to correct the AI behaviour as compared to the human behaviour? This article doesn’t really consider the comparison with humans. But it does show the AI can be corrected. How about the human counterparts?”

Solutions? Anyone?

Whitney Grace, July 25, 2024

Crowd What? Strike Who?

July 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

How are those Delta cancellations going? Yeah, summer, families, harried business executives, and lots of hand waving. I read a semi-essay about the minor update misstep which caused blue to become a color associated with failure. I love the quirky sad face and the explanations from the assorted executives, experts, and poohbahs about how so many systems could fail in such a short time on a global scale.

In “Surely Microsoft Isn’t Blaming EU for Its Problems?” I noted six reasons the CrowdStrike issue became news instead of a system administrator annoyance. In a nutshell, the reasons identified hark back to Microsoft’s decision to use an “open design.” I like the phrase because it beckons a wide range of people to dig into the plumbing. Microsoft also allegedly wants to support its customers with older computers. I am not sure older anything is supported by anyone. As a dinobaby, I have first-hand experience with this “we care about legacy stuff.” Baloney. The essay mentions “kernel-level access.” How’s that working out? Based on CrowdStrike’s remarkable ability to generate PR from exceptions which appear to have allowed the super special security software to do its thing, that access sure does deliver. (Why does the nationality of CrowdStrike’s co-founder not get mentioned? Dmitri Alperovitch, a Russian who became a US citizen, and a couple of other people set up the firm in 2011. Is there any possibility that the incident was a test play or part of a Russian long game?)

Satan congratulates one of his technical professionals for an update well done. Thanks, MSFT Copilot. How’re things today? Oh, that’s too bad.

The essay mentions that the world today is complex. Yeah, complexity goes with nifty technology, and everyone loves complexity when it becomes like an appliance until it doesn’t work. Then fixes are difficult because few know what went wrong. The article tosses in a reference to Microsoft’s “market size.” But centralization is what an appliance does, right? Who wants a tube radio when the radio can be software defined and embedded in another gizmo like those FM radios in some mobile devices. Who knew? And then there is a reference to “security.” We are presented with a tidy list.

The one hitch in the git along is that the issue emerges from a business culture which has zero to do with technology. The objective of a commercial enterprise is to generate profits. Companies generate profits by selling high, subtracting costs, and keeping the rest for themselves and stakeholders.

Hiring and training professionals to do jobs like quality checks, environmental impact statements, and ensuring ethical business behavior in work processes is overhead. One can hire a blue chip consulting firm and spark an opioid crisis or deprecate funding for pre-release checks and quality assurance work.

Engineering excellence takes time and money. What’s valued is maximizing the payoff. The other baloney is marketing and PR to keep regulators, competitors, and lawyers away.

The write up encapsulates the reason that change will be difficult and probably impossible for a company, whether in the US or Ukraine, to deliver what the customer expects. Regulators have failed to protect citizens from the behaviors of commercial enterprises. The customers assume that a big company cares about excellence.

I am not pessimistic. I have simply learned to survive in what is a quite error-prone environment. Pundits call the world fragile or brittle. Those words are okay. The more accurate term is reality. Get used to it and knock off the jargon about failure, corner cutting, and profit maximization. The reality is that Delta, blue screens, and yip yap about software chock full of issues define the world.

Fancy talk, lists, and entitled assurances won’t do the job. Reality is here. Accept it and blame.

Stephen E Arnold, July 24, 2024

The French AI Service Aims for the Ultimate: Cheese, Yes. AI? Maybe

July 24, 2024

AI developments are dominating technology news. Nothing makes tech news headlines jump up the newsfeed faster than mergers or partnerships. The Next Web delivered when it shared news that “Silo and Mistral Join Forces in Yet Another European AI Team-Up.” Europe is the home base for many AI players, including Silo and Mistral. These companies are from Finland and France respectively, and they decided to partner to design sovereign AI solutions.

Silo is already known for partnering with other companies, and Mistral is the latest addition to its growing roster of teammates. The collaboration between the two focuses on planning and deploying AI within existing infrastructures:

The past couple of years have seen businesses scramble to implement AI, often even before they know how they are actually going to use it, for fear of being left behind. Without proper implementation and the correct solutions and models, the promises of efficiency gains and added value that artificial intelligence can offer an organization risk falling flat.

Silo and Mistral say they will provide a joint offering for businesses, “merging the end-to-end AI capabilities of Silo AI with Mistral AI’s industry leading state-of-the-art AI models,” combining their expertise to meet an increasing demand for value-creating AI solutions.

Silo focuses on digital sovereignty and has developed open source LLMs for “low resource European languages.” Mistral designs generative AI models, releasing open source versions for hobbyists and fancier versions for commercial ventures.

The partnership between the two companies plans to speed up AI adoption across Europe and equalize it by including more regional languages.

Whitney Grace, July 24, 2024

Automating to Reduce Staff: Money Talks, Employees? Yeah, Well

July 24, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Are you a developer who oversees a project? Are you one of those professionals who toiled to understand the true beauty of a PERT chart invented, I have heard, by a Type A blue-chip consulting firm? If so, you may sport these initials on your business card: PMP, PMI-RMP, PRINCE2, etc. I would suggest that Google is taking steps to eliminate your role. How do I know the death knell tolls for thee? Easy. I read “Google Brings AI Agent Platform Project Oscar Open Source.” The write up doesn’t come out and say, “Dev managers or project managers, find your future elsewhere,” but the intent bubbles beneath the surface of the Google speak.

A 35-year-old executive gets the good news. As a project manager, he can now seek another information-mediating job at an independent plumbing company, a local dry cleaner, or the outfit that repurposes basketball courts to pickleball courts. So many futures to find. Thanks, MSFT Copilot. That’s a pretty good Grim Reaper. The former PMP looks snappy too. Well, good enough.

The “Google Brings AI Agent Platform Project Oscar Open Source” real “news” story says:

Google has announced Project Oscar, a way for open-source development teams to use and build agents to manage software programs.

Say hi to Project Oscar. The smart software is new, so expect it to morph, be killed, resurrected, and live a long fruitful life.

The write up continues:

“I truly believe that AI has the potential to transform the entire software development lifecycle in many positive ways,” Karthik Padmanabhan, lead Developer Relations at Google India, said in a blog post. “[We’re] sharing a sneak peek into AI agents we’re working on as part of our quest to make AI even more helpful and accessible to all developers.” Through Project Oscar, developers can create AI agents that function throughout the software development lifecycle. These agents can range from a developer agent to a planning agent, runtime agent, or support agent. The agents can interact through natural language, so users can give instructions to them without needing to redo any code.

Helpful? Seems like it. Will smart software reduce costs and allow for more “efficiency methods” to be implemented? Yep.

The article includes a statement from a Googler; to wit:

“We wondered if AI agents could help, not by writing code which we truly enjoy, but by reducing disruptions and toil,” Balahan said in a video released by Google. Go uses an AI agent developed through Project Oscar that takes issue reports and “enriches issue reports by reviewing this data or invoking development tools to surface the information that matters most.” The agent also interacts with whoever reports an issue to clarify anything, even if human maintainers are not online.

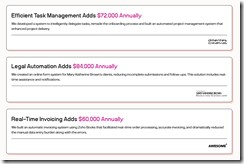

Where is Google headed with this “manage software programs” idea? A partial answer may be deduced from this write up from Linklemon. Its commercial is titled “We Automate Workflows for Small to Medium (sic) Businesses.” The image below explains the business case clearly:

Those purple numbers are generated by chopping staff and making an existing system cheaper to operate. Translation: Find your future elsewhere, please.

My hunch is that if the automation in Google India is “good enough,” the service will be tested in the US. Once that happens, Microsoft and other enterprise vendors will jump on the me-too express.

What’s that mean? Oh, heck, I don’t want to rerun that tired old “find your future elsewhere” line, but I will: Many professionals who intermediate information will hear, “Great news, you now have the opportunity to find your future elsewhere.” Lucky folks, right, Google?

Stephen E Arnold, July 24, 2024

Google AdWords in Russia?

July 23, 2024

This essay is the work of a dumb humanoid. No smart software required.

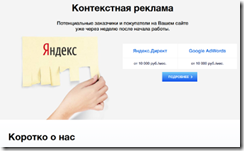

I have been working on a project requiring me to examine a handful of Web sites hosted in Russia, in the Russian language, and tailored for people residing in Russia and its affiliated countries. I came away today with a screenshot from the site for IT Cube Studio. The outfit creates Web sites and provides advertising services. Here’s a screenshot in Russian which advertises the firm’s ability to place Google AdWords for a Russian client:

If you don’t read Russian, here’s the translation of the text. I used Google Translate which seems to do an okay job with the language pair Russian to English. The ad says:

Contextual advertising. Potential customers and buyers on your website a week after the start of work.

The word “Яндекс” is the Russian spelling of Yandex. The Google word is “Google.”

I thought there were sanctions. In fact, I navigated to Google and entered this query: “google AdWords Russia.” What did Google tell me on July 22, 2024, at 5:03 pm US Eastern time?

Here’s the Google results page:

The screenshot is difficult to read, but let me highlight the answer to my question about Google’s selling AdWords in Russia.

There is a March 10, 2022, update which says:

Mar 10, 2022 — As part of our recent suspension of ads in Russia, we will also pause ads on Google properties and networks globally for advertisers based in [Russia] …

Plus there is one of those “smart” answers which says:

People also ask

Does Google Ads work in Russia?

Due to the ongoing war in Ukraine, we will be temporarily pausing Google ads from serving to users located in Russia. [Emphasis in the original Google results page display]

I know my Russian is terrible, but I am probably slightly better equipped to read and understand English. The Google results seem to say, “Hey, we don’t sell AdWords in Russia.”

I wonder if the company IT Cube Studio is just doing some marketing razzle dazzle. Is it possible that Google is saying one thing and doing another in Russia? I recall that Google said it wasn’t WiFi sniffing in Germany a number of years ago. I believe that Google was surprised when the WiFi sniffing was documented and disclosed.

I find these big company questions difficult to answer. I am certainly not a Google-grade intellect. I am a dinobaby. And I am inclined to believe that there is a really simple explanation or a very, very sincere apology if the IT Cube Studio outfit is selling Google AdWords when sanctions are in place.

If any of the two or three people who follow my Web log knows the answer to my questions, please let me know. You can write me at benkent2020 at yahoo dot com. For now, I find this interesting. The Google would not violate sanctions, would it?

Stephen E Arnold, July 23, 2024