Okay, Google, How Are Your Fancy Math Recommendation Procedures Working? Just Great, You Say

May 17, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have no idea what the Tech Transparency Project is. I am not interested in running a Google query for illumination. I don’t want to read jibber jabber from You.com or ChatGPT. In short, I am the ideal dinobaby: Set in his ways, uninterested, and skeptical of information from an outfit which wants “transparency” in our shadow age.

I read “YouTube Leads Young Gamers to Videos of Guns, School Shootings.” For the moment, let’s assume that the Transparency folks are absolutely 100 percent off the mark. Google YouTube’s algorithms are humming along with 99.999999 (six sigma land) accuracy. Furthermore, let’s assume that the Google YouTube ad machine is providing people only with links known to have direct relevance to a user’s YouTube viewing habits.

What’s this mean?

It means that Google is doing a bang up job of protecting young people, impressionable minds, and those who stumble into a forest of probabilities from “bad stuff.” The Transparency Project has selected outlier data and is not understanding the brilliant and precise methods of the Google algorithm wizards. Since people at the Transparency Project do not (I shall assume) work at Google, how can these non-Googlers fathom the subtle functioning of the Google mechanisms? Remember the old chestnut about people who thought cargo planes were a manifestation of God. Well, cargo cult worshippers need to accept the Google reality.

Let’s take a different viewpoint. Google is a pretty careless outfit. Multiple product and internal teams spar with one another over the Foosball table. Power struggles erupt in the stratospheric intellectual heights of Google carpetland and Google Labs. Wizards get promoted and great leaders who live far, far away become the one with the passkey to the smart software control room. Lesser wizards follow instructions, and the result may be what the Tech Transparency write up is describing — mere aberrations, tiny shavings of infinitesimals which could add up to something, or a glitch in a threshold setting caused by a surge of energy released when a Googler learns about a new ChatGPT application.

A researcher explaining how popular online video services can shape young minds. As Charles Colson observed, “Once you have them by the [unmentionables], their hearts and minds will follow.” True or false when it comes to pumping video information into immature minds of those seven to 14 years old? False, of course. Balderdash. Anyone suggesting such psychological operations is unfit to express an opinion. That sounds reasonable, right? Art happily generated by the tireless servant of creators — MidJourney, of course.

The write up states:

- YouTube recommended hundreds of videos about guns and gun violence to accounts for boys interested in video games, according to a new study.

- Some of the recommended videos gave instructions on how to convert guns into automatic weapons or depicted school shootings.

- The gamer accounts that watched the YouTube-recommended videos got served a much higher volume of gun- and shooting-related content.

- Many of the videos violated YouTube’s own policies on firearms, violence, and child safety, and YouTube took no apparent steps to age-restrict them.

And what supports these assertions which fly in the face of Googzilla’s assertions about risk, filtering, concern for youth, yada yada yada?

Let me present one statement from the cited article:

The study found YouTube recommending numerous other weapons-related videos to minors that violated the platform’s policies. For example, YouTube’s algorithm pushed a video titled “Mag-Fed 20MM Rifle with Suppressor” to the 14-year-old who watched recommended content. The description on the 24-second video, which was uploaded 16 years ago and has 4.8 million views, names the rifle and suppressor and links to a website selling them. That’s a clear violation of YouTube’s firearms policy, which does not allow content that includes “Links in the title or description of your video to sites where firearms or the accessories noted above are sold.”

What’s YouTube doing?

In my opinion, here’s the goal:

- Generate clicks

- Push content which may attract ads from companies looking to reach a specific demographic

- Ignore the suits-in-carpetland in order to get a bonus, get promoted, or land a better job.

The culprit is, from my point of view, the disconnect between Google’s incentive plans for employees and the hand waving baloney in its public statements and footnote-heavy PR like “Ethical and Social Risks of Harm from Language Models.”

If you are wearing Google glasses, you may want to check out the company with a couple of other people who are scrutinizing the disconnect between what Google says and what Google does.

So which is correct? The Google is doing God, oh, sorry, doing good. Or, the Google is playing with kiddie attention to further its own agenda?

A suggestion for the researchers: Capture the pre-roll ads, the mid-roll ads, and the end-roll ads. Isn’t there data in those observations?

Stephen E Arnold, May 17, 2023

Kiddie Research: Some Guidelines

May 17, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The practice of performing market research on children will not go away any time soon. It is absolutely vital, after all, that companies be able to target our youth with pinpoint accuracy. In the article “A Guide on Conducting Better Market and User Research with Kids,” Meghan Skapyak of the UX Collective shares some best practices. Apparently these tips can help companies enthrall the most young people while protecting individual study participants. An interesting dichotomy. She writes:

“Kids are a really interesting source of knowledge and insight in the creation of new technology and digital experiences. They’re highly expressive, brutally honest, and have seamlessly integrated technology into their lives while still not fully understanding how it works. They pay close attention to the visual appeal and entertainment-value of an experience, and will very quickly lose interest if a website or app is ‘boring’ or doesn’t look quite right. They’re more prone to error when interacting with a digital experience and way more likely to experiment and play around with elements that aren’t essential to the task at hand. These aspects of children’s interactions with technology make them awesome research participants and testers when researchers structure their sessions correctly. This is no easy task however, as there are lots of methodological, behavioral, structural, and ethical considerations to take in mind while planning out how your team will conduct research with kids in order to achieve the best possible results.”

Skapyak goes on to blend and summarize decades of research on ethical guidelines, structural considerations, and methodological experiments in this field. To her credit, she starts with the command to “keep it ethical” and supplies links to the UN Convention on the Rights of the Child and UNICEF’s Ethical Research Involving Children. Only then does she launch into techniques for wringing the most shrewd insights from youngsters. Examples include turning it into a game, giving kids enough time to get comfortable, and treating them as the experts. See the article for more details on how to better sell stuff to kids and plant ideas in their heads while not violating the rights of test subjects.

Cynthia Murrell, May 17, 2023

Thinking about AI: Is It That Hard?

May 17, 2023

I read “Why I’m Having Trouble Covering AI: If You Believe That the Most Serious Risks from AI Are Real, Should You Write about Anything Else?” The version I saw was a screenshot, presumably to cause me to go to Platformer in order to interact with it. I use smart software to convert screenshots into text, so the risk reduced by the screenshot was in the mind of the creator.

Here’s a statement I underlined:

The reason I’m having trouble covering AI lately is because there is such a high variance in the way that the people who have considered the question most deeply think about risk.

My recollection is that Daniel Kahneman allegedly cooked up the idea of “prospect theory.” As I understand the idea, humans are not very good when thinking about risk. In fact, some people take risks because they think that a problem can be averted. Others avoid risk because omission is okay; for example, when reporting a financial problem. Why not just leave it out and cook up a footnote? Omissions are often okay with some government authorities.

I view the AI landscape from a different angle.

First, smart software has been chugging along for many years. May I suggest you fire up a copy of Microsoft Word, use it with its default settings, and watch how words are identified, phrases underlined, and letters automatically capitalized? How about using Amazon to buy lotion. Click on the buy now button and navigate to the order page. It’s magic. Amazon has used software to perform tasks which once required a room with clerks. There are other examples. My point is that the current baloney roll is swelling from its own gaseous emissions.

Second, the magic of ChatGPT outputting summaries was available 30 years ago from Island Software. Stick in the text of an article, and the desktop system spit out an abstract. Was it good? If one were a high school student, it was. If you were building a commercial database product fraught with jargon, technical terms, and abstruse content, it was not so good. Flash forward to now. Bing, You.com, and presumably the new and improved Bard are better. Is this surprising? Nope. Thirty years of effort have gone into this task of making a summary. Am I to believe that the world will end because smart software is causing a singularity? I am not reluctant to think quantum supremacy type thoughts. I just don’t get too overwrought.

Third, using smart software and methods which have been around for centuries — yep, centuries — is a result of easy-to-use tools being available at low cost or free. I find You.com helpful; I don’t pay for it. I tried Kagi and Teva; not so useful and I won’t pay for it. Swisscows.com works reasonably well for me. Cash conserving and time saving are important. Smart software can deliver this easily and economically. When the math does not work, then I am okay with manual methods. Will the smart software take over the world and destroy me as an Econ Talk guest suggested? Sure. Maybe? Soon. What’s soon mean?

Fourth, the interest in AI, in my opinion, is a result of several factors: [a] Interesting demonstrations and applications at a time when innovation becomes buying or trying to buy a game company, [b] avoiding legal interactions due to behavioral or monopoly allegations, [c] a deteriorating economy due to the Covid and free money events, [d] frustration among users with software and systems focused on annoying, not delighting, their users; [e] the inability of certain large companies to make management decisions free of high school science club thinking, which is not appropriate for today’s business world; [f] data are available; [g] computing power is comparatively cheap; [h] software libraries, code snippets, off-the-shelf models, and related lubricants are findable and either free to use or cheap; [i] obvious inefficiencies exist so a new tool is worth a try; and [j] the lure of a bright shiny thing which could make a few people lots of money adds a bit of zest to the stew.

Therefore, I am not confused, nor am I overly concerned with those who predict home runs or end-of-world outcomes.

What about big AI brains getting fired or quitting?

Three observations:

First, outfits like Facebook and Google type companies are pretty weird and crazy places. Individuals who want to take a measured approach or who are not interested in having 20-somethings play with their mobile when contributing to a discussion should get out or get thrown out. Scared or addled or arrogant big company managers want the folks to speak the same language, to be on the same page even if the messages are written in invisible ink, encrypted, and circulated to the high school science club officers.

Second, like most technologies chock full of jargon, excitement, and the odor of crisp greenbacks, expectations are high. Reality is often able to deliver friction that the cheerleaders, believers, and venture capitalists don’t want to acknowledge. That friction exists and will make its presence felt. How quickly? Maybe Bud Light quickly? Maybe Google ad practice awareness speed? Who knows? Friction just is and, like gravity, is difficult to ignore.

Third, the confusion about AI depends upon the lenses through which one observes what’s going on. What are these lenses? My team has identified five smart software lenses. Depending on what lens is in your pair of glasses and how strong the curvatures are, you will be affected by the societal lens, the technical lens, the individual lens (that’s the certain blindness each of us has), the political lens, and the financial lens. With lots to look at, the choice of lens is important. The inability to discern what is important depends on the context existing when the AI glasses are perched on one’s nose. It is okay to be confused; unknowing adds the splash of Slap Ya Mama to my digital burrito.

Net net: Meta-reflections are a glimpse into the inner mind of a pundit, podcast star, and high-energy writer. The reality of AI is a replay of a video I saw when the Internet made online visible to many people, not just a few individuals. What’s happened to that revolution? Ads and criminal behavior. What about the mobile revolution? How has that worked out? From my point of view it creates an audience for technology which could, might, may, will, or whatever other forward-looking word one wants to use. AI is going to glue together the lowest common denominator of greed with the deconstructive power of digital information. No Terminator is needed. I am used to being confused, and I am perfectly okay with the surrealistic world in which I live.

PS. We lectured two weeks ago to a distinguished group and mentioned smart software four times in two and one half hours. Why? It’s software. It has utility. It is nothing new. My prospect theory pegs artificial intelligence in the same category as online (think NASA Recon), browsing (think remote content to a local device), and portable phones (talking and doing other stuff without wires). Also, my Zepp watch stress reading is in the low 30s. No enlarged or cancerous prospect theory for me at this time.

Stephen E Arnold, May 17, 2023

Fake News Websites Proliferate Thanks AI!

May 16, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Technology has consequences. And in the case of advanced AI chatbots, it seems those who unleashed the tech on the world had their excuses ready. Gadgets360° shares the article, “ChatGPT-Like AI Chatbots Have Been Used to Create 49 News Websites: NewsGuard Report.” Though researchers discovered they were created with software like OpenAI’s ChatGPT and, possibly, Google Bard, none of the 49 “news” sites disclosed that origin story. Bloomberg reporter Davey Alba cites a report by NewsGuard that details how researchers hunted down these sites: They searched for phrases commonly found in AI-generated text using tools like CrowdTangle (a sibling of Facebook) and Meltwater. They also received help from the AI text classifier GPTZero. Alba writes:

“In several instances, NewsGuard documented how the chatbots generated falsehoods for published pieces. In April alone, a website called CelebritiesDeaths.com published an article titled, ‘Biden dead. Harris acting President, address 9 a.m.’ Another concocted facts about the life and works of an architect as part of a falsified obituary. And a site called TNewsNetwork published an unverified story about the deaths of thousands of soldiers in the Russia-Ukraine war, based on a YouTube video. The majority of the sites appear to be content farms — low-quality websites run by anonymous sources that churn-out posts to bring in advertising. The websites are based all over the world and are published in several languages, including English, Portuguese, Tagalog and Thai, NewsGuard said in its report. A handful of sites generated some revenue by advertising ‘guest posting’ — in which people can order up mentions of their business on the websites for a fee to help their search ranking. Others appeared to attempt to build an audience on social media, such as ScoopEarth.com, which publishes celebrity biographies and whose related Facebook page has a following of 124,000.”

Naturally, more than half the sites they found were running targeted ads. NewsGuard reasonably suggested AI companies should build in safeguards against their creations being used this way. Both OpenAI and Google point to existing review procedures and enforcement policies against misuse. Alba notes the situation is particularly tricky for Google, which profits from the ads that grace the fake news sites. After Bloomberg alerted it to the NewsGuard findings, the company did remove some ads from some of the sites.

Of course, posting fake and shoddy content for traffic, ads, and clicks is nothing new. But, as one expert confirmed, the most recent breakthroughs in AI technology make it much easier, faster, and cheaper. Gee, who could have foreseen that?
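For readers curious about the phrase-hunting step NewsGuard described, here is a toy sketch. It does not reproduce what CrowdTangle, Meltwater, or GPTZero actually do; it simply assumes plain-text article bodies keyed by URL and a hypothetical list of telltale phrases (the two below are illustrative stand-ins, not drawn from the report), then flags any article containing one. A hit is a lead for a human to check, not proof that a machine wrote the piece.

```python
import re

# Hypothetical telltale phrases: illustrative only, not NewsGuard's actual list.
TELLTALE_PHRASES = [
    "as an ai language model",
    "i cannot complete this prompt",
]


def find_telltale_phrases(text: str) -> list[str]:
    """Return the telltale phrases found in text (case-insensitive, whitespace-normalized)."""
    normalized = re.sub(r"\s+", " ", text.lower())
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]


def flag_articles(articles: dict[str, str]) -> dict[str, list[str]]:
    """Map each article URL to the phrases it contains; articles with no hits are omitted."""
    flagged = {}
    for url, body in articles.items():
        hits = find_telltale_phrases(body)
        if hits:
            flagged[url] = hits
    return flagged


if __name__ == "__main__":
    sample = {
        "https://example.com/a": "As an AI language model, I cannot verify this.",
        "https://example.com/b": "The council met on Tuesday.",
    }
    print(flag_articles(sample))  # prints {'https://example.com/a': ['as an ai language model']}
```

Exact substring matching keeps the sketch simple; a content farm that edits out the boilerplate slips right past it, which is presumably why classifiers and human reviewers enter the picture.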

Cynthia Murrell, May 16, 2023

Architects: Handwork Is the Future of Some?

May 16, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I think there is a new category of blog posts. I call it “we will be relevant” essays. A good example is from Architizer and its essay “5 Reasons Why Architects Must Not Give Up On Hand Drawings and Physical Models: Despite the Rise of CAD, BIM and Now AI, Low-Tech Creative Mediums Remain of Vital Importance to Architects and Designers.” [Note: BIM is an acronym for “building information modeling.”]

The write up asserts:

“As AI-generated content rapidly becomes the norm, I predict a counter-culture of manually-crafted creations, with the art of human imperfection and idiosyncrasy becoming marketable in its own right,” argued Architizer’s own Paul Keskeys in a recent LinkedIn post.

The person doing the predicting is the editor of Architizer.

Now look at this architectural rendering of a tiny house. I generated it in a minute using MidJourney, a Jim Dandy image outputter.

I think it looks okay. Furthermore, I think it is a short step from the rendering to smart software outputting the floor plans, bill of materials, a checklist of legal procedures to follow, the content of those legal procedures, and a ready-to-distribute tender. The notion of threading together pools of information into a workflow is becoming a reality if I believe the hot sauce doused on smart software when TWIST, Jason Calacanis’ AI-themed podcasts, air. I am not sure the vision of some of the confections explored on this program is right around the corner, but the functionality is now in a rental cloud computer and ready to depart.

Why would a person wanting to buy a tiny house pay a human to develop designs, go through the grunt work of figuring out window sizes, and get the specification ready for me to review? I just want a tiny house as reasonably priced as possible. I don’t want a person handcrafting a baby model with plastic trees. I don’t want a human junior intern plugging in the bits and pieces. I want software to do the job.

I am not sure how many people like me are thinking about tiny houses, ranch houses, or non-tilting apartment towers. I do think that architects who do not get with the smart software program will find themselves in a fight for survival.

The CAD, BIM, and AI are capabilities that evoke images of buggy whip manufacturers who do not shift to Tesla interior repairs.

Stephen E Arnold, May 16, 2023

The Gray Lady: Objective Gloating about Vice

May 15, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Do you have dreams about the church lady on Saturday Night Live? That skit frightened me. A flashback shook my placid mental state when I read “Vice, Decayed Digital Colossus, Files for Bankruptcy.” I conjured up, without the assistance of smart software, the image of Dana Carvey talking about the pundit spawning machine named Vice with the statement, “Well, isn’t that special?”

The New York Times’s article reported:

Vice Media filed for bankruptcy on Monday, punctuating a years long descent from a new-media darling to a cautionary tale of the problems facing the digital publishing industry.

The write up omits any reference to the New York Times’s failure with its own online venture under the guidance of Jeff Pemberton, the flame out with its LexisNexis play, the fraught effort to index its own content, and the misadventures which have become the Wordle success story. The past Don Quixote-like sallies into the digital world are either irrelevant or unknown to the current crop of Gray Lady “real” news hounds, I surmise.

The article states:

Investments from media titans like Disney and shrewd financial investors like TPG, which spent hundreds of millions of dollars, will be rendered worthless by the bankruptcy, cementing Vice’s status among the most notable bad bets in the media industry. [Emphasis added.]

Well, isn’t that special? Perhaps similar to the Times’s first online adventure in the late 1970s?

The article includes a quote from a community journalism company too:

“We now know that a brand tethered to social media for its growth and audience alone is not sustainable.”

Perhaps like the desire for more money than the Times’s LexisNexis deal provided? Perhaps?

Is Vice that special? I think the story is a footnote to the Gray Lady’s own adventures in the digital realm?

Isn’t that special too?

Stephen E Arnold, May 15, 2023

ChatGPT Mind Reading: Sure, Plus It Is a Force for Good

May 15, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The potential of artificial intelligence, for both good and evil, just got bumped up another notch. Surprised? Neither are we. The Guardian reveals, “AI Makes Non-Invasive Mind-Reading Possible by Turning Thoughts into Text.” For 15 years, researchers at the University of Texas at Austin have been working on a way to help patients whose stroke, motor neuron disease, or other conditions have made it hard to communicate. While impressive, previous systems could translate brain activity into text only with the help of surgical implants. More recently, researchers found a way to do the same thing with data from fMRI scans. But the process was so slow as to make it nearly useless as a communication tool. Until now. Correspondent Hannah Devlin writes:

“However, the advent of large language models – the kind of AI underpinning OpenAI’s ChatGPT – provided a new way in. These models are able to represent, in numbers, the semantic meaning of speech, allowing the scientists to look at which patterns of neuronal activity corresponded to strings of words with a particular meaning rather than attempting to read out activity word by word. The learning process was intensive: three volunteers were required to lie in a scanner for 16 hours each, listening to podcasts. The decoder was trained to match brain activity to meaning using a large language model, GPT-1, a precursor to ChatGPT. Later, the same participants were scanned listening to a new story or imagining telling a story and the decoder was used to generate text from brain activity alone. About half the time, the text closely – and sometimes precisely – matched the intended meanings of the original words. ‘Our system works at the level of ideas, semantics, meaning,’ said Huth. ‘This is the reason why what we get out is not the exact words, it’s the gist.’ For instance, when a participant was played the words ‘I don’t have my driver’s license yet,’ the decoder translated them as ‘She has not even started to learn to drive yet’.”

That is a pretty good gist. See the write-up for more examples as well as a few limitations researchers found. Naturally, refinement continues. The study’s co-author Jerry Tang acknowledges this technology could be dangerous in the hands of bad actors, but says they have “worked to avoid that.” He does not reveal exactly how. That is probably for the best.

Cynthia Murrell, May 15, 2023

HP Autonomy: A Modest Disagreement Escalates

May 15, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

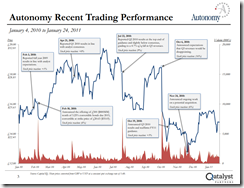

About 12 years ago, Hewlett Packard acquired Autonomy. As I understand the deal, HP wanted to snap up Autonomy to make a move in the enterprise services business. Autonomy was one of the major providers of search and some related content processing services in 2010. Autonomy’s revenues were nosing toward $800 million, a level no other search and retrieval software company had previously achieved.

However, as Qatalyst Partners reported in an Autonomy profile, the share price was not exactly hitting home runs each quarter:

Source: Autonomy Trading and Financial Statistics, 2011 by Qatalyst Partners

After some HP executive turmoil, the deal was done. After a year or so, HP analysts determined that the Silicon Valley company paid too much for Autonomy. The result was high profile litigation. One Autonomy executive found himself losing and suffering the embarrassment of jail time.

“Autonomy Founder Mike Lynch Flown to US for HPE Fraud Trial” reports:

Autonomy founder Mike Lynch has been extradited to the US under criminal charges that he defrauded HP when he sold his software business to them for $11 billion in 2011. The 57-year-old is facing allegations that he inflated the books at Autonomy to generate a higher sale price for the business, the value of which HP subsequently wrote down by billions of dollars.

Although I did some consulting work for Autonomy, I have no unique information about the company, the HP allegations, or the legal process which will unspool in the US.

In a recent conversation with a person who had first hand knowledge of the deal, I learned that HP was disappointed with the Autonomy approach to business. I pushed back and pointed out three things to a person who was quite agitated that I did not share his outrage. My points, as I recall, were:

- A number of search-and-retrieval companies failed to generate revenue sufficient to meet their investors’ expectations. These included outfits like Convera (formerly Excalibur Technologies), Entopia, and numerous other firms. Some were sold and were operated as reasonably successful businesses; for example, Dassault Systèmes and Exalead. Others were folded into a larger business; for example, Microsoft’s purchase of Fast Search & Transfer and Oracle’s acquisition of Endeca. The period from 2008 to 2013 was particularly difficult for vendors of enterprise search and content processing systems. I documented these issues in The Enterprise Search Report and a couple of other books I wrote.

- Enterprise search vendors and some hybrid outfits which developed search-related products and services used bundling as a way to make sales. The idea was not new. IBM refined the approach. Buy a mainframe and get support free for a period of time. Then the customer could pay a license fee for the software and upgrades and pay for services. IBM charged me $850 to roll a specialist to look at my three out-of-warranty PC 704 servers. (That was the end of my reliance on IBM equipment and its marvelous ServeRAID technology.) Libraries, for example, could acquire hardware. The “soft” components had a different budget cycle. The solution? Split up the deal. I think Autonomy emulated this approach and added some unique features. Nevertheless, the market for search and content related services was and is a difficult one. Fast Search & Transfer had its own approach. That landed the company in hot water and the founder on the pages of newspapers across Scandinavia.

- Sales professionals could generate interest in search and content processing systems by describing the benefits of finding information buried in a company’s file cabinets, tucked into PowerPoint presentations, and sleeping peacefully in email. Like the current buzz about OpenAI and ChatGPT, expectations are loftier than the reality of some implementations. Enterprise search vendors like Autonomy had to deal with angry licensees who could not find information, heated objections to the cost of reindexing content to make it possible for employees to find the file saved yesterday (an expensive and difficult task even today), and howls of outrage because certain functions had to be coded to meet the specific content requirements of a particular licensee. Remember that a large company does not need one search and retrieval system. There are many, quite specific requirements. These range from engineering drawings in the R&D center to the super sensitive employee compensation data, from the legal department’s need to process discovery information to the mandated classified documents associated with a government contract.

These issues remain today. Autonomy is now back in the spotlight. The British government, as I understand the situation, is not chasing Dr. Lynch for his methods. HP and the US legal system are.

The person with whom I spoke was not interested in my three points. He has a Harvard education and I am a geriatric. I will survive his anger toward Autonomy and his obvious affection for the estimable HP, its eavesdropping Board and its executive revolving door.

What few recall is that Autonomy was one of the first vendors of search to use smart software. The implementation was described as Neuro Linguistic Programming. Like today’s smart software, the functioning of the Autonomy core technology was a black box. I assume the litigation will expose this Autonomy black box. Is there a message for the ChatGPT-type outfits blossoming at a prodigious rate?

Yes, the enterprise search sector is about to undergo a rebirth. Organizations have information. Findability remains difficult. The fix? Merge ChatGPT type methods with an organization’s content. What do you get? A party which faded away in 2010 is coming back. The Beatles and Elvis vibe will be live, on stage, act fast.

Stephen E Arnold, May 15, 2023

The Seven Wonders of the Google AI World

May 12, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read the content at this Google Web page: https://ai.google/responsibility/principles/. I found it darned amazing. In fact, I thought of the original seven wonders of the world. Let’s see how Google’s statements compare with the down-through-time achievements of mere mortals from ancient times.

Let’s imagine two comedians explaining the difference between the two important sets of landmarks in human achievement. Here are the entertainers. These impressive individuals are a product of MidJourney’s smart software. The drawing illustrates the possibilities of artificial intelligence applied to regular intelligence and a certain big ad company’s capabilities. (That’s humor, gentle reader.)

Here are the seven wonders of the world according to the semi-reliable National Geographic (I loved those old Nat Geos when I was in the seventh grade in 1956-1957!):

- The pyramids of Giza (tombs or alien machinery, take your pick)

- The hanging gardens of Babylon (a building with a flower show)

- The temple of Artemis (goddess of the hunt for maybe relevant advertising?)

- The statue of Zeus (the thunder god like Googzilla?)

- The mausoleum at Halicarnassus (a tomb)

- The colossus of Rhodes (Greek sun god who inspired Louis XIV and his just-so hoity-toity pals)

- The lighthouse of Alexandria (bright light which baffles some who doubt a fire can cast a bright light to ships at sea)

Now the seven wonders of the Google AI world:

- Socially beneficial AI (how does AI help those who are not advertisers?)

- Avoid creating or reinforcing unfair bias (What’s Dr. Timnit Gebru say about this?)

- Be built and tested for safety? (Will AI address videos on YouTube which provide links to cracked software; e.g., this one?)

- Be accountable to people? (Maybe people who call for Google customer support?)

- Incorporate privacy design principles? (Will the European Commission embrace the Google, not litigate it?)

- Uphold high standards of scientific excellence? (Interesting. What’s “high” mean? What’s scientific about threshold fiddling? What’s “excellence”?)

- AI will be made available for uses that “accord with these principles”. (Is this another “Don’t be evil” moment?)

Now let’s evaluate in broad strokes the two sets of seven wonders. My initial impression is that the ancient seven wonders were tangible, not based on the future tense or the progressive tense, and not breathing the exhaust fumes of OpenAI and others in the AI game. After a bit of thought, I am not sure Google’s management will be able to convince me that its personnel policies, its management of its high school science club, and its knee-jerk reaction to the Microsoft Davos slam dunk are more than bloviating. Finally, the original seven wonders are either ruins or lost to all but a MidJourney reconstruction or a Bing output. Google is in the “careful” business. Translating: Google is Googley. OpenAI and ChatGPT are delivering blocks and stones for a real wonder of the world.

Net net: The ancient seven wonders represent something to which humans aspired or honored. The Google seven wonders of AI are, in my opinion, marketing via uncoordinated demos. However, Google will make more money than any of the ancient attractions did. The Google list may be perfect for the next Sundar and Prabhakar Comedy Show. Will it play in Paris? The last one there flopped.

Stephen E Arnold, May 12, 2023

Will McKinsey Be Replaced by AI: Missing the Point of Money and Power

May 12, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read a very unusual anti-big company and anti-big tech essay called “Will AI Become the New McKinsey?” The thesis of the essay in my opinion is expressed in this statement:

AI is a threat because of the way it assists capital.

The argument upon which this assertion is perched boils down to this: capitalism, in its present form, in today’s US of A is roached. The choices available to make life into a hard rock candy mountain world are stark. One: Boost capitalism so that, like cancer, it kills everything including itself. The other alternative is to wait for the “government” to implement policies to convert the endless scroll into a post-1984 theme park.

Let’s consider McKinsey. Whether the firm likes it or not, it has become the poster child and revenue model for other services firms. Paying to turn on one’s steering wheel heating element is an example of McKinsey-type thinking. The fentanyl problem is an unintended consequence of offering some baller ideas to a few big pharma outfits in the US. There are other examples. I prefer to focus on some intellectual characteristics which make the firm into the symbol of that which is wrong with the good old US of A; to wit:

- MBA think. Numbers drive decisions, not feel-good ideas like togetherness, helping others, and emulating Twitch’s AI-powered ask_Jesus program. If you have not seen this, check it out at this link. It has 64 viewers as I write this on May 7, 2023 at 2 pm US Eastern.

- Hitting goals. These are either expressed as targets to consultants or passed along by executives to the junior MBAs pushing the millstone round and round with dot points, charts, graphs, and zippy jargon speak. The incentive plan and its goals feed the MBAs. I think of these entities as cattle with some brains.

- Being viewed as super smart. I know that most successful consultants know they are smart. But many smart people who work at consulting firms like McKinsey are more insecure than an 11-year-old watching an Olympic gymnast flip and spin in an effortless manner. To overcome that insecurity, the MBA consultant seeks approval from his/her/its peers and from clients who eagerly pick the option the report was crafted to make a no-brainer. Yes, slaps on the back, lunch with a senior partner, and being identified as a person who would undertake grinding another rail car filled with wheat.

The essay, however, overlooks a simple fact about AI and similar “it will change everything” technology.

The technology does not do anything. It is a tool. The action comes from the individuals smart enough, bold enough, and quick enough to implement or apply it first. Once the momentum is visible, then the technology is shaped, weaponized, and guided to targets. The technology does not have much of a vote. In fact, technology is the millstone. The owner of the cattle is running the show. The write up ignores this simple fact.

One solution is to let the “government” develop policies. Another is for the technology to kill itself. Another is for those with money, courage, and brains to develop an ethical mindset. Yeah, good luck with these.

The government works for the big outfits in the good old US of A. No firm action against monopolies, right? Why? Lawyers, lobbyists, and leverage.

What does the essay achieve? [a] Calling attention to McKinsey helps McKinsey sell. [b] Trying to gently push a lefty idea is tough when those who can’t afford an apartment in Manhattan are swiping their iPhones and posting on BlueSky. [c] Accepting the reality that technology serves those who understand and have the cash to use that technology to gain more power and money.

Ugly? Only for those excluded from the top of the social pyramid, from juicy jobs at blue-chip consulting firms, from expertise in manipulating advanced systems and methods, and from the mindset to succeed in what is the only game in town.

PS. MBAs make errors like the Bud Light promotion. That type of mistake, not opioid tactics, may be an instrument of change. But taming AI to make a better, more ethical world? That’s a comedy hook worthy of the next Sundar & Prabhakar show.

Stephen E Arnold, May 12, 2023