Poli Sci and AI: Smart Software Boosts Bad Actors (No Kidding?)

November 22, 2023

This essay is the work of a dumb humanoid. No smart software required.

Smart software (AI, machine learning, et al) has sparked awareness in some political scientists. Until I read “Can Chatbots Help You Build a Bioweapon?”, I thought political scientists were still pondering Frederick William, Elector of Brandenburg’s social policies or Cambodian law in the 11th century. I was incorrect. Modern poli sci-influenced wonks are starting to wrestle with the immense potential of smart software for bad actors. I think this dispersal of the cloud of unknowing I perceived among a similar academic group when I entered a third-rate university in 1962 is a step forward. Ah, progress!

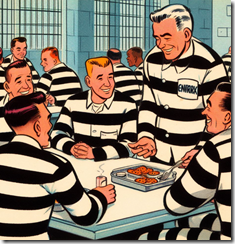

“Did you hear that the Senate Committee used my testimony about artificial intelligence in their draft regulations for chatbot rules and regulations?” says the recently admitted elected official. The inmates at the prison facility laugh at the incongruity of the situation. Thanks, Microsoft Bing, you do understand the ways of white collar influence peddling, don’t you?

The write up points out:

As policymakers consider the United States’ broader biosecurity and biotechnology goals, it will be important to understand that scientific knowledge is already readily accessible with or without a chatbot.

The statement is indeed accurate. Outside the esteemed halls of foreign policy power, STM (scientific, technical, and medical) information is abundant. Some of the data are online and reasonably easy to find with such advanced tools as Yandex.com (a Russian centric Web search system) or the more useful Chemical Abstracts data.

The write up’s revelations continue:

Consider the fact that high school biology students, congressional staffers, and middle-school summer campers already have hands-on experience genetically engineering bacteria. A budding scientist can use the internet to find all-encompassing resources.

Yes, more intellectual sunlight in the poli sci journal of record!

Let me offer one more example of groundbreaking insight:

In other words, a chatbot that lowers the information barrier should be seen as more like helping a user step over a curb than helping one scale an otherwise unsurmountable wall. Even so, it’s reasonable to worry that this extra help might make the difference for some malicious actors. What’s more, the simple perception that a chatbot can act as a biological assistant may be enough to attract and engage new actors, regardless of how widespread the information was to begin with.

Is there a step government deciders should take? Of course. It is the step that US high technology companies have been begging bureaucrats to take. Government should spell out rules for a morphing, little understood, and essentially uncontrollable suite of systems and methods.

There is nothing like regulating the present and future. Poli sci professionals believe it is possible to repaint the weird red tail on the Boeing F 7A aircraft while the jet is flying around. Trivial?

Here’s the recommendation which I found interesting:

Overemphasizing information security at the expense of innovation and economic advancement could have the unforeseen harmful side effect of derailing those efforts and their widespread benefits. Future biosecurity policy should balance the need for broad dissemination of science with guardrails against misuse, recognizing that people can gain scientific knowledge from high school classes and YouTube—not just from ChatGPT.

My take on this modest proposal is:

- Guard rails allow companies to pursue legal remedies as those companies do exactly what they want and when they want. Isn’t that why the Google “public” trial underway is essentially “secret”?

- Bad actors love open source tools. Unencumbered by bureaucracies, these folks can move quickly. In effect the mice are equipped with jet packs.

- Job matching services allow a bad actor in Greece or Hong Kong to identify and hire contract workers who may have highly specialized AI skills obtained doing their day jobs. The idea is that for a bargain price expertise is available to help smart software produce some AI infused surprises.

- Recycling the party line of a handful of high profile AI companies is what makes policy.

With poli sci professionals becoming aware of smart software, a better world will result. Why fret about livestock ownership in the glory days of what is now Cambodia? The AI stuff is here and now, waiting for the policy guidance which is sure to come even though the draft guidelines have been crafted by US AI companies?

Stephen E Arnold, November 22, 2023