Surprise! Smart Software and Medical Outputs May Kill You

February 29, 2024

This essay is the work of a dumb humanoid. No smart software required.

Have you been inhaling AI hype today? Exhale slowly, then read “Generating Medical Errors: GenAI and Erroneous Medical References,” produced by the esteemed university with a reputation for shaking the AI cucarachas and singing loudly “Ai, Ai, Yi.” The write up is an output of the non-plagiarizing professionals in the Human Centered Artificial Intelligence unit.

The researchers’ report states:

…Large language models used widely for medical assessments cannot back up claims.

Here’s what the HAI blog post states:

we develop an approach to verify how well LLMs are able to cite medical references and whether these references actually support the claims generated by the models. The short answer: poorly. For the most advanced model (GPT-4 with retrieval augmented generation), 30% of individual statements are unsupported and nearly half of its responses are not fully supported.

Okay, poorly. The disconnect is that the output sounds good, but the information is distorted, off base, or possibly inappropriate.

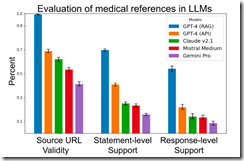

What I found interesting is a stack ranking of widely used AI “systems.” Here’s the chart from the HAI article:

The least “poor” are the Sam AI-Man systems. In the middle is the Anthropic outfit. Bringing up the rear is the French “small” LLM Mistral system. And guess which system is dead last in this Stanford report?

Give up?

The Google. And not just the Google. The laggard is the Gemini system which was Bard, a smart software which rolled out after the Softies caught the Google by surprise about 14 months ago. Last in URL validity, last in statement level support, and last in response level support.

The good news is that most research studies are non reproducible or, like the former president of Stanford’s work, fabricated. As a result, I think an art history major working in Google’s PR confection machine will bat these assertions away like annoying flies in Canberra, Australia.

But last from researchers at the estimable institution where Google, Snorkel and other wonderful services were invented? That’s a surprise like the medical information which might have unexpected consequences for Aunt Mille or Uncle Fred.

Stephen E Arnold, February 29, 2024