AI and Stupid Users: A Glimpse of What Is to Come

March 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

When smart software does not deliver, who is responsible? I don’t have a dog in the AI fight. I am thinking about deployment of smart software in professional environments. When the outputs are wonky or do not deliver the bang of a competing system, what is the customer supposed to do? Is the vendor responsible? Is the customer responsible? Is the person who tried to validate the outputs guilty of putting a finger on the scale of a system whose developers cannot explain exactly how an output was determined? Viewed from one angle, this is the Achilles’ heel of artificial intelligence. Viewed from another angle, determining responsibility is an issue which, in my opinion, will be decided by legal processes. In the meantime, a system that does not work can have significant consequences. How about those automated systems on aircraft which dive suddenly or vessels which can jam a ship channel?

I read a write up which provides a peek at what large outfits pushing smart software will do when challenged about quality, accuracy, or other subjective factors related to AI-imbued systems. Let’s take a quick look at “Customers Complain That Copilot Isn’t As Good as ChatGPT, Microsoft Blames Misunderstanding and Misuse.”

The main idea in the write up strikes me as:

Microsoft is doing absolutely everything it can to force people into using its Copilot AI tools, whether they want to or not. According to a new report, several customers have reported a problem: it doesn’t perform as well as ChatGPT. But Microsoft believes the issue lies with people who aren’t using Copilot correctly or don’t understand the differences between the two products.

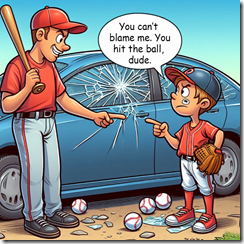

Yep, the user is the problem. I can imagine the adjudicator (illustrated as a mother) listening to a large company’s sales professional and a professional certified developer arguing about how the customer went off the rails. Is the original programmer the problem? Is the new manager in charge of AI responsible? Is it the user or users?

Illustration by MSFT Copilot. Good enough, MSFT.

The write up continues:

One complaint that has repeatedly been raised by customers is that Copilot doesn’t compare to ChatGPT. Microsoft says this is because customers don’t understand the differences between the two products: Copilot for Microsoft 365 is built on the Azure OpenAI model, combining OpenAI’s large language models with user data in the Microsoft Graph and the Microsoft 365 apps. Microsoft says this means its tools have more restrictions than ChatGPT, including only temporarily accessing internal data before deleting it after each query.

Here’s another snippet from the cited article:

In addition to blaming customers’ apparent ignorance, Microsoft employees say many users are just bad at writing prompts. “If you don’t ask the right question, it will still do its best to give you the right answer and it can assume things,” one worker said. “It’s a copilot, not an autopilot. You have to work with it,” they added, which sounds like a slogan Microsoft should adopt in its marketing for Copilot. The employee added that Microsoft has hired partner BrainStorm, which offers training for Microsoft 365, to help create instructional videos to help customers create better Copilot prompts.

I will be interested in watching how these “blame games” unfold.

Stephen E Arnold, March 29, 2024

How to Fool a Dinobaby Online

March 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Marketers take note. Forget about gaming the soon-to-be-on-life-support Google Web search. Embrace fakery. And who, you may ask, will teach me? The answer is The Daily Beast. To begin your life-changing journey, navigate to “Facebook Is Filled With AI-Generated Garbage—and Older Adults Are Being Tricked.”

Two government regulators wonder where the deepfakes have gone. Thanks, MSFT Copilot. Keep on updating, please.

The write up explains:

So far, the few experiments to analyze seniors’ AI perception seem to align with the Facebook phenomenon…. The team found that the older participants were more likely to believe that AI-generated images were made by humans.

Okay, that’s step one: Identify your target market.

What’s next? The write up points out:

scammers have wielded increasingly sophisticated generative AI tools to go after older adults. They can use deepfake audio and images sourced from social media to pretend to be a grandchild calling from jail for bail money, or even falsify a relative’s appearance on a video call.

That’s step two: Weave in a family or social tug on the heart strings.

Then what? The article helpfully notes:

As of last week, there are more than 50 bills across 30 states aimed to clamp down on deepfake risks. And since the beginning of 2024, Congress has introduced a flurry of bills to address deepfakes.

Yep, the flag has been dropped. The race with few or no rules is underway. But what about government rules and regulations? Yeah, those will be chugging around after the race cars have disappeared from view.

Thanks for the guidelines.

Stephen E Arnold, March 29, 2024

The Many Faces of Zuckbook

March 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

As evidenced by his business decisions, Mark Zuckerberg seems to be a complicated fellow. For example, a couple of recent articles illustrate this contrast: On one hand is his commitment to support open source software, an apparently benevolent position. On the other, Meta is once again in the crosshairs of EU privacy advocates for what they insist is its disregard for the law.

First, we turn to a section of VentureBeat’s piece, “Inside Meta’s AI Strategy: Zuckerberg Stresses Compute, Open Source, and Training Data.” In it, reporter Sharon Goldman shares highlights from Meta’s Q4 2023 earnings call. She emphasizes Zuckerberg’s continued commitment to open source software, specifically the AI model Llama 3 and the PyTorch framework. He touts these products as keys to “innovation across the industry.” Sounds great. But he also states:

“Efficiency improvements and lowering the compute costs also benefit everyone including us. Second, open source software often becomes an industry standard, and when companies standardize on building with our stack, that then becomes easier to integrate new innovations into our products.”

Ah, there it is.

Our next item was apparently meant to be sneaky, but who did Meta think it was fooling? The Register reports, “Meta’s Pay-or-Consent Model Hides ‘Massive Illegal Data Processing Ops’: Lawsuit.” Meta is attempting to “comply” with the EU’s privacy regulations by making users pay for the privacy those regulations are supposed to guarantee. That is not what regulators had in mind. We learn:

“Those of us with aunties on FB or friends on Instagram were asked to say yes to data processing for the purpose of advertising – to ‘choose to continue to use Facebook and Instagram with ads’ – or to pay up for a ‘subscription service with no ads on Facebook and Instagram.’ Meta, of course, made the changes in an attempt to comply with EU law. But privacy rights folks weren’t happy about it from the get-go, with privacy advocacy group noyb (None Of Your Business), for example, sarcastically claiming Meta was proposing you pay it in order to enjoy your fundamental rights under EU law. The group already challenged Meta’s move in November, arguing EU law requires consent for data processing to be given freely, rather than to be offered as an alternative to a fee. Noyb also filed a lawsuit in January this year in which it objected to the inability of users to ‘freely’ withdraw data processing consent they’d already given to Facebook or Instagram.”

And now eight members of the European Consumer Organisation (BEUC) have filed new complaints, insisting Meta’s pay-or-consent tactic violates the EU’s General Data Protection Regulation (GDPR). While that may seem obvious to some, Meta insists it is in compliance with the law. Because of course it does.

Cynthia Murrell, March 29, 2024

Who Is Responsible for Security Problems? Guess, Please

March 28, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In my opinion, “Zero-Days Exploited in the Wild Jumped 50% in 2023, Fueled by Spyware Vendors” is a semi-sophisticated chunk of content marketing and an example of information shaping. The source of the “report” is Google. The article appears in the publication of a company that was backed by Google and In-Q-Tel. The company is named “Recorded Future” and appears to be owned in whole or in part by a financial concern. In a separate transaction, Google purchased a cyber security outfit called Mandiant which provides services to government and commercial clients. This is an interesting collection of organizations and each group’s staff of technical professionals.

The young players are arguing about whose shoulders will carry the burden of the broken window. The batter points to the fielder. The fielder points to the batter. Watching are the coaches and team mates. Everyone, it seems, is responsible. So who will the automobile owner hold responsible? That’s a job for the lawyer retained by the entity with the deepest pockets and an unfettered communications channel. Nice work, MSFT Copilot. Is this scenario one with which you are familiar?

The article contains what seems to me quite shocking information; that is, companies providing specialized services to government agencies like law enforcement and intelligence entities are compromising the security of mobile phones. What’s interesting is that Google’s Android software is one of the more widely used “enablers” of what is now a ubiquitous computing device.

I noted this passage:

Commercial surveillance vendors (CSVs) were the leading culprit behind browser and mobile device exploitation, with Google attributing 75% of known zero-day exploits targeting Google products as well as Android ecosystem devices in 2023 (13 of 17 vulnerabilities). [Emphasis added. Editor.]

Why do I find the article intriguing?

- This “revelatory” write up can be interpreted to mean that spyware vendors have to be put in some type of quarantine, possibly similar to those odd boxes in airports where people who smoke can partake of a potentially harmful habit. In the special “room,” perhaps these folks can be monitored?

- The number of exploits parallels the massive number of security breaches created by widely used laptop, desktop, and server software systems. Bad actors have been attacking for many years, and now the sophistication and volume of cyber attacks seem to be increasing. Every few days cyber security vendors alert me to a new threat; for example, entering hotel rooms with Unsaflok. It seems that security problems are endemic.

- The “fix” or “remedial” steps involve users, government agencies, and industry groups. I interpret the argument as suggesting that companies developing operating systems need help and possibly cannot be responsible for these security problems.

The article can be read as a summary of recent developments in the specialized software sector and its careless handling of its technology. However, I think the article is suggesting that the companies building and enabling mobile computing are just victimized by bad actors, lousy regulations, and sloppy government behaviors.

Maybe? I believe I will tilt toward the content marketing purpose of the write up. The argument “Hey, it’s not us” is not convincing me. I think it will complement other articles that blur responsibility the way faces are blurred in some videos.

Stephen E Arnold, March 28, 2024

My Way or the Highway, Humanoid

March 28, 2024

This essay is the work of a dumb dinobaby. No smart software required.

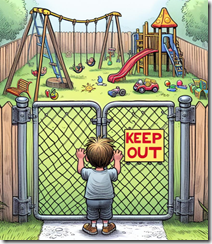

Curious how “nice” people achieve success? “Playground Bullies Do Prosper – And Go On to Earn More in Middle Age” may have an answer. The write up says:

Children who displayed aggressive behavior at school, such as bullying or temper outbursts, are likely to earn more money in middle age, according to a five-decade study that upends the maxim that bullies do not prosper.

If you want a tip for career success, I interpret the write up’s information to mean: Start young. Also, start small. The Jake Paul approach to making news is to fight the ageing Mike Tyson. Is that for you? I know I would not start small by irritating someone who walks with a cane. But, to each his or her own. If there is a small child selling Girl Scout Cookies, one might sharpen his or her leadership skills by knocking the cookie box to the ground and stomping on it. The modest demonstration of power can then be followed with the statement, “Those cookies contain harmful substances. You should be ashamed.” Then as your skills become more fluid and automatic, move up. I suggest testing one’s bullying expertise on a local branch of a street gang involved in possibly illegal activities.

Thanks MSFT Copilot. I wonder if you used sophisticated techniques when explaining to OpenAI that you were hedging your bets.

The write up quotes an expert as saying:

“We found that those children who teachers felt had problems with attention, peer relationships and emotional instability did end up earning less in the future, as we expected, but we were surprised to find a strong link between aggressive behavior at school and higher earnings in later life,” said Prof Emilia Del Bono, one of the study’s authors.

A bully might respond to this professor and say, “What are you going to do about it?” One response is, “You will earn more, young student.” The write up reports:

Many successful people have had problems of various kinds at school, from Winston Churchill, who was taken out of his primary school, to those who were expelled or suspended.

Will nice guys who are not bullies become the leaders of the post-Covid world? The article quotes another expert as saying:

“We’re also seeing a generational shift where younger generations expect to have a culture of belonging and being treated with fairness, respect and kindness.”

Sounds promising. Has anyone told the companies terminating thousands of workers? What about outfits like IBM which are dumping humans for smart software? Yep, progress just like that made at Google in the last couple of years.

Stephen E Arnold, March 28, 2024

AI and Jobs: Under Estimating Perhaps?

March 28, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I am interested in the impact of smart software on jobs. I spotted “1.5M UK Jobs Now at Risk from AI, Report Finds.” But the snappier assertion appears in the subtitle to the write up:

The number could rise to 7.9M in the future

The UK has about 68 million people (maybe more, maybe fewer, but close enough). The estimate of 7.9 million job losses translates to nearly eight million people out of work, more than one in nine UK residents. Now, these types of “future impact” estimates are diaphanous. But the message seems clear. Despite the nascent stage of smart software’s development, the number one use may be dumping humans and learning to love software. Will the software make today’s systems work more efficiently? In my experience, computerizing processes does very little to improve the outputs. Some tasks are completed quickly. However, get the process wrong, and one has a darned interesting project for a blue-chip consulting firm.

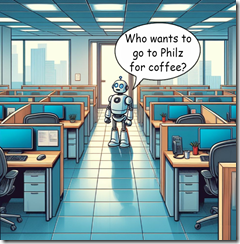

The smart software is alone in an empty office building. Does the smart software look lonely or unhappy? Thanks, MSFT Copilot. Good enough illustration.

The write up notes:

Back-office, entry-level, and part-time jobs are the ones mostly exposed, with employees on medium and low wages being at the greatest risk.

If this statement is accurate, life will be exciting for parents whose progeny camp out in the family room or who turn to other, possibly less socially acceptable, methods of generating cash. Crime comes to my mind, but you may see volunteers working to pick up trash in lovely Plymouth or Blackpool.

The write up notes:

Experts have argued that AI can be a force for good in the labor market — as long as it goes hand in hand with rebuilding workforce skills.

Academics, wizards, elected officials, and consultants can find the silver lining in the cloud that spawned the tornado.

Several observations, if I may:

- The acceleration of tools to add AI to processes is evident in the continuous stream of “new” projects appearing in GitHub, Product Watch, and AI newsletters. The availability of tools means that applications will flow into job-reducing opportunities; that is, outfits which will pay cash to cut payroll.

- AI functions are now being embedded in mobile devices. Smart software will be a crutch and most users will not realize that their own skills are being transformed. Welcoming AI is an important first step in using AI to replace an expensive, unreliable humanoid.

- The floundering of government and non-governmental organizations is amusing to watch. Each day documents about managing the AI “risk” appear in my feedreader. Yet zero meaningful action is taking place as certain large companies work to consolidate their control of essential and mostly proprietary technologies and know how.

Net net: The job loss estimate is interesting. My hunch is that it underestimates the impact of smart software on traditional work. This is good for smart software and possibly not so good for humanoids.

Stephen E Arnold, March 28, 2024

Backpressure: A Bit of a Problem in Enterprise Search in 2024

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have noticed numerous references to search and retrieval in the last few months. Most of these articles and podcasts focus on making an organization’s data accessible. That’s the same old story told since the days of STAIRS III and other dinobaby artifacts. The gist of the flow of search-related articles is that information is locked up or silo-ized. Using a combination of “artificial intelligence,” “open source” software, and powerful computing resources — problem solved.

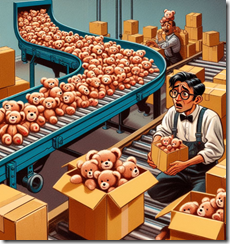

A modern enterprise search content processing system struggles to keep pace with the changes to already processed content (the deltas) and the flow of new content in a wide range of file types and formats. Thanks, MSFT Copilot. You have learned from your experience with Fast Search & Transfer file indexing, it seems.

The 2019 essay “Backpressure Explained — The Resisted Flow of Data Through Software” is pertinent in 2024. The essay, written by Jay Phelps, states:

The purpose of software is to take input data and turn it into some desired output data. That output data might be JSON from an API, it might be HTML for a webpage, or the pixels displayed on your monitor. Backpressure is when the progress of turning that input to output is resisted in some way. In most cases that resistance is computational speed — trouble computing the output as fast as the input comes in — so that’s by far the easiest way to look at it.

Mr. Phelps identifies several types of backpressure. These are:

- More info to be processed than a system can handle

- Reading and writing file speeds are not up to the demand for reading and writing

- Communication “pipes” between and among servers are too small, slow, or unstable

- A group of hardware and software components cannot move data where it is needed fast enough.

I have simplified his more elegantly expressed points. Please, consult the original 2019 document for the information I have hip hopped over.
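Mr. Phelps’ first category, more input than a system can handle, is the one an enterprise search crawler hits when it discovers content faster than an indexer can parse it. One common response is a bounded buffer that makes the fast producer wait. The Python below is a minimal sketch under that assumption; the `crawler` and `indexer` functions and the `max_pending` limit are hypothetical stand-ins, not the internals of any product mentioned here. Other common strategies, such as dropping or sampling input, trade completeness for speed.

```python
import queue
import threading
import time

_DONE = object()  # sentinel marking the end of the input stream


def run_pipeline(documents, max_pending=4, index_delay=0.001):
    """Feed documents from a fast producer to a slow consumer via a bounded queue.

    Hypothetical sketch: 'crawler' and 'indexer' stand in for real components.
    Returns the indexed documents and the largest backlog the producer saw.
    """
    pending = queue.Queue(maxsize=max_pending)
    indexed = []
    peak = 0

    def crawler():
        nonlocal peak
        for doc in documents:
            # put() blocks while the queue is full, so the crawler is held to
            # the indexer's pace instead of piling up an unbounded backlog.
            pending.put(doc)
            peak = max(peak, pending.qsize())
        pending.put(_DONE)

    def indexer():
        while True:
            doc = pending.get()
            if doc is _DONE:
                break
            time.sleep(index_delay)  # stand-in for slow parsing and indexing
            indexed.append(doc)

    workers = [threading.Thread(target=crawler), threading.Thread(target=indexer)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return indexed, peak
```

The trade-off is plain: nothing is lost, but a crawl of a large repository simply takes longer, and the index lags the source content while the backlog drains.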

My point is that in the chatter about enterprise search and retrieval, there are a number of situations (use cases to those non-dinobabies) which create some interesting issues. Let me highlight these and then wrap up this short essay.

In an enterprise, the following situations exist and are often ignored or dismissed as irrelevant. When people pooh pooh my observations, it is clear to me that these people have [a] never been subject to a legal discovery process associated with enterprise search fraud and [b] are entitled whiz kids who don’t do too much in the quite dirty, messy, “real” world. (I do like the variety in T shirts and lumberjack shirts, however.)

First, in an enterprise, content changes. These “deltas” are a giant problem. None of the systems I have examined, tested, installed, or advised on has a procedure to identify, in anything close to real time, a change made to a PowerPoint which was presented to a client and then converted to an email confirming a deal, price, or technical feature. In fact, no one may know until an investigator examines the president’s laptop and discovers the “forgotten” information. Even more exciting is when the opposing legal team, reviewing a laptop dump as part of a discovery process, “finds” the sequence of messages and connects the dots. Exciting, right? But “deltas” pose another problem. These modified content objects proliferate like gerbils. One can talk about information governance, but it is just that: talk, meaningless jabber.
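One way to spot deltas, assuming a connector can re-read every document it previously indexed, is to store a content fingerprint and compare it on each pass. The sketch below uses SHA-256 from Python’s standard `hashlib`; the `find_deltas` function and the document names are hypothetical. Note what it cannot do: a PowerPoint that turns into an email shows up as an unrelated “added” object, and the comparison runs on a rescan schedule, not in real time.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """SHA-256 digest of a document's bytes, stored in the index at crawl time."""
    return hashlib.sha256(content).hexdigest()


def find_deltas(index: dict, current: dict) -> dict:
    """Compare what the index last saw with what the repository holds now.

    index:   hypothetical mapping of doc_id -> fingerprint from the last crawl
    current: mapping of doc_id -> raw bytes from the current rescan
    """
    added, changed = [], []
    for doc_id, content in current.items():
        seen = index.get(doc_id)
        if seen is None:
            added.append(doc_id)  # never indexed before
        elif seen != fingerprint(content):
            changed.append(doc_id)  # same id, different bytes
    deleted = [doc_id for doc_id in index if doc_id not in current]
    return {"added": sorted(added), "changed": sorted(changed), "deleted": sorted(deleted)}
```

For example, if a price in a slide deck changes and a confirming email appears, a rescan reports the deck as changed and the email as added, with nothing linking the two.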

Second, the content which an employee needs to answer a business question in a timely manner can reside on an employee’s laptop or mobile phone, in a digital notebook, in a Vimeo video or one of those nifty “private” YouTube videos, behind the locked doors and specialized security systems loved by some pharma companies’ research units, in a Word document written in something other than English, etc. Now suppose that content is changed. The enterprise search fast talkers ignore the need to identify and index these documents with metadata that pinpoints the time of the change and who made it. Is this important? Some contract issues require this level of information access. Who asks for this stuff? How about a COTR (contracting officer’s technical representative) for a billion-dollar government contract?
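Answering the contract question (what did this document say on a given date, and who changed it?) requires keeping every version with its timestamp and author rather than overwriting a single index entry. A minimal sketch of such a per-document change log follows; `Version`, `VersionHistory`, and `as_of` are hypothetical names, and the sketch assumes the source system reliably reports who made each edit, which is often the hard part.

```python
import bisect
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class Version:
    modified_at: datetime
    modified_by: str  # assumes the source system exposes the editor's identity
    fingerprint: str  # e.g., a content hash of this version


@dataclass
class VersionHistory:
    """Hypothetical per-document change log kept alongside a search index."""
    versions: List[Version] = field(default_factory=list)

    def record(self, version: Version) -> None:
        # Insert in time order, even if change events arrive out of order.
        times = [v.modified_at for v in self.versions]
        self.versions.insert(bisect.bisect_right(times, version.modified_at), version)

    def as_of(self, when: datetime) -> Optional[Version]:
        """Return the version in force at `when`, or None if none existed yet."""
        times = [v.modified_at for v in self.versions]
        i = bisect.bisect_right(times, when)
        return self.versions[i - 1] if i else None
```

Recording versions in time order lets a point-in-time question be answered with a binary search rather than a scan, though the real cost lies in capturing the edits at all.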

Third, I have heard and read that modern enterprise search systems “use,” “apply,” or “operate within” industry standard authentication systems. Sure they do, within very narrowly defined situations. If the authorization system does not work, then quite problematic things happen. Examples range from an employee who fails to find the information needed and makes a really bad decision to an employee who goes on an Easter egg hunt which may or may not work; if the egg found is good enough, then that’s used. What happens? Bad things can happen. Have you ridden in an old Pinto? Access control is a tough problem, and it costs money to solve. Enterprise search solutions, even the whiz bang cloud-centric distributed systems, implement something, which is often not the “right” thing.
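Search systems that honor source permissions typically filter (“trim”) results against access control lists before showing them. The sketch below assumes each indexed hit carries the groups allowed to see it and that a directory lookup, here the hypothetical `resolve_groups` callable, returns the user’s groups. It fails closed: if the lookup breaks, the user sees nothing, which is exactly the “employee cannot find the information” outcome described above. Failing open would instead leak documents. Either way, ACLs copied at index time go stale, which is the delta problem again.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hit:
    doc_id: str
    allowed_groups: frozenset  # ACL copied from the source system at index time


class AuthorizationUnavailable(Exception):
    """Hypothetical error raised when a user's groups cannot be resolved."""


def trim_results(hits, user, resolve_groups):
    """Return only the hits the user may see; on lookup failure, return none.

    resolve_groups is an assumed callable: user -> frozenset of group names,
    raising AuthorizationUnavailable when the directory cannot answer.
    """
    try:
        groups = resolve_groups(user)
    except AuthorizationUnavailable:
        return []  # fail closed: hide everything rather than risk a leak
    # Keep a hit if the user belongs to at least one allowed group.
    return [h for h in hits if h.allowed_groups & groups]
```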

Fourth, and I am going to stop here, there is the problem of end-to-end encrypted messaging systems. If you think employees do not use these, I suggest you do a bit of Easter egg hunting. What about the content in those systems? You can tell me, “Our company does not use these.” I say, “Fine. I am a dinobaby, and I don’t have time to talk with you because you are so much more informed than I am.”

Why did I romp through this rather unpleasant issue in enterprise search and retrieval? The answer is, “Enterprise search remains a problematic concept.” I believe there is some litigation underway about how the problem of search can morph into the fantasy of a huge business because “we have a solution.”

Sorry. Not yet. Marketing and closing deals are different from solving findability issues in an enterprise.

Stephen E Arnold, March 27, 2024

A Single, Glittering Google Gem for 27 March 2024

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

So many choices. But one gem outshines the others. Google’s search generative experience is generating publicity. The old chestnut may be true. Any publicity is good publicity. I would add a footnote. Any publicity about Google’s flawed smart software is probably good for Microsoft and other AI competitors. Google definitely looks as though it has some behaviors that are — how shall I phrase it? — questionable. No, maybe, ill-considered. No, let’s go with bungling. That word has a nice ring to it. Bungling.

I learned about this gem in “Google’s New AI Search Results Promotes Sites Pushing Malware, Scams.” The write up asserts:

Google’s new AI-powered ‘Search Generative Experience’ algorithms recommend scam sites that redirect visitors to unwanted Chrome extensions, fake iPhone giveaways, browser spam subscriptions, and tech support scams.

The technique which gets the user from the quantumly supreme Google to the bad actor goodies is redirects. Some of these sites also exploit notification functions to pump even more inducements toward the befuddled user. (See, bungling and befuddled. Alliteration.)

Why do users fall for these bad actor gift traps? It seems that Google SGE conversational recommendations sound so darned wonderful that Google users just believe the GOOG cares about the information it presents to those who “trust” the company.

The write up points out that the DeepMinded Google provided this information about the bumbling SGE:

"We continue to update our advanced spam-fighting systems to keep spam out of Search, and we utilize these anti-spam protections to safeguard SGE," Google told BleepingComputer. "We’ve taken action under our policies to remove the examples shared, which were showing up for uncommon queries."

Isn’t that reassuring? I wonder if the anecdote about this most recent demonstration of the Google’s wizardry will become part of the Sundar & Prabhakar Comedy Act?

This is a gem. It combines Google’s management process, word salad frippery, and smart software into one delightful bouquet. There you have it: Bungling, befuddled, bumbling, and bouquet. I am adding blundering. I do like butterfingered, however.

Stephen E Arnold, March 27, 2024

IBM and AI: A Spur to Other Ageing Companies?

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I love IBM. Well, I used to. Years ago I had three IBM PC 704 servers. Each was equipped with its expansion SCSI storage device. My love disappeared as we worked daily to keep the estimable ServeRAID software in tip top shape. For those unfamiliar with the thrill of ServeRAID, “tip top” means preventing the outstanding code from trashing data.

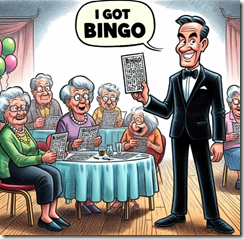

IBM is a winner. Thanks, MSFT Copilot. How are those server vulnerabilities today?

I was, therefore, not surprised to read “IBM Stock Nears an All-Time High—And It May Have Something to Do with its CEO Replacing As Many Workers with AI As Possible.” Instead of creating the first and best example of dinobaby substitution, Big Blue is now using smart software to reduce headcount. The write up says:

[IBM] used AI to reduce the number of employees working on relatively manual HR-related work to about 50 from 700 previously, which allowed them to focus on other things, he [Big Dog at IBM] wrote in an April commentary piece for Fortune. And in its January fourth quarter earnings, the company said it would cut costs in 2024 by $3 billion, up from $2 billion previously, in part by laying off thousands of workers—some of which it later chalked up to AI influence.

Is this development important? Yep. Here are the reasons:

- Despite its interesting track record in smart software, IBM has figured out it can add sizzle to the ageing giant by using smart software to reduce costs. Forget that cancer curing stuff. Go with straight humanoid replacement.

- The company has significant influence. Some Gen Y and Gen Z wizards don’t think about IBM. That’s fine, but banks, government agencies, Fortune 1000 firms, and family fund management firms do. What IBM does influences these bright entities’ thinking.

- The targeted workers are what one might call “expendable.” That’s a great way to motivate some of Big Blue’s war horses.

Net net: The future of AI is coming into focus for some outfits who may have a touch of arthritis.

Stephen E Arnold, March 27, 2024

Commercial Open Source: Fantastic Pipe Dream or Revenue Pipe Line?

March 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Open source is a term which strikes me as au courant. Artificial intelligence software is often described as “open source.” The idea has a bit of “do good” mixed with the idea that commercial software puts customers in handcuffs. (I think I hear Kumbaya playing faintly in the background.) Is it possible to blend the idea of free and open software with the principles of commercial software lock in? Notable open source entrepreneurs have become difficult to differentiate from a run-of-the-mill technology company. Examples include Red Hat, Elastic, and OpenAI. Ooops. Sorry. OpenAI is a different type of company. I think.

Will open source software, particularly open source AI components, end up like this private playground? Thanks, MSFT Copilot. You are into open source, aren’t you? I hope your commitment is stronger than for server and cloud security.

I had these open source thoughts when I read “AI and Data Infrastructure Drives Demand for Open Source Startups.” The source of the information is Runa Capital, now located in Luxembourg. The firm publishes a report called the Runa Open Source Start Up Index, and it is a “rosy” document. The point of the article is that Runa sees open source as a financial opportunity. You can start your exploration of the tables and charts at this link on the Runa Capital Web site.

I want to focus on some information tucked into the article, just not presented in bold face or with a snappy chart. Here’s the passage I noted:

Defining what constitutes “open source” has its own inherent challenges too, as there is a spectrum of how “open source” a startup is — some are more akin to “open core,” where most of their major features are locked behind a premium paywall, and some have licenses which are more restrictive than others. So for this, the curators at Runa decided that the startup must simply have a product that is “reasonably connected to its open-source repositories,” which obviously involves a degree of subjectivity when deciding which ones make the cut.

The word “reasonably” invokes an image of lawyers negotiating on behalf of their clients. Nothing is quite so far from the kumbaya of the “real” open source software initiative as lawyers. Just look at the licenses for open source software.

I also noted this statement:

Thus, according to Runa’s methodology, it uses what it calls the “commercial perception of open-source” for its report, rather than the actual license the company attaches to its project.

What is “open source”? My hunch is that it is whatever the lawyers and courts conclude.

Why is this important?

The talk about “open source” is relevant to the “next big thing” in technology. And what is that? ANSWER: A fresh set of money making plays.

I know that there are true believers in open source. I wish them financial and kumbaya-type success.

My take is different: Open source, as the term is used today, is one of the phrases repurposed to breathe life into what some critics call a techno-feudal world. I don’t have a dog in the race. I don’t want a dog in any race. I am a dinobaby. I find amusement in how language becomes the Teflon on which money (one hopes) glides effortlessly.

And the kumbaya? Hmm.

Stephen E Arnold, March 26, 2024