AI Hermeneutics: The Fire Fights of Interpretation Flame

March 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My hunch is that not too many of the thumb-typing, TikTok generation know what hermeneutics means. Furthermore, like most of their parents, these future masters of the phone-iverse don’t care. “Let software think for me” would make a nifty T shirt slogan at a technology conference.

This morning (March 12, 2024) I read three quite different write ups. Let me highlight each and then link the content of those documents to the problem of interpretation of religious texts.

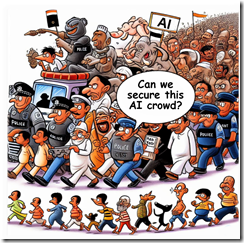

Thanks, MSFT Copilot. I am confident your security team is up to this task.

The first write up is a news story called “Elon Musk’s AI to Open Source Grok This Week.” The main point for me is that Mr. Musk will put the label “open source” on his Grok artificial intelligence software. The write up includes an interesting quote; to wit:

Musk further adds that the whole idea of him founding OpenAI was about open sourcing AI. He highlighted his discussion with Larry Page, the former CEO of Google, who was Musk’s friend then. “I sat in his house and talked about AI safety, and Larry did not care about AI safety at all.”

The implication is that Mr. Musk does care about safety. Okay, let’s accept that.

The second story is an arXiv paper called “Stealing Part of a Production Language Model.” The authors are nine Googlers, two ETH wizards, one University of Washington professor, one OpenAI researcher, and one McGill University smart software luminary. In short, the big outfits are making clear that closed or open, software is rising to the task of revealing some of the inner workings of these “next big things.” The paper states:

We introduce the first model-stealing attack that extracts precise, nontrivial information from black-box production language models like OpenAI’s ChatGPT or Google’s PaLM-2…. For under $20 USD, our attack extracts the entire projection matrix of OpenAI’s ada and babbage language models.

The third item is “How Do Neural Networks Learn? A Mathematical Formula Explains How They Detect Relevant Patterns.” The main idea of this write up is that software can perform an X-ray type analysis of a black box and present some useful data about the inner workings of numerical recipes about which many AI “experts” feign total ignorance.

Several observations:

- Open source software is available to download largely without encumbrances. Good actors and bad actors can use this software and its components to let users put on a happy face or bedevil the world’s cyber security experts. Either way, smart software is out of the bag.

- In the event that someone or some organization has secrets buried in its software, those secrets can be exposed. Once the secret is known, the good actors and the bad actors can surf on that information.

- The notion of an attack surface for smart software now includes the numerical recipes and the model itself. Toss in the notion of data poisoning, and the notion of vulnerability must be recast from a specific attack to a much larger type of exploitation.

Net net: I assume the many committees, NGOs, and government entities discussing AI have considered these points and incorporated these articles into informed policies. In the meantime, the AI parade continues to attract participants. Who has time to fool around with the hermeneutics of smart software?

Stephen E Arnold, March 12, 2024