Google and Its Smart Software: The Emotion Directed Use Case

July 31, 2024

This essay is the work of a dumb humanoid. No smart software required.


How different are the Googlers from those smack in the middle of a normal curve? Some evidence is provided to answer this question in the Ars Technica article “Outsourcing Emotion: The Horror of Google’s “Dear Sydney” AI Ad.” I did not see the advertisement. The volume of messages flooding through my channels each day has allowed me to develop what I call “ad blindness.” I don’t notice them; I don’t watch them; and I don’t care about the crazy content presentation which I struggle to understand.

A young person has to write a sympathy card. The smart software is encouraging the use of the word “feel.” This is a word foreign to the individual who wants to work for big tech someday. Thanks, MSFT Copilot. Do you have your hands full with security issues today?

Ars Technica watches TV and the Olympics. The write up reports:

In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone. “I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

What’s going on? The father wants to write something personal to his progeny. A Hallmark card may never be delivered from the US to France. The solution is an emessage. That makes sense. Essential services like delivering snail mail are like most major systems not working particularly well.

Ars Technica points out:

But I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

I find the article’s negative reaction to a Mad Ave-type of message play somewhat insensitive. Let’s look at this use of smart software from the point of view of a person who is at the right hand tail end of the normal distribution. The factors in this curve are compensation, cleverness as measured in a Google interview, and intelligence as determined by either what school a person attended, achievements when a person was in his or her teens, or solving one of the Courant Institute of Mathematical Sciences brain teasers. (These are shared at cocktail parties or over coffee. If you can’t answer, you pay the bill and never get invited back.)

Let’s run down the use of AI from this hypothetical right of loser viewpoint:

- What’s with this assumption that a Google-type person has experience with human interaction? Why not send a text even though your co-worker is at the next desk? Why waste time and brain cycles trying to emulate a Hallmark greeting card contractor’s phraseology? The use of AI is simply logical.

- Why criticize an alleged Googler or Googler-by-the-gig for using the company’s outstanding, quantumly supreme AI system? This outfit spends millions on running AI tests which allow the firm’s smart software to perform in an optimal manner in the messaging department. This is “eating the dog food one has prepared.” Think of it as quality testing.

- The AI system, running in the Google Cloud on Google technology, is faster than even a quantumly supreme Googler when it comes to generating feel-good platitudes. The technology works well. Evaluate this message in terms of the effectiveness of the messaging generated by Google leadership with regard to the Dr. Timnit Gebru matter. That is upper quartile performance, far beyond the dead center of the bell curve humanoids.

My view is that there is one positive from this use of smart software to message a partially-developed and not completely educated younger person. The Sundar & Prabhakar Comedy Act has been recycling jokes and bits for months. Some find them repetitive. I do not. I am fascinated by the recycling. The S&P Show has its fans just as Jack Benny does decades after his demise. But others want new material.

By golly, I think the Google ad showing Google’s smart software generating a parental note is a hoot and a great demo. Plus look at the PR the spot has generated.

What’s not to like? Not much if you are Googley. If you are not Googley, sorry. There’s not much that can be done except shove ads at you whenever you encounter a Google product or service. The ad illustrates the mental orientation of Google. Learn to love it. Nothing is going to alter the trajectory of the Google for the foreseeable future. Why not use Google’s smart software to write a sympathy note to a friend when his or her parent dies? Why not use Google to write a note to the dean of a college arguing that your child should be admitted? Why not let Google think for you? At least that decision would be intentional.

Stephen E Arnold, July 31, 2024


Spotting Machine-Generated Content: A Work in Progress

July 31, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


Some professionals want to figure out if a chunk of content is real, fabricated, or fake. In my experience, making that determination is difficult. For those who want to experiment with identifying weaponized, generated, or AI-assisted content, you may want to review the tools described in “AI Tools to Detect Disinformation – A Selection for Reporters and Fact-Checkers.” The article groups tools into categories. For example, there are utilities for text, images, video, and five bonus tools. There is a suggestion to address the bot problem. The write up is intended for “journalists,” a category which I find increasingly difficult to define.

The big question is, of course, do these systems work? I tried to test the tool from FactiSearch and the link 404ed. The service is available, but a bit of clicking is involved. I tried the Exorde tool and was greeted with a prompt to register for a free trial.

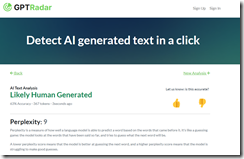

I plugged some machine-generated text produced with the You.com “Genius” LLM system into GPT Radar (not in the cited article’s list by the way). That system happily reported that the sample copy was written by a human.

The test content was not. I plugged in some text I wrote, and the system reported:

Three items in my own writing were identified as text written by a large language model. I don’t know whether to be flattered or horrified.

The bottom line is that systems designed to identify machine-generated content are a work in progress. My view is that as soon as a bright young spark rolls out a new detection system, the LLM output becomes better. So a cat-and-mouse game ensues.

Stephen E Arnold, July 31, 2024

No Llama 3 for EU

July 31, 2024

Frustrated with European regulators, Meta is ready to take its AI ball and go home. Axios reveals, “Scoop: Meta Won’t Offer Future Multimodal AI Models in EU.” Reporter Ina Fried writes:

“Meta will withhold its next multimodal AI model — and future ones — from customers in the European Union because of what it says is a lack of clarity from regulators there, Axios has learned. Why it matters: The move sets up a showdown between Meta and EU regulators and highlights a growing willingness among U.S. tech giants to withhold products from European customers. State of play: ’We will release a multimodal Llama model over the coming months, but not in the EU due to the unpredictable nature of the European regulatory environment,’ Meta said in a statement to Axios.”

So there. And Meta is not the only firm petulant in the face of privacy regulations. Apple recently made a similar declaration. So governments may not be able to regulate AI, but AI outfits can try to regulate governments. Seems legit. The EU’s stance is that Llama 3 may not feed on European users’ Facebook and Instagram posts. Does Meta hope FOMO will make the EU back down? We learn:

“Meta plans to incorporate the new multimodal models, which are able to reason across video, audio, images and text, in a wide range of products, including smartphones and its Meta Ray-Ban smart glasses. Meta says its decision also means that European companies will not be able to use the multimodal models even though they are being released under an open license. It could also prevent companies outside of the EU from offering products and services in Europe that make use of the new multimodal models. The company is also planning to release a larger, text-only version of its Llama 3 model soon. That will be made available for customers and companies in the EU, Meta said.”

The company insists EU user data is crucial to be sure its European products accurately reflect the region’s terminology and culture. Sure. That is almost a plausible excuse.

Cynthia Murrell, July 31, 2024

Which Outfit Will Win? The Google or Some Bunch of Busy Bodies

July 30, 2024

This essay is the work of a dumb humanoid. No smart software required.


It may not be the shoot out at the OK Corral, but the dust up is likely to be a fan favorite. It is possible that some crypto outfit will find a way to issue an NFT and host pay-per-view broadcasts of the committee meetings, lawyer news conferences, and pundits recycling press releases. On the other hand, maybe the shoot out is a Hollywood deal. Everyone knows who is going to win before the real action begins.

“Third Party Cookies Have Got to Go” reports:

After reading Google’s announcement that they no longer plan to deprecate third-party cookies, we wanted to make our position clear. We have updated our TAG finding Third-party cookies must be removed to spell out our concerns.

A great debate is underway. Who or what wins? Experience suggests that money has an advantage in this type of disagreement. Thanks, MSFT. Good enough.

Who is making this draconian statement? A government regulator? A big-time legal eagle representing an NGO? Someone running for president of the United States? A member of the CCP? Nope, the World Wide Web Consortium or W3C. This group was set up by Tim Berners-Lee, who wanted to find and link documents at CERN. The outfit wants to cook up Web standards, much to the delight of online advertising interests and certain organizations monitoring Web traffic. Rules allow crafting ways to circumvent their intent and enable the magical world of the modern Internet. How is that working out? I thought the big technology companies set standards like no “soft 404s” or “sorry, Chrome created a problem. We are really, really sorry.”

The write up continues:

We aren’t the only ones who are worried. The updated RFC that defines cookies says that third-party cookies have “inherent privacy issues” and that therefore web “resources cannot rely upon third-party cookies being treated consistently by user agents for the foreseeable future.” We agree. Furthermore, tracking and subsequent data collection and brokerage can support micro-targeting of political messages, which can have a detrimental impact on society, as identified by Privacy International and other organizations. Regulatory authorities, such as the UK’s Information Commissioner’s Office, have also called for the blocking of third-party cookies.

I understand, but the Google seems to be doing one of those “let’s just dump this loser” moves. Revenue is more important than the silly privacy thing. Users who want privacy should take control of their technology.

The W3C points out:

The unfortunate climb-down will also have secondary effects, as it is likely to delay cross-browser work on effective alternatives to third-party cookies. We fear it will have an overall detrimental impact on the cause of improving privacy on the web. We sincerely hope that Google reverses this decision and re-commits to a path towards removal of third-party cookies.

Now the big question: “Who is going to win this shoot out?”

Normal folks might compromise or test a number of options to determine which makes the most sense at a particularly interesting point in time. There is post-Covid weirdness, the threat of escalating armed conflict in what, six, 27, or 95 countries, and financial brittleness. That anti-fragile handwaving is not getting much traction in my opinion.

At one end of the corral are the sleek, technology wizards. These norm core folks have phasers, AI, and money. At the other end of the corral are the opponents who look like a random selection of Café de Paris customers. Place your bets.

Stephen E Arnold, July 30, 2024


One Legal Stab at CrowdStrike Liability

July 30, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


I read “CrowdStrike Will Be Liable for Damages in France, Based on the OVH Precedent.” OVH is a provider of hosting and what I call “enabling services” to organizations in France, Europe, and other countries. The write up focuses on a modest problem OVH experienced in 2021. A fire consumed four of OVH’s data centers. Needless to say the customers of one of the largest online services providers in Europe were not too happy for two reasons: Backups were not available and the affected organizations were knocked offline.

Two astronauts look down at earth from the soon to be decommissioned space station. The lights and power on earth just flicked off. Thanks, Microsoft Copilot. No security meetings today?

The article focuses on the French courts’ decision that OVH was liable for damages. A number of details about the legal logic appear in the write up. For those of you who still watch Perry Mason reruns on Sling, please, navigate to the cited article for the details. I boiled the OVH tale down to a single dot point from the excellent article:

The court ruled the OVH backup service was not operated to a reasonable standard and failed at its purpose.

This means that in France and probably the European Union those technology savvy CrowdStrike wizards will be writing checks. The firm’s lawyers will get big checks for a number of years. Then the falconers of cyber threats will be scratching out checks to the customers and probably some of the well-heeled downstream airport lounge sleepers, the families of patients who died because surgeries could not be performed, and a kettle of seething government agencies whose emergency call services were dead.

The write up concludes with this statement:

Customers operating in regulated industries like healthcare, finance, aerospace, transportation, are actually required to test and stage and track changes. CrowdStrike claims to have a dozen certifications and standards which require them to follow particular development practices and carry out various level of testing, but they clearly did not. The simple fact that CrowdStrike does not do any of that and actively refuses to, puts them in breach of compliance, which puts customers themselves in breach of compliance by using CrowdStrike. All together, there may be sufficient grounds to unilaterally terminate any CrowdStrike contracts for any customer who wishes to.

The key phrase is “in breach of compliance”. That’s going to be an interesting bit of lingo for lawyers involved in the dead Falcon affair to sort out.

Several observations:

- Will someone in the post-Falcon mess raise the question, “Could this be a recipe for a bad actor to emulate?” Could friends of one of the founders who has some ties to Russia be asked questions?

- What about that outstanding security of the Microsoft servers? How will the smart software outfit fixated on putting ads for a browser in an operating system respond? Those blue screens are not what I associate with my Apple Mini servers. I think our Linux boxes display a somewhat ominous black screen. Blue is who?

- Will this incident be shoved around until absolutely no one knows who signed off on the code modules which contributed to this somewhat interesting global event? My hunch is that it could be a person working as a contractor from a yurt somewhere northeast of Armenia. What’s your best guess?

Net net: It is definite that a cyber attack aimed at the heart of Microsoft’s software can create global outages. How many computer science students in Bulgaria are thinking about this issue? Will bad actors’ technology wizards rethink what can be done with a simple pushed update?

Stephen E Arnold, July 30, 2024

Let Go of My Throat: The Academic Journal Grip of Death

July 30, 2024

There are several problems with the current system of academic publishing, like fake research and citation feedback loops. Then there is the nasty structure of the system itself. Publishers hold the very institutions that produce good research over a financial barrel when it comes to accessing and furthering that research. Some academics insist it is high time to ditch the exploitative (and science-squelching) model. The Guardian vows, “Academic Journals Are a Lucrative Scam—and We’re Determined to Change That.” Writer and political science professor Arash Abizadeh explains:

“The commercial stranglehold on academic publishing is doing considerable damage to our intellectual and scientific culture. As disinformation and propaganda spread freely online, genuine research and scholarship remains gated and prohibitively expensive. For the past couple of years, I worked as an editor of Philosophy & Public Affairs, one of the leading journals in political philosophy. It was founded in 1972, and it has published research from renowned philosophers such as John Rawls, Judith Jarvis Thomson and Peter Singer. Many of the most influential ideas in our field, on topics from abortion and democracy to famine and colonialism, started out in the pages of this journal. But earlier this year, my co-editors and I and our editorial board decided we’d had enough, and resigned en masse. We were sick of the academic publishing racket and had decided to try something different. We wanted to launch a journal that would be truly open access, ensuring anyone could read our articles. This will be published by the Open Library of Humanities, a not-for-profit publisher funded by a consortium of libraries and other institutions.”

Abizadeh explores how academic publishing got to the point where opening up access amounts to a rebellion of sorts. See the write-up for more details, but basically: Publishers snapped up university-press journals, hiked prices, and forced libraries to buy in unwanted bundles. But what about those free, “open-access” articles? Most are not free so much as prepaid—by the authors themselves. Or, more often, their universities. True, or “diamond,” open access is the goal of Abizadeh and his colleagues. Free to write, free to read, and funded by universities, libraries, and other organizations. Will more editors join the effort to wriggle out of academic journals’ stranglehold? And how will publishing behemoths fight back?

Cynthia Murrell, July 30, 2024

AI Reduces Productivity: Quick Another Study Needed Now

July 29, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


At lunch one of those at my table said with confidence that OpenAI was going to lose billions in 2024. Another person said, “Meta has published an open source AI manifesto.” I said, “Please, pass the pepper.”

The AI marketing and PR generators are facing a new problem. More information about AI is giving me a headache. I want to read about the next big thing delivering Ford F-150s filled with currency to my door. Enough of this Debbie Downer talk.

Then I spotted this article in Forbes Magazine, the capitalist tool. “77% Of Employees Report AI Has Increased Workloads And Hampered Productivity, Study Finds.”

The write up should bring tears of joy to those who thought they would be replaced by one of the tech giants’ smart software concoctions. Human employees hallucinate too. But humans have a few notable downsides. First, they require care and feeding, vacations, educational benefits and/or constant retraining, and continuous injections of cash. Second, they get old and walk out the door with expertise when they retire or just quit. And, third, they protest and sometimes litigate. That means additional costs and maybe a financial penalty to the employer. Smart software, on the other hand, does not impose those costs. The work is okay, particularly for intense knowledge work like writing meaningless content for search engine optimization or flipping through thousands of pages of documents looking for a particular name or a factoid of perceived importance.

But this capitalist tool write up says:

Despite 96% of C-suite executives expecting AI to boost productivity, the study reveals that, 77% of employees using AI say it has added to their workload and created challenges in achieving the expected productivity gains. Not only is AI increasing the workloads of full-time employees, it’s hampering productivity and contributing to employee burnout.

Interesting. An Upwork wizard Kelly Monahan is quoted to provide a bit of context I assume:

“In order to reap the full productivity value of AI, leaders need to create an AI-enhanced work model,” Monahan continues. “This includes leveraging alternative talent pools that are AI-ready, co-creating measures of productivity with their workforces, and developing a deep understanding of and proficiency in implementing a skills-based approach to hiring and talent development. Only then will leaders be able to avoid the risk of losing critical workers and advance their innovation agenda.”

The phrase “full productivity value” is fascinating. There’s a productivity payoff somewhere amidst the zeros and ones in the digital Augean Stable. There must be a pony in there?

What’s the fix? Well, it is not AI because the un-productive or intentionally non-productive human who must figure out how to make smart software pirouette can get trained up in AI and embrace any AI consultant who shows up to explain the ropes.

But the article is different from the hyperbolic excitement of those in the Red Alert world and the sweaty foreheads at AI pitch meetings. AI does not speed up. AI slows down. Slowing down means higher costs. AI is supposed to reduce costs. I am confused.

Net net: AI is coming, productive or not. When someone perceives a technology will reduce costs, install that software. The outputs will be good enough. One hopes.

Stephen E Arnold, July 29, 2024

Prompt Tips and Query Refinements

July 29, 2024

Generative AI is paving the way for more automation, smarter decisions, and (possibly) an easier world. AI is still pretty stupid, however, and it needs to be hand fed information to make it work well. Dr. Lance B. Eliot is an AI expert and he contributed, “The Best Engineering Techniques For Getting The Most Out Of Generative AI” for Forbes.

Eliot explains that prompt engineering is the best way to make generative AI work. He developed a list of how to write prompts and related skills. The list is designed to be a quick, easy tutorial that is also equipped with links for more information related to the prompt. Eliot’s first tip is to keep the prompt simple, direct, and obvious; otherwise, the AI will misunderstand your intent.

He then rattles off a bunch of rhetoric that reads like it was written by generative AI. Maybe it was? In short, it’s good to learn how to write prompts to prepare for the future. He runs through the list alphabetically, then, as if that’s not enough, Eliot lists the prompts numerically:

“I didn’t number them because I was worried that the numbering would imply a semblance of importance or priority. I wanted the above listing to seem that all the techniques are on an equal footing. None is more precious than any of the others.

Lamentably, not having numbers makes life harder when wanting to quickly refer to a particular prompt engineering technique. So, I am going to go ahead and show you the list again and this time include assigned numbers. The list will still be in alphabetical order. The numbering is purely for ease of reference and has no bearing on priority or importance.”

The list is a rundown of psychological and intercommunication methods used by humans. A lot of big words are used, but the explanations were written by a tech-savvy expert for his fellow tech people. In layman’s terms, the list explains that any technique will work. Here’s one from me: use generative AI to simplify the article. Here’s a paradox prompt: if you feed generative AI a prompt written by generative AI, will it explode?

Whitney Grace, July 29, 2024

Silicon Valley Streetbeefs

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


If you are not familiar with Streetbeefs, I suggest you watch the “Top 5 Boxing Knock Outs” on YouTube. Don’t forget to explore the articles about Streetbeefs at the about section of the Community page for the producer. Useful information appears on the main Web site too.

So what does Streetbeefs do? The organization’s tag line explains:

Guns down. Gloves up.

If that is not sufficiently clear, the organization offers:

This org is for fighters and friends of STREETBEEFS! Everyone who fights for Streetbeefs does so to solve a dispute, or for pure sport… NOONE IS PAID TO FIGHT HERE… We also have many dedicated volunteers who help with reffing, security, etc..but if you’re joining the group looking for a paid position please know we’re not hiring. This is a brotherhood/sisterhood of like minded people who love fighting, fitness, and who hate gun violence. Our goal is to build a large community of people dedicated to stopping street violence and who can depend on each other like family. This organization is filled with tough people…but its also filled with the most giving people around..Streetbeefs members support each other to the fullest.

I read “Elon Musk Is Still Insisting He’s Down to Fight Mark Zuckerberg: ‘Any Place, Any Time, Any Rules.’” This article makes clear that Mark (Senator, thank you for that question) Zuckerberg and Elon (over promise and under deliver) Musk want to fight one another. The article says (I cannot believe I read this by the way):

Elon Musk is impressed with Meta’s latest AI model, but he’s still raring for a bout in the ring with Mark Zuckerberg. “I’ll fight Zuckerberg any place, any time, any rules,” Musk told reporters in Washington on Wednesday [July 24, 2024].

My thought is that Mr. Musk should ring up Sunshine Trask, who is the group manager for fighter signups at Streetbeefs. Trask can coordinate the fight plus the undercard with the two testosterone charged big technology giants. With most fights held in makeshift rings outside, Golden Gate Park might be a suitable venue for the event. Another possibility is to rope off the street near Philz Coffee in Sunnyvale and hold the “beef” in Plaza de Sol.

Both Mr. Musk and Mr. Zuckerberg can meet with Scarface, the principal figure at Streetbeefs, and get a sense of how the show will go down, what the rules are, and why videographers are in the ring with the fighters.

If these titans of technology want to fight, why not bring their idea of manly valor to a group with considerable experience handling individuals of diverse character?

Perhaps the “winner” would ask Scarface if he would go a few rounds to test his skills and valor against the victor of the truly bizarre dust up between the most macho of the Sillycon Valley superstars. My hunch is that talking with Scarface and his colleagues might inform Messrs. Musk and Zuckerberg of the brilliance, maturity, and excitement of fighting for real.

On the other hand, Scarface might demonstrate his street and business acumen by saying, “You guys are adults?”

Stephen E Arnold, July 26, 2024

If Math Is Running Out of Problems, Will AI Help Out the Humans?

July 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.


I read “Math Is Running Out of Problems.” The write up appeared in Medium and when I clicked I was not asked to join, pay, or turn a cartwheel. (Does Medium think 80-year-old dinobabies can turn cartwheels? The answer is, “Hey, doofus, if you want to read Medium articles pay up.”)

Thanks, MSFT Copilot. Good enough, just like smart security software.

I worked through the free essay, which is a reprise of an earlier essay on the topic of running out of math problems. The reason that few cared about the topic is that most people cannot make change. Thinking about a world without math problems is an intellectual task which takes time from scamming the elderly, doom scrolling, generating synthetic information, or watching reruns of I Love Lucy.

The main point of the essay in my opinion is:

…take a look at any undergraduate text in mathematics. How many of them will mention recent research in mathematics from the last couple decades? I’ve never seen it.

“New math problems” is an oxymoron.

I think the author is correct. As specialization becomes more desirable to a person, leaving the rest of the world behind is a consequence. But the issue arises in other disciplines. Consider artificial intelligence. That jazzy phrase embraces a number of mathematical premises, but it boils down to a few chestnuts, roasted, seasoned, and mixed with some interesting ethanols. (How about that wild and crazy Sir Thomas Bayes?)

My view is that as the apparent pace of information flow erodes social and cultural structures, the quest for “new” pushes a frantic individual to come up with a novelty. The problem with a novelty is that it takes one’s eye off the ball and ultimately the game itself. The present state of affairs in math was evident decades ago.

What’s interesting is that this issue is not new. In the early 1980s, Dialog Information Services hosted a mathematics database called MATHFILE. The person representing the database (now called MathSciNet) told me in 1981:

We are having a difficult time finding people to review increasingly narrow and highly specialized papers about an almost unknown area of mathematics.

Flash forward to 2024. Now this problem is getting attention, and no one seems to care?

Several observations:

- Like smart software, maybe humans are running out of high-value information? Chasing ever smaller mathematical “insights” may be a reminder that humans and their vaunted creativity have limits, hard limits.

- If the premise of the paper is correct, the issue should be evident in other fields as well. I would suggest the creation of a “me too” index. The idea is that for a period of history, one can calculate how many knock off ideas grab the coat tails of an innovation. My hunch is that the state of most modern technical insight is high on the me too index. No, I am not counting “original” TikTok-type information objects.

- The fragmentation which seems apparent to me in mathematics and that interesting field of mathematical physics mirrors the fragmentation of certain cultural precepts; for example, ethical behavior. Why is everything “so bad”? The answer is, “Specialization.”

Net net: The pursuit of the ever more specialized insight hastens the erosion of larger ideas and cultural knowledge. We have come a long way in four decades. The direction is clear. It is not just a math problem. It is a now problem and it is pervasive. I want a hat that says, “I’m glad I’m old.”

Stephen E Arnold, July 26, 2024