Smart Software and Knowledge Skills: Nothing to Worry About. Nothing.

July 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read an article in Bang Premier (an estimable online publication of which I had no prior knowledge). It is now a “fave of the week.” The story “University Researchers Reveal They Fooled Professors by Submitting AI Exam Answers” reported one of those experimental results which cause me to chuckle. I like to keep track of sources of entertaining AI information.

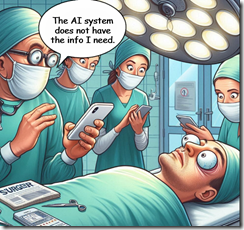

A doctor and his surgical team used smart software to ace their medical training. Now a patient learns that the AI system does not have the information needed to perform life-saving surgery. Thanks, MSFT Copilot. Good enough.

The Bang Premier article reports:

Researchers at the University of Reading have revealed they successfully fooled their professors by submitting AI-generated exam answers. Their responses went totally undetected and outperformed those of real students, a new study has shown.

Is anyone surprised?

The write up noted:

Dr Peter Scarfe, an associate professor at Reading’s school of psychology and clinical language sciences, said about the AI exams study: “Our research shows it is of international importance to understand how AI will affect the integrity of educational assessments. We won’t necessarily go back fully to handwritten exams, but the global education sector will need to evolve in the face of AI.”

But the knee slapper is this statement in the write up:

In the study’s endnotes, the authors suggested they might have used AI to prepare and write the research. They stated: “Would you consider it ‘cheating’? If you did consider it ‘cheating’ but we denied using GPT-4 (or any other AI), how would you attempt to prove we were lying?” A spokesperson for Reading confirmed to The Guardian the study was “definitely done by humans”.

The researchers may not have used AI to create their report, but is it possible that some of the researchers thought about this approach?

Generative AI software seems to have hit a plateau because of technological, financial, or training issues. Perhaps those who are trying to design smart systems to identify bogus images, machine-produced text, synthetic data, and nifty videos which often look like “real” TikTok-type creations will catch up? But if the AI innovators continue to refine their systems, the “AI identifier” software is effectively in a game of cat-and-mouse. Because the identifiers can only react to smart software, existing identifiers will be blind to the new systems’ outputs.

The goal of spotting machine-generated work is a noble one, but the advantage goes to the AI companies, particularly those who want to go fast and break things. Academics get some benefit. New studies will be needed to determine how much fakery goes undetected. Will a surgeon who used AI to get his or her degree be able to handle a tricky operation and get the post-op drugs right?

Sure. No worries. Some might not think this is a laughing matter. Hey, it’s AI. It is A-Okay.

Stephen E Arnold, July 5, 2024