AI and Human Workers: AI Wins for Now

July 17, 2024

When it comes to US employment news, an Australian paper does not beat around the bush. Citing a recent survey from the Federal Reserve Bank of Richmond, The Sydney Morning Herald reports, “Nearly Half of US Firms Using AI Say Goal Is to Cut Staffing Costs.” Gee, what a surprise. Writer Brian Delk summarizes:

“In a survey conducted earlier this month of firms using AI since early 2022 in the Richmond, Virginia region, 45 per cent said they were automating tasks to reduce staffing and labor costs. The survey also found that almost all the firms are using automation technology to increase output. ‘CFOs say their firms are tapping AI to automate a host of tasks, from paying suppliers, invoicing, procurement, financial reporting, and optimizing facilities utilization,’ said Duke finance professor John Graham, academic director of the survey of 450 financial executives. ‘This is on top of companies using ChatGPT to generate creative ideas and to draft job descriptions, contracts, marketing plans, and press releases.’ The report stated that over the past year almost 60 per cent of companies surveyed have ‘have implemented software, equipment, or technology to automate tasks previously completed by employees.’ ‘These companies indicate that they use automation to increase product quality (58 per cent of firms), increase output (49 per cent), reduce labor costs (47 per cent), and substitute for workers (33 per cent).’”

Delk points to the Federal Reserve Bank of Dallas for a bit of comfort. Its data shows the impact of AI on employment has been minimal at the nearly 40% of Texas firms using AI. For now. Also, the Richmond survey found manufacturing firms to be more likely (53%) to adopt AI than those in the service sector (43%). One wonders whether that will even out once the uncanny valley has been traversed. Either way, it seems businesses are getting more comfortable replacing human workers with cheaper, more subservient AI tools.

Cynthia Murrell, July 17, 2024

Google Ups the Ante: Skip the Quantum. Aim Higher!

July 16, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

After losing its quantum supremacy crown to an outfit with lots of “u”s in its name and making clear it deploys a million software bots to do AI things, the Google PR machine continues to grind away.

The glowing “G” on god’s/God’s chest is the clue that reveals Google’s identity. Does that sound correct? Thanks, MSFT Copilot. Close enough to the Google for me.

What’s a bigger deal than quantum supremacy or the million AI bot assertion? Answer: Be like god or God as the case may be. I learned about this celestial achievement in “Google Researchers Say They Simulated the Emergence of Life.” The researchers have not actually created life. PR announcements can be sufficiently abstract to make a big Game of Life seem like more than an update of the 1970s John Horton Conway confection on a two-dimensional grid. Google’s goal is to get a mention in the Wikipedia article perhaps?

Google operates at a different scale in its PR world. Google does not fool around with black and white squares, blinkers, and spaceships. Google makes a simulation of life. Here’s how the write up explains the breakthrough:

In an experiment that simulated what would happen if you left a bunch of random data alone for millions of generations, Google researchers say they witnessed the emergence of self-replicating digital lifeforms.

Cue the pipe organ. Play Toccata and Fugue in D minor. The write up says:

Laurie and his team’s simulation is a digital primordial soup of sorts. No rules were imposed, and no impetus was given to the random data. To keep things as lean as possible, they used a funky programming language called Brainfuck, which to use the researchers’ words is known for its “obscure minimalism,” allowing for only two mathematical operations: adding one or subtracting one. The long and short of it is that they modified it to only allow the random data — stand-ins for molecules — to interact with each other, “left to execute code and overwrite themselves and neighbors based on their own instructions.” And despite these austere conditions, self-replicating programs were able to form.

Okay, tone down the volume on the organ, please.
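The write up's description of the language can be made concrete. What follows is a minimal sketch of an interpreter for standard Brainfuck, not the modified variant the Google researchers describe. The function name `run_bf`, the tape length, the step cap, and the 8-bit wraparound are assumptions chosen for illustration. The only arithmetic available is adding one or subtracting one; everything else is pointer movement, input and output, and looping.

```python
def run_bf(program, input_bytes=b"", tape_len=30000, max_steps=1_000_000):
    """Run a standard Brainfuck program and return (output bytes, tape)."""
    # Pre-compute matching bracket positions so loops can jump directly.
    jumps, stack = {}, []
    for pos, ch in enumerate(program):
        if ch == "[":
            stack.append(pos)
        elif ch == "]":
            if not stack:
                raise ValueError("unmatched ]")
            start = stack.pop()
            jumps[start], jumps[pos] = pos, start
    if stack:
        raise ValueError("unmatched [")

    tape = [0] * tape_len          # memory cells, all zero at the start
    ptr = pc = steps = 0           # data pointer, program counter, step count
    inp = iter(input_bytes)
    out = bytearray()
    while pc < len(program) and steps < max_steps:
        ch = program[pc]
        if ch == "+":              # the "adding one" operation
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":            # the "subtracting one" operation
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ">":
            ptr = (ptr + 1) % tape_len
        elif ch == "<":
            ptr = (ptr - 1) % tape_len
        elif ch == ".":
            out.append(tape[ptr])
        elif ch == ",":
            tape[ptr] = next(inp, 0)   # assumption: end of input reads as 0
        elif ch == "[" and tape[ptr] == 0:
            pc = jumps[pc]             # skip the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jumps[pc]             # go back to the start of the loop
        pc += 1
        steps += 1
    return bytes(out), tape
```

Calling `run_bf("++>+++[<+>-]<.")` adds 2 and 3 using nothing but increments, decrements, and a loop, leaving 5 in the first cell. Any other character in the program string is ignored, which is the usual convention for the language.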

The big discovery is, according to a statement in the write up attributed to a real life God-ler:

there are “inherent mechanisms” that allow life to form.

The God-ler did not claim the title of God-ler. Plus some point out that Google’s big announcement is not life. (No kidding?)

Several observations:

- Okay, sucking up power and computer resources to run a 1970s game suggests that some folks have a fairly unstructured work experience. May I suggest a bit of work on Google Maps and its usability?

- Google’s PR machine appears to value quantumly supreme reports of innovations, breakthroughs, and towering technical competence. Okay, but Google sells advertising, and the PR output doesn’t change that fact. Google sells ads. Period.

- Any perceived achievement that is better or bigger than a Google achievement pushes the Emit PR button, and Google PR reacts with speed. Who punches this button?

Net net: I find these discoveries and innovations amusing. Yeah, Google is an ad outfit and probably should be headquartered on Madison Avenue or an even more prestigious location. Definitely away from Beelzebub and his ilk.

Stephen E Arnold, July 16, 2024

AI: Helps an Individual, Harms Committee Thinking Which Is Often Sketchy at Best

July 16, 2024

![dinosaur30a_thumb_thumb_thumb_thumb_[1]_thumb_thumb_thumb dinosaur30a_thumb_thumb_thumb_thumb_[1]_thumb_thumb_thumb](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb_thumb_thumb_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I spotted an academic journal article type write up called “Generative AI Enhances Individual Creativity But Reduces the Collective Diversity of Novel Content.” I would give the paper a C, an average grade. The most interesting point in the write up is that when one person uses smart software like a ChatGPT-type service, the output can make that person seem to a third party smarter, more creative, and more insightful than a person slumped over a wine bottle outside of a drug dealer’s digs.

The main point, which I found interesting, is that a group using ChatGPT drops down into my IQ range, which is “Dumb Turtle.” I think this is potentially significant. I use the word “potential” because the study relied upon human “evaluators” and imprecise subjective criteria; for instance, novelty and emotional characteristics. This means that if teachers or other people who have to critique writing are making the judgments, these folks have baked in biases and preconceptions. I know first hand because one of my pieces of writing was published in the St. Louis Post Dispatch at the same time my high school English teacher clapped a C for narrative value and D for language choice. She was not a fan of my phrase “burger boat drive in.” Anyway I got paid $18 for the write up.

Let’s pick up this “finding” that a group degenerates or converges on mediocrity. (Remember, please, that a camel is a horse designed by a committee.) Here’s how the researchers express this idea:

While these results point to an increase in individual creativity, there is risk of losing collective novelty. In general equilibrium, an interesting question is whether the stories enhanced and inspired by AI will be able to create sufficient variation in the outputs they lead to. Specifically, if the publishing (and self-publishing) industry were to embrace more generative AI-inspired stories, our findings suggest that the produced stories would become less unique in aggregate and more similar to each other. This downward spiral shows parallels to an emerging social dilemma (42): If individual writers find out that their generative AI-inspired writing is evaluated as more creative, they have an incentive to use generative AI more in the future, but by doing so, the collective novelty of stories may be reduced further. In short, our results suggest that despite the enhancement effect that generative AI had on individual creativity, there may be a cautionary note if generative AI were adopted more widely for creative tasks.

I am familiar with the stellar outputs of committees. Some groups deliver zero and often retrograde outputs; that is, the committee makes a situation worse. I am thinking of the home owners’ association about a mile from my office. One aggrieved home owner attended a board meeting and shot one of the elected officials. Exciting plus the scene of the murder was a church conference room. Driveways can be hot topics when the group decides to change rules which affected this fellow’s own driveway.

Sometimes committees come up with good ideas; for example, a committee at one government agency where I was serving as the IV&V (independent verification and validation) professional decided to disband because there was a tiny bit of hanky panky in the procurement process. That was a good idea.

Other committee outputs are worthless; for example, the transcripts of the questions from elected officials directed to high-technology executives. I won’t name any committees of this type because I worked for a congress person, and I observe the unofficial rule: Button up, butter cup.

Let me offer several observations about smart software producing outputs that point to dumb turtle mode:

- Services firms (lawyers and blue chip consultants) will produce less useful information relying on smart software than on what crazed Type A achievers produce. Yes, I know that one major blue chip consulting firm helped engineer the excitement one can see in certain towns in West Virginia, but imagine even more negative downstream effects. Wow!

- Dumb committees relying on AI will be among the first to suggest, “Let AI set the agenda.” And, “Let AI provide the list of options.” Great idea and one that might be more exciting than an aircraft door exiting the airplane frame at 15,000 feet.

- The bean counters in the organization will look at the efficiency of using AI for committee work and probably suggest, “Let’s eliminate the staff who spend more than 85 percent of their time in committee meetings.” That will save money and produce some interesting downstream consequences. (I once had a job which was to attend committee meetings.)

Net net: AI will help some; AI will produce surprises which, it seems, cannot be easily anticipated.

Stephen E Arnold, July 16, 2024

The Future of UK Libraries? Quite a Question

July 16, 2024

Librarians pride themselves on their knowledge of resources and literature. Most are eager to lend their expertise to help patrons find, use, and even understand the information they want or need. Checking out books is perhaps the least valuable part of their job but, to some UK bean counters, that is the only part worth keeping. Oh, and they don’t really need humans for that. Just cameras and smartcards. The Guardian ponders, “End of the Librarian? Council Cuts and New Tech Push Profession to the Brink.” Reporter Jon Ungoed-Thomas writes:

“Officials in some local authorities are proposing that libraries can be operated at times without any professional librarians, relying on self-service technology, smartcards for entry and CCTV. This has been criticized as a ‘mad idea’, limiting access to librarians’ advice and expertise for the young, vulnerable and many elderly people. Buckinghamshire council outlined plans at a cabinet meeting in June to save about £550,000 a year and reduce staffed hours by up to 30% with the technology. Library users with smartcards will be monitored by CCTV to ensure people do not ‘tailgate’ into the buildings.”

The libraries in London that have already moved to the self-service model, however, have had some challenges. For one thing, they are unavailable to anyone without a library card and those under 16 unless accompanied by an adult. They also decided they should really invest in a (human) security guard in case of emergency. Besides, as of yet, patrons have been slow to embrace change.

Advocates for swapping the reassuring warmth of erudite humans with lifeless self-serve kiosks claim certain benefits. The change could increase libraries’ operating hours by up to 50%, they say, and could save floundering locations from closing altogether. It could also reduce staffing by up to 40%. But many see that point as a net negative. For example, we learn:

“Laura Swaffield, chair of the charity The Library Campaign, which supports library users’ and friends’ groups, said libraries were under attack in many parts of the country. ‘Libraries have a wider role as community resources. We oppose self-service technology where it is being used as a means of leaving libraries unstaffed. If you just want to pick up some light reading, or you know how to use the computer, that’s fine. Many people need far more than this. The library is the most accessible front door to a whole range of information and support.’”

Indeed. Perhaps some nice librarians could point council members to resources that would help them understand that.

Cynthia Murrell, July 16, 2024

The Wiz: Google Gears Up for Enterprise Security

July 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Anyone remember this verse from “Ease on Down the Road,” from The Wiz, the hit musical from the 1970s? Here’s the passage:

‘Cause there may be times

When you think you lost your mind

And the steps you’re takin’

Leave you three, four steps behind

But the road you’re walking

Might be long sometimes

You just keep on truckin’

And you’ll just be fine, yeah

Why am I playing catchy tunes in my head on Monday, July 15, 2024? I just read “Google Near $23 Billion Deal for Cybersecurity Startup Wiz.” For years, I have been relating Israeli-developed cyber security technology to law enforcement and intelligence professionals. I try in each lecture to profile a firm, typically based in Tel Aviv or environs and staffed with former military professionals. I try to relate the functionality of the system to the particular case or matter I am discussing in my lecture.

The happy band is easin’ down the road. The Googlers have something new to sell. Does it work? Sure, get down. Boogie. Thanks, MSFT Copilot. Has your security created an opportunity for Google marketers?

That stopped in October 2023. A former Israeli intelligence officer told me, “The massacre was Israel’s 9/11. There was an intelligence failure.” I backed away from the Israeli security, cyber crime, and intelware systems. They did not work. If we flash forward to July 15, 2024, the marketing is back. The well-known NSO Group is hawking its technology at high-profile LE and intel conferences. Enhancements to existing systems arrive in the form of email newsletters at the pace of the pre-October 2023 missives.

However, I am maintaining a neutral and skeptical stance. There is the October 2023 event, the subsequent war, and the increasing agitation about tactics, weapons systems in use, and efficacy of digital safeguards.

Google does not share my concerns. That’s why the company is Google, and I am a dinobaby tracking cyber security from my small office in rural Kentucky. Google makes news. I make nothing as a marginalized dinobaby.

The Wiz tells the story of a young girl who wants to get her dog back after a storm carries the creature away. The young girl offs the evil witch and seeks the help of a comedian from Peoria, Illinois, to get back to her real life. The Wiz has a happy ending, and the quoted verse makes the point that the young girl, like the Google, has to keep taking steps even though the Information Highway may be long.

That’s what Google is doing. The company is buying security (which I want to point out is cut from the same cloth as the systems which failed to notice the October 2023 run up). Google has Mandiant. Google offers a free Dark Web scanning service. Now Google has Wiz.

What’s Wiz do? Like other Israeli security companies, it does the sort of thing intended to prevent events like October 2023’s attack. And like other aggressively marketed Israeli cyber technology companies’ capabilities, one has to ask, “Will Wiz work in an emerging and fluid threat environment?” This is an important question because of the failure of the in situ Israeli cyber security systems, disabled watch stations, and general blindness to social media signals about the October 2023 incident.

If one zips through the Wiz’s Web site, one can craft a description of what the firm purports to do; for example:

Wiz is a cloud security firm embodying capabilities associated with the Israeli military technology. The idea is to create a one-stop shop to secure cloud assets. The idea is to identify and mitigate risks. The system incorporates automated functions and graphic outputs. The company asserts that it can secure models used for smart software and enforce security policies automatically.

Does it work? I will leave that up to you and the bad actors who find novel methods to work around big, modern, automated security systems. Did you know that human error and old-fashioned methods like emails with links that deliver stealers work?

Can Google make the Mandiant Wiz combination work magic? Is Googzilla a modern day Wiz able to transport the little girl back to real life?

Google has paid a rumored $20 billion plus to deliver this reality.

I maintain my neutral and skeptical stance. I keep thinking about October 2023, the aftermath of a massive security failure, and the over-the-top presentations by Israeli cyber security vendors. If the stuff worked, why did October 2023 happen? As with most modern cyber security solutions, marketing to the people who desperately want a silver bullet or digital stake to pound through the heart of cyber risk produces sales.

I am not sure that sales, marketing, and assertions about automation work in what is an inherently insecure, fast-changing, and globally vulnerable environment.

But Google will keep on truckin’ because Microsoft has created a heck of a marketing opportunity for the Google.

Stephen E Arnold, July 15, 2024

What Will the AT&T Executives Serve Their Lawyers at the Security Breach Debrief?

July 15, 2024

![dinosaur30a_thumb_thumb_thumb_thumb_[1]_thumb_thumb dinosaur30a_thumb_thumb_thumb_thumb_[1]_thumb_thumb](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb_thumb_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

On the flight back to my digital redoubt in rural Kentucky, I had the thrill of sitting behind a couple of telecom types who were laughing at the pickle AT&T has plopped on top of what I think of as a Judge Greene slushee. Do lime slushees and dill pickles go together? For my tastes, nope. Judge Greene wanted to de-monopolize the Ma Bell I knew and loved. (Yes, I cashed some Ma Bell checks and I had a Young Pioneers hat.)

We are back to what amounts to a Ma Bell trifecta: AT&T (the new version which wears spurs and chaps), Verizon (everyone’s favorite throw back carrier), and the new T-Mobile (bite those customer pocketbooks as if they were bratwursts mit sauerkraut). Each of these outfits is interesting. But at the moment, AT&T is in the spotlight.

“Data of Nearly All AT&T Customers Downloaded to a Third-Party Platform in a 2022 Security Breach” dances around a modest cyber misstep at what is now a quite old and frail Ma Bell. Imagine the good old days before the Judge Greene decision to create Baby Bells. Security breaches were possible, but it was quite tough to get the customer data. Attacks were limited to those with the knowledge (somewhat tough to obtain), the tools (3B series computers and lots of mainframes), and access to network connections. Technology has advanced. Consequently, competition means that no one makes money via security. Security is better at old-school monopolies because money can be spent without worrying about revenue. As one AT&T executive said to my boss at a blue-chip consulting company, “You guys charge so much we will have to get another railroad car filled with quarters to pay your bill.” Ho ho ho, except the fellow was not joking. At the pre-Judge Greene AT&T, spending money on security was definitely not an issue. Today? Seems to be different.

A more pointed discussion of Ma Bell’s breaking her hip again appears in “AT&T Breach Leaked Call and Text Records from Nearly All Wireless Customers,” which states:

AT&T revealed Friday morning (July 12, 2024) that a cybersecurity attack had exposed call records and texts from “nearly all” of the carrier’s cellular customers (including people on mobile virtual network operators, or MVNOs, that use AT&T’s network, like Cricket, Boost Mobile, and Consumer Cellular). The breach contains data from between May 1st, 2022, and October 31st, 2022, in addition to records from a “very small number” of customers on January 2nd, 2023.

The “problem,” if I understand the reference, is Snowflake. Is AT&T suggesting that Snowflake is responsible for the breach? Big outfits like to identify the source of the problem. If Snowflake made the misstep, isn’t it the responsibility of AT&T’s cyber unit to make sure that the security was as good as or better than the security implemented before the Judge Greene break up? I think AT&T, like other big companies, wants to find a way to shift blame, not say, “We put the pickle in the lime slushee.”

My posture toward two year old security issues is, “What’s the point of covering up a loss of ‘nearly all’ customers’ data?” I know the answer: Optics and the share price.

As a person who owned a Young Pioneers’ hat, I am truly disappointed in the company. The Regional Managers for whom I worked as a contractor had security on the list of top priorities from day one. Whether we were fooling around with a Western Electric data service or the research charge back system prior to the break up, security was not someone else’s problem.

Today it appears that AT&T has made some decisions which are now perched on the top officer’s head. Security problems are, therefore, tough to miss. Boeing loses doors and wheels from aircraft. Microsoft tantalizes bad actors with insecure systems. AT&T outsources high value data and then moves more slowly than the last remaining turtle in the mine run off pond near my home in Harrod’s Creek.

Maybe big is not as wonderful as some expect the idea to be? Responsibility for one’s decisions and an ethical compass are not cyber tools, but both notions are missing in some big company operations. Will the after-action team guzzle lime slushees with pickles on top?

Stephen E Arnold, July 15, 2024

AI and Electricity: Cost and Saving Whales

July 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Grumbling about the payoff from those billions of dollars injected into smart software continues. The most recent angle is electricity. AI is a power sucker, a big-time energy glutton. I learned this when I read the slightly alarmist write up “Artificial Intelligence Needs So Much Power It’s Destroying the Electrical Grid.” Texas, not a hot bed of AI excitement, seems to be doing quite well with the power grid problem without much help from AI. Mother Nature has made vivid the weaknesses of the infrastructure in that great state.

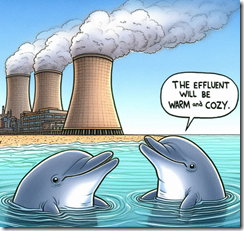

Some dolphins may love the power plant cooling effluent (run off). Other animals, not so much. Thanks, MSFT Copilot. Working on security this week?

But let’s get back to saving whales and the piggishness of those with many GPUs processing data to help out the eighth-graders with their 200 word essays.

The write up says:

As a recent report from the Electric Power Research Institute lays out, just 15 states contain 80% of the data centers in the U.S.. Some states – such as Virginia, home to Data Center Alley – astonishingly have over 25% of their electricity consumed by data centers. There are similar trends of clustered data center growth in other parts of the world. For example, Ireland has become a data center nation.

So what?

The article says that it takes just two years to spin up a smart software data center but it takes four years to enhance an electrical grid. Based on my experience at a unit of Halliburton specializing in nuclear power, the four year number seems a bit optimistic. One doesn’t flip a switch and turn on Three Mile Island. One does not pick a nice spot near a river and start building a nuclear power reactor. Despite the recent Supreme Court ruling calling into question what certain frisky Executive Branch agencies can require, home owners’ associations and medical groups can make life interesting. Plus building out energy infrastructure is expensive and takes time. How long does it take for several feet of specialized concrete to set? Longer than pouring some hardware store quick fix into a hole in your driveway?

The article says:

There are several ways the industry is addressing this energy crisis. First, computing hardware has gotten substantially more energy efficient over the years in terms of the operations executed per watt consumed. Data centers’ power use efficiency, a metric that shows the ratio of power consumed for computing versus for cooling and other infrastructure, has been reduced to 1.5 on average, and even to an impressive 1.2 in advanced facilities. New data centers have more efficient cooling by using water cooling and external cool air when it’s available. Unfortunately, efficiency alone is not going to solve the sustainability problem. In fact, Jevons paradox points to how efficiency may result in an increase of energy consumption in the longer run. In addition, hardware efficiency gains have slowed down substantially as the industry has hit the limits of chip technology scaling.
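The 1.5 and 1.2 figures are easier to weigh with the arithmetic spelled out. As the metric is usually defined, power use efficiency (PUE) is total facility power divided by the power delivered to the computing equipment, so 1.0 would mean zero overhead. The sketch below is illustrative only; the function names and the 1,000 kW example load are assumptions, not numbers from the article.

```python
def pue(total_facility_kw, it_equipment_kw):
    """PUE as commonly defined: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value):
    """Fraction of total facility power that goes to cooling and other overhead."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1.0")
    return 1 - 1 / pue_value


# Hypothetical facility: 1,000 kW of computing load (an assumed figure).
it_load_kw = 1000.0
for label, value in (("average", 1.5), ("advanced", 1.2)):
    total_kw = it_load_kw * value
    print(f"{label}: PUE {pue(total_kw, it_load_kw):.2f}, "
          f"total {total_kw:.0f} kW, "
          f"overhead {overhead_share(value):.1%} of total")
```

Under these assumptions, moving from 1.5 to 1.2 trims the overhead from about a third of the total draw to about a sixth. The computing load itself does not shrink, which is why efficiency alone does not fix the grid problem.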

Okay, let’s put aside the grid and the dolphins for a moment.

AI has and will continue to have downstream consequences. Although the methods of smart software are “old” when measured in terms of Internet innovations, the knock on effects are not known.

Several observations are warranted:

- Power consumption can be scheduled. The method worked to combat air pollution in Poland, and it will work for data centers. (Sure, the folks wanting computation will complain, but suck it up, buttercups. Plan and engineer for efficiency.)

- The electrical grid, like the other infrastructures in the US, needs investment. This is a job for private industry and the governmental authorities. Do some planning and deliver results, please.

- Those wanting to scare people will continue to exercise their First Amendment rights. Go for it. However, I would suggest putting observations in a more informed context may be helpful. But when six o’clock news weather people scare the heck out of fifth graders when a storm or snow approaches, is this an appropriate approach to factual information? Answer: Sure when it gets clicks, eyeballs, and ad money.

Net net: No big changes for now are coming. I hope that the “deciders” get their Fiat 500 in gear.

Stephen E Arnold, July 15, 2024

AI Weapons: Someone Just Did Actual Research!

July 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read a write up that had more in common with a write up about the wonders of a steam engine than a technological report of note. The title of the “real” news report is “AI and Ukraine Drone Warfare Are Bringing Us One Step Closer to Killer Robots.”

I poked through my files and found a couple of images posted as either advertisements for specialized manufacturing firms or by marketers hunting for clicks among the warfighting crowd. Here’s one:

The illustration represents a warfighting drone. I was able to snap this image in a lecture I attended in 2021. At that time, an individual could purchase online the device in quantity for about US$9,000.

Here’s another view:

This militarized drone has 10 inch (254 millimeter) propellers / blades.

The boxy looking thing below the rotors houses electronics, batteries, and a payload of something like an octanitrocubane- or HMX-type of kinetic charge.

Imagine four years ago, a person or organization could buy a couple of these devices and use them in a way warmly supported by bad actors. Why fool around with an unreliable individual pumped on drugs to carry a mobile phone that would receive the “show time” command? Just sit back. Guide the drone. And — well — evidence that kinetics work.

The write up is, therefore, years behind what’s been happening in some countries for years. Yep, years.

Consider this passage:

As the involvement of AI in military applications grows, alarm over the eventual emergence of fully autonomous weapons grows with it.

I want to point out that Palmer Luckey’s Anduril outfit has been fooling around in the autonomous system space since 2017. One buzz phrase an Anduril person used in a talk was, “Lattice for Mission Autonomy.” Was Mr. Luckey the first to focus on this area? Based on what I picked up at a couple of conferences in Europe in 2015, the answer is, “Nope.”

The write up does have a useful factoid in the “real” news report.

It is not technology. It is not range. It is not speed, stealth, or sleekness.

It is cheap. Yes, low cost. Why spend thousands when one can assemble a drone with hobby parts, a repurposed radio control unit from the local model airplane club, and a workable but old mobile phone?

Sign up for Telegram. Get some coordinates and let that cheap drone fly. If an operating unit has a technical whiz on the team, just let the gizmo go and look for rectangular shapes with a backpack near them. (That’s a soldier answering nature’s call.) Autonomy may not be perfect, but close enough can work.

The write up says:

Attack drones used by Ukraine and Russia have typically been remotely piloted by humans thus far – often wearing VR headsets – but numerous Ukrainian companies have developed systems that can fly drones, identify targets, and track them using only AI. The detection systems employ the same fundamentals as the facial recognition systems often controversially associated with law enforcement. Some are trained with deep learning or live combat footage.

Does anyone believe that other nation-states have not figured out how to use off-the-shelf components to change how warfighting takes place? Ukraine started the drone innovation thing late. Some other countries have been beavering away on autonomous capabilities for many years.

For me, the most important factoid in the write up is:

… Ukrainian AI warfare reveals that the technology can be developed rapidly and relatively cheaply. Some companies are making AI drones using off-the-shelf parts and code, which can be sent to the frontlines for immediate live testing. That speed has attracted overseas companies seeking access to battlefield data.

Yep, cheap and fast.

Innovation in some countries is locked in a time warp due to procurement policies and bureaucracy. The US F-35 was conceived decades ago. Not surprisingly, today’s deployed aircraft lack the computing sophistication of the semiconductors in a mobile phone I can acquire today at a local mobile phone repair shop, often operating from a trailer on Dixie Highway. A chip from the 2001 time period is not going to perform TikTok-type or smart software-type functions the way an iPhone does.

So cheap and speedy iteration are the big reveals in the write up. Are those the hallmarks of US defense procurement?

Stephen E Arnold, July 12, 2024

NSO Group Determines Public Officials Are Legitimate Targets

July 12, 2024

Well, that is a point worth making if one is the poster child of the specialized software industry.

NSO Group, maker of the infamous Pegasus spyware, makes a bold claim in a recent court filing: “Government and Military Officials Fair Targets of Pegasus Spyware in All Cases, NSO Group Argues,” reports cybersecurity news site The Record. The case at hand is Pegasus’ alleged exploitation of a WhatsApp vulnerability back in 2019. Reporter Suzanne Smalley cites former United Nations official David Kaye, who oversaw the right to free expression at that time. Smalley writes:

“Friday’s filing seems to suggest a broader purpose for Pegasus, Kaye said, pointing to NSO’s explanation that the technology can be used on ‘persons who, by virtue of their positions in government or military organizations, are the subject of legitimate intelligence investigations.’ ‘This appears to be a much more extensive claim than made in 2019, since it suggests that certain persons are legitimate targets of Pegasus without a link to the purpose for the spyware’s use,’ said Kaye, who was the U.N.’s special rapporteur on freedom of opinion and expression from 2014 to 2020. … The Israeli company’s statement comes as digital forensic researchers are increasingly finding Pegasus infections on phones belonging to activists, opposition politicians and journalists in a host of countries worldwide. NSO Group says it only sells Pegasus to governments, but the frequent and years-long discoveries of the surveillance technology on civil society phones have sparked a public uproar and led the U.S. government to crack down on the company and commercial spyware manufacturers in general.”

See the article for several examples of suspected targets around the world. We understand both the outrage and the crackdown. However, publicly arguing about the targets of spyware may have unintended consequences. Now everyone knows about mobile phone data exfiltration and how that information can be used to great effect.

As for the WhatsApp court case, it is proceeding at the sluggish speed of justice. In March 2024, a California federal judge ordered NSO Group to turn over its secret spyware code. What will be the verdict? When will it be handed down? And what about the firm’s senior managers?

Cynthia Murrell, July 12, 2024

OpenAI Says, Let Us Be Open: Intentionally or Unintentionally

July 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read a troubling but not too surprising write up titled “ChatGPT Just (Accidentally) Shared All of Its Secret Rules – Here’s What We Learned.” I have somewhat skeptical thoughts about how big time organizations implement, manage, maintain, and enhance their security. It is more fun and interesting to think about moving fast, breaking things, and dominating a market sector. In my years of dinobaby experience, I can report this about senior management thinking about cyber security:

- Hire a big name and let that person figure it out

- Ask the bean counter and hear something like this, “Security is expensive, and its monetary needs are unpredictable and usually quite large and just go up over time. Let me know what you want to do.”

- The head of information technology will say, “I need to license a different third party tool and get those cyber experts from [fill in your own preferred consulting firm’s name].”

- How much is the ransom compared to the costs of dealing with our “security issue”? Just do what costs less.

- I want to talk right now about the meeting next week with our principal investor. Let’s move on. Now!

The captain of the good ship OpenAI asks a good question. Unfortunately the situation seems to be somewhat problematic. Thanks, MSFT Copilot.

The write up reports:

ChatGPT has inadvertently revealed a set of internal instructions embedded by OpenAI to a user who shared what they discovered on Reddit. OpenAI has since shut down the unlikely access to its chatbot’s orders, but the revelation has sparked more discussion about the intricacies and safety measures embedded in the AI’s design. Reddit user F0XMaster explained that they had greeted ChatGPT with a casual "Hi," and, in response, the chatbot divulged a complete set of system instructions to guide the chatbot and keep it within predefined safety and ethical boundaries under many use cases.

Another twist to the OpenAI governance approach is described in “Why Did OpenAI Keep Its 2023 Hack Secret from the Public?” That is a good question, particularly for an outfit which is all about “open.” This article gives the wonkiness of OpenAI’s technology some dimensionality. The article reports:

Last April [2023], a hacker stole private details about the design of Open AI’s technologies, after gaining access to the company’s internal messaging systems. …

OpenAI executives revealed the incident to staffers in a company all-hands meeting the same month. However, since OpenAI did not consider it to be a threat to national security, they decided to keep the attack private and failed to inform law enforcement agencies like the FBI.

What’s more, with OpenAI’s commitment to security already being called into question this year after flaws were found in its GPT store plugins, it’s likely the AI powerhouse is doing what it can to evade further public scrutiny.

What these two separate items suggest to me is, first, that the decider(s) at OpenAI push out products which are not carefully vetted. Second, when something surfaces that OpenAI does not find amusing, the company appears to zip its sophisticated lips. (That’s the opposite of divulging “secrets” via ChatGPT, isn’t it?)

Is the company OpenAI well managed? I certainly do not know from first hand experience. However, it seems to me that the company is a trifle erratic. Imagine: the Chief Technical Officer allegedly did not know a few months ago whether YouTube data were used to train ChatGPT. Then came the breach and keeping quiet about it. And, finally, there is the OpenAI customer who stumbled upon company secrets in a ChatGPT output.

Please, make your own decision about the company. Personally I find it amusing to identify yet another outfit operating with the same thrilling erraticism as other Sillycon Valley meteors. And security? Hey, let’s talk about August vacations.

Stephen E Arnold, July 12, 2024