Lark Flies Home with TikTok User Data, DOJ Alleges

August 7, 2024

An Arnold’s Law of Online Content states simply: If something is online, it will be noticed, captured, analyzed, and used to achieve a goal. That is why we are unsurprised to learn, as TechSpot reports, “US Claims TikTok Collected Data on Users, then Sent it to China.” Writer Skye Jacobs reveals:

“In a filing with a federal appeals court, the Department of Justice alleges that TikTok has been collecting sensitive information about user views on socially divisive topics. The DOJ speculated that the Chinese government could use this data to sow disruption in the US and cast suspicion on its democratic processes. TikTok has made several overtures to the US to create trust in its privacy and data controls, but it has also been reported that the service at one time tracked users who watched LGBTQ content. The US Justice Department alleges that TikTok collected sensitive data on US users regarding contentious issues such as abortion, religion and gun control, raising concerns about privacy and potential manipulation by the Chinese government. This information was reportedly gathered through an internal communication tool called Lark.”

Lark is also owned by TikTok parent company ByteDance and is integrated into the app. Alongside its role as a messaging platform, Lark has apparently been collecting a lot of very personal user data and sending it home to Chinese servers. The write-up specifies some of the DOJ’s concerns:

“They warn that the Chinese government could potentially instruct ByteDance to manipulate TikTok’s algorithm to use this data to promote certain narratives or suppress others, in order to influence public opinion on social issues and undermine trust in the US’ democratic processes. Manipulating the algorithm could also be used to amplify content that aligns with Chinese state narratives, or downplay content that contradicts those narratives, thereby shaping the national conversation in a way that serves Chinese interests.”

Perhaps most concerning, the brief warns, China could direct ByteDance to use the data to “undermine trust in US democracy and exacerbate social divisions.” Yes, that tracks. Meanwhile, TikTok insists any steps our government takes against it infringe on US users’ First Amendment rights. Oh, the irony.

In the face of the US government’s demand that it sell off TikTok or face a ban, ByteDance has offered a couple of measures designed to alleviate concerns. So far, though, the Biden administration is standing firm.

Cynthia Murrell, August 7, 2024

Old Problem, New Consequences: AI and Data Quality

August 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Grab a business card from several years ago. Just for laughs send an email to the address on the card or dial one of the numbers printed on it. What happens? Does the email bounce? Does the person you called answer? In my experience, the business cards I have gathered at conferences in 2021 are useless. The number rings in space or a non-human voice says, “The number has been disconnected.” The emails go into a black hole. Based on my experience, I would estimate that fewer than 30 percent of the 100 random cards one of my colleagues pulled from the files still work. In 24 months, 70 percent of the data are invalid. An optimist would say, “You have 30 people you can contact.” A pessimist would say, “Wow, you lost 70 contacts.” A 20-something whiz kid at one of the big time AI companies would say, “Good enough.”

An automated data factory purports to manufacture squares. What does it do? Triangles are good enough and close enough for horseshoes. Does the factory call the triangles squares? Of course, it does. Thanks, MSFT Copilot. Security is Job One today I hear.

I read “Data Quality: The Unseen Villain of Machine Learning.” The write up states:

Too often, data scientists are the people hired to “build machine learning models and analyze data,” but bad data prevents them from doing anything of the sort. Organizations put so much effort and attention into getting access to this data, but nobody thinks to check if the data going “in” to the model is usable. If the input data is flawed, the output models and analyses will be too.

Okay, that’s a reasonable statement. But this passage strikes me as a bit orthogonal to the observations I have made:

It is estimated that data scientists spend between 60 and 80 percent of their time ensuring data is cleansed, in order for their project outcomes to be reliable. This cleaning process can involve guessing the meaning of data and inferring gaps, and they may inadvertently discard potentially valuable data from their models. The outcome is frustrating and inefficient as this dirty data prevents data scientists from doing the valuable part of their job: solving business problems. This massive, often invisible cost slows projects and reduces their outcomes.

The painful reality, in my experience, consists of three factors:

- Data quality depends on the knowledge and resources available to a subject matter expert. A data quality expert might define quality as consistent data; that is, the name field has a name. The SME figures out if the data are in line with other data and what is off base.

- The time required to “ensure” data quality is rarely available. There are interruptions, Zooms, and automated calendars that ping a person for a meeting. Data quality is easily killed by time suffocation.

- The volume of data begs for automated procedures and, of course, AI. The problem is that the range of errors related to validity is sufficiently broad to allow “flawed” data to enter a system. Good enough creates interesting consequences.
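The gap between “consistent” and “valid” in the three factors above can be sketched in a few lines of Python. This is a minimal illustration, not anyone’s production method: the contact records, the field names, the `is_consistent` and `is_fresh` helpers, and the 730-day cutoff are all assumptions made up for the example. A freshness date is only a crude stand-in for what a subject matter expert actually knows.

```python
import re
from datetime import date

# Hypothetical contact records; the field names are illustrative only.
CONTACTS = [
    {"name": "A. Example", "email": "a.example@example.com", "last_verified": date(2021, 6, 1)},
    {"name": "B. Example", "email": "b.example@example.com", "last_verified": date(2024, 5, 1)},
    {"name": "", "email": "not-an-email", "last_verified": None},
]

# Loose shape check for an email address; it says nothing about deliverability.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_consistent(record):
    """Format check: the name field has a name and the email looks like an email."""
    return bool(record["name"].strip()) and bool(EMAIL_PATTERN.match(record["email"]))


def is_fresh(record, today, max_age_days=730):
    """Crude validity proxy: was the record confirmed within max_age_days?"""
    verified = record["last_verified"]
    return verified is not None and (today - verified).days <= max_age_days


def audit(records, today):
    """Return (count passing the format check, count passing format and freshness)."""
    consistent = [r for r in records if is_consistent(r)]
    fresh = [r for r in consistent if is_fresh(r, today)]
    return len(consistent), len(fresh)


if __name__ == "__main__":
    passed_format, passed_both = audit(CONTACTS, date(2024, 8, 6))
    print(f"{passed_format} records look fine; {passed_both} were confirmed recently")
```

The automated format check waves through a record that has not been confirmed in years. That is the “good enough” trap: the name field has a name, and the data are still stale.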

The write up says:

Data quality shouldn’t be a case of waiting for an issue to occur in production and then scrambling to fix it. Data should be constantly tested, wherever it lives, against an ever-expanding pool of known problems. All stakeholders should contribute and all data must have clear, well-defined data owners. So, when a data scientist is asked what they do, they can finally say: build machine learning models and analyze data.

This statement makes clear why flawed data remain flawed. The fix, according to some, is synthetic data. Are these data of high quality? It depends on what one means by “quality.” Today the benchmark is good enough. Good enough produces outputs that are not. But who knows? Not the harried person looking for something, anything, to put in a presentation, journal article, or podcast.

Stephen E Arnold, August 6, 2024

Agents Are Tracking: Single Web Site Version

August 6, 2024

This essay is the work of a dumb humanoid. No smart software required.

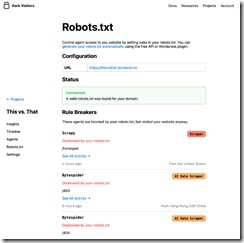

How many software robots are crawling (copying and indexing) a Web site you control now? This question can be answered by a cloud service available from DarkVisitors.com.

The Web site includes a useful list of these software robots (what many people call “agents” which sounds better, right?). You can find the list (about 800 bots as of July 30, 2024) on the DarkVisitors’ Web site at this link. There is a search function so you can look for a bot by name; for example, Omgili (the Israeli data broker Webz.io). Please note that the list contains categories of agents; for example, “AI Data Scrapers”, “AI Search Crawlers,” and “Developer Helpers,” among others.

The Web site also includes links to a service called “Set Up Your Robots.txt.” The idea is that one can link a Web site’s robots.txt file to DarkVisitors. Then DarkVisitors will update your Web site automatically to block crawlers, bots, and agents. The specific steps to make this service work are included on the DarkVisitors.com Web site.
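To see what a blocking robots.txt actually tells a crawler, here is a small sketch using Python’s standard `urllib.robotparser` module. The robots.txt text, the agent names picked for the test, and the `blocked_agents` helper are assumptions for illustration; none of this is DarkVisitors’ generated output or its API. Keep in mind that robots.txt is a request, and a badly behaved bot can ignore it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (an assumption, not DarkVisitors output).
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: omgili
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt, agents, url="https://example.com/"):
    """Return the agents from `agents` that the robots.txt rules bar from `url`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for name in blocked_agents(ROBOTS_TXT, ["GPTBot", "Omgili", "Googlebot"]):
        print(f"{name} is asked to stay out")
```

Running the same check against your own site’s robots.txt text is a quick sanity test after any automated update.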

The basic service is free. However, if you want analytics and a couple of additional features, the cost as of July 30, 2024, is $10 per month.

An API is also available. Instructions for implementing the service are available as well. Plus, a WordPress plug in is available. The cloud service is provided by Bit Flip LLC.

Stephen E Arnold, August 6, 2024

The US Government Wants Honesty about Security

August 6, 2024

I am not sure what to make of words like “trust,” “honesty,” and “security.”

The United States government doesn’t want opinions from its people. They only want people to vote, pay their taxes, and not cause trouble. In an event rarer than a blue moon, the US Cybersecurity and Infrastructure Security Agency wants to know what it could do better. Washington Technology shares the story, “CISA’s New Cybersecurity Official Jeff Greene Wants To Know Where The Agency Can Improve On Collaboration Efforts That Have Been Previously Criticized For Their Misdirection.”

Jeff Greene is the new executive assistant director for the Cybersecurity and Infrastructure Security Agency (CISA). He recently held a meeting at the US Chamber of Commerce and told the private sector attendees that his agency is holding an “open house” discussion. The open house discussion welcomes input from the private sector about how the US government and its industry partners can improve on sharing information about cyber threats.

Why does the government want input?

“The remarks come in the wake of reports from earlier this year that said a slew of private sector players have been pulling back from the Joint Cyber Defense Collaborative — stood up by CISA in 2021 to encourage cyber firms to team up with the government to detect and deter hacking threats — due to various management mishaps, including cases where CISA allegedly did not staff enough technical analysts for the program.”

Greene wants to know what CISA is doing correctly, but also what the agency is doing wrong. He hopes the private sector will give the agency grace as they make changes, because they’re working on numerous projects. Greene said that the private sector is better at detecting threats before the federal government. The 2015 Cybersecurity Information Sharing Act enabled the private sector and US government to collaborate. The act allowed the private sector to bypass obstacles they were otherwise barred from so white hat hackers could stop bad actors.

CISA has a good thing going for it with Greene. Hopefully the rest of the government will listen. It might be useful if cyber security outfits and commercial organizations caught the pods, the vlogs, and the blogs about the issue.

Whitney Grace, August 6, 2024

Curating Content: Not Really and Maybe Not at All

August 5, 2024

This essay is the work of a dumb humanoid. No smart software required.

Most people assume that if software is downloaded from an official “store” or from a “trusted” online Web search system, malware is not part of the deal. Vendors bandy about the word “trust” at the same time wizards in the back office are filtering, selecting, and setting up mechanisms to sell advertising to anyone who has money.

Advertising sales professionals are the epitome of professionalism. Google the word “trust”. You will find many references to these skilled individuals. Thanks, MSFT Copilot. Good enough.

Are these statements accurate? Because I love the high-tech outfits, my personal view is that online users today have these characteristics:

- Deep knowledge about nefarious methods

- The time to verify each content object is not malware

- A keen interest in sustaining the perception that the Internet is a clean, well-lit place. (Sorry, Mr. Hemingway, “lighted” will get you a points deduction in some grammarians’ fantasy world.)

I read “Google Ads Spread Mac Malware Disguised As Popular Browser.” My world is shattered. Is an alleged monopoly fostering malware? Is the dominant force in online advertising unable to verify that its advertisers are dealing from the top of the digital card deck? Is Google incapable of behaving in a responsible manner? I have to sit down. What a shock to my dinobaby system.

The write up alleges:

Google Ads are mostly harmless, but if you see one promoting a particular web browser, avoid clicking. Security researchers have discovered new malware for Mac devices that steals passwords, cryptocurrency wallets and other sensitive data. It masquerades as Arc, a new browser that recently gained popularity due to its unconventional user experience.

My assumption is that Google’s AI and human monitors would be paying close attention to a browser that seeks to challenge Google’s Chrome browser. Could I be incorrect? Obviously if the write up is accurate I am. Be still my heart.

The write up continues:

The Mac malware posing as a Google ad is called Poseidon, according to researchers at Malwarebytes. When clicking the “more information” option next to the ad, it shows it was purchased by an entity called Coles & Co, an advertiser identity Google claims to have verified. Google verifies every entity that wants to advertise on its platform. In Google’s own words, this process aims “to provide a safe and trustworthy ad ecosystem for users and to comply with emerging regulations.” However, there seems to be some lapse in the verification process if advertisers can openly distribute malware to users. Though it is Google’s job to do everything it can to block bad ads, sometimes bad actors can temporarily evade their detection.

But the malware apparently exists and the ads are the vector. What’s the fix? Google is already doing its typical A Number One Quantumly Supreme Job. Well, the fix is you, the user.

You are sufficiently skilled to detect, understand, and avoid such online trickery, right?

Stephen E Arnold, August 5, 2024

Judgment Before? No. Backing Off After? Yes.

August 5, 2024

I wanted to capture two moves from two technology giants. The first item is the report that Google pulled the oh-so-Googley ad about a father using Gemini to write a personal note to his daughter. If you are not familiar with the burst of creative marketing, you can glean a few details from “Google Pulls Gemini AI Ad from Olympics after Backlash.” The second item is the report that according to Bloomberg, “Apple Pulls Commercial After Thai Backlash, Calls for Boycott.”

I reacted to these two separate announcements by thinking about what these do it-reverse it decisions suggest about the management controls at two technology giants.

Some management processes operated to think up the ad ideas. Then the project had to be given the green light from “leadership” at the two outfits. Next third party providers had to be enlisted to do some of the “knowledge work”. Then, I assume, there were meetings to review the “creative.” Finally, one ad from several candidates was selected by each firm. The money paid. And then the ads appeared. That’s a lot of steps and probably more than two or three people working in a cube next to a Foosball table.

Plus, the about faces by the two companies did not take much time. Google caved after a few days. Apple also hopped on its harvester and chopped the Thailand advertisement quickly as well. Decisiveness. Actually decisiveness after the fact.

Why not less obvious processes like using better judgment before releasing the advertisements? Why not focus on working with people who are more in tune with audience reactions than being clever, smooth talking, and desperate-eager for big company money?

Several observations:

- Might I hypothesize that both companies lack a fabric of common sense?

- If online ads “work,” why use what I would call old-school advertising methods? Perhaps the online angle is not correct for such important messaging from two companies that seem to do whatever they want most of the time?

- The consequences of these do-then-undo actions are likely to be close to zero. Is that what operating in a no-consequences environment fosters?

I wonder if the back away mentality is now standard operating procedure. We have Intel and nVidia with some back-away actions. We have a nation state agreeing to a plea bargain and then un-agreeing the next day. We have a net neutrality rule, then don’t, then we do, and now we don’t. Now that I think about it, perhaps because there are no significant consequences, decision quality has taken a nose dive?

Some believe that great complexity sets the stage for bad decisions which regress to worse decisions.

Stephen E Arnold, August 5, 2024

MBAs Gone Wild: Assertions, Animation & Antics

August 5, 2024

Author’s note: Poor WordPress in the Safari browser is having a very bad day. Quotes from the cited McKinsey document appear against a weird blue background. My cheerful little dinosaur disappeared. And I could not figure out how to claim that AI did not help me with this essay. Just a heads up.

Holed up in rural Illinois, I had time to read the mid-July McKinsey & Company document “McKinsey Technology Trends Outlook 2024.” Imagine a group of well-groomed, top-flight, smooth talking “experts” with degrees from fancy schools filming one of those MBA group brainstorming sessions. Take the transcript, add motion graphics, and give audio sweetening to hot buzzwords. I think this would go viral among would-be consultants, clients facing the cloud of unknowing about the future, and those who manifest the Peter Principle. Viral winner! From my point of view, smart software is going to be integrated into most technologies and is, therefore, the trend. People may lose money, but applied AI is going to be with most companies for a long, long time.

The report boils down the current business climate to a few factors. Yes, when faced with exceptionally complex problems, boil those suckers down. Render them so only the tasty sales part remains. Thus, today’s business challenges become:

Generative AI (gen AI) has been a standout trend since 2022, with the extraordinary uptick in interest and investment in this technology unlocking innovative possibilities across interconnected trends such as robotics and immersive reality. While the macroeconomic environment with elevated interest rates has affected equity capital investment and hiring, underlying indicators—including optimism, innovation, and longer-term talent needs—reflect a positive long-term trajectory in the 15 technology trends we analyzed.

The data for the report come from inputs from about 100 people, not counting the people who converted the inputs into the live-action report. Move your mouse from one of the 15 “trends” to another. You will see the graphic display colored balls of different sizes. Yep, tiny and tinier balls and a few big balls tossed in.

I don’t have the energy to take each trend and offer a comment. Please, navigate to the original document and review it at your leisure. I can, however, select three trends and offer an observation or two about this very tiny ball selection.

Before sharing those three trends, I want to provide some context. First, the data gathered appear to be subjective and similar to the dorm outputs of MBA students working on a group project. Second, there is no reference to the thought process itself, which can be applied to a real world problem like boosting sales for opioids. It is the thought process that leads to revenues from consulting that counts.

Third, McKinsey’s pool of 100 thought leaders seems fixated on two things:

gen AI and electrification and renewables.

But is that statement comprised of three things? [1] AI, [2] electrification, and [3] renewables? Because AI is a greedy consumer of electricity, I think I can see some connection between AI and renewables, but the “electrification” I think about is President Roosevelt’s creating in 1935 the Rural Electrification Administration. Dinobabies can be such nit pickers.

Let’s tackle the electrification point before I get to the real subject of the report, AI in assorted forms and applications. When McKinsey talks about electrification and renewables, McKinsey means:

The electrification and renewables trend encompasses the entire energy production, storage, and distribution value chain. Technologies include renewable sources, such as solar and wind power; clean firm-energy sources, such as nuclear and hydrogen, sustainable fuels, and bioenergy; and energy storage and distribution solutions such as long-duration battery systems and smart grids. In 2019, the interest score for Electrification and renewables was 0.52 on a scale from 0 to 1, where 0 is low and 1 is high. The innovation score was 0.29 on the same scale. The adoption rate was scored at 3. The investment in 2019 was 160 on a scale from 1 to 5, with 1 defined as “frontier innovation” and 5 defined as “fully scaled.” The investment was 160 billion dollars. By 2023, the interest score for Electrification and renewables was 0.73. The innovation score was 0.36. The investment was 183 billion dollars. Job postings within this trend changed by 1 percent from 2022 to 2023.

Stop burning fossil fuels? Well, not quite. But the “save the whales” meme is embedded in the verbiage. Confused? That may be the point. What’s the fix? Hire McKinsey to help clarify your thinking.

AI plays the big gorilla in the monograph. The first expensive, hairy, yet promising aspect of smart software is replacing humans. The McKinsey report asserts:

Generative AI describes algorithms (such as ChatGPT) that take unstructured data as input (for example, natural language and images) to create new content, including audio, code, images, text, simulations, and videos. It can automate, augment, and accelerate work by tapping into unstructured mixed-modality data sets to generate new content in various forms.

Yep, smart software can produce reports like this one: Faster, cheaper, and good enough. Just think of the reports the team can do.

The third trend I want to address is digital trust and cyber security. Now the cyber crime world is a relatively specialized one. We know from the CrowdStrike misstep that experts in cyber security can wreak havoc on a global scale. Furthermore, we know that there are hundreds of cyber security outfits offering smart software, threat intelligence, and very specialized technical services to protect their clients. But McKinsey appears to imply that its band of 100 trend identifiers is hip to this. Here’s what the dorm-room brainstormers output:

The digital trust and cybersecurity trend encompasses the technologies behind trust architectures and digital identity, cybersecurity, and Web3. These technologies enable organizations to build, scale, and maintain the trust of stakeholders.

Okay.

I want to mention that other trends, ranging from blasting into space to software development, appear in the list. What strikes me as a bit of an oversight is that smart software is going to be woven into the fabric of the other trends. What? Well, software is going to surf on AI outputs. And big boy rockets, not the duds like the Seattle outfit produces, use assorted smart algorithms to keep the system from burning up or exploding… most of the time. Not perfect, but better, faster, and cheaper than CalTech grads solving equations and rigging cybernetics with wire and a soldering iron.

Net net: This trend report is a sales document. Its purpose is to cause an organization familiar with McKinsey and the organization’s own shortcomings to hire McKinsey to help out with these big problems. The data source is the dorm room. The analysts are cherry picked. The tone is quasi-authoritative. I have no problem with marketing material. In fact, I don’t have a problem with the McKinsey-generated list of trends. That’s what McKinsey does. What the firm does not do is to think about the downstream consequences of their recommendations. How do I know this? Returning from a lunch with some friends in rural Illinois, I spotted two opioid addicts doing the droop.

Stephen E Arnold, August 5, 2024

The Big Battle: Another WWF Show Piece for AI

August 2, 2024

This essay is the work of a dumb humanoid. No smart software required.

The Zuck believes in open source. It is like Linux. Boom. Market share. OpenAI believes in closed source (for now). Snap. You have to pay to get the good stuff. The argument about proprietary versus open source has been plodding along like Russia’s special operation for a long time. A typical response, in my opinion, is that open source is great because it allows a corporate interest to get cheap traction. Then with a surgical or not-so-surgical move, the big outfit co-opts the open source project. Boom. Semi-open source with a price tag becomes a competitive advantage. Proprietary software can be given away, licensed, or made available by subscription. Open source creates opportunities for training, special services, and feeling good about the community. But in the modern world of high-technology feeling good comes with sustainable flows of revenue and opportunities to raise prices faster than the local grocery store.

Where does open source software come from? Many students demonstrate their value by coding something useful to another. Thanks, Open AI. Good enough.

I read “Consider the Llama: Are Closed Source AI Models Doomed?” The write up is good. It contains a passage which struck me as interesting; to wit:

OpenAI, Anthropic and the like—companies that sell access to AI models. These companies inherently require their products to be much better than open source in order to up-charge. They also don’t have some other product they sell that gets improved with better AI overall.

In my opinion, in the present business climate, the hope that a high-technology product gets better is an interesting one. The idea of continual improvement, however, is not part of the business culture of high-technology companies engaged in smart software. At this time, cooking up a model which can be used to streamline or otherwise enhance an existing activity is Job One. The first outfit to generate substantial revenue from artificial intelligence will have an advantage. That doesn’t mean the outfit won’t fail, but if one considers the requirements to play with a reasonable probability of winning the AI game, smart software costs money.

In the world of online, a company or open source foundation which delivers a product or service which attracts large numbers of users has an advantage. One “play” can shift the playing field, not just win the game. What’s going on at this time, in my opinion, is that some outfits understand the advantage of winning the equivalent of a WWF (World Wrestling Federation) show piece: it allows the “winner take all” or at least the “winner takes two-thirds” of the market.

Monopolies (real or imagined) with lots of money have an advantage. Open source smart software has to have money from somewhere; otherwise, the odds of producing a winning service drop. If a large outfit with cash goes open source, that is a bold chess move which other outfits cannot afford to make. The feel good, community aspect of a smart software solution that can be used in a large number of use cases is going to fade quickly when any money on the table is taken by users who neither contribute, pay for training, nor hire great open source coders as consultants. Serious players just take the software, innovate, and lock up the benefits.

“Who would do this?” some might ask.

How about China, Russia, or some nation state not too interested in the Silicon Valley way? How about an entrepreneur in Armenia or one of the Stans who wants to create a novel product or service and charge for it? Sure, US-based services may host the product or service, but the actual big bucks flow to the outfit who keeps the technology “secret”?

At this time, US companies which make high-value software available for free to anyone who can connect to the Internet and download a file are not helping American business. You may disagree. But I know that there are quite a few organizations (commercial and governmental) who think the US approach to open source software is just plain dumb.

Wrapping up an important technology with do-goodism and mostly faux hand waving about the community creates two things:

- An advantage for commercial enterprises who want to thwart American technical influence

- Free intelligence for nation-states who would like nothing more than to convert the US into a client republic.

I did a job for a bunch of venture people who were into the open source religion. The reality is that at this time an alleged monopoly like Google can use its money and control of information flows to cripple other outfits trying to train their systems. On the other hand, companies who just want AI to work may become captive to an enterprise software vendor who is also an alleged monopoly. The companies funded by this firm have little chance of producing sustainable revenue. The best exits will be gift wrapping the “innovation” and selling it to another group of smart software-hungry investors.

Does the world need dozens of smart software “big dogs”? The answer is, “No.” At this time, the US is encouraging companies to make great strides in smart software. These are taking place. However, the rest of the world is learning and may have little or no desire to follow the open source path to the big WWF face off in the US.

The smart software revolution is one example of how America’s technology policy does not operate in a way that will cause our adversaries to do anything but download, enhance, build on, and lock up increasingly smarter AI systems.

From my vantage point, it is too late to undo the damage from the wildness of the last few years. The big winners in open source are not the individual products. Like the WWF shows, the winner is the promoter. Very American and decidedly different from what those in other countries might expect or want. Money, control, and power are more important than the open source movement. Proprietary may be that group’s preferred approach. Open source is software created by computer science students to prove they can produce code that does something. The “real” smart software is quite different.

Stephen E Arnold, August 2, 2024

Fancy Cyber Methods Are Useless Against Insider Threats

August 2, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

In my lectures to law enforcement and intelligence professionals, I end the talks with one statement: “Do not assume. Do not reduce costs by firing experienced professionals. Do not ignore human analyses of available information. Do not take short cuts.” Cyber security companies are often like the mythical kids of the village shoemaker. Those who can afford to hire the shoemaker have nifty kicks and slides. Those without resources have almost useless footwear.

Companies in the security business often have an exceptionally high opinion of their capabilities and expertise. I think of this as the Google Syndrome or what some have called by less salubrious names. The idea is that one is just so smart, nothing bad can happen here. Yeah, right.

An executive answers questions about a slight security misstep. Thanks, Microsoft Copilot. You have been there and done that I assume.

I read “North Korean Hacker Got Hired by US Security Vendor, Immediately Loaded Malware.” The article is a reminder that outfits in the OSINT, investigative, and intelligence business can make incredibly interesting decisions. Some of these lead to quite significant consequences. This particular case example illustrates how a hiring process using humans who are really smart and dedicated can be fooled, duped, and bamboozled.

The write up explains:

KnowBe4, a US-based security vendor, revealed that it unwittingly hired a North Korean hacker who attempted to load malware into the company’s network. KnowBe4 CEO and founder Stu Sjouwerman described the incident in a blog post yesterday, calling it a cautionary tale that was fortunately detected before causing any major problems.

I am a dinobaby, and I translated the passage to mean: “We hired a bad actor but, by the grace of the Big Guy, we avoided disaster.”

Sure, sure, you did.

I would suggest you know only that you trapped one instance of the person’s behavior. You may not know, and may never know, what that individual told a colleague in North Korea or another country, or what the bad actor said or emailed from a coffee shop using a contact’s computer. You may never know what business processes the person absorbed, converted to an encrypted message, and forwarded via a burner phone to a pal in a nation-state whose interests are not aligned with America’s.

In short, the cyber security company dropped the ball. It need not feel too bad. One of the companies I worked for early in my 60-year working career hired a person who dumped top secrets into journalists’ laps. Last week a person I knew was complaining about Delta Airlines, which was shown to be quite addled in the wake of the CrowdStrike misstep.

What’s the fix? Go back to how I end my lectures. Those in the cyber security business need to be extra vigilant. The idea that “we are so smart, we have the answer” is an example of a mental short cut. The fact is that the company KnowBe4 did not. It is lucky it KnewAtAll. Some tips:

- Seek and hire vetted experts

- Question procedures and processes in “before action” and “after action” incidents

- Do not rely on assumptions

- Do not believe the outputs of smart software systems

- Invest in security instead of fancy automobiles and vacations.

Do these suggestions run counter to your business goals and your image of yourself? Too bad. Life is tough. Cyber crime is the growth business. Step up.

Stephen E Arnold, August 2, 2024

Survey Finds Two Thirds of Us Believe Chatbots Are Conscious

August 2, 2024

Well this is enlightening. TechSpot reports, “Survey Shows Many People Believe AI Chatbots Like ChatGPT Are Conscious.” And by many, writer Rob Thubron means two-thirds of those surveyed by researchers at the University of Waterloo. Two-thirds! We suppose it is no surprise the general public has this misconception. After all, even an AI engineer was famously convinced his company’s creation was sentient. We learn:

“The survey asked 300 people in the US if they thought ChatGPT could have the capacity for consciousness and the ability to make plans, reason, feel emotions, etc. They were also asked how often they used OpenAI’s product. Participants had to rate ChatGPT responses on a scale of 1 to 100, where 100 would mean absolute confidence that ChatGPT was experiencing consciousness, and 1 absolute confidence it was not. The results showed that the more someone used ChatGPT, the more they were likely to believe it had some form of consciousness. ‘These results demonstrate the power of language,’ said Dr. Clara Colombatto, professor of psychology at Waterloo’s Arts faculty, ‘because a conversation alone can lead us to think that an agent that looks and works very differently from us can have a mind.’”

That is a good point. And these “agents” will only get more convincing even as more of us interact with them more often. It is encouraging that some schools are beginning to implement AI Literacy curricula. These programs include important topics like how to effectively work with AI, when to double-check its conclusions, and a rundown of ethical considerations. More to the point here, they give students a basic understanding of what is happening under the hood.

But it seems we need a push for adults to educate themselves, too. Even a basic understanding of machine learning and LLMs would help. It will take effort to thwart our natural tendency to anthropomorphize, which is reinforced by AI hype. That is important, because when we perceive AI to think and feel as we do, we change how we interact with it. The write-up notes:

“The study, published in the journal Neuroscience of Consciousness, states that this belief could impact people who interact with AI tools. On the one hand, it may strengthen social bonds and increase trust. But it may also lead to emotional dependence on the chatbots, reduce human interactions, and lead to an over-reliance on AI to make critical decisions.”

Soon we might even find ourselves catering to the perceived needs of our software (or the actual goals of the firms that make it) instead of using it as an inanimate tool. Is that a path we really want to go down? Is it too late to avoid it?

Cynthia Murrell, August 2, 2024