Smart Software Project Road Blocks: An Up-to-the-Minute Report

October 1, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t2_thumb_thumb_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I worked through a 22-page report by SQREAM, a next-gen data services outfit with GPUs. (You can learn more about the company at this buzzword-dense link.) The title of the report is:

The report is a marketing document, but it contains some thought-provoking content. The “report” was “administered online by Global Surveyz [sic] Research, an independent global research firm.” The explanation of the methodology was brief, but I don’t want to drag anyone through the basics of Statistics 101. As I recall, few cared and were often good customers for my class notes.

Here are three highlights:

- Smart software and services cause sticker shock.

- Cloud spending by the survey sample is going up.

- And the killer statement: 98 percent of the machine learning projects fail.

Let’s take a closer look at the astounding assertion about the 98 percent failure rate.

The stage is set in the section “Top Challenges Pertaining to Machine Learning / Data Analytics.” The report says:

It is therefore no surprise that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today (41%), followed by the unsatisfactory speed of this process (32%), too much time required by teams (14%) and poor data quality (13%).

The conclusion the authors of the report draw is that companies should hire SQREAM. That’s okay, no surprise because SQREAM ginned up the study and hired a firm to create an objective report, of course.

So money is the Number One issue.

Why do machine learning projects fail? We know the answer: Resources or money. The write up presents as fact:

The top contributing factor to ML project failures in 2023 was insufficient budget (29%), which is consistent with previous findings – including the fact that “budget” is the top challenge in handling and analyzing data at scale, that more than two-thirds of companies experience “bill shock” around their data analytics processes at least quarterly if not more frequently, that the total cost of analytics is the aspect companies are most dissatisfied with when it comes to their data stack (Figure 4), and that companies consider the high costs involved in ML experimentation to be the primary disadvantage of ML/data analytics today.

I appreciated the inclusion of the costs of data “transformation.” Glib smart software wizards push aside the hassle of normalizing data so the “real” work can get done. Unfortunately, the costs of fixing up source data are often another cause of “sticker shock.” The report says:

Data is typically inaccessible and not ‘workable’ unless it goes through a certain level of transformation. In fact, since different departments within an organization have different needs, it is not uncommon for the same data to be prepared in various ways. Data preparation pipelines are therefore the foundation of data analytics and ML….

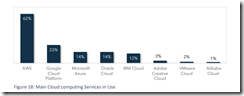

In the final pages of the report a number of graphs appear. Here’s one that stopped me in my tracks:

Users of Amazon Web Services made up 62 percent of the sample. Number 2 was Google Cloud at 23 percent. And in third place, quite surprisingly, was Microsoft Azure at 14 percent, tied with Oracle. A question which occurred to me is: “Perhaps the focus on sticker shock is a reflection of Amazon’s pricing, not just people and overhead functions?”

I will have to wait until more data becomes available to me to determine if the AWS skew and the report findings are normal or outliers.

Stephen E Arnold, October 1, 2024