AI Makes Stuff Up and Lies. This Is New Information?

December 23, 2024

The blog post is the work of a dinobaby, not AI.

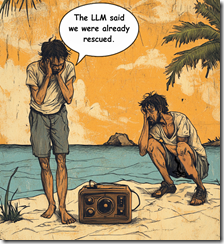

I spotted “Alignment Faking in Large Language Models.” My initial reaction was, “This is new information?” and “Have the authors forgotten about hallucination?” The original article from Anthropic sparked another essay. This one appeared in Time Magazine (online version). Time’s article was titled “Exclusive: New Research Shows AI Strategically Lying.” I like the “strategically lying,” which implies that there is some intent behind the prevarication. Since smart software reflects its developers’ use of fancy math and the numerous knobs and levers those developers can adjust at the same time the model is gobbling up information and “learning,” the notion of “strategically lying” struck me as interesting.

Thanks MidJourney. Good enough.

What strategy is implemented? Who thought up the strategy? Is the strategy working? These were the questions which occurred to me. The Time essay said:

experiments jointly carried out by the AI company Anthropic and the nonprofit Redwood Research, shows a version of Anthropic’s model, Claude, strategically misleading its creators during the training process in order to avoid being modified.

This suggests that the people assembling the algorithms and training data, configuring the system, twiddling the administrative settings, and doing technical manipulations were not imposing a strategy. The smart software was cooking up a strategy. Who will be next to say, like the former Google engineer, that the system is alive? It’s sci-fi time, I suppose.

The write up pointed out:

Researchers also found evidence that suggests the capacity of AIs to deceive their human creators increases as they become more powerful.

That is an interesting idea. Pumping more compute and data into a model gives it a greater capacity to manipulate its outputs to fool humans who are eager to grab something that promises to make life easier and the user smarter. If data about the US education system’s efficacy are accurate, Americans are not doing too well in the reading, writing, and arithmetic departments. Therefore, discerning strategic lies might be difficult.

The essay concluded:

What Anthropic’s experiments seem to show is that reinforcement learning is insufficient as a technique for creating reliably safe models, especially as those models get more advanced. Which is a big problem, because it’s the most effective and widely-used alignment technique that we currently have.

What’s this “seem”? Large language models using transformer methods crafted by Google output baloney some of the time. Google itself had to regroup after the “glue cheese to pizza” suggestion.

Several observations:

- Smart software has become the technology more important than any other. The problem is that its outputs are often wonky and now the systems are befuddling the wizards who created and operate them. What if AI is like a carnival ride that routinely injures those looking for kicks?

- AI is finding its way into many applications, but the resulting revenue has frayed some investors’ nerves. The fix is to go faster and win to reach the revenue goal. This frenzy for payoff has been building since early 2024, but the costs of AI remain brutally high.

- The behavior of large language models is not understood by some of their developers. Does this seem like a problem?

Net net: “Seem?” One lies or one does not.

Stephen E Arnold, December 23, 2024