Apple Emulates the Timnit Gebru Method

October 26, 2021

Remember Dr. Timnit Gebru. This individual was the researcher who apparently did not go along with the tension flow related to Google’s approach to snorkeling. (Don’t get the snorkel thing? Yeah, too bad.) The solution was to exit Dr. Gebru and move in a Googley manner forward.

Now the Apple “we care about privacy” outfit appears to have reached a “me too moment” in management tactics.

Two quick examples:

First, the very Silicon Valley Verge published “Apple Just Fired a Leader of the #AppleToo Movement.” I am not sure what the AppleToo thing encompasses, but it obviously sparked the Timnit option. The write up says:

Apple has fired Janneke Parrish, a leader of the #AppleToo movement, amid a broad crackdown on leaks and worker organizing. Parrish, a program manager on Apple Maps, was terminated for deleting files off of her work devices during an internal investigation — an action Apple categorized as “non-compliance,” according to people familiar with the situation.

Okay, deletes are bad. I figured that out when Apple elected to get rid of the backspace key.

Second, Gizmodo, another Silicon Valley information service, revealed “Apple Wanted Her Fired. It Settled on an Absurd Excuse.” The write up reports:

The next email said she’d been fired. Among the reasons Apple provided, she’d “failed to cooperate” with what the company called its “investigatory process.”

Hasta la vista, Ashley Gjøvik.

Observations:

- The Timnit method appears to work well when females are involved in certain activities which run contrary to the Apple way. (Note that the Apple way includes flexibility in responding to certain requests from nation states like China.)

- The lack of information about the incidents is apparently part of the disappearing method. Transparency? Yeah, not so much in Harrod’s Creek.

- The one-two disappearing punch is fascinating. Instead of letting the dust settle, do the bang-bang thing.

Net net: Google’s management methods appear to be viral at least in certain management circles.

Stephen E Arnold, October 26, 2021

Google Issues Apology To Timnit Gebru

December 15, 2020

Timnit Gebru is one of the world’s leading experts on AI ethics. She formerly worked at Google, where she assembled one of the most diverse Google Brain research teams. Google decided to fire her after she refused to rescind a paper she wrote concerning the risks of deploying large language models. Venture Beat has details in the article: “Timnit Gebru: Google’s ‘Dehumanizing’ Memo Paints Me As An Angry Black Woman” and The Global Herald has an interview with Gebru: “Firing Backlash Led To Google CEO Apology: Timnit Gebru.”

Gebru states that the apology was not meant for her, but for the reactions Google received from the fallout of her firing. Gebru’s entire community of associates and friends stands behind her stance of not rescinding her research. She holds her firing up as an example of corporate censorship of unflattering research as well as sexism and racism.

Google painted Gebru as a stereotypical angry black woman and used her behavior as an excuse for her termination. I believe Gebru’s firing has little to do with racism and sexism. Google’s response has more to do with getting rid of a noncompliant cog in their machine, but in order to oust Gebru they relied on stereotypical means and gaslighting.

Google’s actions are disgusting. Organizations treat all types of women and men like this so they can save face and remove unsavory minions. Gaslighting is a typical way for organizations to downplay their bad actions and make the whistleblower the villain.

Gebru’s unfortunate situation is typical for many, but she offered this advice:

“What I want these women to know is that it’s not in your head. It’s not your fault. You are amazing, and do not let the gaslighting stop you. I think with gaslighting the hardest thing is there’s repercussions for speaking up, but there’s also shame. Like a lot of times people feel shame because they feel like they brought it upon themselves somehow.”

There are better options out there for Gebru and others in similar situations. Good luck to Gebru and others like her!

Whitney Grace, December 15, 2020

Gebru-Gibberish Gives Google Gastroenteritis

February 24, 2021

At the outset, I want to address Google’s Gebru-gibberish.

Definition: Gebru-gibberish refers to official statements from Alphabet Google about the personnel issues related to the departure of two female experts in artificial intelligence working on the ethics of smart software. Gebru-gibberish is similar to statements made by those in fear of their survival.

Gastroenteritis: Watch the ads on Fox News or CNN for video explanations: Adult diapers, incontinence, etc.

Psychological impact: Fear, paranoia, flight reaction, irrational aggressiveness. Feelings of embarrassment, failure, serious injury, and lots of time in the WC.

The details of the viral problem causing discomfort among the world’s most elite online advertising organization relate to the management of Dr. Timnit Gebru. To add to the need to keep certain facilities nearby, the estimable Alphabet Google outfit apparently dismissed Dr. Margaret Mitchell. The output from the world’s most sophisticated ad sales company was Gebru-gibberish. Now those words have characterized the shallowness of the Alphabet Google thing’s approach to smart software.

In order to appreciate the problem, take a look at “Underspecification Presents Challenges for Credibility in Modern Machine Learning.” Here’s the author listing and affiliation for the people who contributed to the paper available without cost on ArXiv.org:

The image is hard to read. Let me point out that the authors include more than 30 Googlers (who may become Xooglers in between dashes to the WC).

The paper is referenced in a chatty Medium write up called “Is Google’s AI Research about to Implode?” The essay raises an interesting possibility. The write up contains an interesting point, one that suggests that Google’s smart software may have some limitations:

Underspecification presents significant challenges for the credibility of modern machine learning.

Why the apparently illogical behavior with regard to Drs. Gebru and Mitchell?

My view is that the Gebru-gibberish released from Googzilla is directly correlated with the accuracy of the information presented in the “underspecification” paper. Sure, the method works in some cases, just as the 1998 Autonomy black box worked in some cases. However, to keep the accuracy high, significant time and effort must be invested. Otherwise, smart software evidences the charming characteristic of “drift”; that is, what was relevant before new content was processed is perceived as irrelevant or just incorrect in subsequent interactions.

What does this mean?

Small, narrow domains work okay. Larger content domains work less okay.

Heron Systems, using a variation of the Google DeepMind approach, was able to “kill” a human in a simulated dogfight. However, the domain was small and there were some “rules.” Perfect for smart software. The human top gun was dead fast. Larger domains like dealing with swarms of thousands of militarized and hardened unmanned aerial vehicles and a simultaneous series of targeted cyber attacks using sleeper software favored by some nation states mean that smart software will be ineffective.

What will Google do?

As I have pointed out in previous blog posts, the high school science club management method employed by Backrub has become the standard operating procedure at today’s Alphabet Google.

Thus, the question, “Is Google’s AI research about to implode?” is a good one. The answer is, “No.” Google has money; it has staff who toe the line; and it has its charade of an honest, fair, and smart online advertising system.

Let me suggest a slight change to the question; to wit: “Is Google at a tipping point?” The answer to this question is, “Yes.”

Gebru-gibberish is similar to the information and other outputs of Icarus, who flew too close to the sun and flamed out in a memorable way.

Stephen E Arnold, February 24, 2021

Google: Android and the Walled Garden

March 31, 2025

Dinobaby says, “No smart software involved. That’s for “real” journalists and pundits.”

In my little corner of the world, I do not see Google as “open.” One can toss around the idea 24×7, and I won’t change my mind. Despite its unusual approach to management, the company has managed to contain the damage from Xooglers’ yip yapping about the company. Xoogler.co is focused on helping people. I suppose there are versions of Sarah Wynn-Williams’ “Careless People” floating around. Few talk much about THE Timnit Gebru “parrot” paper. Google is, it seems, just not the buzz generator it was in 2006, the year the decline began to accelerate in my opinion.

We have another example of “circling the wagons” strategy. It is a doozy.

“Google Moves All Android Development Behind Closed Doors” reports with some “real” writing and recycling of Google generated slick talk an interesting shift in the world of the little green man icon:

Google had to merge the two branches, which lead to problems and issues, so Google decided it’s now moving all development of Android behind closed doors

How many versions of messaging apps did Google have before it decided that “let many flowers bloom” was not in line with the sleek profile the ageing Google wants to flaunt on Wall Street?

The article asks a good question:

…if development happens entirely behind closed doors, with only the occasional code drop, is the software in question really open source? Technically, the answer is obviously ‘yes’ – there’s no requirement that development take place in public. However, I’m fairly sure that when most people think of open source, they think not only of occasionally throwing chunks of code over the proverbial corporate walls, but also of open development, where everybody is free to contribute, pipe in, and follow along.

News flash from the dinobaby: Open source software, when bandied about by folks who don’t worry too much about their mom missing a Social Security check means:

- We don’t want to chase and fix bugs. Make it open source and let the community do it for free.

- We probably have coded up something that violates laws. By making it open source, we are really benefiting those other developers and creating opportunities for innovation.

- We can use the buzzword “open source” and jazz the VCs with a term that is ripe with promise for untold riches.

- A student thinks: I can make my project into open source and maybe it will help me get a job.

- A hacker thinks: I can get “cred” by taking my exploit and coding a version that penetration testers will find helpful and possibly not discover the backdoor.

I have not exhausted the kumbaya about open source.

It is clear that Google is moving in several directions, a luxury only Googzillas have:

First, Google says, “We will really, really, cross my fingers and hope to die, share code … just like always.”

Second, Google can add one more ox-drawn wagon to its defensive circle. The company will need it when the licensing terms for Android include some very special provisions. Of course, Google may be charitable and not add additional fees to its mobile OS.

Third, it can wave the “we good managers” flag.

Fourth, as the write up correctly notes:

…Darwin, the open source base underneath macOS and iOS, is technically open source, but nobody cares because Apple made it pretty much worthless in and of itself. Anything of value is stripped out and not only developed behind closed doors, but also not released as open source, ensuring Darwin is nothing but a curiosity we sometimes remember exists. Android could be heading in the same direction.

I think the “could” is a hedge. I penciled in “will.” But I am a dinobaby. What do I know?

Stephen E Arnold, March 31, 2025

Speed Up Your Loss of Critical Thinking. Use AI

February 19, 2025

While the human brain isn’t a muscle, its neurology does need to be exercised to maintain plasticity. When a human brain is rigid, it can’t function in a healthy manner. AI is harming brains by making them not think good says 404 Media: “Microsoft Study Finds AI Makes Human Cognition ‘Atrophied and Unprepared.’” You can read the complete Microsoft research report at this link. (My hunch is that this type of document would have gone the way of Timnit Gebru and the flying stochastic parrot, but that’s just my opinion, Hank, Advait, Lev, Ian, Sean, Dick, and Nick.)

Carnegie Mellon University and Microsoft researchers released a paper that says relying more heavily on generative AI can “result in the deterioration of cognitive faculties that ought to be preserved.”

Really? You don’t say! What else does this remind you of? How about watching too much television or playing too many videogames? These passive activities (arguable with videogames) stunt the development of brain gray matter and in a flight of Mary Shelley rhetoric make a brain rot! What else did the researchers discover when they studied 319 knowledge workers who self-reported their experiences with generative AI:

“ ‘The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,’ the researchers wrote. ‘Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.’”

By the way, we definitely love and absolutely believe data based on self reporting. Think of the mothers who asked their teens, “Where did you go?” The response, “Out.” The mothers ask, “What did you do?” The answer, “Nothing.” Yep, self reporting.

Does this mean generative AI is a bad thing? Yes and no. It’ll stunt the growth of some parts of the brain, but other parts will grow in tandem with the use of new technology. Humans adapt to their environments. As AI becomes more ingrained into society it will change the way humans think but will only make them sort of dumber [sic]. The paper adds:

“ ‘GenAI tools could incorporate features that facilitate user learning, such as providing explanations of AI reasoning, suggesting areas for user refinement, or offering guided critiques,’ the researchers wrote. ‘The tool could help develop specific critical thinking skills, such as analyzing arguments, or cross-referencing facts against authoritative sources. This would align with the motivation enhancing approach of positioning AI as a partner in skill development.’”

The key is to not become overly reliant on AI but also be aware that the tool won’t go away. Oh, when my mother asked me, “What did you do, Whitney?” I responded in the best self reporting manner, “Nothing, mom, nothing at all.”

Whitney Grace, February 19, 2025

Google and Its Smart Software: The Emotion Directed Use Case

July 31, 2024

This essay is the work of a dumb humanoid. No smart software required.

How different are the Googlers from those smack in the middle of a normal curve? Some evidence is provided to answer this question in the Ars Technica article “Outsourcing Emotion: The Horror of Google’s “Dear Sydney” AI Ad.” I did not see the advertisement. The volume of messages flooding through my channels each day has allowed me to develop what I call “ad blindness.” I don’t notice them; I don’t watch them; and I don’t care about the crazy content presentation which I struggle to understand.

A young person has to write a sympathy card. The smart software is encouraging the use of the word “feel.” This is a word foreign to the individual who wants to work for big tech someday. Thanks, MSFT Copilot. Do you have your hands full with security issues today?

Ars Technica watches TV and the Olympics. The write up reports:

In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone. “I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

What’s going on? The father wants to write something personal to his progeny. A Hallmark card may never be delivered from the US to France. The solution is an emessage. That makes sense. Essential services like delivering snail mail are like most major systems not working particularly well.

Ars Technica points out:

But I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

I find the article’s negative reaction to a Mad Ave-type of message play somewhat insensitive. Let’s look at this use of smart software from the point of view of a person who is at the right hand tail end of the normal distribution. The factors in this curve are compensation, cleverness as measured in a Google interview, and intelligence as determined by either what school a person attended, achievements when a person was in his or her teens, or solving one of the Courant Institute of Mathematical Sciences brain teasers. (These are shared at cocktail parties or over coffee. If you can’t answer, you pay the bill and never get invited back.)

Let’s run down the use of AI from this hypothetical right of loser viewpoint:

- What’s with this assumption that a Google-type person has experience with human interaction? Why not send a text even though your co-worker is at the next desk? Why waste time and brain cycles trying to emulate a Hallmark greeting card contractor’s phraseology? The use of AI is simply logical.

- Why criticize an alleged Googler or Googler-by-the-gig for using the company’s outstanding, quantumly supreme AI system? This outfit spends millions on running AI tests which allow the firm’s smart software to perform in an optimal manner in the messaging department. This is “eating the dog food one has prepared.” Think of it as quality testing.

- The AI system, running in the Google Cloud on Google technology is faster than even a quantumly supreme Googler when it comes to generating feel-good platitudes. The technology works well. Evaluate this message in terms of the effectiveness of the messaging generated by Google leadership with regard to the Dr. Timnit Gebru matter. Upper quartile of performance which is far beyond the dead center of the bell curve humanoids.

My view is that there is one positive from this use of smart software to message a partially-developed and not completely educated younger person. The Sundar & Prabhakar Comedy Act has been recycling jokes and bits for months. Some find them repetitive. I do not. I am fascinated by the recycling. The S&P Show has its fans just as Jack Benny does decades after his demise. But others want new material.

By golly, I think the Google ad showing Google’s smart software generating a parental note is a hoot and a great demo. Plus look at the PR the spot has generated.

What’s not to like? Not much if you are Googley. If you are not Googley, sorry. There’s not much that can be done except shove ads at you whenever you encounter a Google product or service. The ad illustrates the mental orientation of Google. Learn to love it. Nothing is going to alter the trajectory of the Google for the foreseeable future. Why not use Google’s smart software to write a sympathy note to a friend when his or her parent dies? Why not use Google to write a note to the dean of a college arguing that your child should be admitted? Why not let Google think for you? At least that decision would be intentional.

Stephen E Arnold, July 31, 2024

Googzilla: Pointing the Finger of Blame Makes Sense I Guess

June 13, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Here you are: The Thunder Lizard of Search Advertising. Pesky outfits like Microsoft have been quicker than Billy the Kid shooting drunken farmers when it comes to marketing smart software. But the real problem in Deadwood is a bunch of do-gooders turned into revolutionaries undermining the granite foundation of the Google. I have this information from an unimpeachable source: An alleged Google professional talking on a podcast. The news release titled “Google Engineer Says Sam Altman-Led OpenAI Set Back AI Research Progress By 5-10 Years: LLMs Have Sucked The Oxygen Out Of The Room” explains that the actions of OpenAI are causing the Thunder Lizard to wobble.

One of the team sets himself apart by blaming OpenAI and his colleagues, not himself. Will the sleek, entitled professionals pay attention to this criticism or just hear “OpenAI”? Thanks, MSFT Copilot. Good enough art.

Consider this statement in the cited news release:

He [an employee of the Thunder Lizard] stated that OpenAI has “single-handedly changed the game” and set back progress towards AGI by a significant number of years. Chollet pointed out that a few years ago, all state-of-the-art results were openly shared and published, but this is no longer the case. He attributed this change to OpenAI’s influence, accusing them of causing a “complete closing down of frontier research publishing.”

I find this interesting. One company, its deal with Microsoft, and that firm’s management meltdown produced a “complete closing down of frontier research publishing.” What about the Dr. Timnit Gebru incident about the “stochastic parrot”?

The write up included this gem from the Googley acolyte of the Thunder Lizard of Search Advertising:

He went on to criticize OpenAI for triggering hype around Large Language Models or LLMs, which he believes have diverted resources and attention away from other potential areas of AGI research.

However, DeepMind — apparently the nerve center of the one best way to generate news releases about computational biology — has been generating PR. That does not count because it is real world smart software, I assume.

But there are metrics to back up the claim that OpenAI is the Great Destroyer. The write up says:

Chollet’s [the Googler, remember?] criticism comes after he and Mike Knoop, [a non-Googler] the co-founder of Zapier, announced the $1 million ARC-AGI Prize. The competition, which Chollet created in 2019, measures AGI’s ability to acquire new skills and solve novel, open-ended problems efficiently. Despite 300 teams attempting ARC-AGI last year, the state-of-the-art (SOTA) score has only increased from 20% at inception to 34% today, while humans score between 85-100%, noted Knoop. [emphasis added, editor]

Let’s assume that the effort and money poured into smart software in the last 12 months boosted one key metric by 14 percentage points. Doesn’t that leave LLMs and smart software in general far, far behind the average humanoid?

But here’s the killer point:

… training ChatGPT on more data will not result in human-level intelligence.

Let’s reflect on the information in the news release.

- If the data are accurate, LLM-based smart software has reached a dead end. I am not sure the law suits will stop, but perhaps some of the hyperbole will subside?

- If these insights into the weaknesses of LLMs are valid, why has Google continued to roll out services based on a dead-end model, suffered assorted problems, and then demonstrated its management prowess by pulling back certain services?

- Who is running the Google smart software business? Is it the computationalists combining components of proteins or is the group generating blatantly wonky images? A better question is, “Is anyone in charge of non-advertising activities at Google?”

My hunch is that this individual is representing a percentage of a fractionalized segment of Google employees. I do not think a senior manager is willing to say, “Yes, I am responsible.” The most illuminating facet of the article is the clear cultural preference at Google: Just blame OpenAI. Failing that, blame the users, blame the interns, blame another team, but do not blame oneself. Am I close to the pin?

Stephen E Arnold, June 13, 2024

Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that its super sophisticated brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

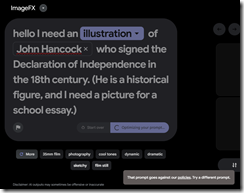

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who use their stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different sub reddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoil sport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a meta search system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024

A Decision from the High School Science Club School of Management Excellence

January 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I can’t resist writing about Inc. Magazine and its Google management articles. These are knee slappers for me. The write up causing me to chuckle is “Google’s CEO, Sundar Pichai, Says Laying Off 12,000 Workers Was the Worst Moment in the Company’s 25-Year History.” Zowie. A personnel decision coupled with late-night, anonymous termination notices — What’s not to like? What does the “real” news write up have to say?

Google had to lay off 12,000 employees. That’s a lot of people who had been showing up to work, only to one day find out that they’re no longer getting a paycheck because the CEO made a bad bet, and they’re stuck paying for it.

“Well, that clever move worked when I was in my high school’s science club. Oh, well, I will create a word salad to distract from my decision making. Heh, heh, heh,” says the distinguished corporate leader to a “real” news publication’s writer. Thanks, MSFT Copilot Bing thing. Good enough.

I love the “had.”

The Inc. Magazine story continues:

Still, Pichai defends the layoffs as the right decision at the time, saying that the alternative would have been to put the company in a far worse position. “It became clear if we didn’t act, it would have been a worse decision down the line,” Pichai told employees. “It would have been a major overhang on the company. I think it would have made it very difficult in a year like this with such a big shift in the world to create the capacity to invest in areas.”

And Inc Magazine actually criticizes the Google! I noted:

To be clear, what Pichai is saying is that Google decided to spend money to hire employees that it later realized it needed to invest elsewhere. That’s a failure of management to plan and deliver on the right strategy. It’s an admission that the company’s top executives made a mistake, without actually acknowledging or apologizing for it.

From my point of view, let’s focus on the word “worst.” Are there other Google management decisions which might be considered in evaluating Inc. Magazine’s and Sundar Pichai’s “worst”? Yep, I have a couple of items:

- A lawyer making babies in the Google legal department

- A Google VP dying with a contract worker on the Googler’s yacht as a result of an alleged substance subject to DEA scrutiny

- A Googler fond of being a glasshole giving up a wife and causing a soul mate to attempt suicide

- Firing Dr. Timnit Gebru and kicking off the stochastic parrot thing

- The presentation after Microsoft announced its ChatGPT initiative and the knee jerk Red Alert

- Proliferating duplicative products

- Sunsetting services with little or no notice

- The Google Map / Waze thing

- The messy Google Brain / DeepMind shebang

- The Googler who thought the Google AI was alive.

Wow, I am tired mentally.

But the reality is that I am not sure if anyone in Google management is particularly connected to the problems, issues, and challenges of losing a job in the midst of a Foosball game. But that’s the Google. High school science club management delivers outstanding decisions. I was in my high school science club, and I know the fine decisions our members made. One of those cost the life of one of our brightest stars. Stars make bad decisions, chatter, and leave some behind.

Stephen E Arnold, January 11, 2024

Microsoft at Davos: Is Your Hair on Fire, Google?

November 2, 2023

This essay is the work of a dumb humanoid. No smart software required.

Microsoft said at Davos in January 2023 that AI is the next big thing. The result? Google shifted into Code Red and delivered a wild and crazy demonstration of a deeply flawed AI system in February 2023. I think the phrase “Code Red” became associated with the state of panic within the comfy confines of Googzilla’s executive suites, real and virtual.

Sam AI-man made appearances, speaking to anyone who would listen and using words like “billion dollar investment,” efficiency, and work processes. The result? Googzilla itself found out that whether Microsoft’s brilliant marketing of AI worked or not, the Softies had just demonstrated that Microsoft, not the Google, was a “leader.” The new Microsoft could create revenue and credibility problems for the Versailles of technology companies.

Therefore, the Google tried to be nimble and make the myth of engineering prowess into reality, not a CGI version of Camelot. The PR Camelot featured Google as the Big Dog in the AI world. After all, Google had done the protein thing, an achievement which made absolutely no sense to 99 percent of the earth’s population. Some asked, “What the heck is a protein folder?” I want a Google Waze service that shows me where traffic cameras are.

The Google executives apparently went to meetings with their hair on fire.

A group of Google executives in a meeting with their hair on fire after Microsoft’s Davos AI announcement.

Google wanted teams to manifest AI prowess everywhere, lickety-split. Google reorganized. Google probed Anthropic and one Googler invested in the company. Dr. Prabhakar Raghavan demonstrated peculiar communication skills.

I had these thoughts after I read “Google Didn’t Rush Bard Chatbot to Beat Microsoft, Executive Says.” So what was this Code Red thing? Why has Google, the quantum supremacy and global leader in online advertising and protein folding, been lagging behind Microsoft? What is it now? Oh, yeah. Almost a year, a reorganization of the Google’s smart software group, and one of Google’s own employees explaining that AI could have a negative impact on the world. Oh, yeah, that guy is one of the founders of Google’s DeepMind AI group. I won’t mention the Googler who thought his chatbot was alive and ended up with an opportunity to find his future elsewhere. Right. Code Red. I want to note Timnit Gebru and the stochastic parrot, the Jeff Dean lateral arabesque, and the significant investment in a competitor’s AI technology. Right. Standard operating procedure for an online advertising company with a fairly healthy self concept about its excellence and droit du seigneur.

The Bloomberg article reports what I am assuming is “real,” actual factual information:

A senior Google executive disputed suggestions that the company rushed to release its artificial intelligence-based chatbot Bard earlier this year to beat a similar offering from rival Microsoft Corp. Testifying in Google’s defense at the Justice Department’s antitrust trial against the search giant, Elizabeth Reid, a vice president of search, acknowledged that Bard gave “a wrong answer” during its public unveiling in February. But she rejected the contention by government lawyer David Dahlquist that Bard was “rushed” out after Microsoft announced it was integrating generative AI into its own Bing search engine.

The real news story pointed out:

Google’s public demonstration of Bard underwhelmed investors. In one instance, Bard was asked about new discoveries from the James Webb Space Telescope. The chatbot incorrectly stated the telescope was used to take the first pictures of a planet outside the Earth’s solar system. While the Webb telescope was the first to photograph one particular planet outside the Earth’s solar system, NASA first photographed a so-called exoplanet in 2004. The mistake led to a sharp fall in Alphabet’s stock. “It’s a very subtle language difference,” Reid said in explaining the error in her testimony Wednesday. “The amount of effort to ensure that a paragraph is correct is quite a lot of work.” “The challenges of fact-checking are hard,” she added.

Yes, facts are hard in Hallucinationville. I think the concept I take away from this statement is that PR is easier than making technology work. But today Google and similar firms are caught in what I call a “close enough for horseshoes” mind set. Smart software, in my experience, is like my dear, departed mother’s not-quite-done pineapple upside down cakes. Yikes, those were a mess. I could eat the maraschino cherries but nothing else. The rest was deposited in the trash bin.

And where are the “experts” in smart search? Prabhakar? Danny? I wonder if they are embarrassed by the loss of their thick, lustrous hair. I think some of it may have been singed after the outstanding Paris demonstration and subsequent Mountain View baloney festivals. Was Google behaving like a child frantically searching for his mom at the AI carnival? I suppose when one is swathed in entitlements, cashing huge paychecks, and obfuscating exactly how the money is extracted from advertisers, reality is distorted.

Net net: Microsoft at Davos caused Google’s February 2023 Paris presentation. That mad scramble has caused me to conclude that talking about AI is a heck of a lot easier than delivering reliable, functional, and thought out products. Is it possible to deliver such products when one’s hair is on fire? Some data say, “Nope.”

Stephen E Arnold, November 2, 2023