What Happens When Understanding Technology Is Shallow? Weakness

February 14, 2025

Yep, a dinobaby wrote this blog post. Replace me with a subscription service or a contract worker from Fiverr. See if I care.

Yep, a dinobaby wrote this blog post. Replace me with a subscription service or a contract worker from Fiverr. See if I care.

I like this question. Even more satisfying is that a big name seems to have answered it. I refer to Gary Marcus’s essay “The Race for ‘AI Supremacy’ Is Over — at Least for Now.”

Here’s the key passage in my opinion:

China caught up so quickly for many reasons. One that deserves Congressional investigation was Meta’s decision to open source their LLMs. (The question that Congress should ask is, how pivotal was that decision in China’s ability to catch up? Would we still have a lead if they hadn’t done that? Deepseek reportedly got its start in LLMs retraining Meta’s Llama model.) Putting so many eggs in Altman’s basket, as the White House did last week and others have before, may also prove to be a mistake in hindsight. … The reporter Ryan Grim wrote yesterday about how the US government (with the notable exception of Lina Khan) has repeatedly screwed up by placating big companies and doing too little to foster independent innovation

The write up is quite good. What’s missing, in my opinion, is the link to a probe: a test to determine how a technology innovation, released as a not-so-stealthy open source project, can affect the US financial markets. The result was satisfying to the Chinese planners.

Also, the write up does not put the probe or “foray” in a strategic context. China wants to make certain its simple message “China smart, US dumb” gets into the world’s communication channels. That worked quite well.

Finally, the write up does not point out that the US approach to AI has given China an opportunity to demonstrate that it can borrow and refine with aplomb.

Net net: I think China is pulling a Shein and Temu in the AI and smart software sector.

Stephen E Arnold, February 14, 2025

AI and the Workplace: Change Will Happen, Just Not the Way Some Think

May 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “AI and the Workplace.” The essay contains observations related to smart software in the workplace. The idea is that savvy employees will experiment and try to use the technology within today’s work framework. I think that will happen just as the essay suggests. However, I think there is a larger, more significant impact that is easy to miss when one looks only at today’s workplace. Employees either [a] want to keep their job, [b] gain new skills and get a better job, or [c] quit to vegetate or become an entrepreneur. I understand.

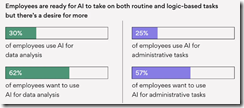

The data in the report make clear that some employees are what I call change flexible; that is, these motivated individuals differentiate themselves from others at work by learning and experimenting. Note that more than half the people in the “we don’t use AI” categories want to use AI.

These data come from the cited article and an outfit called Asana.

The other data in the report suggest that some employees get a productivity boost while others just chug along, occasionally getting some benefit from AI. The future, therefore, requires learning, double checking outputs, and accepting that it is early days for smart software. This makes sense; however, it misses where the big change will come.

In my view, the major shift will appear in companies founded now that AI is more widely available. These organizations will be crafted to make optimal use of smart software from the day the new idea takes shape. A new news organization might look like Grok News (the Elon Musk project) or the much-reviled AdVon. But even these outfits are anchored in the past. Grok News just substitutes smart software (which hopefully will not kill its users) for old work processes and outputs. AdVon was a “rip and replace” tool for Sports Illustrated. That did not go particularly well in my opinion.

The big job impact will be on new organizational setups with AI baked in. The types of people working at these organizations will not be from the lower 98 percent of the workforce pool. I think the majority of employees who once expected to work in information processing or knowledge work will be like a 58-year-old brand manager at a vape company. Job offers will not be easy to get, and new companies might opt for smart software and search engine optimization marketing. How many workers will that require? Maybe zero. Someone on Fiverr.com will do the job for a couple of hundred dollars a month.

In my view, new companies won’t need workers who are not in the top tier of some high value expertise. Who needs a consulting team when one bright person with knowledge of orchestrating smart software is able to do the work of a marketing department, a product design unit, and a strategic planning unit? In fact, there may not be any “employees” in the sense of workers at a warehouse or a consulting firm like Deloitte.

Several observations are warranted:

- Predicting downstream impacts of a technology unfamiliar to a great many people is tricky and sometimes impossible. Who knew social media would spawn a renaissance in getting tattooed?

- Visualizing how an AI-centric start up is assembled is a challenge. I submit it won’t look like an insurance company today. What does a Tesla repair station look like? The answer, “Not much.”

- Figuring out how to be one of the elite who gets a job means being perceived as “smart.” Unlike Alina Habba, I know that I cannot fake “smart.” How many people will work hard to maximize the return on their intelligence? The answer, in my experience, is, “Not too many, dinobaby.”

Looking at the future from within the framework of today’s datasphere distorts how one perceives impact. I don’t know what the future looks like, but it will have some quite different configurations than the companies today have. The future will arrive slowly and then become the foundation of a further evolution. What will the grandson of tomorrow’s AI firm look like? Beauty will be in the eye of the beholder.

Net net: Where will the never-to-be-employed find something meaningful to do?

Stephen E Arnold, May 15, 2024

Peak AI? Do You Know What Happened to Catharists? Quiz ChatGPT or Whatever

March 21, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

I read “Have We Reached Peak AI?” The question is an interesting one because some alleged monopolies are forming alliances with other alleged monopolies. Plus, wonderful managers from one alleged monopoly are joining another alleged monopoly to lead a new “unit” of that alleged monopoly. At the same time, outfits like the usually low profile Thomson Reuters suggested that it had an $8 billion war chest for smart software. My team and I cannot keep up with the announcements about AI in fields ranging from pharma to ransomware from mysterious operators under the control of wizards in China and Russia.

Thanks, MSFT Copilot. You did a good job on the dinobabies.

Let’s look at a couple of statements in the essay, which addresses the “peak AI” question.

I noticed that OpenAI is identified as an exemplar of a company that sticks to a script, avoids difficult questions, and gets velvet-glove treatment from otherwise pointy-fingernailed journalists. The point is well taken; however, attention does not require substance. The essay states:

OpenAI’s messaging and explanations of what its technology can (or will) do have barely changed in the last few years, returning repeatedly to “eventually” and “in the future” and speaking in the vaguest ways about how businesses make money off of — let alone profit from — integrating generative AI.

What if the goal of the interviews and the repeated assertions about OpenAI specifically and smart software in general is publicity and attention? Cut off the buzz for any technology and it loses its shine. Buzz is the oomph in the AI hot house. Who cares about Microsoft buying into games? Now who cares about Microsoft hooking up with OpenAI, Mistral, and Inflection? That’s what the meme life delivers. Games, sigh. AI, let’s go and go big.

Another passage in the essay snagged me:

I believe a large part of the artificial intelligence boom is hot air, pumped through a combination of executive bullshitting and a compliant media that will gladly write stories imagining what AI can do rather than focus on what it’s actually doing.

One of my team members accused me of FOMO when I told Howard to get us a Flipper. (Can one steal a car with a Flipper? The answer is, “Not without quite a bit of work.”) The FOMO was spot on. I had a “fear of missing out.” Canada wants to ban the gizmos. Hence, my request, “Get me a Flipper.” Most of the “technology” in the last decade is zipping along on marketing, PR, and YouTube videos. (I just watched a private YouTube video about intelware which incorporates lots of AI. Is the product available? Nope. But… soon. Let the marketing and procurement wheels begin turning.)

Journalists (often real ones) fall prey to FOMO. Just as I wanted a Flipper, the “real” journalists want to write about what’s apparently super duper important. The Internet is flagging. Quantum computing is old hat and won’t run in a mobile phone. The new version of Steve Gibson’s Spinrite is not catching the attention of blue chip investment firms. Even the enterprise search revivifier Glean is not magnetic like AI.

The issue for me is more basic than the “Peak AI” thesis; to wit, what is AI? No one wants to define it because it is a bit like “security.” The truth is that AI is a way to make money in what is a fairly problematic economic setting. A handful of companies are drowning in cash. Others are not sure they can keep the lights on.

The final passage I want to highlight is:

Eventually, one of these companies will lose a copyright lawsuit, causing a brutal reckoning on model use across any industry that’s integrated AI. These models can’t really “forget,” possibly necessitating a costly industry-wide retraining and licensing deals that will centralize power in the larger AI companies that can afford them.

I would suggest that Google has already been ensnared by the French regulators. AI faces an ongoing flow of legal hassles. These range from cash-starved publishers to the work-from-home illustrator who does drawings for a Six-Flags-Over-Jesus type of super church. Does anyone really want to get on the wrong side of a super church in (heaven forbid) Texas?

I think the essay raises a valid point: AI is a poster child of hype.

However, as a dinobaby, I know that technology is an important part of the post-industrial setup in the US of A. Too much money will be left on the table unless those savvy to revenue flows and stock upsides ignore the mish-mash of AI and push ahead. In an unregulated setting, people need and want the next big thing. Okay, it is here. Say “hello” to AI.

Stephen E Arnold, March 21, 2024

Making Chips: What Happens When Sanctions Spark Work Arounds

October 25, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Maybe the Japanese outfit Canon is providing an example of the knock-on effects of sanctions. On the other hand, maybe this is just PR. My hunch is that more information will become available in the months ahead. “Nanoimprint Lithography Semiconductor Manufacturing System That Covers Diverse Applications with Simple Patterning Mechanism” discloses:

On October 13, 2023, Canon announced today the launch of the FPA-1200NZ2C nanoimprint semiconductor manufacturing equipment, which executes circuit pattern transfer, the most important semiconductor manufacturing process.

“This might be important,” says a technologically oriented animal in rural Kentucky. Thanks, MidJourney, continue to descend gradiently.

The idea is printing very small traces of a substance, a bit like using a stamp. The application is part of the expensive and delicate process of whipping out modern chips.

The write up continues:

By bringing to market semiconductor manufacturing equipment with nanoimprint lithography (NIL) technology, in addition to existing photolithography systems, Canon is expanding its lineup of semiconductor manufacturing equipment to meet the needs of a wide range of users by covering from the most advanced semiconductor devices to the existing devices.

Several observations are warranted:

- Oh, oh. A new process may be applicable to modern chip manufacturing.

- The system and method may be of value to countries dealing with US sanctions.

- Clever folks find ways to do things that regulatory language cannot anticipate.

Is this development important even if the Canon announcement is a bit fluffy? Yep, because the information about the system and method provides important road signs on the information superhighway. Canon does cameras, owns some intelware technology, and now allegedly provides an alternative to the traditional way to crank out advanced semiconductors.

Stephen E Arnold, October 25, 2023

What Happens When Misinformation Is Sucked Up by Smart Software? Maybe Nothing?

February 22, 2023

I noted an article called “New Research Finds Rampant Misinformation Spreading on WhatsApp within Diasporic Communities.” The source is the Daily Targum. I mention this because the news source is the Rutgers University campus newspaper. The article provides some information about a study of misinformation on that lovable Facebook property WhatsApp.

Several points in the article caught my attention:

- Misinformation on WhatsApp caused people to be killed; Twitter did its part too

- There is an absence of fact checking

- There are no controls to stop the spread of misinformation

What is interesting about studies conducted by prestigious universities is that often the findings are neither novel nor surprising. In fact, nothing about social media companies’ reluctance to spend money or adopt ethical methods is new.

What are the consequences? Nothing much: Abusive behavior, social disruption, and, oh, one more thing, deaths.

Stephen E Arnold, February 22, 2023

Southwest Crash: What Has Been Happening to Search for Years Revealed

January 2, 2023

What’s the connection between the failure of Southwest Airlines’ technology infrastructure and search? Most people, including assorted content processing experts, would answer the question this way:

None. Finding information and making reservations are totally unrelated.

Fair enough.

“The Shameful Open Secret Behind Southwest’s Failure” does not reference finding information as the issue. We learn:

This problem — relying on older or deficient software that needs updating — is known as incurring “technical debt,” meaning there is a gap between what the software needs to be and what it is. While aging code is a common cause of technical debt in older companies — such as with airlines which started automating early — it can also be found in newer systems, because software can be written in a rapid and shoddy way, rather than in a more resilient manner that makes it more dependable and easier to fix or expand.

I think this is a reasonable statement. I suppose a reader with my interest in search and retrieval can interpret the comments as applicable to looking up who owns some of the domains hosted on Megahost.com or some similar service provider. With a little thought, the comment can be stretched to cover the failure some organizations have experienced when trying to index their internal content so that employees can find a PowerPoint a young sales professional used at a trade show presentation several weeks earlier.

My viewpoint is that the Southwest failure provides a number of useful insights into the fragility of the software which operates out of sight and out of mind until that software fails.

Here’s my list of observations:

- Failure is often a real life version of the adage “the straw that broke the camel’s back”. The idea is that good enough software chugs along until it simply does not work.

- Modern software cannot be quickly, easily, or economically fixed. Many senior managers believe that software wrappers and patches can get the camel back up and working.

- Patched systems may have hidden technical or procedural issues. A system may be returned to service, but it may harbor hidden gotchas; for example, the sales professional’s PowerPoint. The file may not be in the “system” and, therefore, cannot be found. No problem until a lawyer comes knocking about a disconnect between an installed system and what the sales professional asserted. Findability is broken by procedures, lack of comprehensive data collection, or an error importing a file. Sharing blame is not popular in some circles.

What’s this mean?

My view is that many systems and software work as intended; that is, well enough. No user is aware of certain flaws or errors, particularly when these are shared. Everyone lives with the error, assuming the mistake is the way something is. In search, if one looks for data about Megahost.com and the data are not available, it is easy to say, “Nothing to learn. Move on.” A rounding error in Excel. Move on. An enterprise search system which cannot locate a document? Just move on or call the author and ask for a copy.

The Southwest meltdown is important. The failure of the system makes clear the state of mission critical software. The problem exists in other systems as well, including tax systems, command and control systems, health care systems, and word processors which cannot reliably number items in a list.

An interesting and exciting 2023 may reveal other Southwest case examples.

Stephen E Arnold, January 2, 2023

What Happens When Wild West Innovation Operates without Barbed Wires to Corral the Doggies?

June 1, 2022

I have seen quite a few comments about a facial recognition company called PimEyes. My hunch is that ClearView.ai is happy to see that another magnet for commentary has surfaced. The write up I found interesting was “The Facial Recognition Search Engine Apocalypse Is Coming.” The cited article points to the New York Times’ exposé of a high tech outfit the NYT professionals did not know much about. Dispersing this cloud of unknowing allows for comments about the negatives associated with facial recognition. Security benefits related to entering a building with restricted access? Well, not a factor.

Here’s the comment I found in the cited article that edges closer to a substantive issue:

I’d never heard of PimEyes before, but suspect we’ll be hearing about it more going forward. I also suspect this is a losing game of whack-a-mole. Machine learning is clearly already good enough to do this with spooky accuracy, and it’s only going to get better. Should we try passing legislation to strictly regulate facial recognition search? Sure. But I suspect it’s futile, particularly given the global nature of the internet.

With few consequences and no government-generated guidelines, what will high school science club members do when they leave behind rocket motors and Raspberry Pi spy cameras? These clever lads and lassies do pretty much whatever they want.

Is that a good thing or a bad thing? My hunch is that guidelines, like the wonky sonnet form Willie Shakespeare followed, can improve innovation and possibly spill over into adulting thought about a particular capability. Right now, stampede those cows, partner! It may not be an apocalypse, but those hooves can do some damage to humanoids who are in the path of progress.

Stephen E Arnold, June 1, 2022

Silicon Valley Change? It Is Happening

March 21, 2022

I read a New York Magazine article which used the phrase “vibe shift.” This is a very hip write up, and the vibe shift is a spotted trend, not to be confused with a spotted crocuta.

I came across this story which has hopped on the vibe shift zoo train: “The Vibe Shift in Silicon Valley.” Now the locale becomes important. The table below contrasts the vibe which existed three years ago with the spotted trend of the shifted vibe:

| The Unshifted Vibe | The Shifted Vibe |
|---|---|
| Facebook is the “center” of the digital universe | TikTok, the China-affiliated outfit, is the new center of the universe |
| Info diffuses quickly | Info struggles to be diffused |
| Fix the Web | Replace the Web |
| Data are “mind control” | Data are a “personal liability” |
| The US is the big dog in tech regulation | Europe and Apple are the kings of the rules jungle |
| Tech destroys “our politics” | Tech harms children |

The article says:

Of course, there are many more shifts you could probably name that would support a full-time tech reporter at any publication: the heightened importance of chip manufacturing and innovation; the global supply chain; the post-COVID gig economy; and the decriminalization of psychedelics, which isn’t exactly tech but is definitely tech-adjacent. But when I think about my own coverage, these are the shifts that are guiding it: my evolving sense of where power is moving in tech and the surrounding culture.

What adaptations should one make? Here are a few suggestions:

- Watch TikToks then post your own TikToks in order to immerse and understand the new power center.

- Info does not diffuse. That’s interesting because in my upcoming National Cyber Crime lecture about open source intelligence, information is diffusing and quite a percentage of those data are helpful to investigators.

- I like the “replace” but is the alternative Web 3? How do those Web 3 apps work without ISPs and data centers? They don’t unless one is in a crowd and pals are using mobile based mesh methods. Yeah, rip and replace? Not likely.

- Mind control and a personal liability. Remember Scott McNealy’s observation: Privacy is dead. Get over it. Reality is different from the vibe.

- In the US, government has shifted responsibility for space flight, regulation, and community actions to for profit outfits. In Europe, a clown car of regulators are trying to tame the US outfits which have made less than positive contributions to social cohesion. That’s a responsible path for what and for whom?

- Tech has destroyed politics. Okay. Tech is harming children. Okay. I am not sure politics has been torn apart, and “children at risk” is a well-worn rallying point when certain entities want to contain human trafficking and related actions.

What is the situation in Silicon Valley? Here are my observations:

- The Wild West approach to business has irritated quite a few folks, and there is a backlash or techlash if one prefers hippy dippy jargon

- The high school science club approach to decision making has lost its charm. Example: Google is sponsoring an F1 vehicle and the company is probably unaware that two other content centric outfits used this “marketing” so senior executives could sniff fumes and rub shoulders with classy people. And the companies? Northern Light and Autonomy.

- The lack of ethical frameworks has allowed social media companies and third party data aggregators to “nudge” people for the purpose of enriching themselves and gaining influence. Yep, ethical behavior may be making a comeback.

- Many in Silicon Valley ignored the message in Jacques Ellul’s book Le bluff technologique. Short summary: Fixing problems with technology spawns new problems which people believe can be fixed by technologies. Ho ho ho.

Vibe shift? How about change emerging from those who are belatedly realizing the inherent problems of the Silicon Valley ethos.

Stephen E Arnold, March 21, 2022

Rogue in Vogue: What Can Happen When Specialized Software Becomes Available

October 25, 2021

I read “New York Times Journalist Ben Hubbard Hacked with Pegasus after Reporting on Previous Hacking Attempts.” I have no idea if the story is true or recounted accurately. The main point, as I see it, is that a person or group allegedly used the NSO Group tools to compromise a journalist’s mobile device.

The article concludes:

Hubbard was repeatedly subjected to targeted hacking with NSO Group’s Pegasus spyware. The hacking took place after the very public reporting in 2020 by Hubbard and the Citizen Lab that he had been a target. The case starkly illustrates the dissonance between NSO Group’s stated concerns for human rights and oversight, and the reality: it appears that no effective steps were taken by the company to prevent the repeated targeting of a prominent American journalist’s phone.

The write up makes clear one point I have commented upon in the past; that is, making specialized software and systems available without meaningful controls creates opportunities for problematic activity.

When specialized technology is developed using expertise and sometimes money and staff of nation states, making these tools widely available means a loss of control.

As access to and knowledge of specialized systems and methods diffuse, it becomes easier and easier to use specialized technology for purposes for which the innovations were not intended.

Now bad actors, introductory programming classes in many countries, individuals with agendas different from those of their employer, disgruntled software engineers, and probably a couple of old time programmers with a laptop in an elder care facility can:

- Engage in Crime as a Service

- Use a bot to poison data sources

- Access a target’s mobile device

- Conduct surveillance operations

- Embed obfuscated code in open source software components.

If the cited article is not accurate, it provides sufficient information to surface and publicize interesting ideas. If the write up is accurate, the control mechanisms in the countries actively developing and licensing specialized software are not effective in preventing misuse. For cloud services, the controls should be easier to apply.

Is every company, every nation, and every technology savvy individual a rogue? I hope not.

Stephen E Arnold, October 25, 2021

What Happens when an AI Debates Politics?

April 20, 2021

IBM machine-learning researcher Noam Slonim spent years developing a version of IBM’s Watson that he hoped could win a formal debate. The New Yorker describes his journey and the results in, “The Limits of Political Debate.” We learn of the scientist’s inspiration following Watson’s Jeopardy win and his request that the AI be given Scarlett Johansson’s voice (and why it was not). Writer Benjamin Wallace-Wells also tells us:

“The young machine learned by scanning the electronic library of LexisNexis Academic, composed of news stories and academic journal articles—a vast account of the details of human experience. One engine searched for claims, another for evidence, and two more engines characterized and sorted everything that the first two turned up. If Slonim’s team could get the design right, then, in the short amount of time that debaters are given to prepare, the machine could organize a mountain of empirical information. It could win on evidence.”

Ah, but evidence is just one part. Upon consulting with a debate champion, Slonim learned more about the very human art of argument. Wallace-Wells continues:

“Slonim realized that there were a limited number of ‘types of argumentation,’ and these were patterns that the machine would need to learn. How many? Dan Lahav, a computer scientist on the team who had also been a champion debater, estimated that there were between fifty and seventy types of argumentation that could be applied to just about every possible debate question. For I.B.M., that wasn’t so many. Slonim described the second phase of Project Debater’s education, which was somewhat handmade: Slonim’s experts wrote their own modular arguments, relying in part on the Stanford Encyclopedia of Philosophy and other texts. They were trying to train the machine to reason like a human.”

Did they succeed? That is (ahem) debatable. The system was put to the test against experienced debater Harish Natarajan in front of a human audience. See the article for the details, but in the end the human won—sort of. The audience sided with him, but the more Slonim listened to the debate the more he realized the AI had made the better case by far. Natarajan, in short, was better at manipulating his listeners.

Since this experience, Slonim has turned to using Project Debater’s algorithms to analyze arguments being made in the virtual public square. Perhaps, Wallace-Wells speculates, his efforts will grow into an “argument checker” tool much like the grammar checkers that are now common. Would this make for political debates that are more empirical and rational than the polarized arguments that now dominate the news? That would be a welcome change.

Cynthia Murrell, April 20, 2021