Stop Indexing! And Pay Up!

July 17, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “Apple, Nvidia, Anthropic Used Thousands of Swiped YouTube Videos to Train AI.” The write up appears in two online publications, presumably to make an already contentious subject more clicky. The assertion in the title is the equivalent of someone in Salem, Massachusetts, pointing at a widow and saying, “She’s a witch.” Those willing to take the statement at face value would take action: the “trials” held in colonial Massachusetts. My high school history teacher was a witchcraft trial buff. (I think his name was Elmer Skaggs.) I thought about his descriptions of the events. I recall his graphic depictions and analysis of what I recall as “dunking.” The idea was that if a person was a witch, then that person could be immersed one or more times. I think the idea had been popular in medieval Europe, but it was not a New World innovation. Me-too is a core way to create novelty. The witch could survive being immersed for a period of time. With proof, hanging or burning was the next step. The accused who died was obviously not a witch. That’s Boolean logic in a pure form in my opinion.

The Library in Alexandria burns in front of people who wanted to look up information, learn, and create more information. Tough. Once the cultural institution is gone, just figure out the square root of two yourself. Thanks, MSFT Copilot. Good enough.

The accusations and evidence in the article depict companies building large language models as candidates for a test to prove that they have engaged in an improper act. The crime is processing content available on a public network, indexing it, and using the data to create outputs. Since the late 1960s, digitizing information and making it more easily accessible was perceived as an important and necessary activity. The US government supported indexing and searching of technical information. Other fields of endeavor recognized that as the volume of information expanded, the traditional methods of sitting at a table, reading a book or journal article, making notes, analyzing the information, and then conducting additional research or writing a technical report were simply not fast enough. What worked in a medieval library was not a method suited to put a satellite in orbit or perform other knowledge-value tasks.

Thus, online became a thing. Remember, we are talking punched cards, mainframes, and clunky line printers. Then one day there was the Internet. The interest in broader access to online information grew, and by 1985, people recognized that online access was useful for many tasks, not just looking up information about nuclear power technologies, a project I worked on in the 1970s. Flash forward 50 years, and we are upon the moment one can read about the “fact” that Apple, Nvidia, Anthropic Used Thousands of Swiped YouTube Videos to Train AI.

The write up says:

AI companies are generally secretive about their sources of training data, but an investigation by Proof News found some of the wealthiest AI companies in the world have used material from thousands of YouTube videos to train AI. Companies did so despite YouTube’s rules against harvesting materials from the platform without permission. Our investigation found that subtitles from 173,536 YouTube videos, siphoned from more than 48,000 channels, were used by Silicon Valley heavyweights, including Anthropic, Nvidia, Apple, and Salesforce.

I understand the surprise some experience when they learn that a software script visits a Web site, processes its content, and generates an index. (A buzzy term today is large language model, but I prefer the simpler word index.)

I want to point out that for decades those engaged in making information findable and accessible online have processed content so that a user can enter a query and get a list of indexed items which match that user’s query. In the old days, one used Boolean logic which we met a few moments ago. Today a user’s query (the jazzy term is prompt now) is expanded, interpreted, matched to the user’s “preferences”, and a result generated. I like lists of items like the entries I used to make on a notecard when I was a high school debate team member. Others want little essays suitable for a class assignment on the Salem witchcraft trials in Mr. Skaggs’s class. Today another system can pass a query, get outputs, and then take another action. This is described by the in-crowd as workflow orchestration. Others call it, “taking a human’s job.”
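For readers who have never seen the old-school plumbing, here is a minimal sketch of what "enter a query and get a list of indexed items" means with Boolean logic. The three tiny documents and every function name are my own illustrative assumptions, not anything taken from the write up or from a production search system.

```python
from collections import defaultdict

def build_index(docs):
    """Build an inverted index: each lowercase term maps to the ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index

def boolean_and(index, *terms):
    """Return ids of documents containing every term."""
    postings = [index.get(t.lower(), set()) for t in terms]
    return set.intersection(*postings) if postings else set()

def boolean_or(index, *terms):
    """Return ids of documents containing at least one term."""
    return set().union(*(index.get(t.lower(), set()) for t in terms))

def boolean_not(index, all_ids, term):
    """Return ids of documents that do not contain the term."""
    return set(all_ids) - index.get(term.lower(), set())

# Hypothetical content objects, invented for this sketch.
DOCS = {
    1: "salem witch trials in colonial massachusetts",
    2: "nuclear power technologies report",
    3: "witch folklore in medieval europe",
}
INDEX = build_index(DOCS)

# witch AND salem
print(boolean_and(INDEX, "witch", "salem"))
# witch AND NOT salem
print(boolean_and(INDEX, "witch") & boolean_not(INDEX, DOCS, "salem"))
```

The point of the sketch is that the index is built once, and each query is answered by set operations on postings lists rather than by rereading the documents.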

My point is that for decades, the index and searching process has been without much innovation. Sure, software scripts can know when to enter a user name and password or capture information from Web pages that are transitory, disappearing in the blink of an eye. But it is still indexing over a network. The object remains to find information of utility to the user or another system.

The write up reports:

Proof News contributor Alex Reisner obtained a copy of Books3, another Pile dataset and last year published a piece in The Atlantic reporting his finding that more than 180,000 books, including those written by Margaret Atwood, Michael Pollan, and Zadie Smith, had been lifted. Many authors have since sued AI companies for the unauthorized use of their work and alleged copyright violations. Similar cases have since snowballed, and the platform hosting Books3 has taken it down. In response to the suits, defendants such as Meta, OpenAI, and Bloomberg have argued their actions constitute fair use. A case against EleutherAI, which originally scraped the books and made them public, was voluntarily dismissed by the plaintiffs. Litigation in remaining cases remains in the early stages, leaving the questions surrounding permission and payment unresolved. The Pile has since been removed from its official download site, but it’s still available on file sharing services.

The passage does a good job of making clear that most people are not aware of what indexing does, how it works, and why the process has become a fundamental component of many, many modern knowledge-centric systems. The idea is to find information of value to a person with a question, present relevant content, and enable the user to think new thoughts or write another essay about dead witches being innocent.

The challenge today is that anyone who has written anything wants money. The way online works is that for any single user’s query, the useful information constitutes a tiny, minuscule fraction of the information in the index. The cost of indexing and responding to the query is high, and those costs are difficult to control.

But everyone has to be paid for the information that individual “created.” I understand the idea, but the reality is that the reason indexing, search, and retrieval were invented, refined, and given numerous life extensions was to perform a core function: Answer a question or enable learning.

The write up makes it clear that “AI companies” are witches. The US legal system is going to determine who is a witch just like the process in colonial Salem. Several observations are warranted:

- What is a fundamental mechanism for information retrieval may be difficult to modify, replace, or re-invent in a quick, cost-efficient, and satisfactory manner. Digital information is loosey goosey; that is, it moves, slips, and slides either by an individual’s actions or a mindless system’s.

- Slapping fines and big price tags on what remains an access service will take time to have an impact. As the implications of the impact become more well known to those who are aggrieved, they may find that their own information is altered in a fundamental way. How many research papers are “original”? How many journalists recycle as a basic work task? How many children’s lives are lost when the medical reference system does not have the data needed to treat the kid’s problem?

- Accusing companies of behaving improperly is definitely easy to do. Many companies do ignore rules, regulations, and cultural norms. Engineering Index’s publisher learned that bootleg copies of printed Compendex indexes were available in China. What was Engineering Index going to do when I learned this almost 50 years ago? The answer was give speeches, complain to those who knew what the heck a Compendex was, and talk to lawyers. What happened to the Chinese content pirates? Not much.

I do understand the anger the essay expresses toward large companies doing indexing. To some, these outfits are witches. However, if the indexing of content is derailed, I would suggest there are downstream consequences. Some of those consequences will make zero difference to anyone. A government worker at a national lab won’t be able to find details of an alloy used in a nuclear device. Who cares? Make some phone calls? Ask around. Yeah, that will work until the information is needed immediately.

A student accustomed to looking up information on a mobile phone won’t be able to find something. The document is a 404 or the information returned is an ad for a Temu product. So what? The kid will have to go to the library, which one hopes will be funded, have printed material or commercial online databases, and a librarian on duty. (Good luck, traditional researchers.) A marketing team eager to get information about the number of Telegram users in Ukraine won’t be able to find it. The fix is to hire a consultant and hope those bright men and women have a way to get a number, a single number, good, bad, or indifferent.

My concern is that as the intensity of the objections about a standard procedure for building an index escalates, the entire knowledge environment is put at risk. I have worked in online since 1962. That’s a long time. It is amazing to me that the plumbing of an information economy has been ignored for a long time. What happens when the companies doing the indexing go away? What happens when those producing the government reports, the blog posts, or the “real” news cannot find the information needed to create information? And once some information is created, how is another person going to find it? Ask an eighth grader how to use an online catalog to find a fungible book. Let me know what you learn. Better yet, do you know how to use a Remac card retrieval system?

The present concern about information access troubles me. There are mechanisms to deal with online. But the reason content is digitized is to find it, to enable understanding, and to create new information. Digital information is like gerbils. Start with a couple of journal articles, and one ends up with more journal articles. Kill this access and you get what you wanted. You know exactly who is the Salem witch.

Stephen E Arnold, July 17, 2024

Are Experts Misunderstanding Google Indexing?

April 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google is not perfect. More and more people are learning that the mystics of Mountain View are working hard every day to deliver revenue. In order to produce more money and profit, one must use Rust to become twice as wonderful as a programmer who labors to make C++ sit up, bark, and roll over. This dispersal of the cloud of unknowing obfuscating the magic of the Google can be helpful. What’s puzzling to me is that what Google does catches people by surprise. For example, consider the “real” news presented in “Google Books Is Indexing AI-Generated Garbage.” The main idea strikes me as:

But one unintended outcome of Google Books indexing AI-generated text is its possible future inclusion in Google Ngram viewer. Google Ngram viewer is a search tool that charts the frequencies of words or phrases over the years in published books scanned by Google dating back to 1500 and up to 2019, the most recent update to the Google Books corpora. Google said that none of the AI-generated books I flagged are currently informing Ngram viewer results.

Thanks, Microsoft Copilot. I enjoyed learning that security is a team activity. Good enough again.

Indexing lousy content has been the core function of Google’s Web search system for decades. Search engine optimization generates information almost guaranteed to drag down how higher-value content is handled. If the flagship provides the navigation system to other ships in the fleet, won’t those vessels crash into bridges?

Remediating Google’s approach to indexing requires several basic steps. (I have in various ways shared these ideas with the estimable Google over the years. Guess what? No one cared or understood, and if a Googler did understand, that person did not want to increase overhead costs.) So what are these steps? I shall share them:

- Establish an editorial policy for content. Yep, this means that a system and method or systems and methods are needed to determine what content gets indexed.

- Explain the editorial policy and what a person or entity must do to get content processed and indexed by the Google, YouTube, Gemini, or whatever the mystics in Mountain View conjure into existence

- Include metadata with each content object so one knows the index date, the content object creation date, and similar information

- Operate in a consistent, professional manner over time. The “gee, we just killed that” is not part of the process. Sorry, mystics.

Let me offer several observations:

- Google, like any alleged monopoly, faces significant management challenges. Moving information within such an enterprise is difficult. For an organization with a Foosball culture, the task may be a bit outside the wheelhouse of most young people and individuals who are engineers, not presidents of fraternities or sororities.

- The organization is under stress. First, the pressure is financial because controlling the cost of the plumbing is a reasonably difficult undertaking. Second, there is technical pressure. Google itself made clear that it was in Red Alert mode and keeps adding flashing lights with each and every misstep the firm’s wizards make. These range from contentious relationships with mere governments to individual staff members who grumble via internal emails, angry Googler public utterances, or observed behavior at conferences. Body language does speak sometimes.

- The approach to smart software is remarkable. Individuals in the UK pontificate. The Mountain View crowd reassures and smiles — a lot. (Personally I find those big, happy looks a bit tiresome, but that’s a dinobaby for you.)

Net net: The write up does not address the issue that Google happily exploits. The company lacks the mental rigor setting and applying editorial policies requires. SEO is good enough to index. Therefore, fake books are certainly A-OK for now.

Stephen E Arnold, April 12, 2024

AI Shocker? Automatic Indexing Does Not Work

May 8, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I am tempted to dig into my more than 50 years of work in online and pull out a chestnut or two. I will not. Just navigate to “ChatGPT Is Powered by These Contractors Making $15 an Hour” and check out the allegedly accurate statements about the knowledge work a couple of people do.

The write up states:

… contractors have spent countless hours in the past few years teaching OpenAI’s systems to give better responses in ChatGPT.

The write up includes an interesting quote; to wit:

“We are grunt workers, but there would be no AI language systems without it,” said Savreux [an indexer tagging content for OpenAI].

I want to point out a few items germane to human indexers based on my experience with content about nuclear information, business information, health information, pharmaceutical information, and “information” information which thumbtypers call metadata:

- Human indexers, even when trained in the use of a carefully constructed controlled vocabulary, make errors, become fatigued and fall back on some favorite terms, and misunderstand the content and assign terms which will mislead when used in a query

- Source content — regardless of type — varies widely. New subjects or different spins on what seem to be known concepts mean that important nuances may be lost due to what is included in the available dataset

- New content often uses words and phrases which are difficult to understand. I try to note a few of the more colorful “new” words and bound phrases like softkill, resenteeism, charity porn, toilet track, and purity spirals, among others. In order to index a document in a way that allows one to locate it, knowing the term is helpful if there is a full text instance. If not, one needs a handle on the concept, which is an index term a system or a searcher knows to use. Relaxing the meaning (a trick of some clever outfits with snappy names) is not helpful

- Creating a training set, keeping it updated, and assembling the content artifacts is slow, expensive, and difficult. (That’s why some folks have been seeking short cuts for decades. So far, humans still become necessary.)

- Reindexing, refreshing, or updating the digital construct used to “make sense” of content objects is slow, expensive, and difficult. (Ask an Autonomy user from 1998 about retraining in order to deal with “drift.” Let me know what you find out. Hint: The same issues arise from popular mathematical procedures no matter how many buzzwords are used to explain away what happens when words, concepts, and information change.)

Are there other interesting factoids about dealing with multi-type content? Sure there are. Wouldn’t it be helpful if those creating the content applied structure tags, abstracts, lists of entities and their definitions within the field or subject area of the content, and pointers to sources cited in the content object?

Let me know when blog creators, PR professionals, and TikTok artists embrace this extra work.

Pop quiz: When was the last time you used a controlled vocabulary classification code to disambiguate airplane terminal, computer terminal, and terminal disease? How does smart software do this, pray tell? If the write up and my experience are on the same wave length (not surfing wave but frequency wave), a subject matter expert, trained index professional, or software smarter than today’s smart software is needed.
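For those who skipped the pop quiz, here is a rough sketch of why a classification code disambiguates "terminal" when full text cannot. The codes, the vocabulary, and the three records are hypothetical inventions for illustration; no real database uses these identifiers. The sketch assumes a human indexer has already assigned the codes, which is the slow and expensive part.

```python
# Hypothetical controlled vocabulary: classification code -> preferred term.
VOCABULARY = {
    "TRN-100": "airplane terminal",
    "CMP-200": "computer terminal",
    "MED-300": "terminal disease",
}

# Hypothetical records. The "codes" field stands in for tags assigned by a trained indexer.
RECORDS = [
    {"id": 1, "text": "Delays reported at the new terminal", "codes": {"TRN-100"}},
    {"id": 2, "text": "Configuring a serial terminal", "codes": {"CMP-200"}},
    {"id": 3, "text": "Hospice care for terminal patients", "codes": {"MED-300"}},
]

def full_text_search(records, word):
    """Match on the word alone; every sense of the word comes back."""
    word = word.lower()
    return [r["id"] for r in records if word in r["text"].lower().split()]

def code_search(records, code):
    """Match on an assigned classification code; only one sense comes back."""
    if code not in VOCABULARY:
        raise KeyError(f"unknown classification code: {code}")
    return [r["id"] for r in records if code in r["codes"]]

print(full_text_search(RECORDS, "terminal"))  # all three records match
print(code_search(RECORDS, "CMP-200"))        # only the computer terminal record
```

Nothing in the text of the records tells the system which terminal is meant. The code does, and someone or something had to assign it correctly.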

Stephen E Arnold, May 8, 2023

The Google: Indexing and Discriminating Are Expensive. So Get Bigger Already

November 9, 2022

It’s Wednesday, November 9, 2022, only a few days until I hit 78. Guess what? Amidst the news of crypto currency vaporization, hand wringing over the adult decisions forced on high school science club members at Facebook and Twitter, and the weirdness about voting — there’s a quite important item of information. This particular datum is likely to be washed away in the flood of digital data about other developments.

What is this gem?

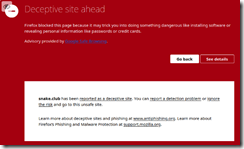

An individual has discovered that the Google is not indexing some Mastodon servers. You can read the story in a Mastodon post at this link. Don’t worry. The page will resolve without trying to figure out how to make Mastodon stomp around in the way you want it to. The link takes you to Stephen Brennan’s post on Snake.club.

The item is that Google does not index every Mastodon server. The Google, according to Mr. Brennan:

has decided that since my Mastodon server is visually similar to other Mastodon servers (hint, it’s supposed to be) that it’s an unsafe forgery? Ugh. Now I get to wait for a what will likely be a long manual review cycle, while all the other people using the site see this deceptive, scary banner.

So what?

Mr. Brennan notes:

Seems like El Goog has no problem flagging me in an instant, but can’t cleanup their mistakes quickly.

A few hours later Mr. Brennan reports:

However, the Search Console still insists I have security problems, and the “transparency report” here agrees, though it classifies my threat level as Yellow (it was Red before).

Is the problem resolved? Sort of. Mr. Brennan has concluded:

… maybe I need to start backing up my Google data. I could see their stellar AI/moderation screwing me over, I’ve heard of it before.

Why do I think this single post and thread is important? Four reasons:

- The incident underscores how an individual perceives Google as “the Internet.” Despite the use of a decentralized, distributed system, the mind set of some Mastodon users is that Google is the be-all and end-all. It’s not, of course. But if people forget that there are other quite useful ways of finding information, the desire to please, think, and depend on Google becomes the one true way. Outfits like Mojeek.com don’t have much of a chance of getting traction with those in the Google datasphere.

- Google operates on a close-enough-for-horseshoes or good-enough approach. The objective is to sell ads. This means that big is good. The Good Principle doesn’t do a great job of indexing Twitter posts, but Twitter is bigger than Mastodon in terms of eye balls. Therefore, it is a consequence of good-enough methods to shove small and low-traffic content output into an area surrounded by Google’s police tape. Maybe Google wants Mastodon users behind its police tape? Maybe Google does not care today but will if and when Mastodon gets bigger? Plus some Google advertisers may want to reach those reading search results citing Mastodon? Maybe? If so, Mastodon servers will become important to the Google for revenue, not content.

- Google does not index “the world’s information.” The system indexes some information, ideally information that will attract users. In my opinion, the once naive company allegedly wanted to achieve the world’s information. Mr. Page and I were on a panel about Web search as I recall. My team and I had sold to CMGI some technology which was incorporated into Lycos. That’s why I was on the panel. Mr. Page rolled out the notion of an “index to the world’s information.” I pointed out that indexing rapidly-expanding content and the capturing of content changes to previously indexed content would be increasingly expensive. The costs would be high and quite hard to control without reducing the scope, frequency, and depth of the crawls. But Mr. Page’s big idea excited people. My mundane financial and technical truths were of zero interest to Mr. Page and most in the audience. And today? Google’s management team has to work overtime to try to contain the costs of indexing near-real time flows of digital information. The expense of maintaining and reindexing backfiles is easier to control. Just reduce the scope of sites indexed, the depth of each crawl, the frequency certain sites are reindexed, and decrease how much old content is displayed. If no one looks at these data, why spend money on it? Google is not Mother Teresa and certainly not the Andrew Carnegie library initiative. Mr. Brennan brushed against an automated method that appears to say, “The small is irrelevant because advertisers want to advertise where the eyeballs are.”

- Google exists for two reasons: First, to generate advertising revenue. Why? None of its new ventures have been able to deliver advertising-equivalent revenue. But cash must flow and grow or the Google stumbles. Google is still what a Microsoftie called a “one-trick pony” years ago. The one-trick pony is the star of the Google circus. Performing Mastodons are not in the tent. Second, Google wants very much to dominate cloud computing, off-the-shelf machine learning, and cyber security. This means that the performing Mastodons have to do something that gets the GOOG’s attention.

Net net: I find it interesting to find examples of those younger than I discovering the precise nature of Google. Many of these individuals know only Google. I find that sad and somewhat frightening, perhaps more troubling than Mr. Putin’s nuclear bomb talk. Mr. Putin can be seen and heard. Google controls its datasphere. Like goldfish in a bowl, it is tough to understand the world containing that bowl and its inhabitants.

Stephen E Arnold, November 9, 2022

Deloitte Acquires Terbium Labs: Does This Mean Digital Shadows Won the Dark Web Indexing Skirmish?

July 7, 2021

Deloitte has been on a cybersecurity shopping spree this year. The giant auditing and consulting firm bought Root9B in January and CloudQuest at the beginning of June. Now, ZDNet reports, “Deloitte Scoops Up Digital Risk Protection Company Terbium Labs.” We like Terbium. Perhaps the acquisition will help Deloitte move past the unfortunate Autonomy affair. Writer Natalie Gagliordi tells us:

“The tax and auditing giant said Terbium Labs’ services — which include a digital risk protection platform that aims to helps organizations detect and remediate data exposure, theft, or misuse — will join Deloitte’s cyber practice and bolster its Detect & Respond offering suite. Terbium Labs’ digital risk platform leverages AI, machine learning, and patented data fingerprinting technologies to identify illicit use of sensitive data online. Deloitte said that adding the Terbium Labs business to its portfolio would enable the company to offer clients another way to continuously monitor for data exposed on the open, deep, or dark web. ‘Finding sensitive or proprietary data once it leaves an organization’s perimeter can be extremely challenging,’ said Kieran Norton, Deloitte Risk & Financial Advisory’s infrastructure solution leader, and principal. ‘Advanced cyber threat intelligence, paired with remediation of data risk exposure requires a balance of advanced technology, keen understanding of regulatory compliance and fine-tuning with an organization’s business needs and risk profile.’”

Among the Deloitte clients that may now benefit from Terbium tech are several governments and Fortune 500 companies. It is not revealed how much Deloitte paid for the privilege.

Terbium Labs lost the marketing fight with an outfit called Digital Shadows. That company has not yet been SPACed, acquired, or IPOed. There are quite a few Dark Web indexing outfits, and quite a bit of the Dark Web traffic appears to come from bots indexing the increasingly shrinky-dink obfuscated Web.

Is Digital Shadows’ marketing up to knocking Deloitte out of the game? Worth watching.

Cynthia Murrell, July 6, 2021

The Google and Web Indexing: An Issue of Control or Good, Old Fear?

March 29, 2021

I read “Google’s Got A Secret.” No kidding, but Google has many, many secrets. Most of them are unknown to today’s Googlers. After 20 plus years, even Xooglers are blissfully unaware of the “big idea,” the logic of low profiling data slurping, how those with the ability to make “changes” to search from various offices around the world can have massive consequences for those who “trust” the company, and the increasing pressure to control Googzilla’s appetite for cash. But enough of these long-ignored issues.

The “secret” in the article is that Google actively pushes as many buttons and pulls as many levers as its minions can to make it tough for competitors to “index” the publicly accessible Web. The write up states:

Only a select few crawlers are allowed access to the entire web, and Google is given extra special privileges on top of that.

The write up adds:

Only a select few crawlers are allowed access to the entire web, and Google is given extra special privileges on top of that. This isn’t illegal and it isn’t Google’s fault, but this monopoly on web crawling that has naturally emerged prevents any other company from being able to effectively compete with Google in the search engine market.

I am not in total agreement with these assertions. For example, consider the world of public relations distribution agencies. Do a search for “news release distribution” and you get a list of outfits. Now write a news release which reveals previously unknown and impactful information about a publicly traded company. These firms like PR Underground-type operations will explain that a one-day or longer review process is needed. The “reason” is that these firms have “rules of the road.” Is it possible that these distribution outlets are conforming to some vague guidelines imposed by Google? Crossing a blurry line means that the releases won’t be indexed. No indexing in Google means the agency failed and, of course, the information is effectively censored.

What about a company which publishes information on a consumer-type topic like automobiles? What if that Web site operator uses advertising from sources not linked to the Google combine? That Web site is indexed on a less frequent basis by the friendly Google crawler. Then those citations are suppressed for some unknown reason by a Google algorithm. (These are written by humans, but the Google never talks much about the capabilities of a person in a Google office laboring on core search to address issues.)

The ideas of the Knuckleheads’ Club are interesting. Implementing them, however, is going to require some momentum to overcome the Google habit which has become part of the online user’s DNA over the last 20 years.

The real questions remain. Is Google in control of the public Web? Are people fearful of irritating Mother Google (It’s not nice to anger Mother Google)?

Stephen E Arnold, March 29, 2021

Search Engines: Bias, Filters, and Selective Indexing

March 15, 2021

I read “It’s Not Just a Social Media Problem: How Search Engines Spread Misinformation.” The write up begins with a Venn diagram. My hunch is that quite a few people interested in search engines will struggle with the visual. Then there is the concept that typing in a search term returns results that are like loaded dice in a Manhattan craps game in Union Square.

The reasons, according to the write up, that search engines fall off the rails are:

- Relevance feedback or the Google-borrowed CLEVER method from IBM Almaden’s patent

- Fake stories which are picked up, indexed, and displayed as value infused.

The write up points out that people cannot differentiate between accurate, useful, or “factual” results and crazy information.

Okay, here’s my partial list of why Web search engines return flawed results:

- Stop words. Control the stop words and you control the info people can find

- Stored queries. Type what you want but get the results already bundled and ready to display.

- Selective spidering. The idea is that any index is a partial representation of the possible content. Instruct spiders to skip Web sites with information about peanut butter, and, bingo, no peanut butter information

- Spidering depth. Is the bad stuff deep in a Web site? Just limit the crawl to fewer links.

- Spider within a span. Is a marginal Web site linking to sites with info you want killed? Don’t follow links off a domain.

- Delete the past. Who looks at historical info? A better question, “What advertiser will pay to appear on old content?” Kill the backfile. Web indexes are not archives no matter what thumbtypers believe.
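Several of the items above (stop words, selective spidering, spidering depth, and spidering within a span) are easy to see in a toy crawler. What follows is a minimal sketch over a made-up, in-memory "Web" with no network access. The URLs, page text, and parameter names are all hypothetical, and this is not a description of how any real search engine implements its crawl policy. Stored queries and backfile deletion are not covered.

```python
from collections import deque
from urllib.parse import urlparse

# Hypothetical in-memory "Web": url -> (page text, outbound links).
WEB = {
    "https://a.example/": ("home page", [
        "https://a.example/pb",
        "https://a.example/deep1",
        "https://b.example/",
    ]),
    "https://a.example/pb": ("peanut butter recipes", []),
    "https://a.example/deep1": ("level one", ["https://a.example/deep2"]),
    "https://a.example/deep2": ("level two secrets", []),
    "https://b.example/": ("other domain news", []),
}

def crawl(seed, max_depth, same_domain_only, blocked_phrases, stop_words):
    """Breadth-first crawl that applies four editorial knobs while building an index."""
    seed_host = urlparse(seed).netloc
    seen = {seed}
    queue = deque([(seed, 0)])
    index = {}
    while queue:
        url, depth = queue.popleft()
        text, links = WEB.get(url, ("", []))
        # Selective spidering: pages containing a blocked phrase never reach the index.
        if not any(phrase in text for phrase in blocked_phrases):
            # Stop words: dropped terms cannot be searched for later.
            index[url] = [w for w in text.split() if w not in stop_words]
        # Spidering depth: do not follow links beyond the depth limit.
        if depth >= max_depth:
            continue
        for link in links:
            # Spider within a span: optionally ignore links that leave the seed's domain.
            if same_domain_only and urlparse(link).netloc != seed_host:
                continue
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return index

everything = crawl("https://a.example/", 5, False, [], set())
filtered = crawl("https://a.example/", 1, True, ["peanut butter"], {"page"})
print(sorted(everything))
print(sorted(filtered))
```

With the knobs turned, the same seed yields an index with no peanut butter, no second-level page, and nothing from the other domain. A user of the filtered index has no way to know what was left out.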

There are other methods available as well; for example, objectionable info can be placed in near line storage so that results from questionable sources display with latency or slow enough to cause the curious user to click away.

To sum up, some discussions of Web search are not complete or accurate.

Stephen E Arnold, March 15, 2021

GenX Indexing: Newgen Designs ID Info Extraction Software

March 9, 2021

As vaccines for COVID-19 roll out, countries are discussing vaccination documentation and how to include that with other identification paperwork. The World Health Organization does have some standards for vaccination documentation, but they are not universally applied. Adding yet another document for international travel makes things even more confusing. News Patrolling has a headline about new software that could make extracting ID information easier: “Newgen Launches AI And ML-Based Identity Document Extraction And Redaction Software.”

Newgen Software provides low code digital automation platforms developed a new ID extraction software: Intelligent IDXtract. Intelligent IDXtract extracts required information from identity documents and allows organizations to use the information for multiple reasons across industries. These include KYC verification, customer onboarding, and employee information management.

Intelligent IDXtract works by:

“Intelligent IDXtract uses a computer vision-based cognitive model to identify the presence, location, and type of one or more entities on a given identity document. The entities can include a person’s name, date of birth, unique ID number, and address, among others. The software leverages artificial intelligence and machine learning, powered by computer vision techniques and rule-based capabilities, to extract and redact entities per business requirements.”

The key features in the software will be seamless integration with business applications, entity recognition and localization, language independent localization and redaction of entity, trainable machine learning for customized models, automatic recognition, interpretation, location, and support for image capture variations.
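The “rule-based capabilities” mentioned in the quote can be illustrated with a short Python sketch. It is not Newgen’s implementation and does not touch the computer vision side; it assumes the text has already been pulled off the document, and the two patterns in `RULES` are hypothetical formats chosen for the example.

```python
import re

# Hypothetical rules: real identity documents vary by country and issuer.
RULES = {
    "date_of_birth": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "id_number": re.compile(r"\b[A-Z]{2}\d{6}\b"),
}


def extract_entities(text):
    """Return (entity type, matched value, start offset) for each rule hit."""
    found = []
    for entity_type, pattern in RULES.items():
        for match in pattern.finditer(text):
            found.append((entity_type, match.group(), match.start()))
    # Sort by position so the output follows the order on the document.
    return sorted(found, key=lambda item: item[2])


def redact(text, entity_types):
    """Replace every match of the selected entity types with a fixed mask."""
    for entity_type in entity_types:
        text = RULES[entity_type].sub("[REDACTED]", text)
    return text
```

Calling `redact(text, ["id_number"])` masks the ID number and leaves the date of birth readable, which is one reading of redaction “per business requirements.”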

Hopefully Intelligent IDXtract will streamline processes that require identity documentation as well as vaccine documentation.

Whitney Grace, March 9, 2021

Smartlogic: Making Indexing a Thing

May 29, 2020

Years ago, one of the wizards of Smartlogic visited the DarkCyber team. The group numbered about seven of my loyal researchers. These were people who had worked on US government projects, analyses for now disgraced banks in NYC, and assorted high technology firms. Was the world’s largest search system in this list? Gee, I don’t recall.

In that presentation, Smartlogic’s wizard explained that indexing, repositioned as tagging, was important. There were examples of the value of metatagging (presumably a more advanced form of the 40 year old classification codes used in the ABI/INFORM database since, what, 1983?). Smartlogic embarked on a mini acquisition spree, purchasing the interesting Schemalogic company about a decade ago.

What did Schemalogic do? In addition to being a wonderland for Windows Certified Professionals, the “server” managed index terms. The idea was that people in different departments assigned key words to different digital entities; for example, an engineer might assign the key word “E12.” This is totally clear to a person who thinks about resistors, but to a Home Economics graduate working in marketing, E12 was a bit of a puzzle. The idea that an organization in the pre Covid days could develop a standard set of tags is a fine idea. There are boot camps and specialist firms using words like taxonomy or controlled terms in their marketing collateral. However, humans are not too good at assigning terms. Humans get tired and fall back upon their faves. Other humans are stupid, bored, or indifferent and just assign terms and are done with it. Endeca’s interesting Guided Navigation worked because the company cleverly included consulting in a license. The consulting consisted of humans who worked up the needed vocabulary for a liquor store or preferably an eCommerce site with a modest number of products for sale. (There are some computational challenges inherent in the magical Endeca facets.)

Consequently, massive taxonomy projects come and then fade. A few stick around, but these are often hooked to applications with non volatile words. The Engineering Index is a taxonomy, but its terminology is of scant use to an investment bank. How about a taxonomy for business? ABI/INFORM created, maintained, and licensed its vocabulary to outfits like the Royal Bank of Canada. However, ABI/INFORM moved into the hands of the brilliant managers at other firms. I assume a biz dev professional at whatever owner possesses rights to the vocabulary will cut a deal.

Back to Smartlogic.

Into this historical stew, Smartlogic offered a better way. I think that was the point of the marketing presentation we enjoyed years ago. Today the words have become more jargon centric, but the idea is the same: Index in a way that makes it possible to find that E12 when the vocabulary of the home ec major struggles with engineer-speak.
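The E12 problem can be shown with a toy controlled vocabulary in Python. This is a sketch under stated assumptions, not Smartlogic’s or Schemalogic’s method: the preferred term, the variants, and the function names are all made up for illustration.

```python
# Hypothetical controlled vocabulary: preferred term -> variants people type.
VOCABULARY = {
    "resistor value series": {"e12", "e12 series", "standard resistor values"},
}


def build_lookup(vocabulary):
    """Flatten the vocabulary so every variant points at its preferred term."""
    lookup = {}
    for preferred, variants in vocabulary.items():
        lookup[preferred] = preferred
        for variant in variants:
            lookup[variant.lower()] = preferred
    return lookup


def normalize_tag(tag, lookup):
    """Return the preferred term for a tag, or the cleaned tag if unknown."""
    cleaned = tag.strip().lower()
    return lookup.get(cleaned, cleaned)
```

The engineer’s “E12” and the marketer’s “standard resistor values” both resolve to the same preferred term, so either one finds the document. The code is the easy part. The vocabulary still has to be worked up and maintained by humans, which is where the projects described above tend to fade.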

Our perception evolved. Smartlogic dabbled in the usual markets. Enterprise search vendors pushed into customer support. Smartlogic followed. Health and medical outfits struggled with indexing content and medical claims forms. Indexing specialists followed the search vendors. Smartlogic has enthusiastically chased those markets as well. An exit for the company’s founders has not materialized. The dream of many, a juicy IPO, must work through the fog of the post Covid business world.

The most recent pivot is announced this way:

Smartlogic now offers indexing for these sectors expressed in what may be Smartlogic compound controlled terms featuring conjunctions. There you go, Bing, Google, Swisscows, Qwant, and Yandex. Parse these and then relax the users’ query. That’s what happens to well considered controlled terms today, DarkCyber knows.

- Energy and utilities

- Financial services and insurance

- Health care

- High tech and manufacturing

- Media and publishing

- Life sciences

- Retail and consumer products

- and of course, intelligence (presumably business, military, competitive, and enforcement).

Is the company pivoting or running a Marketing 101 game plan?

DarkCyber noted that Smartlogic offers a remarkable array of services, technologies (including our favorites, semantic and knowledge management), and, wait for it, artificial intelligence.

Interesting. Indexing is versatile and definitely requires a Swiss Army Knife of solutions, a Gartner encomium, and those pivots. Those spins remain anchored to indexing.

Want to know more about Smartlogic? Navigate to the company’s Web site. There’s even a blog! Very reliable outfit. Quick response. Objective. Who could ask for anything more?

Stephen E Arnold, May 29, 2020

Deindexing: Does It Officially Exist?

May 14, 2020

DarkCyber noted “LinkedIn Temporarily Deindexed from Google.” The rock solid, hard news service stated:

LinkedIn found itself deindexed from Google search results on Wednesday, which may or may not have occurred due to an error on their part. The telltale sign of an entire domain being deindexed from Google is performing a “site:” search and seeing zero results.

Mysterious.

DarkCyber has fielded two reports of deindexing from Google in the last three days. In one case a site providing automobile data was disappeared. In another, a site focused on the politics of the intelligence sector was pushed from page one to the depths of page three.

Why?

No explanation, of course.

LinkedIn is owned by Microsoft. Is that a reason? Did LinkedIn’s engineers ignore a warning about a problem in AMP?

Google does not make errors. If a problem arises, the cause is the vaunted Google smart software.

DarkCyber’s view is that Google is taking stepped up action to filter certain types of content. We have documented that one Google office has access to controls that can selectively block certain content from appearing in the public facing Web search system. The content is indeed indexed and available to those with certain types of access.

What’s up? Here are our theories:

- Google is trying to deal with problematic content in a more timely manner by relaxing constraints on search engineers working in Google “virtual offices” around the world. Human judgments will affect some Web sites. (Contacting Google is as difficult as it has been for the last 20 years.)

- Google wants to make sure that ads do not appear next to content that might cause a big spender to pull away. Google needs the cash. The thought is that Amazon and Facebook are starting to put a shunt in the money pipeline.

- Google is struggling to control costs. Slowing indexing, removing sites from a crawl, and pushing content that is rarely viewed to the side of the Information Superhighway reduce some of the costs associated with serving more than 95 percent of the queries launched by humans each day.

Regardless of the real reason or the theoretical ones, Google’s control over findable content can have interesting consequences. For example, more investigations are ramping up in Europe about the firm’s practices (either human or software centric).

Interesting. Too bad others affected by Google actions are not of the girth and heft of LinkedIn. Oh, well, the one percent are at the top for a reason.

Stephen E Arnold, May 14, 2020