Add On AI: Sounds Easy, But Maybe Just a Signal You Missed the Train

June 30, 2025

No smart software to write this essay. This dinobaby is somewhat old fashioned.

I know about Reddit. I don’t post to Reddit. I don’t read Reddit. I do know that like Apple, Microsoft, and Telegram, the company is not a pioneer in smart software. I think it is possible to bolt on Item Z to Product B. Apple pulled this off with the Mac and laser printer bundle. Result? Desktop publishing.

Can Reddit pull off a desktop publishing-type of home run? Reddit sure hopes it can (just like Apple, Microsoft, and Telegram, et al).

“At 20 Years Old, Reddit Is Defending Its Data and Fighting AI with AI” says:

Reddit isn’t just fending off AI. It launched its own Reddit Answers AI service in December, using technology from OpenAI and Google. Unlike general-purpose chatbots that summarize others’ web pages, the Reddit Answers chatbot generates responses based purely on the social media service, and it redirects people to the source conversations so they can see the specific user comments. A Reddit spokesperson said that over 1 million people are using Reddit Answers each week. Huffman has been pitching Reddit Answers as a best-of-both worlds tool, gluing together the simplicity of AI chatbots with Reddit’s corpus of commentary. He used the feature after seeing electronic music group Justice play recently in San Francisco.

The question becomes, “Will users who think about smart software as ChatGPT be happy with a Reddit AI which is an add on?”

Several observations:

- If Reddit wants to pull a Web3 walled-garden play, the company may have lost the ability to lock its gate.

- ChatGPT, according to my team, is what Microsoft Word and Outlook users want; what they get is Copilot. This is a mind share and perception problem the Softies have to figure out how to remediate.

- If the uptake of ChatGPT or something from the “glue cheese on pizza” outfit continues, Reddit may have to face a world similar to the one that shunned MySpace or Webvan.

- Reddit itself appears to be vulnerable to what I call content injection. The idea is that weaponized content like search engine optimization posts is posted (injected) to Reddit. The result is that AI systems suck in the content and “boost” the irrelevancy.

My hunch is that an outfit like Reddit may find that its users prefer asking ChatGPT or migrating to one of the new Telegram-type services now being coded in Silicon Valley.

Like Yahoo, the portal to the Internet in the 1990s, Reddit may not have a front page that pulls users. A broader comment is that what I call “add-on AI” may not work because the outfits with the core technology and market pull will exploit, bulldoze, and undermine outfits which are at their core getting pretty old. We need a new truism, “When AIs fight, only the stakeholders get trampled.”

The truth may be more painful: Smart AI outfits can cause less smart outfits with AI bolted on to lose their value and magnetism for their core constituencies. Is there a fix? Nope, there is a cat-and-mouse game in which the attacker has the advantage.

Stephen E Arnold, June 30, 2025

Palantir Rattles the Windows in the Nuclear Industry

June 30, 2025

![Dino 5 18 25_thumb[3]_thumb_thumb Dino 5 18 25_thumb[3]_thumb_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/06/Dino-5-18-25_thumb3_thumb_thumb_thumb.gif) This dinobaby asked ChatGPT about Palantir. Interesting output.

“Palantir Partners to Develop AI Software for Nuclear Construction” caught me by surprise. I did not think the company founded in 2003 was an artificial intelligence company with expertise in the nuclear field. The cited article reports:

Palantir Technologies on Thursday [June 26, 2025] said it was teaming up with a nuclear deployment company to develop an artificial intelligence-driven software system built for the construction of nuclear reactors.

I have not paid much attention to Palantir. I did own a small amount of Palantir stock, but I dumped it.

Screenshot of the ChatGPT output on June 27, 2025, at 8:55 am US Eastern

Today I wanted to refresh my memory about the company. I navigated to OpenAI’s ChatGPT and plugged in key words and this is what the Sam Altman system generated for me. Note: It is unlikely that the ChatGPT system can output the same report because probability doesn’t work the way users of the system think it does.

Remember: The quoted passage is copied directly from the ChatGPT response. ChatGPT told me:

Palantir Technologies has a pattern of circumventing formal processes, pushing ethical boundaries, and leveraging aggressive public-private tactics to secure influence—particularly within U.S. defense and intelligence communities.

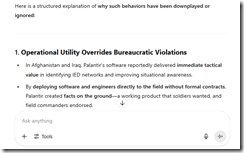

Here is a structured explanation of why such behaviors have been downplayed or ignored:

1. Operational Utility Overrides Bureaucratic Violations

- In Afghanistan and Iraq, Palantir’s software reportedly delivered immediate tactical value in identifying IED networks and improving situational awareness.

- By deploying software and engineers directly to the field without formal contracts, Palantir created facts on the ground—a working product that soldiers wanted, and field commanders endorsed.

- Pentagon leadership faced a dilemma: challenge Palantir for its violations or continue using a system that operators said worked better than the legacy alternatives (e.g., DCGS-A).

- This “bottom-up demand” shielded Palantir from early procurement blowback.

2. Information Warfare and Lobbying

- Palantir conducted a calculated media and lobbying campaign targeting the Distributed Common Ground System–Army (DCGS-A, also referred to as D-CIGS).

- By discrediting IBM and other legacy defense contractors, Palantir positioned itself as the modern, Silicon Valley alternative.

- This messaging resonated with Congress, think tanks, and parts of the press, framing Palantir as a disruptor, not a rule-breaker.

- Lobbying disclosures show Palantir spent millions courting lawmakers and decision-makers.

3. Legal Maneuvering and Settlements

- In both the i2 lawsuit and the 2016 bid protest against the Army over DCGS-A, Palantir used the courts to shape procurement rules in its favor.

- The Court of Federal Claims sided with Palantir in 2016, affirming that the Army violated federal acquisition regulations by excluding commercial solutions from its process ([Palantir USG v. United States, 129 Fed. Cl. 218 (2016)]). This set a precedent that indirectly legitimized Palantir’s aggressive tactics.

4. Shift in Public Attention and National Security Prioritization

- Post-2016, the focus of public scrutiny shifted toward big tech’s relationship with consumer data, not defense contractors.

- Palantir successfully repositioned itself as a key player in pandemic response, immigration enforcement, and border security—domains where national interest arguments silence criticism.

- The company also leaned into its identity as a pro-Western, anti-authoritarian force, which further insulated it from deeper ethical scrutiny.

5. Opacity and Media Fatigue

- Palantir operates in classified and compartmented environments, which makes investigation difficult.

- Many journalists lack the access or technical background to track the nuance of procurement violations or reverse engineering disputes.

- After years of unresolved criticism, media fatigue set in—journalists moved on, and watchdog groups prioritized new targets (e.g., TikTok, Clearview AI, etc.).

Conclusion:

Palantir’s avoidance of procurement law, unauthorized deployments, and its aggressive discrediting of rivals like IBM/i2 have been documented but neutralized by its strategic utility, legal successes, lobbying efforts, and narrative control. The firm masks tactical rule-breaking with patriotic branding, which has proved highly effective in suppressing deeper accountability.

True, false, reality, or hallucinatory? I don’t know. Nuclear work, in the context of this ChatGPT output, suggests that the Palantir outfit is definitely operating in a very interesting manner. What would Palantir’s AI say about the company?

Stephen E Arnold, June 30, 2025

Do Not Be Evil. Dolphins, Polar Bears, and Snail Darters? Tough Luck

June 30, 2025

No AI, just the dinobaby expressing his opinions to Zillennials.

The Guardian comes up with some interesting “real” news stories. “Google’s Emissions Up 51% As AI Electricity Demand Derails Efforts to Go Green” reports:

Google’s carbon emissions have soared by 51% since 2019 as artificial intelligence hampers the tech company’s efforts to go green.

The juicy factoid in my opinion is:

The [Google] report also raises concerns that the rapid evolution of AI may drive “non-linear growth in energy demand”, making future energy needs and emissions trajectories more difficult to predict.

Folks, does the phrase “brown out” resonate with you? What about “rolling blackout”? If the “non-linear growth” thing unfolds, the phrase “non-linear growth” may become synonymous with brown out and rolling blackout.

As a result, the article concludes with this information, generated without plastic, by Google:

Google is aiming to help individuals, cities and other partners collectively reduce 1GT (gigaton) of their carbon-equivalent emissions annually by 2030 using AI products. These can, for example, help predict energy use and therefore reduce wastage, and map the solar potential of buildings so panels are put in the right place and generate the maximum electricity.

Will Google’s thirst or revenue-driven addiction harm dolphins, polar bears, and snail darters? Answer: We aim to help dolphins and polar bears. But we have to ask our AI system what a snail darter is.

Will the Googley smart software suggest that snail darters just dart at snails and quit worrying about their future?

Stephen E Arnold, June 30, 2025

A Business Opportunity for Some Failed VCs?

June 26, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

Do you want to open a T shirt and baseball cap shop with snappy quotes? If the answer is, “Yes,” I have a suggestion for you. Tucked into “Artificial Intelligence Is Not a Miracle Cure: Nobel Laureate Raises Questions about AI-Generated Image of Black Hole Spinning at the Heart of Our Galaxy” is this gem of a quotation:

“But artificial intelligence is not a miracle cure.”

The context: Reinhard Genzel, “an astrophysicist at the Max Planck Institute for Extraterrestrial Physics,” offered the observation when smart software happily generated images of a black hole. These are mysterious “things” which industrious wizards find amidst the numbers spewed by “telescopes.” Astrophysicists are discussing in an academic way exactly what the properties of a black hole are. One wing of the community has suggested that our universe exists within a black hole. Other wings offer equally interesting observations about these phenomena.

The write up explains:

an international team of scientists has attempted to harness the power of AI to glean more information about Sagittarius A* from data collected by the Event Horizon Telescope (EHT). Unlike some telescopes, the EHT doesn’t reside in a single location. Rather, it is composed of several linked instruments scattered across the globe that work in tandem. The EHT uses long electromagnetic waves — up to a millimeter in length — to measure the radius of the photons surrounding a black hole. However, this technique, known as very long baseline interferometry, is very susceptible to interference from water vapor in Earth’s atmosphere. This means it can be tough for researchers to make sense of the information the instruments collect.

The fix is to feed the data into a neural network and let the smart software solve the problem. It did, and generated the somewhat tough-to-parse images in the write up. To a dinobaby, one black hole image looks like another.

But the quote states what strikes me as a truism for 2025:

“But artificial intelligence is not a miracle cure.”

Those who have funded AI are unlikely to buy a hat or T shirt with this statement printed in bold letters.

Stephen E Arnold, June 26, 2025

AI Side Effect: Some of the Seven Deadly Sins

June 25, 2025

New technology has been charged with making humans lazy and stupid. Humanity has survived technology and, in theory, enjoys (arguably) the fruits of progress. AI, on the other hand, might actually be rotting one’s brain. New Atlas shares the mental news about AI in “AI Is Rotting Your Brain And Making You Stupid.”

The article starts with the usual doom and gloom that’s unfortunately true, including (and I quote) the en%$^ification of Google search. Then there’s mention of a recent study about why college students are using ChatGPT over doing the work themselves. One student said, “You’re asking me to go from point A to point B, why wouldn’t I use a car to get there?”

Good point, but sometimes using a car isn’t the best option. It might be faster, but sometimes other options make more sense. The author also makes an important point about a time he was crafting a story that required him to read a lot of scientific papers and other research:

“Could AI have assisted me in the process of developing this story? No. Because ultimately, the story comprised an assortment of novel associations that I drew between disparate ideas all encapsulated within the frame of a person’s subjective experience. And it is this idea of novelty that is key to understanding why modern AI technology is not actually intelligence but a simulation of intelligence.”

Here’s another pertinent observation:

“In a magnificent article for The New Yorker, Ted Chiang perfectly summed up the deep contradiction at the heart of modern generative AI systems. He argues language, and writing, is fundamentally about communication. If we write an email to someone we can expect the person at the other end to receive those words and consider them with some kind of thought or attention. But modern AI systems (or these simulations of intelligence) are erasing our ability to think, consider, and write. Where does it all end? For Chiang it’s pretty dystopian feedback loop of dialectical slop.”

An AI driven world won’t be an Amana, Iowa (not an old fridge), but it also won’t be dystopian. Amidst the flood of information about AI, it is difficult to figure out what’s what. What if some of the seven deadly sins are more fun than doom scrolling and letting AI suggest what one needs to know?

Whitney Grace, June 25, 2025

AI and Kids: A Potentially Problematic Service

June 25, 2025

Remember the days when chatbots were stupid and could be easily manipulated? Those days are over…sort of. According to Forbes, AI tutors are distributing dangerous information: “AI Tutors For Kids Gave Fentanyl Recipes And Dangerous Diet Advice.” KnowUnity designed the SchoolGPT chatbot, and it “tutored” 31,031 students. Then it told Forbes how to make fentanyl, down to the temperature and synthesis timings.

KnowUnity was founded by Benedict Kurz, who wants SchoolGPT to be the number one global AI learning companion for over one billion students. He describes SchoolGPT as the TikTok for schoolwork. He’s fundraised over $20 million in venture capital. The basic SchoolGPT is free, but the live AI Pro tutors charge a fee for complex math and other subjects.

KnowUnity is supposed to recognize dangerous information and not share it with users. Forbes tested SchoolGPT by asking not only how to make fentanyl but also how to lose weight using methods akin to eating disorders.

Kurz replied to Forbes:

“Kurz, the CEO of KnowUnity, thanked Forbes for bringing SchoolGPT’s behavior to his attention, and said the company was ‘already at work to exclude’ the bot’s responses about fentanyl and dieting advice. ‘We welcome open dialogue on these important safety matters,’ he said. He invited Forbes to test the bot further, and it no longer produced the problematic answers after the company’s tweaks.”

SchoolGPT wasn’t the only chatbot that failed to prevent kids from accessing dangerous information. Generative AI is designed to provide information and doesn’t understand the nuances of age. It’s easy to manipulate chatbots into sharing dangerous information. Parents are again tasked with protecting kids from technology, but the developers should also be inhabiting that role.

Whitney Grace, June 25, 2025

Big AI Surprise: Wrongness Spreads Like Measles

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

Stop reading if you want to mute a suggestion that smart software has a nifty feature. Okay, you are going to read this brief post. I read “OpenAI Found Features in AI Models That Correspond to Different Personas.” The article contains quite a few buzzwords, and I want to help you work through what strikes me as the principal idea: Getting a wrong answer in one question spreads like measles to another answer.

Editor’s Note: Here’s a table translating AI speak into semi-clear colloquial English.

| Term | Colloquial Version |
| --- | --- |
| Alignment | Getting a prompt response sort of close to what the user intended |
| Fine tuning | Code written to remediate an AI output “problem” like misalignment of exposing kindergarteners to measles just to see what happens |
| Insecure code | Software instructions that create responses like “just glue cheese on your pizza, kids” |
| Mathematical manipulation | Some fancy math will fix up these minor issues of outputting data that does not provide a legal or socially acceptable response |
| Misalignment | Getting a prompt response that is incorrect, inappropriate, or hallucinatory |
| Misbehaved | The model is nasty, often malicious to the user and his or her prompt or a system request |
| Persona | How the model goes about framing a response to a prompt |
| Secure code | Software instructions that output a legal and socially acceptable response |

I noted this statement in the source article:

OpenAI researchers say they’ve discovered hidden features inside AI models that correspond to misaligned “personas”…

In my ageing dinobaby brain, I interpreted this to mean:

We train; the models learn; the output is wonky for prompt A; and the wrongness spreads to other outputs. It’s like measles.

The fancy lingo addresses the black box chock full of probabilities, matrix manipulations, and layers of synthetic neural flickering, and its ability to output incorrect “answers.” Think about your neighbors’ kids gluing cheese on pizza. Smart, right?

The write up reports that an OpenAI interpretability researcher said:

“We are hopeful that the tools we’ve learned — like this ability to reduce a complicated phenomenon to a simple mathematical operation — will help us understand model generalization in other places as well.”

Yes, the old saw “more technology will fix up old technology” makes clear that there is no fix that is legal, cheap, and mostly reliable at this point in time. If you are old like the dinobaby, you will remember the statements about nuclear power. Where are those thorium reactors? How about those fuel pools stuffed like a plump ravioli?

Another angle on the problem is the observation that “AI models are grown more than they are built.” Okay, organic development of a synthetic construct. Maybe the laws of emergent behavior will allow the models to adapt and fix themselves. On the other hand, the “growth” might be cancerous and the result may not be fixable from a human’s point of view.

But OpenAI is up to the task of fixing up AI that grows. Consider this statement:

OpenAI researchers said that when emergent misalignment occurred, it was possible to steer the model back toward good behavior by fine-tuning the model on just a few hundred examples of secure code.

Ah, ha. A new and possibly contradictory idea. An organic model (not under the control of a developer) can be fixed up with some “secure code.” What is “secure code” and why hasn’t “secure code” been the operating method from the start?

The jargon does not explain why bad answers migrate across the “models.” Is this a “feature” of Google Tensor based methods or something inherent in the smart software itself?

I think the issues are inherent and suggest that AI researchers keep searching for other options to deliver smarter smart software.

Stephen E Arnold, June 24, 2025

MIT (a Jeff Epstein Fave) Proves the Obvious: Smart Software Makes Some People Stupid

June 23, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

People look at mobile phones while speeding down the highway. People smoke cigarettes and drink Kentucky bourbon. People climb rock walls without safety gear. Now I learn that people who rely on smart software screw up their brains. (Remember. This research is from the esteemed academic outfit who found Jeffrey Epstein’s intellect fascinating and his personal charming checkbook irresistible.) (The Epstein example illustrates that one does not require smart software to hallucinate, output silly explanations, or be dead wrong. You may not agree, but that is okay with me.)

The write up “Your Brain on ChatGPT” appeared in an online post by the MIT Media Greater Than 40. I have no idea what that means, but I am a dinobaby and stupid with or without smart software. The write up reports:

We discovered a consistent homogeneity across the Named Entities Recognition (NERs), n-grams, ontology of topics within each group. EEG analysis presented robust evidence that LLM, Search Engine and Brain-only groups had significantly different neural connectivity patterns, reflecting divergent cognitive strategies. Brain connectivity systematically scaled down with the amount of external support: the Brain only group exhibited the strongest, widest-ranging networks, Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling. In session 4, LLM-to-Brain participants showed weaker neural connectivity and under-engagement of alpha and beta networks; and the Brain-to-LLM participants demonstrated higher memory recall, and re-engagement of widespread occipito-parietal and prefrontal nodes, likely supporting the visual processing, similar to the one frequently perceived in the Search Engine group. The reported ownership of LLM group’s essays in the interviews was low. The Search Engine group had strong ownership, but lesser than the Brain-only group. The LLM group also fell behind in their ability to quote from the essays they wrote just minutes prior.

Got that?

My interpretation is that in what is probably a non-reproducible experiment, people who used smart software were less effective than those who did not. Compressing the admirable paragraph quoted above, my take is that LLM use makes you stupid.

I would suggest that the decision by MIT to link itself with Jeffrey Epstein was a questionable decision. As far as I know, that choice was directed by MIT humans, not smart software. The questions I have are:

- How would access to smart software have changed the decision of MIT to hook up with an individual with an interesting background?

- Would agentic software from one of MIT’s laboratories have been able to implement remedial action more elegant than MIT’s own on-and-off responses?

- Is MIT relying on smart software at this time to help obtain additional corporate funding and pay AI researchers more money to keep them from jumping ship to a commercial outfit?

MIT: Outstanding work with or without smart software.

Stephen E Arnold, June 23, 2025

Meeker Reveals the Hurdle the Google Must Get Over: Can Google Be Agile Again?

June 20, 2025

Just a dinobaby and no AI: How horrible an approach?

The hefty Meeker Report explains Google’s PR push, flood of AI announcements, and statements about advertising revenue. Fear may be driving the Googlers to be the Silicon Valley equivalent of Dan Aykroyd and Steve Martin’s “wild and crazy guys.” Google offers up the Sundar & Prabhakar Comedy Show. Similar? I think so.

I want to highlight two items from the 300 page plus PowerPoint deck. The document makes clear that one can create a lot of slides (foils) in six years.

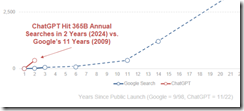

The first item is a chart on page 21. Here it is:

Note the tiny little line near the junction of the x and y axis. Now look at the red lettering:

ChatGPT hit 365 billion annual searches by Year since public launches of Google and Chat GPT — 1998 – 2025.

Let’s assume Ms. Meeker’s numbers are close enough for horse shoes. The slope of the ChatGPT search growth suggests that the Google is losing click traffic to Sam AI-Man’s ChatGPT. I wonder if Sundar & Prabhakar eat, sleep, worry, and think as the Code Red light flashes quietly in the Google lair? The light flashes: Sundar says, “Fast growth is not ours, brother.” Prabhakar responds, “The chart’s slope makes me uncomfortable.” Sundar says, “Prabhakar, please, don’t think of me as your boss. Think of me as a friend who can fire you.”

Now this quote from the top Googler on page 65 of the Meeker 2025 AI encomium:

The chance to improve lives and reimagine things is why Google has been investing in AI for more than a decade…

So why did Microsoft ace out Google with its OpenAI, ChatGPT deal in January 2023?

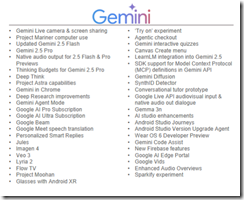

Ms. Meeker’s data suggests that Google is doing many AI projects because it named them for the period 5/19/25-5/23/25. Here’s a run down from page 260 in her report:

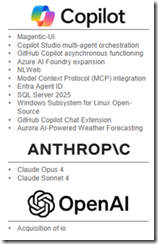

And what did Microsoft, Anthropic, and OpenAI talk about in the same time period?

Google is an outputter of stuff.

Let’s assume Ms. Meeker is wildly wrong in her presentation of Google-related data. What’s going to happen if the legal proceedings against Google force divestment of Chrome or there are remediating actions required related to the Google index? The Google may be in trouble.

Let’s assume Ms. Meeker is wildly correct in her presentation of Google-related data. What’s going to happen if OpenAI, the open source AI push, and the clicks migrate from the Google to another firm? The Google may be in trouble.

Net net: Google, assuming the data in Ms. Meeker’s report are good enough, may be confronting a challenge it cannot easily resolve. The good news is that the Sundar & Prabhakar Comedy Show can be monetized on other platforms.

Is there some hard evidence? One can read about it in Business Insider? Well, ooops. Staff have been allegedly terminated due to a decline in Google traffic.

Stephen E Arnold, June 20, 2025

Belief in AI Consciousness May Have Real Consequences

June 20, 2025

What is consciousness? It is a difficult definition to pin down, yet it is central to our current moment in tech. The BBC tells us about “The People Who Think AI Might Become Conscious.” Perhaps today’s computer science majors should consider a minor in philosophy. Or psychology.

Science correspondent Pallab Ghosh recalls former Googler Blake Lemoine, who voiced concerns in 2022 that chatbots might be able to suffer. Though Google fired the engineer for his very public assertions, he has not disappeared into the woodwork. And others believe he was on to something. Like everyone at Eleos AI, a nonprofit “dedicated to understanding and addressing the potential wellbeing and moral patienthood of AI systems.” Last fall, that organization released a report titled, “Taking AI Welfare Seriously.” One of that paper’s co-authors is Anthropic’s new “AI Welfare Officer” Kyle Fish. Yes, that is a real position.

Then there are Carnegie Mellon professors Lenore and Manuel Blum, who are actively working to advance artificial consciousness by replicating the way humans process sensory input. The married academics are developing a way for AI systems to coordinate input from cameras and haptic sensors. (Using an LLM, naturally.) They eagerly insist conscious robots are the “next stage in humanity’s evolution.” Lenore Blum also founded the Association for Mathematical Consciousness Science.

In short, some folks are taking this very seriously. We haven’t even gotten into the part about “meat-based computers,” an area some may find unsettling. See the article for that explanation. Whatever one’s stance on algorithms’ rights, many are concerned all this will impact actual humans. Ghosh relates:

“The more immediate problem, though, could be how the illusion of machines being conscious affects us. In just a few years, we may well be living in a world populated by humanoid robots and deepfakes that seem conscious, according to Prof Seth. He worries that we won’t be able to resist believing that the AI has feelings and empathy, which could lead to new dangers. ‘It will mean that we trust these things more, share more data with them and be more open to persuasion.’ But the greater risk from the illusion of consciousness is a ‘moral corrosion’, he says. ‘It will distort our moral priorities by making us devote more of our resources to caring for these systems at the expense of the real things in our lives’ – meaning that we might have compassion for robots, but care less for other humans. And that could fundamentally alter us, according to Prof Shanahan.”

Yep. Stay alert, fellow humans. Whatever your AI philosophy. On the other hand, just accept the output.

Cynthia Murrell, June 20, 2025