AI: Demand Goes Up But Then What?

March 24, 2025

Yep, another dinobaby original.

Why use smart software? For money, for academic and LinkedIn fame, for better grades in high school? The estimable publication The Cool Down revealed the truth in its article “Expert Talks Massive Impacts AI Will Have on Us All: I Can Only See the Demand Increasing.”

The expert is Dr. Chris Mattmann, the author of a book about AI and machine learning. He is also the chief data officer at UCLA.

The write up reports:

AI is really just search on steroids, and while training AI models is expensive and energy-consuming, it’s not much different than when Google introduced search for information retrieval and data gathering in 2009.

After reading the statement, I asked myself if smart software implemented in the Telegram smart contracts is about search or if it is related to obfuscating financial transactions. Guess not. Too bad I did not understand that AI was just search.

The write up says:

“AI expects the world to look like tables with rows and columns … [but] the world doesn’t look that way. It’s messy, it’s multimodal, it’s video, image, sound, text,” he said, and making sense of all that information and “training AI models” takes the most energy.

I think that energy costs money. How do companies make the jump from spending to generating sustainable revenue? Search runs on advertising dollars. Will AI do the same thing?

The Cool Down attempts to clarify certain types of AI use cases; for example, games and videos:

While The Cool Down will continue to report on inefficient uses of AI, it’s also fair to demystify AI as more like “a computer program” and to consider its energy use in a different light if and when it is a tool to replace other work or entertainment. Creating an AI image may often seem like it’s not a justified use of energy, but Mattmann is essentially saying: “Is it much more or less justified than playing video games or watching movies?”

AI has some benefits. Again the expert:

His three kids under age 15 use AI devices like the Amazon Echo to learn things, and Mattmann uses a Timekettle earbud device to immediately translate up to 40 languages in real-time, which he calls “an AI device at the edge.” “I’m excited about traveling. I’m excited about what it will do for our national security, what it will mean for language.” It will be transformative for “those tasks that [require] robotic process automation, intelligent assistance, or whatever can give us back time, which is our only precious commodity here on this planet,” he said.

Will The Cool Down become my go-to source for the real scoop about smart software? We’ll see.

Stephen E Arnold, March 24, 2025

Journalism Is Now Spelled Journ-AI-sm

March 24, 2025

Another dinobaby blog post. Eight decades and still thrilled when I point out foibles.

When I worked at a “real” newspaper, I enjoyed listening to “real” journalists express their opinions on everything. Some were experts in sports and knew little details about Louisville basketball. Others were “into” technology and tracked the world of gadgets — no matter how useless — and regaled people at lunch with descriptions of products that would change everything. Everything? Yeah. I heard about the rigors of journalism school. The need to work on either the college radio station or the college newspaper. These individuals fancied themselves Renaissance men and women. That’s okay, but do bean counters need humans to “cover” the news?

The answer is, “Probably not.”

“Italian Newspaper Says It Has Published World’s First AI-Generated Edition” suggests that “real” humans covering the news may face a snow leopard or Maui ‘Alauahio moment. The article reports:

An Italian newspaper has said it is the first in the world to publish an edition entirely produced by artificial intelligence. The initiative by Il Foglio, a conservative liberal daily, is part of a month-long journalistic experiment aimed at showing the impact AI technology has “on our way of working and our days”, the newspaper’s editor, Claudio Cerasa, said.

The smart software is not just spitting out “real” news. The system does “headlines, quotes, and even the irony.” Wow. Irony from smart software.

According to the “real” journalist who read the stories in the paper:

The articles were structured, straightforward and clear, with no obvious grammatical errors. However, none of the articles published in the news pages directly quote any human beings.

That puts Il Foglio ahead of Smartnews’ articles. Wow, some of those are ungrammatical and poorly structured. I would toss in the descriptor “incoherent.”

What do I make of Il Foglio’s trial? That’s an easy question:

- If the smart software is good enough and allows humans to be given an opportunity to find their future elsewhere, smart software is going to be used. A few humans might be rehired if revenues tank, but the writing is on the wall of the journalism school.

- Bean counters know one thing: Counting beans. If the smart software generates financial benefits, the green eye shade crowd will happily approve licensing smart software.

- Readers may not notice or not care. Headline. First graf. Good to go.

Will the future pundits, analysts, marketing specialists, PR professionals, and LLM trainers find the journalistic joy? Being unhappy at work and paying bills is one thing; being happy doing news knowing that smart software is coming for the journalism jobs is another.

I would not return to college to learn how to be a “real” journalist. I would stay home, eat snacks, and watch game show re-runs. Good enough life plan, right?

Why worry? Il Foglio is just doing a small test.

Stephen E Arnold, March 24, 2025

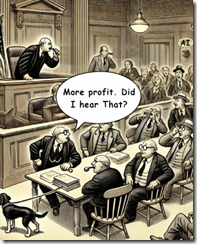

Dog Whistle Only Law Firm Partners Can Hear: More Profits, Bigger Bonuses!

March 21, 2025

Dinobaby, here. No smart software involved unlike some outfits. I did use Sam AI-Man’s art system to produce the illustration in the blog post.

Truth be told, we don’t do news. The write ups in my “placeholder” blog are my way to keep track of interesting items. Some of these I never include in my lectures. Some find their way into my monographs. The FOGINT stuff: Notes for my forthcoming monograph about Telegram, the Messenger mini app, and that lovable marketing outfit, the Open Network Foundation. If you want to know more, write benkent2020 at yahoo dot com. Some slacker will respond whilst scrolling Telegram Groups and Channels for interesting items.

Thanks, Sam AI-Man.

But this write up is an exception. This is a post about an article in the capitalist tool. (I have always liked the ring of the slogan. I must admit when I worked in the Big Apple, I got a kick out of Malcolm Forbes revving his Harley at the genteel biker bar. But the slogan and the sound of the Hog? Unforgettable.)

What is causing me to stop my actual work to craft a blog post at 7 am on March 21, 2025? This article in Forbes Magazine. You know, the capitalist tool. Like a vise grip for Peruvian prison guards, I think.

“Risk Or Revolution: Will AI Replace Lawyers?” sort of misses the main point of smart software and law firms. I will address the objective of big time law firms in a moment, but I want to look at what Hessie Jones, the strategist or stratagiste maybe, has to say:

Over the past few years, a growing number of legal professionals have embraced AI tools to boost efficiency and reduce costs. According to recent figures, nearly 73% of legal experts now plan to incorporate AI into their daily operations. 65% of law firms agree that "effective use of generative AI will separate the successful and unsuccessful law firms in the next five years."

Talk about leading the witness. “Who is your attorney?” The person in leg cuffs and an old-fashioned straitjacket says, “Mr. Gradient Descent, your honor.”

The judge, a savvy fellow who has avoided social media criticism, says, “Approach the bench.”

Silence.

The write up says:

Afolabi [a probate lawyer, a graduate of Osgoode Law School, York University in Canada] who holds a master’s from the London School of Economics, describes the evolution of legal processes over the past five years, highlighting the shift from paper-based systems to automated ones. He explains that the initial client interaction, where they tell a story and paint a picture remains crucial. However, the method of capturing and analyzing this information has changed significantly. "Five years ago, that would have been done via paper. You’re taking notes," Afolabi states, "now, there’s automation for that." He emphasizes that while the core process of asking questions remains, it’s now "the machine asking the questions." Automation extends to the initial risk analysis, where the system can contextualize the kind of issues and how to best proceed. Afolabi stresses that this automation doesn’t replace the lawyer entirely: "There’s still a lawyer there with the clients, of course."

Okay, the human lawyer, not the Musk-envisioned Grok 3 android robot, will approach the bench. Well, someday.

Now the article’s author delivers the payoff:

While concerns about AI’s limitations persist, the consensus is clear: AI-driven services like Capita can make legal services more affordable and accessible without replacing human oversight.

After finishing this content marketing write up, I had several observations:

- The capitalist tool does not point out the entire purpose of the original Forbes: knock out Fortune Magazine and deliver information that will make a reader money.

- The article ignores the reality that smart software fiddling with word probabilities makes errors. Whether it was made-up cases like Michael Cohen’s brush with AI or telling me that a Telegram-linked outfit did not host a conference in Dubai, those mistakes might add some friction to smart software speeding down the information highway.

- Lawyers will use AI to cut costs and speed billing cycles. In my opinion, lawyers don’t go to jail. Their clients do.

Let’s imagine the hog-riding Malcolm at his desk pondering great thoughts like this:

“It’s so much easier to suggest solutions when you don’t know too much about the problem.”

The problem for law firms will be solved by smart software; that is, reducing costs. Keep in mind, lawyers don’t go to jail that often. The AI hype train has already pulled into the legal profession. Will the result be better lawyering? I am not sure because once a judge or jury makes a decision, the survey pool is split 50-50.

But those bonuses? Now that’s what AI can deliver. (Imagine the sound of a dog whistle with an AI logo, please.)

PS. If you are an observer of blue chip consulting firms, the same payoff logic applies. Both species have evolved to hear the more-money frequency.

Stephen E Arnold, March 21, 2025

Why Worry about TikTok?

March 21, 2025

We have smart software, but the dinobaby continues to do what 80 year olds do: Write the old-fashioned human way. We did give up clay tablets for a quill pen. Works okay.

I hope this news item from WCCF Tech is wildly incorrect. I have a nagging thought that it might be on the money. “Deepseek’s Chatbot Was Being Used By Pentagon Employees For At Least Two Days Before The Service Was Pulled from the Network; Early Version Has Been Downloaded Since Fall 2024” is the headline I noted. I find this interesting.

The short article reports:

A more worrying discovery is that Deepseek mentions that it stores data on servers in China, possibly presenting a security risk when Pentagon employees started playing around with the chatbot.

And adds:

… employees were using the service for two days before this discovery was made, prompting swift action. Whether the Pentagon workers have been reprimanded for their recent act, they might want to exercise caution because Deepseek’s privacy policy clearly mentions that it stores user data on its Chinese servers.

Several observations:

- This is a nifty example of an insider threat. I thought cyber security services blocked this type of to and fro from government computers on a network connected to public servers.

- The reaction time is either months (since the fall of 2024) or 48 hours. My hunch is that it is the months-long usage of an early version of the Chinese service.

- Which “manager” is responsible? Sorting out which vendors’ software did not catch this and which individual’s unit dropped the ball will be interesting and probably unproductive. Is it in any authorized vendor’s interest to say, “Yeah, our system doesn’t look for phoning home to China, but it will in the next update if your license is paid up for that service”? Will a US government professional say, “Our bad”?

Net net: We have snow removal services that don’t remove snow. We have aircraft crashing in sight of government facilities. And we have Chinese smart software running on US government systems connected to the public Internet. Interesting.

Stephen E Arnold, March 21, 2025

Good News for AI Software Engineers. Others, Not So Much

March 20, 2025

Another dinobaby blog post. No AI involved which could be good or bad depending on one’s point of view.

Spring is on the way in rural Kentucky. Will new jobs sprout like the dogwoods? Judging from the last local business event I attended, the answer is, “Yeah, maybe not so much.”

But there is a bright spot in the AI space. “ChatGPT and Other AI Startups Drive Software Engineer Demand” says:

AI technology has created many promising new opportunities for software engineers in recent years.

That certainly appears to apply to the workers in the smart software power houses and the outfits racing to achieve greater efficiency via AI. (Does “efficiency” translate to non-AI specialist job reductions?)

Back to the good news. The article asserts:

Many sectors have embraced digital transformation as a means of improving efficiency, enhancing customer experience, and staying competitive. Industries like manufacturing, agriculture, and even construction are now leveraging technologies like the Internet of Things (IoT), artificial intelligence (AI), and machine learning. Software engineers are pivotal in developing, implementing, and maintaining these technologies, allowing companies to streamline operations and harness data analytics for informed decision-making. Smart farming is just one example that has emerged as a significant trend where software engineers design applications that optimize crop yields through data analysis, weather forecasting, and resource management.

Yep, the efficiency word again. Let’s not dwell on the secondary job losses, shall we? This is a good news blog post.

The essay continues:

The COVID-19 pandemic drastically accelerated the shift towards remote work. Remote, global collaboration has opened up exciting opportunities for most professionals, but software engineers are a major driving factor of that availability in any industry. As a result, companies are now hiring engineers from anywhere in the world. Now, businesses are actively seeking tech-savvy individuals to help them leverage new technologies in their fields. The ability to work remotely has expanded the horizons of what’s possible in business and global communications, making software engineering an appealing path for professionals all over the map.

I liked the “hiring engineers from anywhere in the world.” That offers some upsides, like cost savings on US AI staff. It also creates a downside: a remote worker might be a bad actor who slipped through a clueless hiring process and is laboring to exfiltrate high value data.

Also, the Covid reference, although a bit dated, reminds people that the return to work movement is a way to winnow staff. I assume the AI engineer will not be terminated. Those unlucky enough to land in the targeting devices of certain DOGE and McKinsey-type consultants may not fare as well.

As I said, this is a good news write up. Is it accurate? No comment. What about efficiency? Sure, fewer humans means lower costs. What about engineers who cannot or will not learn AI? Yeah, well.

Stephen E Arnold, March 20, 2025

AI: Apple Intelligence or Apple Ineptness?

March 20, 2025

Another dinobaby blog post. No AI involved which could be good or bad depending on one’s point of view.

I read a very polite essay with some almost unreadable graphs. “Apple Innovation and Execution” says:

People have been claiming that Apple has forgotten how to innovate since the early 1980s, or longer – it’s a standing joke in talking about the company. But it’s also a question.

Yes, it is a question. Slap on your Apple goggles and look at the world from the fan boy perspective. AI is not a thing. Siri is a bit wonky. The endless requests to log in to use FaceTime and other Apple services are, from an objective point of view, a bit stupid. The annual iPhone refresh. Er, yeah, now what are the functional differences again? The Apple car? Er, yeah.

Is that an innovation worm? Is that a bad apple? One possibility is that the innovation worm is quite happy making an exit and looking for a better orchard. Thanks, You.com “Creative.” Good enough.

The write up says:

And ‘Apple Intelligence’ certainly isn’t going to drive a ‘super-cycle’ of iPhone upgrades any time soon. Indeed, a better iPhone feature by itself was never going to drive fundamentally different growth for Apple

So why do something which makes the company look stupid?

And what about this passage?

And the failure of Siri 2 is by far the most dramatic instance of a growing trend for Apple to launch stuff late. The software release cycle used to be a metronome: announcement at WWDC in the summer, OS release in September with everything you’d seen. There were plenty of delays and failed projects under the hood, and centres of notorious dysfunction (Apple Music, say), and Apple has always had a tendency to appear to forget about products for years (most Apple Watch faces don’t support the key new feature in the new Apple Watch) but public promise were always kept. Now that seems to be slipping. Is this a symptom of a Vista-like drift into systemically poor execution?

Some innovation worms are probably gnawing away inside the Apple. Apple’s AI. Easy to talk about. Tough to convert marketing baloney crafted by art history majors into software of value to users, in my opinion.

Stephen E Arnold, March 20, 2025

AI Checks Professors’ Work: Who Is Hallucinating?

March 19, 2025

This blog post is the work of a humanoid dino baby. If you don’t know what a dinobaby is, you are not missing anything. Ask any 80 year old, why don’t you?

I read an amusing write up in Nature Magazine, a publication which does not often veer into MAD Magazine territory. The write up “AI Tools Are Spotting Errors in Research Papers: Inside a Growing Movement” has a wild subtitle as well: “Study that hyped the toxicity of black plastic utensils inspires projects that use large language models to check papers.”

Some have found that outputs from large language models often make up information. I have included references in my writings to Google’s cheese errors and lawyers submitting court documents with fabricated legal references. The main point of this Nature article is that presumably rock solid smart software will check the work of college professors, pals in the research industry, and precocious doctoral students laboring for love and not much money.

Interesting, but will hallucinating smart software find mistakes in the work of people like the former president of Stanford University and Harvard’s former ethics star? Well, sure, peers and co-authors cannot be counted on to do work and present it without a bit of Photoshop magic or data recycling.

The article reports that there are two efforts underway to get those wily professors to run their “work” or science fiction through systems developed by Black Spatula and YesNoError. The Black Spatula emerged from tweaked research that said, “Your black kitchen spatula will kill you.” YesNoError is similar but with a crypto twist. Yep, crypto.

Nature adds:

Both the Black Spatula Project and YesNoError use large language models (LLMs) to spot a range of errors in papers, including ones of fact as well as in calculations, methodology and referencing.

Assertions and claims are good. Black Spatula markets with the assurance its system “is wrong about an error around 10 percent of the time.” The YesNoError crypto wizards “quantified the false positives in only around 100 mathematical errors.” Ah, sure, low error rates.

I loved the last paragraph of the MAD-inspired effort and report:

these efforts could reveal some uncomfortable truths. “Let’s say somebody actually made a really good one of these… in some fields, I think it would be like turning on the light in a room full of cockroaches…”

Hallucinating smart software. Professors who make stuff up. Nature Magazine channeling important developments in research. Hey, has Nature Magazine ever reported bogus research? Has Nature Magazine run its stories through these systems?

Good question. Might be a good idea.

Stephen E Arnold, March 19, 2025

An Econ Paper Designed to Make Most People Complacent about AI

March 19, 2025

Yep, another dinobaby original.

I zipped through — and I mean zipped — a 60 page working paper called “Artificial Intelligence and the Labor Market.” I have to be upfront. I detested economics, and I still do. I used to take notes when Econ Talk was actually discussing economics. My notes were points that struck me as wildly unjustifiable. That podcast has changed. My view of economics has not. At 80 years of age, do you believe that I will adopt a different analytical stance? Wow, I hope not. You may have to take care of your parents some day and learn that certain types of discourse do not compute.

This paper has multiple authors. In my experience, the more authors, the more complicated the language. Here’s an example:

“Labor demand decreases in the average exposure of workers’ tasks to AI technologies; second, holding the average exposure constant, labor demand increases in the dispersion of task exposures to AI, as workers shift effort to tasks that are not displaced by AI.”

The idea is that the impact of smart software will not affect workers equally. As AI gets better at jobs humans do, humans will learn more and get a better job or integrate AI into their work. In some jobs, the humans are going to be out of luck. The good news is that these people can take other jobs or maybe start their own business.

The problem with the document I reviewed is that there are several fundamental “facts of life” that make the paper look a bit wobbly.

First, the minute it is cheaper for smart software to do a job that a human does, the human gets terminated. Software does not require touchy feely interactions, vacations, pay raises, and health care. Software can work as long as the plumbing is working. Humans sleep which is not productive from an employer’s point of view.

Second, government policies won’t work. Why? Government bureaucracies are reactive. By the time a policy arrives, the trend or the smart software revolution is already off to the races. One cannot put spilled radioactive waste back into its containment vessel quickly, easily, or cheaply. How’s that Fukushima remediation going?

Third, the reskilling idea is baloney. Most people are not skilled in reskilling themselves. Life long learning is not a core capability of most people. Sure, in theory anyone can learn. The problem is that most people are happy planning a vacation, doom scrolling, or watching TikTok-type videos. Figuring out how to make use of smart software capabilities is not as popular as watching the Super Bowl.

Net net: The AI services are getting better. That means that most people will be faced with a re-employment challenge. I don’t think LinkedIn posts will do the job.

Stephen E Arnold, March 19, 2025

AI: Meh.

March 19, 2025

It seems consumers can see right through the AI hype. TechRadar reports, “New Survey Suggests the Vast Majority of iPhone and Samsung Galaxy Users Find AI Useless—and I’m Not Surprised.” Both iPhones and Samsung Galaxy smartphones have been pushing AI onto their users. But, according to a recent survey, 73% of iPhone users and 87% of Galaxy users respond to the innovations with a resounding “meh.” Even more would refuse to pay for continued access to the AI tools. Furthermore, very few would switch platforms to get better AI features: 16.8% of iPhone users and 9.7% of Galaxy users. In fact, notes writer Jamie Richards, fewer than half of users report even trying the AI features. He writes:

“I have some theories about what could be driving this apathy. The first centers on ethical concerns about AI. It’s no secret that AI is an environmental catastrophe in motion, consuming massive amounts of water and emitting huge levels of CO2, so greener folks may opt to give it a miss. There’s also the issue of AI and human creativity – TechRadar’s Editorial Associate Rowan Davies recently wrote of a nascent ‘cultural genocide‘ as a result of generative AI, which I think is a compelling reason to avoid it. … Ultimately, though, I think AI just isn’t interesting to the everyday person. Even as someone who’s making a career of being excited about phones, I’ve yet to see an AI feature announced that doesn’t look like a chore to use or an overbearing generative tool. I don’t use any AI features day-to-day, and as such I don’t expect much more excitement from the general public.”

No, neither do we. If only investors would catch on. The research was performed by phone-reselling marketplace SellCell, which surveyed over 2,000 smartphone users.

Cynthia Murrell, March 19, 2025

AI May Be Discovering Kurt Gödel Just as Einstein and von Neumann Did

March 17, 2025

This blog post is the work of a humanoid dino baby. If you don’t know what a dinobaby is, you are not missing anything.

AI re-thinking is becoming more widespread. I published a snippet of an essay about AI and its impact in socialist societies on March 10, 2025. I noticed “A Bear Case: My Predictions Regarding AI Progress.” The write up is interesting, and I think it represents thinking which is becoming more prevalent among individuals who have racked up what I call AI mileage.

The main theme of the write up is a modern day application of Kurt Gödel’s annoying incompleteness theorem. I am no mathematician like my great uncle Vladimir Arnold, who worked for years with the somewhat quirky Dr. Kolmogorov. (Family tip: Going winter camping with the wizard Dr. Kolmogorov was not a good idea unless… Well, you know…)

The main idea is that a formal axiomatic system satisfying certain technical conditions cannot decide the truth value of all statements about natural numbers. In a nutshell, a system cannot settle every question about itself from the inside. Smart software is not able to go outside of its training boundaries as far as I know.

Back to the essay, the author points out that AI does something useful:

There will be a ton of innovative applications of Deep Learning, perhaps chiefly in the field of biotech, see GPT-4b and Evo 2. Those are, I must stress, human-made innovative applications of the paradigm of automated continuous program search. Not AI models autonomously producing innovations.

The essay does contain a question I found interesting:

Because what else are they [AI companies and developers] to do? If they admit to themselves they’re not closing their fingers around godhood after all, what will they have left?

Let me offer several general thoughts. I admit that I am not able to answer the question, but some ideas crossed my mind when I was thinking about the sporty Kolmogorov, my uncle’s advice about camping in the winter, and this essay:

- Something else will come along. There is a myth that technology progresses. I think technology is like the fictional tribble on Star Trek. The products and services are destined to produce more products and services. As the Santa Fe Institute crowd says, order emerges. Will the next big thing be AI? Probably AI will be in the DNA of the next big thing. So one answer to the question is, “Something will emerge.” Money will flow and the next big thing cycle begins again.

- The innovators and the AI companies will pivot. This is a fancy way of saying, “Try to come up with something else.” Even in the age of monopolies and oligopolies, change is relentless. Some of the changes will be recognized as the next big thing or at least the thing a person can do to survive. Does this mean Sam AI-Man will manage the robots at the local McDonald’s? Probably not, but he will come up with something.

- The AI hot pot will cool. Life will regress to the mean or a behavior that is not hell bent on becoming a super human like the guy who gets transfusions from his kid, the wonky “have my baby” thinking of a couple of high profile technologists, or the money lust of some 25 year old financial geniuses on Wall Street. A digitized organization man living out the theory of the leisure class will return. (Tip: Buy a dark grey suit. Lose the T shirt.)

As an 80 year old dinobaby, I find the angst of AI interesting. If Kurt Gödel were alive, he might agree to comment, “Sonny, you can’t get outside the set.” My uncle would probably say, “Those costs. Are they crazy?”

Stephen E Arnold, March 17, 2025