Yep, Making the AI Hype Real Will Be Expensive. Hundreds of Billions, Probably More, Says Microsoft

December 26, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I really don’t want to write another “if you think it, it will become real.” But here goes. I read “Microsoft AI CEO Mustafa Suleyman Says It Will Cost Hundreds of Billions to Keep Up with Frontier AI in the Next Decade.”

What’s the pitch? The write up says:

Artificial general intelligence, or AGI, refers to AI systems that can match human intelligence across most tasks. Superintelligence goes a step further — systems that surpass human abilities.

So what’s the cost? Allegedly Mr. AI at Microsoft (aka Microsoft AI CEO Mustafa Suleyman) asserts:

it’s going to cost “hundreds of billions of dollars” to compete at the frontier of AI over the next five to 10 years….Not to mention the prices that we’re paying for individual researchers or members of technical staff.

Microsoft seems to have some “we must win” DNA. The company appears to be willing to ignore users’ requests for less of that Copilot goodness.

The vice president of AI finance seems shocked by an AI wizard’s request for additional funds… right now. Thanks, Qwen. Good enough.

Several observations:

- The assumption is that more money will produce results. When? Who knows?

- The mental orientation is that outfits like Microsoft are smart enough to convert dreams into reality. That is a certain type of confidence. A failure is a stepping stone, a learning experience. No big deal.

- The hype has triggered some non-AI consequences. The lack of entry level jobs that AI will do is likely to derail careers. Remember the baloney that online learning was better than sitting in a classroom. Real world engagement is work. Short circuiting that work in my opinion is a problem not easily corrected.

Let’s step back. What’s Microsoft doing? First, the company caught Google by surprise in 2022. Now Google’s technology is allegedly as good as or better than OpenAI’s. Microsoft, therefore, is the follower instead of the pace setter. The result is mild concern with a chance of fear tomorrow. The company’s “leadership” is not stabilizing the company, its messages, and its technology offerings. Wobble wobble. Not good.

Second, Microsoft has demonstrated its “certain blindness” to two corporate activities. The first is the amount of money Microsoft has spent and apparently will continue to spend. With inputs from the financially adept Mr. Suleyman, the bean counters don’t have a chance. Sure, Microsoft can back out of some data center deals and it can turn some knobs and dials to keep the company’s finances sparkling in the sun… for a while. How long? Who knows?

Third, even Microsoft fan boys are criticizing the idea of shifting from software that a user uses for a purpose to an intelligent operating system that uses its users. My hunch is that this bulldozing of user requests, preferences, and needs may be what some folks call a “moment.” Google’s Waymo killed a cat in the Mission District. Microsoft may be running over its customers. Is this risky? Who knows?

Fourth, can Microsoft deliver AI that is not like AI from other services; namely, the open source solutions that are available and the customer-facing apps built on Qwen, for example? AI is a utility and not without errors. Some reports suggest that smart software is wrong two thirds of the time. It doesn’t matter what the “real” percentage is. People now associate smart software with mistakes, not a rock solid tool like a digital tire pressure gauge.

Net net: Mr. Suleyman will have an opportunity to deliver. For how long? Who knows?

Stephen E Arnold, December 26, 2025

Forget AI AI AI. Think Enron Enron Enron

December 25, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Happy holidays AI industry. The Financial Times seems to be suggesting that lignite coal may be in your imitative socks hanging on your mantels. In the nightmare before Christmas edition of the orange newspaper, the story “Tech Groups Shift $120bn of AI Data Centre Debt Off Balance Sheets” says:

Creative financing helps insulate Big Tech while binding Wall Street to a future boom or bust.

What’s this mean? The short answer in my opinion is, “Enron Enron Enron.” That was the online oil information short cake that was inedible, choking a big accounting firm and lots of normal employees and investors. Some died. Houston and Wall Street had a problem. For years after the event, the smell of burning credibility could be detected by those with sensitive noses.

Thanks, Venice.ai. Good enough.

The FT, however, is not into Enron Enron Enron. The FT is into AI AI AI.

The write up says:

Financial institutions including Pimco, BlackRock, Apollo, Blue Owl Capital and US banks such as JPMorgan have supplied at least $120bn in debt and equity for these tech groups’ computing infrastructure, according to a Financial Times analysis.

So what? The FT says:

That money is channeled through special purpose holding companies known as SPVs. The rush of financings, which do not show up on the tech companies’ balance sheets, may be obscuring the risks that these groups are running — and who will be on the hook if AI demand disappoints. SPV structures also increase the danger that financial stress for AI operators in the future could cascade across Wall Street in unpredictable ways.

These sentences struck me as a little too limp. First, everyone knows what happens if AI works and creates the Big Rock Candy Mountain the tech bros will own. That’s okay. Lots of money. No worries. Second, the more likely outcome is [a] rain pours over the sweet treat and it melts gradually or [b] a huge thundercloud perches over the fragile peak and it goes away in a short time. One day a mountain and the next a sticky mess.

How is this possible? The FT states:

Data center construction has become largely reliant on deep-pocketed private credit markets, a rapidly inflating $1.7tn industry that has itself prompted concerns due to steep rises in asset valuations, illiquidity and concentration of borrowers.

The FT does not mention the fact that there may be insufficient power, water, and people to pull off the data center boom. But that’s okay, the FT wants to make clear that “risky lending” seems to be the go-to approach for some of the hopefuls in the AI AI AI hoped-for boom.

What can make the use of financial engineering to do Enron Enron Enron maneuvers more tricky? How about this play:

A number of tech bankers said they had even seen securitization deals on AI debt in recent months, where lenders pool loans and sell slices of them, known as asset-backed securities, to investors. Two bankers estimated these deals currently numbered in the single-digit billions of dollars. These deals spread the risk of the data center loans to a much wider pool of investors, including asset managers and pension funds.

When playing Enron Enron Enron games, the idea is that “special purpose vehicles” or SPVs reduce financial risk. Just create a separate legal entity with its own balance sheet. If the SPV burns up (salute to Enron), the parent company’s assets are in theory protected. Enron’s money people cooked up some chrome trim for their SPVs; for example, just fund the SPVs with Enron stock. What could go wrong? Nothing, unless the stock tanked. It did. Bingo, another big flame out. Great idea as long as the rain clouds did not park over Big Rock Candy Mountain. But the rains came and stayed.

The result is that the use of these financial fancy dance moves suggests that some AI AI AI outfits are learning the steps to the Enron Enron Enron boogie.

Several observations:

- The “think it and it will work” folks in the AI AI AI business have some doubters among their troops.

- The push back about AI leads to wild and crazy policies like those promulgated by Einstein’s old school. See ETH’s AI Policies. These indicate no one is exactly sure what to do with AI.

- Companies like Microsoft are experiencing what might be called post-AI AI AI digital Covid. If the disease spreads, trouble looms until herd immunity kicks in. Time costs money. Sick AI AI AI could be fatal.

Net net: The FT has sent an interesting holiday greeting to the AI AI AI financial engineers. 2026 will be exciting and perhaps a bit stressful for some in my opinion. AI AI AI.

Stephen E Arnold, December 25, 2025

Way More Goofs for Waymo: Power Failure? Humans at Fault!

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I found the stories about Google’s smart Waymo self driving vehicles in the recent San Francisco power failure amusing. Dangerous, yes. A hoot? Absolutely. As its smart software wizards remind us on a PR cadence to make Colgate toothpaste envious, Google is the big dog of smart software. ChatGPT, a loser. Grok, a crazy loser. Chinese open source LLMs. Losers all.

State of the art artificial intelligence makes San Francisco residents celebrate true exceptionalism. Thanks, Venice.ai. Good enough.

I read “Waymo Robotaxis Stop in the Streets during San Francisco Power Outage.” Okay. Google’s Waymo can’t go. Are they electric vehicles suddenly deprived of power? Nope. The smart software did not operate in a way that thrilled riders and motorists when most of San Francisco lost power. The BBC says a very Googley expert said:

"While the Waymo Driver is designed to treat non-functional signals as four-way stops, the sheer scale of the outage led to instances where vehicles remained stationary longer than usual to confirm the state of the affected intersections," a Waymo spokesperson said in a statement provided to the BBC. That "contributed to traffic friction during the height of the congestion," they added.

What other minor issues do the Googley Waymos offer? I noticed the omission of the phrase “out of an abundance of caution.” It is probably out there in some Google quote.

Several observations:

- Google’s wizards will talk about this unlikely event and figure out how to have its cars respond when traffic lights go on the fritz. Will Google be able to fix the problem? Sure. Soon.

- What caused the problem? From Google’s point of view, it was the person responsible for the power failure. Google had nothing to do with that because Google’s Gemini was not autonomously operating the San Francisco power generation system. Someone get on that, please.

- How many years of testing and how many safe miles (except of course for the bodega cat) will have to pass before Google Waymo does what normal humans would do? Pull over. Or head to a cul de sac in Cow Hollow.

Net net: Google and its estimable wizards can overlook some details. Leadership will take action.

As Elon Musk allegedly said:

"Tesla Robotaxis were unaffected by the SF power outage," Musk posted on X, along with a repost of video showing Waymo vehicles stopped at an intersection with down traffic lights as a line of cars honk and attempt to go around them. Musk also reposted a video purportedly showing a Tesla self-driving car navigating an intersection with non-functioning traffic lights.

Does this mean that Grok is better than Gemini?

Stephen E Arnold, December 23, 2025

AI Training: The Great Unknown

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Deloitte used to be an accounting firm. Then the company decided it could do so much more. Normal people ask accountants for their opinions. Deloitte, like many other service firms, decided it could just become a general management consulting firm, an information technology company, a conference and event company, and also do the books.

A professional training program for business professionals at a blue chip consulting firm. One person speaks up, but the others keep their thoughts to themselves. How many are updating their LinkedIn profile? How many are wondering if AI will put them out of a job? How many don’t care because the incentives emphasize selling and upselling engagements? Thanks, Venice.ai. Good enough but you are AI and that’s a mark of excellence for some today.

I read an article that suggests a firm like Deloitte is not able to do much of the self assessment and introspection required to make informed decisions. That shortfall makes surprises part of some firms’ standard operating procedure.

This insight appears in “Deloitte’s CTO on a Stunning AI Transformation Stat: Companies Are Spending 93% on Tech and Only 7% on People.” This headline suggests that Deloitte itself is making this error. [Note: This is a wonky link from my feed system. If it disappears, good luck.]

The write up in Fortune Magazine said:

According to Bill Briggs, Deloitte’s chief technology officer, as we move from AI experimentation to impact/value at scale, that fear is driving a lopsided investment strategy where companies are pouring 93% of their AI budget into technology and only 7% into the people expected to use it.

The question that popped into my mind was, “How much money is Deloitte spending relative to smart software on training its staff in AI?” Perhaps the not-so-surprising MBA type “fact” reflects what some Deloitte professionals realize is happening at the esteemed “we can do it in any business discipline” consulting firm?

The explanation is that “the culture, workflow, and training” of a blue chip consulting firm is not extensive. Now with AI finding its way from word processing to looking up a fact, educating employees about AI is given lip service, but is “training” possible? Remember, please, that some consulting firms want those over 55 to depart to retirement. However, can highly paid experts, whose core competencies are being friendly and word smithing, learn how, when, and when not to rely on smart software? Do these “best of the best” from MBA programs have the ability to learn, or are these people situational thinkers; that is, is the skill to be spontaneously helpful, to connect the dots, and to reframe what a client tells them so it appears sage-like?

The Deloitte expert says:

“This incrementalism is a hard trap to get out of.”

Is Deloitte out of this incrementalism?

The Deloitte expert (apparently not asked the question by the Fortune reporter) says:

As organizations move from “carbon-based” to “silicon-based” employees (meaning a shift from humans to semiconductor chips, or robots), they must establish the equivalent of an HR process for agents, robots, and advanced AI, and complex questions about liability and performance management. This is going to be hard, because it involves complex questions. He brought up the hypothetical of a human creating an agent, and that agent creating five more generations of agents. If wrongdoing occurs from the fifth generation, whose fault is that? “What’s a disciplinary action? You’re gonna put your line robot…in a timeout and force them to do 10 hours of mandatory compliance training?”

I want to point out that blue chip consulting is a soft skill business. The vaunted analytics and other parade float decorations come from Excel, third parties, or recent hires who do the equivalent of college research.

Fortune points to Deloitte and says:

The consequences of ignoring the human side of the equation are already visible in the workforce. According to Deloitte’s TrustID report, released in the third quarter, despite increasing access to GenAI in the workplace, overall usage has actually decreased by 15%. Furthermore, a “shadow AI” problem is emerging: 43% of workers with access to GenAI admit to noncompliance, bypassing employer policies to use unapproved tools. This aligns with previous Fortune reporting on the scourge of shadow AI, as surveys show that workers at up to 90% of companies are using AI tools while hiding that usage from their IT departments. Workers say these unauthorized tools are “easier to access” and “better and more accurate” than the approved corporate solutions. This disconnect has led to a collapse in confidence, with corporate worker trust in GenAI declining by 38% between May and July 2025. The data supports this need for a human-centric approach. Workers who received hands-on AI training and workshops reported 144% higher trust in their employer’s AI than those who did not.

Let’s get back to the question. Is Deloitte training its employees in AI so the “information” sticks and then finds its way into engagements? This passage seems to suggest that the answer is, “No for Deloitte. No for its clients. And no for most organizations.” Judge for yourself:

For Briggs [the Deloitte wizard], the message to the C-suite is clear: The technology is ready, but unless leaders shift their focus to the human and cultural transformation, they risk being left with expensive technology that no one trusts enough to use.

My take is that the blue chip consulting firms are:

- Trying to make AI good enough so headcount and other costs like health care can be reduced

- Selling AI consulting to their clients before knowing what will and won’t work in a context different from the consulting firms’

- Developing an understanding that AI cannot do what humans can do; that is, build relationships and sell engagements.

Sort of a pickle.

Stephen E Arnold, December 23, 2025

How to Get a Job in the Age of AI?

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Two interesting employment related articles appeared in my newsfeeds this morning. Let’s take a quick look at each. I will try to add some humor to these write ups. Some may find them downright gloomy.

The first is “An OpenAI Exec Identifies 3 Jobs on the Cusp of Being Automated.” I want to point out that the OpenAI wizard’s own job seems to be secure from his point of view. The write up points out:

Olivier Godement, the head of product for business products at the ChatGPT maker, shared why he thinks a trio of jobs — in life sciences, customer service, and computer engineering — is on the cusp of automation.

Let’s think about each of these broad categories. I am not sure what life sciences means in OpenAI world. The term is like a giant umbrella. Customer service makes some sense. Companies were trying to ignore, terminate, and prevent any money sucking operation related to answering customers’ questions and complaints for years. No matter how lousy an AI model is, my hunch is that it will be slapped into a customer service role even if it is arguably worse than trying to understand the accent of a person who speaks English as a second or third language.

Young members of “leadership” realize that the AI system used to replace lower-level workers has taken their jobs. Selling crafts on Etsy.com is a career option. Plus, there is politics and maybe Epstein, Epstein, Epstein related careers for some. Thanks, Qwen, you just output a good enough image but you are free at this time (December 13, 2025).

Now we come to computer engineering. I assume the OpenAI person will position himself as an AI adept, which fits under the umbrella of computer engineering. My hunch is that the reference is to coders who do grunt work. The only problem is that the large language model approach to pumping out software can be problematic in some situations. That’s why the OpenAI person is probably not worrying about his job. An informed human has to be in the process of machine-generated code. LLMs do make errors. If the software is autogenerated for one of those newfangled portable nuclear reactors designed to power football field sized data centers, someone will want to have a human check that software. Traditional or next generation nuclear reactors can create some excitement if the software makes errors. Do you want a thorium reactor next to your domicile? What about one run entirely by smart software?

What’s amusing about this write up is that the OpenAI person seems blissfully unaware of the precarious financial situation that Sam AI-Man has created. When and if OpenAI experiences a financial hiccup, will those involved in business products keep their jobs? Olivier might want to consider that eventuality. Some investors are thinking about their options for Sam AI-Man related activities.

The second write up is the type I absolutely get a visceral thrill writing. A person with a connection (probably accidental or tenuous) lets me trot out my favorite trope — Epstein, Epstein, Epstein — as a way to capture the peculiarity of modern America. This article is “Bill Gates Predicts That Only Three Jobs Will Be Safe from Being Replaced by AI.” My immediate assumption upon spotting the article was that the type of work Epstein, Epstein, Epstein did would not be replaced by smart software. I think that impression is accurate, but, alas, the write up did not include Epstein, Epstein, Epstein work in its story.

What are the safe jobs? The write up identifies three:

- Biology. Remember OpenAI thinks life sciences are toast. Okay, which is correct?

- Energy expertise

- Work that requires creative and intuitive thinking. (Do you think that this category embraces Epstein, Epstein, Epstein work? I am not sure.)

The write up includes a statement from Bill Gates:

“You know, like baseball. We won’t want to watch computers play baseball,” he said. “So there’ll be some things that we reserve for ourselves, but in terms of making things and moving things, and growing food, over time, those will be basically solved problems.”

Several observations:

- AI will cause many people to lose their jobs

- Young people will have to make knick knacks to sell on Etsy or find equally creative ways of supporting themselves

- The assumption that people will have “regular” jobs, buy houses, go on vacations, and do the other stuff organization man type thinking assumed was operative, is a goner.

Where’s the humor in this? Epstein, Epstein, Epstein and OpenAI debt, OpenAI debt, and OpenAI debt. Ho ho ho.

Stephen E Arnold, December x, 2025

Telegram News: AlphaTON, About Face

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Starting in January 2026, my team and I will be writing about Telegram’s Cocoon, the firm’s artificial intelligence push. Unlike the “borrow, buy, hype, and promise” approach of some US firms, Telegram is going a different direction. For Telegram, it is early days for smart software. The impact will be that posts in Beyond Search will decrease beginning Christmas week. The new Telegram News posts will be on a different url or service. Our preliminary tests show that a different approach won’t make much difference to the Arnold IT team. Frankly I am not sure how people will find the new service. I will post the links on Beyond Search, but with the exceptional indexing available from Bing, Google, et al, I have zero clue if these services will find our Telegram Notes.

Why am I making this shift?

Here’s one example. With a bit of fancy footwork, a publicly traded company popped into existence a couple of months ago. Telegram itself does not appear to have any connection to this outfit. However, the TON Foundation’s former president set up an outfit called the TON Strategy Co., which is listed on the US NASDAQ. Then following a similar playbook, AlphaTON popped up to provide those who believe in TONcoin a way to invest in a financial firm anchored to TONcoin. Yeah, I know that having these two public companies semi-linked to Telegram’s TON Foundation is interesting.

But even more fascinating is the news story about AlphaTON using some financial fancy dancing to link itself to Anduril. This is one of the companies familiar to those who keep track of certain Silicon Valley outfits generating revenue from Department of War contracts.

What’s the news?

The deal is off, according to “AlphaTON Capital Corp Issues Clarification on Anduril Industries Investment Program.” The word clarification is not one I would have chosen. The deal has vaporized. The write up says:

It has now come to the Company’s attention that the Anduril Industries common stock underlying the economic exposure that was contractually offered to our Company is subject to transfer restrictions and that Anduril will not consent to any such transfer. Due to these material limitations and risk on ownership and transferability, AlphaTON has made the decision to cancel the Anduril tokenized investment program and will not be proceeding with the transaction. The Company remains committed to strategic investments and the tokenization of desirable assets that provide clear ownership rights and align with shareholder value creation objectives.

I interpret this passage to mean, “Fire, Aim, Ready Maybe.”

With the stock of AlphaTON Capital as of December 18, 2025, at about $0.70 at 11:30 am US Eastern, this fancy dancing may end this set with a snappy rendition of Mozart’s Requiem.

That’s why Telegram Notes will be an interesting organization to follow. We think Pavel Durov’s trial in France, the two or maybe one surviving public company, two “foundations” linked to Telegram, and the new Cocoon AI play are going to be more interesting. If Mr. Durov goes to jail, the public company plays fail, and the Cocoon thing dies before it becomes a digital butterfly, I may flow more stories to Beyond Search.

Stay tuned.

Stephen E Arnold, December 22, 2025

Modern Management Method with and without Smart Software

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I enjoy reading and thinking about business case studies. The good ones are few and far between. Most are predictable, almost as if the author was relying on a large language model for help.

“I’m a Tech Lead, and Nobody Listens to Me. What Should I Do?” is an example of a bright human hitting on tactics to become more effective in his job. You can work through the full text of the article and dig out the gems that may apply to you. I want to focus on two points in the write up. The first is the matrix management diagram based on or attributed to Spotify, a music outfit. The second is a method for gaining influence in a modern, let’s go fast company.

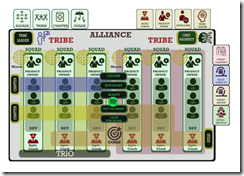

Here’s the diagram that caught my attention:

Instead of the usual business school lingo, you will notice “alliance,” “tribe,” “squad,” and “trio.” I am not sure what these jazzy words mean, but I want to ask you a question, “Looking at this matrix, who is responsible when a problem occurs?” Take your time. I did spend some time looking at this chart, and I formulated several hypotheses:

- The creator wanted to make sure that a member of leadership would have a tough time figuring out who screwed up. If you disagree, that’s okay. I am a dinobaby, and I like those old fashioned flow diagrams with arrows and boxes. In those boxes is the name of the person who has to fix a problem. I don’t know about one’s tribe. I know Problem A is here. Person B is going to fix it. Simple.

- The matrix as displayed allows a lot of people to blame other people. For example, what if the coach is like the leader of the Cleveland Browns, who brilliantly equipped a young quarterback with the incorrect game plan for the first quarter of a football game? Do we blame the coach or do we chase down a product owner? What if the problem is a result of a dependency screw up involving another squad in a different tribe? In practical terms, there is no one with direct responsibility for the problem. Again: Don’t agree? That’s okay.

- The matrix has weird “leadership” or “employment categories” distributed across the X axis at the top of the chart. What’s a chapter? What’s an alliance? What’s self organized and autonomous in a complex technical system? My view is that this is pure baloney designed to make people feel important yet shield any one person from responsibility. I bet some reading this numbered point find my statement out of line. Tough.

The diagram makes clear that the organization is presented as one that will just muddle forward. No one will have responsibility when a problem occurs. No one will know how to fix the problem without dropping other work and reverse engineering what is happening. The chart almost guarantees bafflement when a problem surfaces.

The second item I noticed was this statement or “learning” from the individual who presented the case example. Here’s the passage:

When you solve a real problem and make it visible, people join in. Trust is also built that way, by inviting others to improve what you started and celebrating when they do it better than you.

This passage hooks into the one about solving a problem; to wit:

Helping people debug. I have never considered myself especially smart, but I have always been very systematic when connecting error messages, code, hypotheses, and system behavior. To my surprise, many people saw this as almost magical. It was not magic. It was a mix of experience, fundamentals, intuition, knowing where to look, and not being afraid to dive into third-party library code.

These two passages describe human interactions. Working with others can result in a collective effort greater than the sum of its parts. It is a human manifestation. One fellow described this as interaction efflorescence. Fancy words for what happens when a few people face a deadline and severe consequences for failure.

Why did I spend time pointing out an organizational structure purpose built to prevent assigning responsibility and the very human observations of the case study author?

The answer is, “What will happen when smart software is tossed into this management structure?” First, people will be fired. The matrix will have lots of empty boxes. Second, the human interaction will have to adapt to the smart software. The smart software is not going to adapt to humans. Don’t believe me? One smart software company defended itself by telling a court it is in our terms of service that suicide is not permissible. Therefore, we are not responsible. The dead kid violated the TOS.

How functional will the company be as the very human insight about solving real problems interfaces with software? Man machine interface? Will that be an issue in a go fast outfit? Nope. The human will be excised as a consequence of efficiency.

Stephen E Arnold, December 23, 2025

Windows Strafed by Windows Fanboys: Incredible Flip

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

When the Windows folding phone came out, I remember hunting around for blog posts, podcasts, and videos about this interesting device. Following links I bumbled onto the Windows Central Web site. The two fellows who seemed to be front and center had a podcast (a quite irregularly published podcast I might add). I was amazed at the pro-folding gizmo. One of the write ups was panting with excitement. I thought then and think now that figuring out how to fold a screen is a laboratory exercise, not something destined to be part of my mobile phone experience.

I forgot about Windows Central and the unflagging ability to find something wonderfully bigly about the Softies. Then I followed a link to this story: “Microsoft Has a Problem: Nobody Wants to Buy or Use Its Shoddy AI Products — As Google’s AI Growth Begins to Outpace Copilot Products.”

An athlete failed at his Dos Santos II exercise. The coach, a tough love type, offers the injured gymnast a path forward with Mistral AI. Thanks, Qwen, do you phone home?

The cited write up struck me as a technology aficionado pulling off what is called a Dos Santos II. (If you are not into gymnastics, this exercise “trick” involves starting backward with a half twist into a double front in the layout position.) Boom. Perfect 10. From folding phone to “shoddy AI products.”

If I were curious, I would dig into the reasons for this change in tune, instruments, and concert hall. My hunch is that a new manager replaced a person who was talking (informally, of course) to individuals who provided the information without identifying the source. Reuters, the trust outfit, does this on occasion as do other “real” journalists. I prefer to say, here are my observations or my hypotheses about Topic X. Others just do the “anonymous” and move forward in life.

Here are a couple of snips from the write up that I find notable. These are not quite at the “shoddy AI products” level, but I find them interesting.

Snippet 1:

If there’s one thing that typifies Microsoft under CEO Satya Nadella‘s tenure: it’s a general inability to connect with customers. Microsoft shut down its retail arm quietly over the past few years, closed up shop on mountains of consumer products, while drifting haphazardly from tech fad to tech fad.

I like the idea that Microsoft is not sure what it is doing. Furthermore, I don’t think Microsoft ever connected with its customers. Connections come from the Certified Partners, the media lap dogs fawning at Microsoft CEO antics, and brilliant statements about how many Russian programmers it takes to hack into a Windows product. (Hint: The answer is a couple if the Telegram posts I have read are semi accurate.)

Snippet 2:

With OpenAI’s business model under constant scrutiny and racking up genuinely dangerous levels of debt, it’s become a cascading problem for Microsoft to have tied up layer upon layer of its business in what might end up being something of a lame duck.

My interpretation of this comment is that Microsoft hitched its wagon to one of AI’s Cybertrucks, and the buggy isn’t able to pull the Softie’s one-horse shay. The notion of a “lame duck” is that Microsoft cannot easily extricate itself from the money, the effort, the staff, and the weird “swallow your AI medicine, you fool” approach the estimable company has adopted for Copilot.

Snippet 3:

Microsoft’s “ship it now fix it later” attitude risks giving its AI products an Internet Explorer-like reputation for poor quality, sacrificing the future to more patient, thoughtful companies who spend a little more time polishing first. Microsoft’s strategy for AI seems to revolve around offering cheaper, lower quality products at lower costs (Microsoft Teams, hi), over more expensive higher-quality options its competitors are offering. Whether or not that strategy will work for artificial intelligence, which is exorbitantly expensive to run, remains to be seen.

A less civilized editor would have dropped in the industry buzzword “crapware.” But we are stuck with “ship it now fix it later” or maybe just never. So far we have customer issues, the OpenAI technology as a lame duck, and now the lousy software criticism.

Okay, that’s enough.

The question is, “Why the Dos Santos II at this time?” I think citing the third party “Information” is a convenient technique in blog posts. Heck, Beyond Search uses this method almost exclusively except I position what I do as an abstract with critical commentary.

Let me hypothesize (no anonymous “source” is helping me out):

- A Softie with power annoyed someone at Windows Central, and that person is responding to this perceived injustice.

- The people at Windows Central woke up one day and heard a little voice say, “Your cheerleading is out of step with how others view Microsoft.” The folks at Windows Central listened and, thus, the Dos Santos II.

- Windows Central did what the author of the article states in the article; that is, used multiple AI services each day. The Windows Central professional realized that Copilot was not as helpful writing “real” news as some of the other services.

Which of these is closer to the pin? I have no idea. Today (December 12, 2025) I used Qwen, Anthropic, ChatGPT, and Gemini. I want to tell you that these four services did not provide accurate output.

Windows Central gets a 9.0 for its flooring Microsoft exercise.

Stephen E Arnold, December 19, 2025

Waymo and a Final Woof

December 19, 2025

We’re dog lovers. Canines are the best thing on this green and blue sphere. We were sickened when we read this article in The Register about the death of a bow-wow: “Waymo Chalks Up Another Four-Legged Casualty On San Francisco Streets.”

Waymo is a self-driving car company based in San Francisco. The company unfortunately confirmed that one of its self-driving cars ran over a small, unleashed dog. The vehicle had adults and children in it. The children were crying after hearing the dog’s suffering. The status of the dog is unknown. Waymo wants to locate the dog’s family to offer assistance and veterinary services.

Waymo cars are popular in San Francisco, but…

“Many locals report feeling uneasy about the fleet of white Jaguar I-Paces roaming the city’s roads, although the offering has proven popular with tourists, women seeking safer rides, and parents in need of a quick, convenient way to ferry their children to school. Waymo currently operates in the SF Bay Area, Los Angeles, and Phoenix, and some self-driving rides are available through Uber in Austin and Atlanta.”

Waymo cars also ran over a famous stray cat named Kit Kat, known as the “Mayor of 16th Street.” May these animals rest in peace. Does the Waymo software experience regret? Yeah.

Whitney Grace, December 19, 2025

Mistakes Are Biological. Do Not Worry. Be Happy

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a short summary of a longer paper written by a person named Paul Arnold. I hope this is not misinformation. I am not related to Paul. But this could be a mistake. This dinobaby makes many mistakes.

The article that caught my attention is titled “Misinformation Is an Inevitable Biological Reality Across nature, Researchers Argue.” The short item was edited by a human named Gaby Clark. The short essay was reviewed by Robert Edan. I think the idea is to make clear that nothing in the article is made up and it is not misinformation.

Okay, but…. Let’s look at a couple of short statements from the write up about misinformation. (I don’t want to go “meta” but the possibility exists that the short item is stuffed full of misinformation. What do you think?)

Here’s an image capturing a youngish teacher outputting misinformation to his students. Okay, Qwen. Good enough.

Here’s snippet one:

… there is nothing new about so-called “fake news…”

Okay, does this mean that software that predicts the next word and gets it wrong is part of this old, long-standing trajectory for biological creatures? For me, the idea that algorithms cobbled together get a pass because “there is nothing new about so-called ‘fake news’” shifts the discussion about smart software. Instead of worrying that the smart software gets only about two thirds of questions right, the message is that it is good enough.

A second snippet says:

Working with these [the models Paul Arnold and probably others developed] led the team to conclude that misinformation is a fundamental feature of all biological communication, not a bug, failure, or other pathology.

Introducing the notion of “pathology” adds a bit of context to misinformation. Is a human-assembled smart software system, trained on content that includes misinformation and processed by algorithms that may be biased in some way, just the way the world works? I am not sure I am ready to flash the green light for some of the AI outfits to output what is demonstrably wrong, distorted, weaponized, or non-verifiable.

What puzzled me is that the article points to itself and to an article by Ling Wei Kong et al, “A Brief Natural history of Misinformation” in the Journal of the Royal Society Interface.

Here’s the link to the original article. The authors of the publication are, if the information on the Web instance of the article is accurate, Ling-Wei Kong, Lucas Gallart, Abigail G. Grassick, Jay W. Love, Amlan Nayak, and Andrew M. Hein. Six people worked on the “original” article. The three people identified in the short version worked on that item. This adds up to nine people. Apparently the group believes that misinformation is a part of the biological being. Therefore, there is no cause to worry. In fact, there are mechanisms to deal with misinformation. Obviously a duck quack that sends a couple of hundred mallards aloft can protect the flock. A minimum of one duck needs to check out the threat only to find nothing is visible. That duck heads back to the pond. Maybe others follow? Maybe the duck ends up alone in the pond. The ducks take the viewpoint, “Better safe than sorry.”

But when a system or a mobile device outputs incorrect or weaponized information to a user, there may not be a flock around. If there is a group of people, none of them may be able to identify the incorrect or weaponized information. Thus, the biological propensity to be wrong bumps into an output which may be shaped to cause a particular effect or to alter a human’s way of thinking.

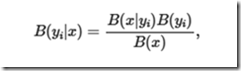

Most people will not sit down and take a close look at this evidence of scientific rigor:

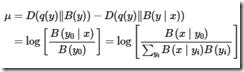

and then follow the logic that leads to:

I am pretty old but it looks as if Mildred Martens, my old math teacher, would suggest the KL divergence wants me to assume some things about q(y). On the right side, I think I see some good old Bayesian stuff but I didn’t see the steps to take me from the KL divergence to the log posterior-to-prior ratio. Would Miss Martens ask a student like me to clarify the transitions, fix up the notation, and eliminate issues between expectation vs. pointwise values? Remember, please, that I am a dinobaby and I could be outputting misinformation about misinformation.
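
For readers who want the missing step spelled out, here is the textbook relationship between a KL divergence and a log posterior-to-prior ratio. This is a sketch under stated assumptions, not the paper’s own derivation. The symbols x (a state of the world), y (a received signal), p(x) (the prior), and p(x | y) (the posterior) are chosen here for illustration; the paper’s q(y) may be defined differently.

```latex
% Assumed notation (not taken from the paper):
%   x        = a state of the world
%   y        = a received signal
%   p(x)     = prior belief about x
%   p(x | y) = posterior belief about x after observing y
D_{\mathrm{KL}}\big(p(x \mid y)\,\|\,p(x)\big)
  = \sum_{x} p(x \mid y)\,\log\frac{p(x \mid y)}{p(x)}
  = \mathbb{E}_{x \sim p(x \mid y)}\!\left[\log\frac{p(x \mid y)}{p(x)}\right]
```

Read this way, the KL divergence is the average of the log posterior-to-prior ratio, with the average taken over the posterior. The ratio for one particular x is a pointwise value and can be negative. Only the average is guaranteed to be zero or greater. That is the expectation vs. pointwise distinction in a nutshell.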

Several observations:

- If one accepts this line of reasoning, misinformation is emergent. It is somehow part of the warp and woof of living and communicating. My take is that one should expect misinformation.

- Anything created by a biological entity will output misinformation. My take on this is that one should expect misinformation everywhere.

- I worry that researchers tackling information, smart software, and related disciplines may work very hard to prove that misinformation is inevitable but the biological organisms can carry on.

I am not sure if I feel comfortable with the normalization of misinformation. As a dinobaby, I think the function of education is to anchor those completing a course of study in a collection of generally agreed upon facts. With misinformation everywhere, why bother?

Net net: One can read this research and the summary article as an explanation of why smart software is just fine. Accept the hallucinations and misstatements. Errors are normal. The ducks are fine. The AI users will be fine. The models will get better. Despite this framing that misinformation is everywhere, the results say, “Knock off the criticism of smart software. You will be fine.”

I am not so sure.

Stephen E Arnold, December 18, 2025