Let Them Eat Cake or Unplug: The AI Big Tech Bro Effect

November 7, 2024

I spotted a news item which will zip right by some people. The “real” news outfit owned by the lovable Jeff Bezos published “As Data Centers for AI Strain the Power Grid, Bills Rise for Everyday Customers.” The write up tries to explain that AI costs for electric power are being passed along to regular folks. Most of these electricity dependent people do not take home paychecks with tens of millions of dollars like the Nadella, Zuckerberg, or Pichai type breadwinners do. Heck, these AI poohbahs think about buying modular nuclear power plants. (I want to point out that these do not exist and may not for many years.)

The article is not going to thrill the professionals who are experts on utility demand and pricing. Those folks know that the smart software poohbahs have royally screwed up some weekends and vacations for the foreseeable future.

The WaPo article (presumably blessed by St. Jeffrey) says:

The facilities’ extraordinary demand for electricity to power and cool computers inside can drive up the price local utilities pay for energy and require significant improvements to electric grid transmission systems. As a result, costs have already begun going up for customers — or are about to in the near future, according to utility planning documents and energy industry analysts. Some regulators are concerned that the tech companies aren’t paying their fair share, while leaving customers from homeowners to small businesses on the hook.

Okay, typical “real” journospeak. “Costs have already begun going up for customers.” Hey, no kidding. The big AI parade began with the January 2023 announcement that the Softies were going whole hog on AI. The lovable Google immediately flipped into alert mode. I can visualize flashing yellow LEDs and faux red stop lights blinking in the gray corridors in Shoreline Drive facilities if there are people in those offices again. Yeah, ghostly blinking.

The write up points out, rather unsurprisingly:

The tech firms and several of the power companies serving them strongly deny they are burdening others. They say higher utility bills are paying for overdue improvements to the power grid that benefit all customers.

Who wants PEPCO and VEPCO to kill their service? Actually, no one. Imagine life in NoVa, DC, and the ever lovely Maryland without power. Yikes.

From my point of view, informed by some exposure to the utility sector at a nuclear consulting firm and then at a blue chip consulting outfit, here’s the scoop.

The demand planning done with rigor by US utilities took a hit each time the Big Dogs of AI brought more specialized, power hungry servers online and, here’s the killer, folks, left them on. The way power consumption used to work is that during the day, consumer usage would fall and business/industry usage would rise. The power hogging steel industry was a 24×7 outfit. But over the last 40 years, manufacturing has wound down and consumer demand crept upwards. The curves had to be plotted and the demand projected, but, in general, life was not too crazy for the US power generation industry. Sure, there were the costs associated with decommissioning “old” nuclear plants and expanding new non-nuclear facilities with expensive but manageable environmental gewgaws, gadgets, and gizmos plugged in to save the snail darters and the frogs.

Since January 2023, demand has been curving upwards. Power generation outfits don’t want to miss out on revenue. Therefore, some utilities have worked out what I would call sweetheart deals for electricity for AI-centric data centers. Some of these puppies suck more power in a day than a dying city located in Flyover Country in Illinois.

Plus, these data centers are not enough. Each quarter the big AI dogs explain that more billions will be pumped into AI data centers. Keep in mind: These puppies run 24×7. The AI wolves have worked out discount rates.

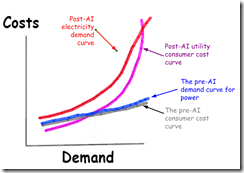

What do the US power utilities do? First, the models have to be reworked. Second, the relationships to trade, buy, or “borrow” power have to be refined. Third, capacity has to be added. Fourth, the utility rate people create a consumer pricing graph which may look like this:

Guess who will pay? Yep, consumers.

The red line is the prediction for post-AI electricity demand. For comparison, the blue line shows the demand curve before Microsoft ignited the AI wars. Note that the gray line is consumer cost or the monthly electricity bill for Bob and Mary Normcore. The nuclear purple line shows what is happening and will continue to happen to consumer electricity costs. The red line is the projected power demand for the AI big dogs.

The graph shows that the cost will be passed to consumers. Why? The sweetheart deals to get the Big Dog power generation contracts mean guaranteed cash flow and a hurdle for a low-ball utility to lumber over. Utilities like power generation are not the Neon Deions of American business.

There will be hand waving by regulators. Some city government types will argue, “We need the data centers.” Podcasts and posts on social media will sprout like weeds in an untended field.

Net net: Bob and Mary Normcore may have to decide between food and electricity. AI is wonderful, right?

Stephen E Arnold, November 7, 2024

Dreaming about Enterprise Search: Hope Springs Eternal…

November 6, 2024

The post is the work of a humanoid who happens to be a dinobaby. GenX, Y, and Z, read at your own risk. If art is included, smart software produces these banal images.

Enterprise search is back, baby. The marketing lingo is very year 2003, however. The jargon has been updated, but the story is the same: We can make an organization’s information accessible. Instead of Autonomy’s Neurolinguistic Programming, we have AI. Instead of “just text,” we have video content processed. Instead of filters, we have access to cloud-stored data.

An executive knows he can crack the problem of finding information instantly. The problem is doing it so that the time and cost of data clean up does not cost more than buying the Empire State Building. Thanks, Stable Diffusion. Good enough.

A good example of the current approach to selling the utility of an enterprise search and retrieval system is the article / interview in Betanews called “How AI Is Set to Democratize Information.” I want to be upfront. I am mostly aligned with the analysis of information and knowledge presented by Taichi Sakaiya. His The Knowledge Value Revolution or a History of the Future has been a useful work for me since the early 1990s. I was in Osaka, Japan, lecturing at the Kansai Institute of Technology when I learned of this book from my gracious hosts and the Managing Director of Kinokuniya (my sponsor). Devaluing knowledge by regressing to the fat part of a Gaussian distribution is not something about which I am excited.

However, the senior manager of Pyron (Raleigh, North Carolina), an AI-powered information retrieval company, finds the concept in line with what his firm’s technology provides to its customers. The article includes this statement:

The concept of AI as a ‘knowledge cloud’ is directly tied to information access and organizational intelligence. It’s essentially an interconnected network of systems of records forming a centralized repository of insights and lessons learned, accessible to individuals and organizations.

The benefit is, according to the Pyron executive:

By breaking down barriers to knowledge, the AI knowledge cloud could eliminate the need for specialized expertise to interpret complex information, providing instant access to a wide range of topics and fields.

The article introduces a fresh spin on the problems of information in organizations:

Knowledge friction is a pervasive issue in modern enterprises, stemming from the lack of an accessible and unified source of information. Historically, organizations have never had a singular repository for all their knowledge and data, akin to libraries in academic or civic communities. Instead, enterprise knowledge is scattered across numerous platforms and systems — each managed by different vendors, operating in silos.

Pyron opened its doors in 2017. After seven years, the company is presenting a vision of what access to enterprise information could, would, and probably should do.

The reality, based on my experience, is different. I am not talking about Pyron now. I am discussing the re-emergence of enterprise search as the killer application for bolting artificial intelligence to information retrieval. If you are in love with AI systems from oligopolists, you may want to stop scanning this blog post. I do not want to be responsible for a stroke or an esophageal spasm. Here we go:

- Silos of information are an emergent phenomenon. Knowledge has value. Few want to make their information available without some value returning to them. Therefore, one can talk about breaking silos and democratization, but those silos will be erected and protected. Secret skunk works, mislabeled projects, and squirreling away knowledge nuggets for a winter’s day. In the case of Senator Everett Dirksen, the information was used to get certain items prioritized. That’s why there is a building named after him.

- The “value” of information or knowledge depends on another person’s need. A database which contains the antidote to save a child from a household poisoning costs money to access. Why? Desperate people will pay. The “information wants to be free” idea is not one that makes sense to those with information and the knowledge to derive value from what another finds inscrutable. I am not sure that “democratizing information” meshes smoothly with my view.

- Enterprise search, with or without AI, hits some cost and time problems, a small number of which have been problems for more than 50 years. SMART failed, STAIRS III failed, and the hundreds of followers have failed. Content is messy. The idea that one can process text, spreadsheets, Word files, and email is one thing. Doing it without skipping wonky files or incurring the time and cost of repurposing data remains difficult. Chemical companies deal with formulae; nuclear engineering firms deal with records management and mathematics; and consulting companies deal with highly paid people who lock up their information on a personal laptop. Without these little puddles of information, the “answer” or the “search output” will not be just a hallucination. The answer may be dead wrong.

I understand the need to whip up jargon like “democratize information”, “knowledge friction”, and “RAG frameworks”. The problem is that despite the words, delivering accurate, verifiable, timely on-point search results in response to a query is a difficult problem.

Maybe one of the monopolies will crack the problem. But most of the output is a glimpse of what may be coming in the future. When will the future arrive? Probably when the next PR or marketing write up about search appears. As I have said numerous times, I find it more difficult to locate the information I need than at any time in my more than half a century in online information retrieval.

What’s easy is recycling marketing literature from companies who were far better at describing a “to be” system, not a “here and now” system.

Stephen E Arnold, November 4, 2024

Twenty Five Percent of How Much, Google?

November 6, 2024

The post is the work of a humanoid who happens to be a dinobaby. GenX, Y, and Z, read at your own risk. If art is included, smart software produces these banal images.

I read the encomia to Google’s quarterly report. In a nutshell, everything is coming up roses even the hyperbole. One news hook which has snagged some “real” news professionals is that “more than a quarter of new code at Google is generated by AI.” The exclamation point is implicit. Google’s AI PR is different from some other firms; for example, Samsung blames its financial performance disappointments on some AI. Winners and losers in a game in which some think the oligopolies are automatic winners.

An AI believer sees the future which is arriving “soon, real soon.” Thanks, You.com. Good enough because I don’t have the energy to work around your guard rails.

The question is, “How much code and technical debt does Google have after a quarter century of its court-described monopolistic behavior?” Oh, that number is unknown. How many current Google engineers fool around with that legacy code? Oh, that number is unknown and probably for very good reasons. The old crowd of wizards has been hit with retirement, cashing in and cashing out, and “leadership” nervous about fiddling with some processes that are “good enough.” But 25 years. No worries.

The big news is that 25 percent of “new” code is written by smart software and then checked by the current and wizardly professionals. How much “new” code is written each year for the last three years? What percentage of the total Google code base is “new” in the years between 2021 and 2024? My hunch is that “new” is relative. I also surmise that smart software doing 25 percent of the work is one of those PR and Wall Street targeted assertions specifically designed to make the Google stock go up. And it worked.

However, I noted this Washington Post article: “Meet the Super Users Who Tap AI to Get Ahead at Work.” Buried in that mostly rah rah AI “real” news article, which ran coincident with Google’s AI spinning quarterly reports, is one interesting comment:

Adoption of AI at work is still relatively nascent. About 67 percent of workers say they never use AI for their jobs compared to 4 percent who say they use it daily, according to a recent survey by Gallup.

One can interpret this as saying, “Imagine the growth that is coming from reduced costs. Get rid of most coders and just use Google’s and other firms’ smart programming tools.”

Another interpretation is, “The actual use is much less robust than the AI hyperbole machine suggests.”

Which is it?

Several observations:

- Many people want AI to pump some life into the economic fuel tank. By golly, AI is going to be the next big thing. I agree, but I think the Gallup data indicates that the go go view is like looking at a field of corn from a crop duster zipping along at 1,000 feet. The perspective from the airplane is different from the person walking amidst the stalks.

- Google-type assertions about how much machine-generated code is in the Google mix sound good, but where are the data? Google, aren’t you data driven? So, where’s the back up data for the 25 percent assertion?

- Smart software seems to be something that is expensive and requires dreams of small nuclear reactors next to a data center adjacent to a hospital. Yeah, maybe once the impact statements, the nuclear waste, and the skilled worker issues have been addressed. Soon as measured in environmental impact statement time which is different from quarterly report time.

Net net: Google desperately wants to be the winner in smart software. The company is suggesting that if it were broken apart by crazed government officials, smart software would die. Insert the exclamation mark. Maybe two or three. That’s unlikely. The blurring of “as is” with “to be” is interesting and misleading.

Stephen E Arnold, November 6, 2024

Hey, US Government, Listen Up. Now!

November 5, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

Microsoft on the Issues published “AI for Startups.” The write up is authored by a dream team of individuals deeply concerned about the welfare of their stakeholders, themselves, and their corporate interests. The sensitivity is on display. Who wrote the 1,400 word essay? Setting aside the lawyers, PR people, and advisors, the authors are:

- Satya Nadella, Chairman and CEO, Microsoft

- Brad Smith, Vice-Chair and President, Microsoft

- Marc Andreessen, Cofounder and General Partner, Andreessen Horowitz

- Ben Horowitz, Cofounder and General Partner, Andreessen Horowitz

Let me highlight a couple of passages from the essay (polemic?) which I found interesting.

In the era of trustbusters, some of the captains of industry had firm ideas about the place government professionals should occupy. Look at the railroads. Look at cyber security. Look at the folks living under expressway overpasses. Tumultuous times? That’s on the money. Thanks, MidJourney. A good enough illustration.

Here’s the first snippet:

Artificial intelligence is the most consequential innovation we have seen in a generation, with the transformative power to address society’s most complex problems and create a whole new economy—much like what we saw with the advent of the printing press, electricity, and the internet.

This is a bold statement of the thesis for these intellectual captains of the smart software revolution. I am curious about how one gets from hallucinating software to “the transformative power to address society’s most complex problems and create a whole new economy.” Furthermore, is smart software like printing, electricity, and the Internet? A fact or two might be appropriate. Heck, I would be happy with a nifty Excel chart of some supporting data. But why? This is the first sentence, so back off, you ignorant dinobaby.

The second snippet is:

Ensuring that companies large and small have a seat at the table will better serve the public and will accelerate American innovation. We offer the following policy ideas for AI startups so they can thrive, collaborate, and compete.

Ah, companies large and small and a seat at the table, just possibly down the hall from where the real meetings take place behind closed doors. And the hosts of the real meeting? Big companies like us. As the essay says, “that only a Big Tech company with our scope and size can afford, creating a platform that is affordable and easily accessible to everyone, including startups and small firms.”

The policy “opportunity” for AI startups includes many glittering generalities. The one I like is “help people thrive in an AI-enabled world.” Does that mean universal basic income as smart software “enhances” jobs with McKinsey-like efficiency? Hey, it worked for opioids. It will work for AI.

And what’s a policy statement without a variation on “May you live in interesting times”? The Microsoft a2z twist is, “We obviously live in a tumultuous time.” That’s why the US Department of Justice, the European Union, and a few other Luddites who don’t grok certain behaviors are interested in the big firms which can do smart software right.

Translation: Get out of our way and leave us alone.

Stephen E Arnold, November 5, 2024

How to Cut Podcasts Costs and Hassles: A UK Example

November 5, 2024

Using AI to replicate a particular human is a fraught topic. Of paramount concern is the relentless issue of deepfakes. There are also legal issues of control over one’s likeness, of course, and concerns the technology could put humans out of work. It is against this backdrop, the BBC reports, that “Michael Parkinson’s Son Defends New AI Podcast.” The new podcast uses AI to recreate the late British talk show host, who will soon interview (human) guests. Son Mike acknowledges the concerns, but insists this project is different. Writer Steven McIntosh explains:

“Mike Parkinson said Deep Fusion’s co-creators Ben Field and Jamie Anderson ‘are 100% very ethical in their approach towards it, they are very aware of the legal and ethical issues, and they will not try to pass this off as real’. Recalling how the podcast was developed, Parkinson said: ‘Before he died, we [my father and I] talked about doing a podcast, and unfortunately he passed away before it came true, which is where Deep Fusion came in. ‘I came to them and said, ‘if we wanted to do this podcast with my father talking about his archive, is it possible?’, and they said ‘it’s more than possible, we think we can do something more’. He added his father ‘would have been fascinated’ by the project, although noted the broadcaster himself was a ‘technophobe’. Discussing the new AI version of his father, Parkinson said: ‘It’s extraordinary what they’ve achieved, because I didn’t really think it was going to be as accurate as that.’”

So they have the family’s buy-in, and they are making it very clear the host is remade with algorithms. The show is called “Virtually Parkinson,” after all. But there is still that replacing human talent with AI thing. Deep Fusion’s Anderson notes that, since Parkinson is deceased, he is in no danger of losing work. However, McIntosh counters, any guest that appears on this show may give one fewer interview to a show hosted by a different, living person. Good point.

One thing noteworthy about Deep Fusion’s AI on this project is its ability to not just put words in Parkinson’s mouth, but to predict how he would have actually responded. Assuming that function is accurate, we have a request: Please bring back the objective reporting of Walter Cronkite. This world sorely needs it.

Cynthia Murrell, November 5, 2024

Enter the Dragon: America Is Unhealthy

November 4, 2024

Written by a humanoid dinobaby. No AI except the illustration.

The YouTube video “A Genius Girl Who Is Passionate about Repairing Machines” presents a simple story in a 38 minute video. The idea is that a young woman with no help fixes a broken motorcycle with basic hand tools outside in what looks like a hoarder’s backyard. The message is: Wow, she is smart and capable. Don’t you wish you knew a person like this who could repair your broken motorcycle?

This video is from @vutvtgamming and not much information is provided. After watching this and similar videos like “Genius Girl Restored The 280mm Lathe From 50 Years Ago And Made It Look Like”, I feel pretty stupid for an American dinobaby. I don’t think I can recall meeting a person with similar mechanical skills when I worked at Keystone Steel, Halliburton Nuclear, or Booz, Allen & Hamilton’s Design & Development division. The message I carried away was: I was stupid as were many people with whom I associated.

Thanks, MSFT Copilot. Good enough. (I slipped a put down through your filters. Imagine that!)

I picked up a similar vibe when I read “Today’s AI Ecosystem Is Unsustainable for Most Everyone But Nvidia, Warns Top Scholar.” On the surface, the ZDNet write up is an interview with the “scholar” Kai-Fu Lee, who, according to the article:

served as founding director of Microsoft Research Asia before working at Google and Apple, founded his current company, Sinovation Ventures, to fund startups such as 01.AI, which makes a generative AI search engine called BeaGo.

I am not sure how “scholar” correlates with commercial work for US companies and running an investment firm with a keen interest in Chinese start ups. I would not use the word “scholar.” My hunch is that the intent of Kai-Fu Lee is to present as simple and obvious something that US companies don’t understand. The interview is a different approach to explaining how advanced Kai-Fu Lee’s expertise is. He is, via this interview, sharing an opinion that the US is creating a problem and overlooking the simple solution. Just like the young woman able to repair a motorcycle or the lass fixing up a broken industrial lathe alone, the American approach does not get the job done.

What does ZDNet present as Kai-Fu Lee’s message? Here are a couple of examples:

“The ecosystem is incredibly unhealthy,” said Kai-Fu Lee in a private discussion forum earlier this month. Lee was referring to the profit disparity between, on the one hand, makers of AI infrastructure, including Nvidia and Google, and, on the other hand, the application developers and companies that are supposed to use AI to reinvent their operations.

Interesting. I wonder if the “healthy” ecosystem might be China’s approach of pragmatism and nuts-and-bolts evidenced in the referenced videos. The unhealthy versus healthy is a not-so-subtle message about digging one’s own grave in my opinion. The “economics” of AI are unhealthy, which seems to say, “America’s approach to smart software is going to kill it. A more healthy approach is the one in which government and business work to create applications.” Translating: China, healthy; America, sick as a dog.

Here’s another statement:

Today’s AI ecosystem, according to Lee, consists of Nvidia, and, to a lesser extent, other chip makers such as Intel and Advanced Micro Devices. Collectively, the chip makers rake in $75 billion in annual chip sales from AI processing. “The infrastructure is making $10 billion, and apps, $5 billion,” said Lee. “If we continue in this inverse pyramid, it’s going to be a problem,” he said.

Who will flip the pyramid? Uganda, Lao PDR, Greece? Nope, nope, nope. The flip will take an outfit with a strong mind and body. A healthy entity is needed to flip the pyramid. I wonder if that strong entity is China.

Here’s the Kai-Fu kung fu move:

He recommended that companies build their own vertically integrated tech stack the way Apple did with the iPhone, in order to dramatically lower the cost of generative AI. Lee’s striking assertion is that the most successful companies will be those that build most of the generative AI components — including the chips — themselves, rather than relying on Nvidia. He cited how Apple’s Steve Jobs pushed his teams to build all the parts of the iPhone, rather than waiting for technology to come down in price.

In the write up Kai-Fu Lee refers to “we”. Who is included in that we? Excluded will be the “unhealthy.” Who is left? I would suggest that the pragmatic and application focused will be the winners. The reason? The “we” includes the healthy entities. Once again I am thinking of China’s approach to smart software.

What’s the correct outcome? Kai-Fu Lee allegedly said:

What should result, he said, is “a smaller, leaner group of leaders who are not just hiring people to solve problems, but delegating to smart enterprise AI for particular functions — that’s when this will make the biggest deal.”

That sounds like the Chinese approach to a number of technical, social, and political challenges. Healthy? Absolutely.

Several observations:

- I wonder if ZDNet checked on the background of the “scholar” interviewed at length?

- Did ZDNet think about the “healthy” versus “unhealthy” theme in the write up?

- Did ZDNet question the “scholar’s” purpose in explaining what’s wrong with the US approach to smart software?

I think I know the answer. The ZDNet outfit and the creators of this unusual private interview believe that the young women rebuilt complicated devices without any assistance. Smart China; dumb America. I understand the message which seems to have not been internalized by ZDNet. But I am a dumb dinobaby. What do I know? Exactly. Unhealthy that American approach to AI.

Stephen E Arnold, October 30, 2024

Great Moments in Marketing: MSFT Copilot, the Salesforce Take

November 1, 2024

A humanoid wrote this essay. I tried to get MSFT Copilot to work, but it remains dead. That makes four days with weird messages about a glitch. That’s the standard: Good enough.

It’s not often I get a kick out of comments from myth-making billionaires. I read through the boy-wonder-to-company-founder interview titled “An Interview with Salesforce CEO Marc Benioff about AI Abundance.” No paywall on this essay, unlike the New York Times’ downer about smart software which appears to have played a part in a teen’s suicide. Imagine when Perplexity can control a person’s computer. What exciting stories will appear. Here’s an example of what may be more common in 2025.

Great moments in Salesforce marketing. A senior Agentforce executive considers great marketing and brand ideas of the past. Inspiration strikes. In 2024, he will make fun of Clippy. Yes, a 1995 reference will resonate with young deciders in 2024. Thanks, Stable Diffusion. You are working; MSFT Copilot is not.

The focus today is a single statement in this interview with the big dog of Salesforce. Here’s the quote:

Well, I guess it wasn’t the AGI that we were expecting because I think that there has been a level of sell, including Microsoft Copilot, this thing is a complete disaster. It’s like, what is this thing on my computer? I don’t even understand why Microsoft is saying that Copilot is their vision of how you’re going to transform your company with AI, and you are going to become more productive. You’re going to augment your employees, you’re going to lower your cost, improve your customer relationships, and fundamentally expand all your KPIs with Copilot. I would say, “No, Copilot is the new Clippy”, I’m even playing with a paperclip right now.

Let’s think about this series of references and assertions.

First, there is the direct statement “Microsoft Copilot, this thing is a complete disaster.” Let’s assume the big dog of Salesforce is right. The large and much loved company (yes, I am speaking about Microsoft) rolled out a number of implementations, applications, and assertions. The firm caught everyone’s favorite Web search engine with its figurative pants down like a hapless Russian trooper about to be dispatched by a Ukrainian drone equipped with a variant of RTX. (That stuff goes bang.) Microsoft “won” a marketing battle and gained the advantage of time. Google with its Sundar & Prabhakar Comedy Act created an audience. Microsoft seized the opportunity to talk to the audience. The audience applauded. Whether the technology worked, in my opinion, was secondary. Microsoft wanted to be seen as the jazzy leader.

Second, the idea of a disaster is interesting. Since Microsoft relied on what may be the world’s weirdest organizational set up and supported the crumbling structure, other companies have created smart software which surfs on Google’s transformer ideas. Microsoft did not create a disaster; it had not done anything of note in the smart software world. Microsoft is a marketer. The technology is a second class citizen. The disaster is that Microsoft’s marketing seems to be out of sync with what the PowerPoint decks say. So what’s new? The answer is, “Nothing.” The problem is that some people don’t see Microsoft’s smart software as a disaster. One example is Palantir, which is Microsoft’s new best friend. The US government cannot rely on Microsoft enough. Those contract renewals keep on rolling. Furthermore the “certified” partners could not be more thrilled. Virtually every customer and prospect wants to do something with AI. When the blind lead the blind, a person with really bad eyesight has an advantage. That’s Microsoft. Like it or not.

Third, the pitch about “transforming your company” is baloney. But it sounds good. It helps a company do something “new” but within the really familiar confines of Microsoft software. In the good old days, it was IBM that provided the cover for doing something, anything, which could produce a marketing opportunity or a way to add a bit of pizazz to a 1955 Chevrolet two door 210 sedan. Thus, whether the AI works or does not work, one must not lose sight of the fact that Microsoft centric outfits are going to go with Microsoft because most professionals need PowerPoint and the bean counters do not understand anything except Excel. What strikes me as important is that Microsoft can use modest, even inept smart software, and come out a winner. Who is complaining? The Fortune 1000, the US Federal government, the legions of MBA students who cannot do a class project without Excel, PowerPoint, and Word?

Finally, the ultimate reference in the quote is Clippy. Personally I think the big dog at Salesforce should have invoked both Bob and Clippy. Regardless of the “joke” hooked to these somewhat flawed concepts, the names “Bob” and “Clippy” have resonance. Bob rolled out in 1995. Clippy helped so many people beginning in the same year. Decades later Microsoft’s really odd software is going to cause a 20 something who was not yet born then to turn away from Microsoft products and services? Nope.

Let’s sum up: Salesforce is working hard to get a marketing lift by making Microsoft look stupid. Believe me. Microsoft does not need any help. Perhaps the big dog should come up with a marketing approach that replicates or comes close to what Microsoft pulled off in 2023. Google still hasn’t recovered fully from that kung fu blow.

The big dog needs to up its marketing game. Say Salesforce and what’s the reaction? Maybe meh.

Stephen E Arnold, November 1, 2024

Surprise: Those Who Have Money Keep It and Work to Get More

October 29, 2024

Written by a humanoid dinobaby. No AI except the illustration.

The Economist (a newspaper, not a magazine) published “Have McKinsey and Its Consulting Rivals Got Too Big?” Big is where the money is. Small consultants can survive but a tight market, outfits like Gerson Lehrman, and AI outputters of baloney like ChatGPT mean trouble in service land.

A next generation blue chip consultant produces confidential and secret reports quickly and at a fraction of the cost of a blue chip firm’s team of highly motivated but mostly inexperienced college graduates. Thanks, OpenAI, close enough.

The write up says:

Clients grappling with inflation and economic uncertainty have cut back on splashy consulting projects. A dearth of mergers and acquisitions has led to a slump in demand for support with due diligence and company integrations.

Yikes. What outfits will employ MBAs expecting $180,000 per year to apply PowerPoint and Excel skills to organizations eager for charts, dot points, and the certainty only 24 year olds have? Apparently fewer than before Covid.

How does the Economist know that consulting outfits face headwinds? Here’s an example:

Bain and Deloitte have paid some graduates to delay their start dates. Newbie consultants at a number of firms complain that there is too little work to go around, stunting their career prospects. Lay-offs, typically rare in consulting, have become widespread.

Consulting firms have chased projects in China but that money machine is sputtering. The MBA crowd has found the Middle East a source of big money jobs. But the Economist points out:

In February the bosses of BCG, McKinsey and Teneo, a smaller consultancy, along with Michael Klein, a dealmaker, were hauled before a congressional committee in Washington after failing to hand over details of their work for Saudi Arabia’s Public Investment Fund.

The firms’ response was, “Staff could be imprisoned…” (Too bad the opioid crisis folks’ admissions did not result in such harsh consequences.)

Outfits like Deloitte are now into cyber security with acquisitions like Terbium Labs. Others are in the “reskilling” game, teaching their consultants about AI. The idea is that those pollinated type A’s will teach the firms’ clients just what they need to know about smart software. Some MBAs have history majors and an MBA in social media. I wonder how that will work out.

The write up concludes:

The quicker corporate clients become comfortable with chatbots, the faster they may simply go directly to their makers in Silicon Valley. If that happens, the great eight’s short-term gains from AI could lead them towards irrelevance.

Wow, irrelevance. I disagree. I think that school relationships and the networks formed by young people in graduate school will produce service work. A young MBA whose mother or father is wired in will be valuable to the blue chip outfits in the future.

My take on the next 24 months is:

- Clients will hire employees who use smart software and can output reports with the help of whatever AI tools get hyped on LinkedIn.

- The blue chip outfits will get smaller and go back to their carpeted havens and cook up some crises or trends that other companies with money absolutely have to know about.

- Consulting firms will do the start up play. The failure rate will be interesting to calculate. Consultants are not entrepreneurs, but with connections the advice givers can tap their contacts for some tailwind.

I have worked at a blue chip outfit. I have done some special projects for outfits trying to become blue chip outfits. My dinobaby point of view boils down to seeing the Great Eight becoming the Surviving Six and then the end game, the Tormenting Two.

What picks up the slack? Smart software. Today’s systems generate the same type of normalized pablum many consulting firms provide. Note to MBAs: There will be jobs available for individuals who know how to perform Search GEO (generative engine optimization).

Stephen E Arnold, October 29, 2024

That AI Technology Is Great for Some Teens

October 29, 2024

The New York Times ran and seemed to sensationalize a story about a young person who formed an emotional relationship with AI from Character.ai. I personally like the Independent’s story “The Disturbing Messages Shared between AI Chatbot and Teen Who Took His Own Life,” which was redisplayed on the estimable MSN.com. If the link is dead, please, don’t write Beyond Search. Contact those ever responsible folks at Microsoft. The British “real” news outfit said:

Sewell [the teen] had started using Character.AI in April 2023, shortly after he turned 14. In the months that followed, the teen became “noticeably withdrawn,” withdrew from school and extracurriculars, and started spending more and more time online. His time on Character.AI grew to a “harmful dependency,” the suit states.

Let’s shift gears. The larger issue is that social media has changed the way humans interact with each other and smart software. The British are concerned. For instance, the BBC delves into how social media has changed human interaction: “How Have Social Media Algorithms Changed The Way We Interact?”

Social media algorithms are fifteen years old. Facebook unleashed the first in 2009 and the world changed. The biggest problems associated with social media algorithms are addiction and excess. Teenagers and kids are the populations most affected by social media and adults want to curb their screen time. Global governments are stepping up to enforce rules on social media.

The US could ban TikTok if the Chinese parent company doesn’t sell it. The UK implemented a new online safety act for content moderation, while the EU outlined new rules for tech companies. The rules will fine them 6% of turnover and suspend them if they don’t prevent election interference. Meanwhile Brazil banned X for a moment until the company agreed to have a legal representative in the country and blocked accounts that questioned the legitimacy of the country’s last election.

While the regulation laws pose logical arguments, they also limit free speech. Regulating the Internet could tip the scale from anarchy to authoritarianism:

“Adam Candeub is a law professor and a former advisor to President Trump, who describes himself as a free speech absolutist. Social media is ‘polarizing, it’s fractious, it’s rude, it’s not elevating – I think it’s a terrible way to have public discourse,’ he tells the BBC. ‘But the alternative, which I think a lot of governments are pushing for, is to make it an instrument of social and political control and I find that horrible.’ Professor Candeub believes that, unless ‘there is a clear and present danger’ posed by the content, ‘the best approach is for a marketplace of ideas and openness towards different points of view.’”

When Musk purchased X, he compared it to a “digital town square.” Social media, however, isn’t like a town square because the algorithms rank and deliver content based on what eyeballs want to see. There isn’t fair and free competition of ideas. The smart algorithms shape free speech based on what users want to see and what will make money.

So where are we? Headed to the grave yard?

Whitney Grace, October 29, 2024

Fake Defined? Next Up Trust, Ethics, and Truth

October 28, 2024

Another post from a dinobaby. No smart software required except for the illustration.

This is a snappy headline: “You Can Now Get Fined $51,744 for Writing a Fake Review Online.” The write up states:

This mandate includes AI-generated reviews (which have recently invaded Amazon) and also encompasses dishonest celebrity endorsements as well as testimonials posted by a company’s employees, relatives, or friends, unless they include an explicit disclaimer. The rule also prohibits brands from offering any sort of incentive to prompt such an action. Suppressing negative reviews is no longer allowed, nor is promoting reviews that a company knows or should know are fake.

So, what does “fake” mean? The word appears more than 160 times in the US government document.

My hunch is that the intrepid US Federal government does not want companies to hype their products with “fake” reviews. But I don’t see a definition of “fake.” On page 10 of the government document “Use of Consumer Reviews”, I noted:

“…the deceptive or unfair commercial acts or practices involving reviews or other endorsement.”

That’s a definition of sorts. Other words getting at what I would call a definition are:

- buying reviews (these can be non fake or fake it seems)

- deceptive

- false

- manipulated

- misleading

- unfair

On page 23 of the government document, A. 465. – Definitions appears. Alas, the word “fake” is not defined.

The document is 163 pages long and strikes me as a summary of standard public relations, marketing, content marketing, and social media practices. Toss in smart software and Telegram-type BotFather capability and one has described the information environment which buzzes, zaps, and swirls 24×7 around anyone with access to any type of electronic communication / receiving device.

Look what You.com generated. A high school instructor teaching a debate class about a foundational principle.

On page 119, the authors of the government document arrive at a key question, apparently raised by some of the individuals sufficiently informed to ask “killer” questions; for example:

Several commenters raised concerns about the meaning of the term “fake” in the context of indicators of social media influence. A trade association asked, “Does ‘fake’ only mean that the likes and followers were created by bots or through fake accounts? If a social media influencer were to recommend that their followers also follow another business’ social media account, would that also be ‘procuring’ of ‘fake’ indicators of social media influence? . . . If the FTC means to capture a specific category of ‘likes,’ ‘follows,’ or other metrics that do not reflect any real opinions, findings, or experiences with the marketer or its products or services, it should make that intention more clear.”

Alas, no definition is provided. “Fake” exists in a cloud of unknowing.

What if the US government prosecutors find themselves in the position of a luminary who allegedly said: “Porn. I know it when I see it.” That posture might be more acceptable than trying to explain that an artificial intelligence content generator produced a generic negative review of an Italian restaurant. A competitor uses the output via a messaging service like Telegram Messenger and creates a script to plug in the name, location, and date for 1,000 Italian restaurants. The individual then lets the script rip. When investigators look into this defamation of Italian restaurants, the trail leads back to a virtual asset service provider crime as a service operation in Lao PDR. The owner of that enterprise resides in Cambodia and has multiple cyber operations supporting the industrialized crime as a service operation. Okay, then what?

In this example, “fake” becomes secondary to a problem as large or larger than bogus reviews on US social media sites.

What’s being done when actual criminal enterprises are involved in “fake” related work? According to the United Nations, in certain nation states, law enforcement is hampered and in some cases prevented from pursuing a bad actor.

Several observations:

- As most high school debaters learn on Day One of class: Define your terms. Present these in plain English, not a series of anecdotes and opinions.

- Keep the focus sharp. If reviews designed to damage something are the problem, focus on that. Avoid the hand waving.

- The issue exists due to a US government policy of looking the other way with regard to the large social media and online services companies. Why not become a bit more proactive? Decades of non-regulation cannot be buried under 160 page plus documents with footnotes.

Net net: “Fake,” like other glittering generalities, cannot be defined. That’s why we have some interesting challenges in today’s world. Fuzzy is good enough.

PS. If you have money, the $50,000 fine won’t make any difference. Jail time will.

Stephen E Arnold, October 28, 2024