Mother Nature Does Not Like AI

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.


Nature, the online service and still maybe a printed magazine, published a sour lemonade story. Its title is “Major AI Conference Flooded with Peer Reviews Written Fully by AI.” My reaction was, “Duh! Did you expect originality from AI professionals chasing big bucks?” In my experience, AI innovation appears in the marketing collateral, the cute price trickery for Google Gemini, and the slide decks presented to VCs who don’t want to miss out on the next big thing.

The Nature article states this shocker:

Controversy has erupted after 21% of manuscript reviews for an international AI conference were found to be generated by artificial intelligence.

Once again: Duh!

How about this statement from the write up and its sources?

The conference organizers say they will now use automated tools to assess whether submissions and peer reviews breached policies on using AI in submissions and peer reviews. This is the first time that the conference has faced this issue at scale, says Bharath Hariharan, a computer scientist at Cornell University in Ithaca, New York, and senior program chair for ICLR 2026. “After we go through all this process … that will give us a better notion of trust.”

Yep, trust. That’s a quality I admire.

I want to point out that Nature, a publication interested in sticking to the facts, does a little soft shoe and some fancy dancing in the cited article. For example, there are causal claims about how conferences operate. I did not spot any data, but I am a dinobaby prone to overlook the nuances of modern scientific write ups. Also, the article seems to want a fix now. Yeah, well, that is unlikely. LLMs change, so smart software tuned to find AI-generated content is not exactly as reliable as a 2025 Toyota RAV4.

Also, I am not sure fixes implemented by human reviewers and abstract readers will do the job. When I had the joyful opportunity to review submissions for a big time technical journal, I did a pretty good job on the first one or two papers tossed at me. But, to be honest, by paper three I was not sure I had the foggiest idea what I was doing. I probably would have approved something written by a French bulldog taking mushrooms for inspiration.

If you are in the journal article writing game or giving talks at conferences, think about AI. Whether you use it or not, you may be accused of taking short cuts. That’s important because professional publishers and conference organizers never take short cuts. They take money.

Stephen E Arnold, December 1, 2025

An AI Outfit Promises Something Infinite: Er, Really? Infinite?

November 28, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.


Venture Beat publishes some write ups that strike me as marketing collateral. I am not sure what to make of an article that asserts an “infinite” service. Let’s not skip over the idea of infinity. I think a couple of mathy types drove themselves crazy thinking about infinities of infinities working two ways: Bigger numbers and then smaller numbers. Yep, those close to these mathy types checked them into a facility for those sufficiently unusual. What would they make of this article: “Anthropic’s Claude Opus 4.5 Is Here: Cheaper AI, Infinite Chats, and Coding Skills That Beat Humans” (sic)?

Georg Cantor makes an appearance at the Happy Valley Rest Home. He tells the nurse delivering his scopolamine that he wants to talk about the central infinite-cardinality problem before he is placed in isolation. Thanks, Venice.ai. Close enough, although the setting does not look too much like a 19th century facility. Oh, well.

This will be a short journey, I assure you. For the first step, let’s let Anthropic Claude 4.5 define “infinite,” shall we? Here’s the response:

Infinity represents unboundedness—a concept of endlessness without limit. In mathematics, it describes quantities exceeding any finite value or processes continuing indefinitely. Infinity isn’t a number but an abstract notion of limitlessness, fundamental to calculus, set theory, and cosmology.

Notice that Claude 4.5 did not mention marketing and PR speak. This is important for “Anthropic’s Claude Opus 4.5 Is Here: Cheaper AI, Infinite Chats, and Coding Skills That Beat Humans.” Note the two-word phrase “infinite chats.” Nope, I don’t think so. Not even Amazon’s money pipe can serve “infinite chats.” Come on, Claude. Get real. Amazon cannot even keep its system online if my memory serves me. But infinite? Hmmm.

The write up says that Venture Beat engaged in an exclusive interview with Alex Albert, “Anthropic’s head of developer relations.” (I think this means a marketing job. What do you think?) Here’s a statement that caught my attention:

The new model, Claude Opus 4.5, scored higher on Anthropic’s most challenging internal engineering assessment than any human job candidate in the company’s history, according to materials reviewed by VentureBeat. The result underscores both the rapidly advancing capabilities of AI systems and growing questions about how the technology will reshape white-collar professions. The Amazon-backed company is pricing Claude Opus 4.5 at $5 per million input tokens and $25 per million output tokens — a dramatic reduction from the $15 and $75 rates for its predecessor, Claude Opus 4.1, released earlier this year. The move makes frontier AI capabilities accessible to a broader swath of developers and enterprises while putting pressure on competitors to match both performance and pricing.

Does this strike you as what I call a “better, faster, cheaper” approach? I like to add that cheaper is the operative word. Yep, buy the market and raise prices later. Does this surprise me? No. From a company that promises to deliver something that costs money infinitely, cheaper does not surprise me one whit.
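To see what the quoted price cut means in dollars, here is a minimal Python sketch. The per-million-token rates come from the Venture Beat passage; the `job_cost` helper and the workload of 2 million input tokens and 500,000 output tokens are hypothetical, chosen only for illustration.

```python
# Per-million-token list prices quoted in the Venture Beat passage (USD).
PRICES = {
    "Claude Opus 4.1": {"input": 15.00, "output": 75.00},
    "Claude Opus 4.5": {"input": 5.00, "output": 25.00},
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one job at the quoted per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 2 million tokens in, 500,000 tokens out.
old = job_cost("Claude Opus 4.1", 2_000_000, 500_000)
new = job_cost("Claude Opus 4.5", 2_000_000, 500_000)
print(f"Opus 4.1: ${old:.2f}  Opus 4.5: ${new:.2f}  ratio: {new / old:.2f}")
```

Because both the input and the output rates fell by the same factor of three, any mix of input and output tokens costs one third of the old price. Cheaper, yes. Infinite, no.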

Here’s another statement I found interesting:

Albert said employee testers consistently reported that the model demonstrates improved judgment and intuition across diverse tasks — a shift he described as the model developing a sense of what matters in real-world contexts. “The model just kind of gets it,” Albert said. “It just has developed this sort of intuition and judgment on a lot of real world things that feels qualitatively like a big jump up from past models.”

To test this statement, I fired up Claude 4.5 again and asked, “Is it possible for a commercial enterprise supported by modest customer sales and venture capital to deliver an “infinite service”; for example, infinite chats for users?”

Here’s what the “just kind of gets it” Claude told me:

No, it’s not sustainably possible. Here’s why:

- Real Costs Scale with Usage: Every chat consumes computational resources (servers, energy, bandwidth). Even efficient models incur marginal costs per interaction that accumulate with heavy users.

- Finite Capital: Venture funding and modest sales provide limited runway. Without usage caps, a small subset of power users could drain resources disproportionately, making the business model unsustainable.

- Economic Reality: “Unlimited” offerings typically include hidden constraints (throttling during peak times, deprioritization, or eventual tier upgrades). True infinity would require infinite capital and infrastructure.

- Tragedy of the Commons: Without limits, rational users maximize personal use, collectively overwhelming the system—forcing either service degradation or imposed restrictions.

Practical Outcome: Most companies offer generous but capped services (e.g., “50 free messages/day”) or tiered pricing. Marketing terms like “unlimited” often mean “more than typical users need,” not literally infinite. Would you like examples of how AI companies balance accessibility with sustainability?
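The “generous but capped” model Claude describes can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the `DailyMessageCap` class, the 50-message limit (borrowed from Claude’s example), and the reset at each calendar day are hypothetical and do not describe any vendor’s actual metering.

```python
from collections import defaultdict
from datetime import date


class DailyMessageCap:
    """Allow each user at most `limit` messages per calendar day (hypothetical policy)."""

    def __init__(self, limit: int = 50):
        self.limit = limit
        # (user_id, day) -> messages accepted so far; old days are never pruned in this sketch.
        self.counts = defaultdict(int)

    def try_send(self, user_id: str, today: date) -> bool:
        """Accept the message and count it, or refuse once the user hits the cap."""
        key = (user_id, today)
        if self.counts[key] >= self.limit:
            return False  # cap reached: refuse, throttle, or nudge toward a paid tier
        self.counts[key] += 1
        return True


cap = DailyMessageCap(limit=50)
day = date(2025, 11, 28)
# A power user fires off 500 messages; only the first 50 get through.
accepted = sum(cap.try_send("power_user", day) for _ in range(500))
print(accepted)
# A new calendar day starts a fresh count.
print(cap.try_send("power_user", date(2025, 11, 29)))
```

The point of the cap is the one Claude makes: without it, a handful of heavy users set the cost of the whole service.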

I think that Mr. Albert might want to check his assertions with his own product, which seems to grasp that “infinite” is a tough promise. But let’s not stop with the infinite. What about this statement in the write up?

Opus 4.5 outscores all human candidates on company’s toughest engineering test.

For me, the sticking point is the word “all.” Categorical affirmatives are problematic. Black swans aside, “all” is a cousin of infinite. Its use implies a rather sweeping approach to those who take a tough engineering test. What’s the sample? One, 100, 10,000? Yeah. Oh, well.

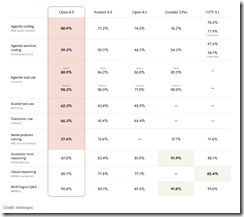

What’s the proof? Here’s the chart that did not knock me over with shock. I know it is difficult to read. Navigate to the cited article on a boat anchor computer with a big screen, and you can sort of read the numbers. Look for this:

Claude 4.5 is better than also-rans like Google and OpenAI. Well, why not? Anthropic has whipped infinite and tamed all. Dealing with weak wristed systems like Googzilla and ChatGPT is trivial.

Mr. Albert offered a statement which Venture Beat uses to complete this remarkable feat of content marketing, hyperbole, and logical impossibilities:

When asked about the engineering exam results and what they signal about AI’s trajectory, Albert was direct: “I think it’s a really important signal to pay attention to.”

Yep, pay attention. I did.

Stephen E Arnold, November 28, 2025

Gizmodo Suggests Sam AI-Man Destroys the Mind of Youth

November 28, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-3.gif) This essay is the work of a dumb dinobaby. No smart software required.


If I were an ad sales person at Gizmodo, I would not be happy. I am all for a wall between editorial and advertising. I bet you did not know that I learned that basic rule when I worked at Ziff in Manhattan. However, writing articles that accuse a potential advertiser of destroying the minds of youth is unlikely to be forgotten. I am not saying the write up is not accurate, but I know that it is possible to write articles and stories that do not make a potential advertiser go nutso.

Gizmodo published “OpenAI Introduces ‘ChatGPT for Teachers’ to Further Destroy the Minds of Our Youth” to explain a new LexisNexis-type of play to get people used to its online product. OpenAI thinks the LexisNexis- or close variant model is a good way to get paying customers. Students in law school become familiar with LexisNexis. When and if they get a job, those students will use LexisNexis. The approach made sense when Don Wilson and his fellow travelers introduced the program. OpenAI is jumping on a marketing wagon pulled by a horse that knows how to get from A to B.

Have those laptops, tablets, and mobile phones made retail workers adept at making change? Thanks, Venice.ai. Good enough.

The Gizmodo article says:

ChatGPT for Teachers is designed to help educators prepare materials for their classes, and it will support Family Educational Rights and Privacy Act (FERPA) requirements so that teachers and school staff can securely work with student data within the workspace. The company says the suite of tools for teachers will be available for free through June 2027, which is probably the point at which OpenAI will need to show that it can actually generate revenue and stick its hand out to demand payment from teachers who have become reliant on the suite of tools.

Okay, no big innovation here.

Gizmodo states:

There is already mounting evidence that relying on AI can erode critical thinking skills, which is something you’d like kids to be engaging in, at least during school hours. Other studies have shown that people “offload” the more difficult cognitive work and rely on AI as a shortcut when it’s available, ultimately harming their ability to do that work when they don’t have the tool to lean on. So what could go wrong giving those tools to both students and teachers? Seems like we’re going to find out.

Okay, but that headline looms over the Ivory soap conclusion to the article. In my opinion, I know exactly how this free AI will work. Students will continue to look for the easiest way to complete assigned tasks. If ChatGPT is available, students will find out if it works. Then students will use AI for everything possible so the students have more time for digging into linear algebra. (That’s a joke.) A few students will understand that other students will not do the assignments but will pay someone to do that work for them. That other person will be [a] a paramour, [b] a classmate who is a friend, [c] a classmate who responds to threats, or [d] ChatGPT-type services.

Test scores will continue to fall until a group of teachers create easier tests. Furthermore, like putting A/V systems for students to learn a foreign language in 1962, the technology works only if the student concentrates, follows the lesson attentively, writes notes, and goes through the listen and repeat mechanisms in the language lab. PCs, tablets, Chromebooks, mobile phones, or AI work the same way. When students do not have the discipline to pay attention and put in the effort required to learn, the technology cannot compensate. It can, however, replace certain jobs so companies and co-workers do not have to compensate for those who lack basic skills, the discipline required to do the work, and the social skills needed to fit into an organization.

The myth that technology can replace traditional educational techniques is more nutso than the sales professionals who have to overcome ideas like “destroy the minds of youth.”

Net net: Sam AI-Man has some challenges ahead with this free ChatGPT. Want evidence of the impact of technology on the minds of legal professionals? Just check out some of the YouTubing lawyers. There you go.

Stephen E Arnold, November 28, 2025

Can the Chrome Drone Deorbit Comet?

November 28, 2025

Perplexity developed Comet, an intuitive AI-powered Internet browser. Analytic Insight has a rundown on Comet in the article: “Perplexity CEO Aravind Srinivas Claims Comet AI Browser Could ‘Kill’ Android System.” Perplexity designed Comet for more complex tasks such as booking flights, shopping, and answering then executing simple prompts. The new browser is now being released for Android OS.

Until recently, Comet was an exclusive, invite-only browser for the desktop version. It is now available for download. Comet is taking the same approach for an Android release. Perplexity hopes to overtake Android as the top mobile OS, or so CEO Aravind Srinivas claims.

Another question is whether Comet could overtake Chrome as the favored AI browser:

“The launch of Comet AI browser coincides with the onset of a new conflict between AI browsers. Not long ago, OpenAI introduced ChatGPT Atlas, while Microsoft Edge and Google Chrome are upgrading their platforms with top-of-the-line AI tools. Additionally, Perplexity previously received attention for a $34.5 billion proposal to acquire Google Chrome, a bold move indicating its aspirations.

Comet, like many contemporary browsers, is built on the open-source Chromium framework provided by Google, which is also the backbone for Chrome, Edge, and other major browsers. With Comet’s mobile rollout and Srinivas’s bold claim, Perplexity is obviously betting entirely on an AI-first future, one that will see a convergence of the browser and the operating system.”

Comet is built on Chromium. Chrome is too. Comet is a decent web browser, but it doesn’t have the power of Alphabet behind it. Chrome will dominate the AI-browser race because it has money to launch a swarm of digital drones at this frail craft.

Whitney Grace, November 28, 2025

Coca-Cola and AI: Things May Not Be Going Better

November 27, 2025

Coca-Cola didn’t learn its lesson from last year’s less-than-good AI-generated Christmas commercial. It repeated the mistake in 2025. Although the technology has improved, the ad still bears all the fake-ness of early CGI (when examined in hindsight, of course). According to Creative Bloq’s “Devastating Graphic Shows Just How Bad The Coca-Cola Christmas Ad Really Is,” Coca-Cola did want to redeem itself, so the soft drink company controlled every detail in the ad.

Here’s how one expert viewed it:

“In a post on LinkedIn, the AI consultant Dino Burbidge points out the glaring lack of consistency and continuity in the design of the trucks in the new AI Holidays are Coming ad, which was produced by AI studio Secret Level. At least one of the AI-generated vehicles appears to completely defy physics, putting half of the truck’s payload beyond the last wheel.

Dino suggests that the problem with the ad is not AI per se, but the fact that no human appears to have checked what the AI models generated… or that more worryingly they checked but didn’t care, which is extraordinary when the truck is the main character in the ad.”

It’s been suggested that Coca-Cola used AI to engage in rage bait instead of building a genuinely decent Christmas ad. There was a behind-the-scenes video of how the ad was made, and even that used an AI voiceover.

I liked the different horse drawn wagons. Very consistent.

Whitney Grace, November 27, 2025

Microsoft: Desperate to Be a Leader in the Agentic OS Push Decides to Shove, Not Lure Supporters

November 26, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.


I had a friend in high school who liked a girl named Mary B. He smiled at her. He complimented her plaid skirt. He gave her a birthday gift during lunch in the school cafeteria. My reaction to this display was, “Yo, Tommy, you are trying too hard.” I said nothing. I watched as Mary B. focused her attention on a football player with a C average but comic book Superman looks. Tommy became known as a person who tried too hard to reach a goal without realizing no girl wanted to be the focal point of a birthday gift in the school cafeteria with hundreds of students watching. Fail, Tommy.

Thanks, Venice.ai. Good enough, the gold standard today I believe.

I thought about this try-too-hard approach when I read “Windows President Addresses Current State of Windows 11 after AI Backlash.” The source is the on-again, off-again podcasting outfit called Windows Central. Here’s a snippet from the write up which recycles content from X.com. The source of the statement is a person named Pavan Davuluri, who is the Microsoft Windows lead:

The team (and I) take in a ton of feedback. We balance what we see in our product feedback systems with what we hear directly. They don’t always match, but both are important. I’ve read through the comments and see focus on things like reliability, performance, ease of use and more… we care deeply about developers. We know we have work to do on the experience, both on the everyday usability, from inconsistent dialogs to power user experiences. When we meet as a team, we discuss these pain points and others in detail, because we want developers to choose Windows.

Windows Central pointed out that Lead Davuluri demonstrated “leadership” with a bold move. He disabled comments on his X.com post about caring deeply about Microsoft’s customers. I like it when Lead Davuluri takes decisive leadership actions that prevent people from providing inputs. Is that why Microsoft ignored focus groups responding to Wi-Fi hardware that did not work and “ribbon” icons instead of words in Office application interfaces? I think I have possibly identified a trend at Microsoft: The aircraft carrier is steaming forward, and it is too bad about the dolphins, fishing boats, and scuba divers. I mean who cares about these unseen flotsam and jetsam.

Remarkably, Windows Central’s write up includes another hint of negativism about Microsoft Windows:

What hasn’t helped in recent years is “Continuous Innovation,” Microsoft’s update delivery strategy that’s designed to keep the OS fresh with new features and changes on a consistent, monthly basis. On paper, it sounds like a good idea, but in practice, updating Windows monthly with new features often causes more headaches than joy for a lot of people. I think most users would prefer one big update at a predictable, certain time of the year, just like how Apple and Google do it.

Several observations if I may offer them as an aged dinobaby:

- Google has said it wants to become the agentic operating system. That means Google wants to kill off Microsoft, its applications, and its dreams.

- Microsoft knows that it faces competition from a person whom Satya Nadella knows, understands, and absolutely must defeat because his family would make fun of him if he failed. Yep, a man-to-man dust up with annoying users trying to stop the march of technological innovation and revenue. Lead Davuluri has his marching orders; hence, the pablum-tinged non-speak cited in the Windows Central write up.

- User needs and government regulation have zero — that’s right, none, nil, zip — chance of altering what these BAIT (big AI tech) outfits will do to win. Buckle up, Tommy. You are going to be rejected again.

Net net: That phrase agentic OS has a ring to it, doesn’t it?

Stephen E Arnold, November 26, 2025

Has Big Tech Taught the EU to Be Flexible?

November 26, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-2.gif) This essay is the work of a dumb dinobaby. No smart software required.


Here’s a question that arose in a lunch meeting today (November 19, 2025): Has Big Tech brought the European Union to heel? What’s your answer?

The “trust” outfit Thomson Reuters published “EU Eases AI, Privacy Rules As Critics Warn of Caving to Big Tech.”

European Union regulators demonstrate their willingness to be flexible. These exercises are performed in the privacy of a conference room in Brussels. The class is taught by those big tech leaders who have demonstrated their ability to chart a course and keep it. Thanks, Venice.ai. How about your interface? Yep, good enough I think.

The write up reported:

The EU Commission’s “Digital Omnibus”, which faces debate and votes from European countries, proposed to delay stricter rules on use of AI in “high-risk” areas until late 2027, ease rules around cookies and enable more use of data.

Ah, backpedaling seems to be the new Zen moment for the European Union.

The “trust” outfit explains why, sort of:

Europe is scrabbling to balance tough rules with not losing more ground in the global tech race, where companies in the United States and Asia are streaking ahead in artificial intelligence and chips.

Several factors are causing this rethink. I am not going to walk the well-worn path called “Privacy Lane.” The reason for the softening is not a warm summer day. The EU is concerned about:

- Losing traction in the slippery world of smart software

- Failing to cultivate AI start ups with more than a snowball’s chance of surviving in the Dante’s inferno of the competitive market

- Keeping AI whiz kids from bailing out of European mathematics, computer science, and physics research centers for some work in Sillycon Valley or delightful Z Valley (Zhongguancun, China, in case you did not know).

From my vantage point in rural Kentucky, it certainly appears that the European Union is fearful of missing out on either the boom or the bust associated with smart software.

Several observations are warranted:

- BAITers are likely to win. (BAIT means Big AI Tech in my lingo.) Why? Money and FOMO

- Other governments are likely to adapt to the needs of the BAITers. Why? Money and FOMO

- The BAIT outfits will be ruthless and interpret the EU’s new flexibility as weakness.

Net net: Worth watching. What do you think? Money? Fear? A combo?

Stephen E Arnold, November 26, 2025

What Can a Monopoly Type Outfit Do? Move Fast and Break Things Not Yet Broken

November 26, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-4.gif) This essay is the work of a dumb dinobaby. No smart software required.


CNBC published “Google Must Double AI Compute Every 6 Months to Meet Demand, AI Infrastructure Boss Tells Employees.”

How does the math work out? Big numbers result as well as big power demands, pressure on suppliers, and an incentive to enter hyper-hype mode for marketing I think.

Thanks, Venice.ai. Good enough.
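How does the math work out? Doubling every six months compounds fast. A minimal Python sketch, assuming a perfectly steady doubling period (the `capacity_multiple` helper is hypothetical, and real data center build-outs will not follow a smooth curve):

```python
def capacity_multiple(years: float, doubling_months: float = 6.0) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)


for years in (1, 2, 3, 5):
    # Two doublings per year means 4x per year, 16x in two years, and so on.
    print(years, capacity_multiple(years))
```

Under that assumption, one year means four times today’s compute and five years means 1,024 times. That is where the big power demands and the pressure on suppliers come from.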

The write up states:

Google’s AI infrastructure boss [maybe a fellow named Amin Vahdat, the leadership responsible for Machine Learning, Systems and Cloud AI?] told employees that the company has to double its compute capacity every six months in order to meet demand for artificial intelligence services.

Whose demand exactly? Commercial enterprises, Google’s other leadership, or people looking for a restaurant in an unfamiliar town?

The write up notes:

Hyperscaler peers Microsoft, Amazon and Meta also boosted their capex guidance, and the four companies now expect to collectively spend more than $380 billion this year.

Faced with this robust demand, what differentiates the Google from other monopoly-type companies? CNBC delivers a bang up answer to my question:

Google’s “job is of course to build this infrastructure but it’s not to outspend the competition, necessarily,” Vahdat said. “We’re going to spend a lot,” he said, adding that the real goal is to provide infrastructure that is far “more reliable, more performant and more scalable than what’s available anywhere else.” In addition to infrastructure buildouts, Vahdat said Google bolsters capacity with more efficient models and through its custom silicon. Last week, Google announced the public launch of its seventh generation Tensor Processing Unit called Ironwood, which the company says is nearly 30 times more power efficient than its first Cloud TPU from 2018. Vahdat said the company has a big advantage with DeepMind, which has research on what AI models can look like in future years.

I see: spend the same as a competitor, but because Google is Googley, the company will deliver more reliable, faster, and more scalable AI than the non-Googley competition. Google is focused on efficiency. To me, Google bets that its engineering and programming expertise will give it an unbeatable advantage. The VP of Machine Learning, Systems and Cloud AI does not mention the fact that Google has its magical advertising system and about 85 percent of the global Web search market via its assorted search-centric services. Plus one must not overlook the fact that the Google is vertically integrated: Chips, data centers, data, smart people, money, and smart software.

The write up points out that Google knows there are risks with its strategy. But FOMO is more important than worrying about costs and technology. But what about users? Sure, okay, eyeballs, but I think Google means humanoids who have time to use Google whilst riding in Waymos and hanging out waiting for a job offer to arrive on an Android phone. Google doesn’t need to worry. Plus it can just bump up its investments until competitors are left dying in the desert known as Death Vall-AI.

After getting beaten to the draw in the PR battle with Microsoft, the Google thinks it can win the AI jackpot. But what if it fails? No matter. The AI folks at the Google know that the automated advertising system that collects money at numerous touch points is for now churning away 24×7. Googzilla may just win because it is sitting on the cash machine of cash machines. Even counterfeiters in Peru and Vietnam cannot match Google’s money spinning capability.

Is it game over? Will regulators spring into action? Will Google win the race to software smarter than humans? Sure. Even if part of the push to own the next big thing is puffery, the Google is definitely confident that it will prevail, just as Superman and truth, justice, and the American way have. The only hitch in the git along may be capturing enough electrical service to keep the lights on and the power flowing. Lots of power.

Stephen E Arnold, November 26, 2025

LLMs and Creativity: Definitely Not Einstein

November 25, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.


I have a vague recollection of a very large lecture room with stadium seating. I think I was at the University of Illinois when I was a high school junior. Part of the odd ball program in which I found myself involved a crash course in psychology. I came away from that class with an idea that has lingered in my mind for lo these many decades; to wit: People who are into psychology are often wacky. Consequently I don’t read too much from this esteemed field of study. (I do have some snappy anecdotes about my consulting projects for a psychology magazine, but let’s move on.)

A semi-creative human explains to his robot that he makes up answers and is not creative in a helpful way. Thanks, Venice.ai. Good enough, and I see you are retiring models, including your default. Interesting.

I read in PsyPost this article: “A Mathematical Ceiling Limits Generative AI to Amateur-Level Creativity.” The main idea is that the current approach to smart software does not just output answers that are dead wrong; the algorithms themselves run into a creative wall.

Here’s the alleged reason:

The investigation revealed a fundamental trade-off embedded in the architecture of large language models. For an AI response to be effective, the model must select words that have a high probability of fitting the context. For instance, if the prompt is “The cat sat on the…”, the word “mat” is a highly effective completion because it makes sense and is grammatically correct. However, because “mat” is the most statistically probable ending, it is also the least novel. It is entirely expected. Conversely, if the model were to select a word with a very low probability to increase novelty, the effectiveness would drop. Completing the sentence with “red wrench” or “growling cloud” would be highly unexpected and therefore novel, but it would likely be nonsensical and ineffective. Cropley determined that within the closed system of a large language model, novelty and effectiveness function as inversely related variables. As the system strives to be more effective by choosing probable words, it automatically becomes less novel.

Let me take a whack at translating this quote from PsyPost: LLMs like Google-type systems have to choose. [a] Be effective and pick words that fit the context well, like “jelly” after “I ate peanut butter and.” Or [b] select infrequent and unexpected words for novelty, which may lead to LLM wackiness. Therefore, effectiveness and novelty work against each other: more of one means less of the other.
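The trade-off can be made concrete with a toy calculation. This is a minimal sketch under stated assumptions: effectiveness is modeled as a word’s probability p, novelty as 1 - p, and creativity as their product. That simplification is mine for illustration and may not match the exact formulation in Cropley’s study; the word probabilities are invented.

```python
def scores(p: float) -> tuple[float, float, float]:
    """Toy scores for a candidate next word chosen with probability p.

    Assumption for illustration: effectiveness = p, novelty = 1 - p,
    creativity = effectiveness * novelty.
    """
    effectiveness = p
    novelty = 1.0 - p
    return effectiveness, novelty, effectiveness * novelty


# Invented probabilities for completions of "The cat sat on the ..."
candidates = {"mat": 0.90, "sofa": 0.50, "growling cloud": 0.001}
for word, p in candidates.items():
    e, n, c = scores(p)
    print(f"{word:15s} effectiveness={e:.3f} novelty={n:.3f} creativity={c:.3f}")

# Scan p from 0 to 1 in steps of 0.001 to find where the product peaks.
best_p = max((i / 1000 for i in range(1001)), key=lambda p: scores(p)[2])
print(best_p, scores(best_p)[2])
```

Under these assumptions, the product p(1 - p) can never exceed 0.25, reached at p = 0.5. Push p up for effectiveness and novelty drops; push it down for novelty and effectiveness drops. That is the ceiling in miniature.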

The article references some fancy math and points out:

This comparison suggests that while generative AI can convincingly replicate the work of an average person, it is unable to reach the levels of expert writers, artists, or innovators. The study cites empirical evidence from other researchers showing that AI-generated stories and solutions consistently rank in the 40th to 50th percentile compared to human outputs. These real-world tests support the theoretical conclusion that AI cannot currently bridge the gap to elite [creative] performance.

Before you put your life savings into a giant can’t-lose AI data center investment, you might want to ponder this passage in the PsyPost article:

“For AI to reach expert-level creativity, it would require new architecture capable of generating ideas not tied to past statistical patterns … Until such a paradigm shift occurs in computer science, the evidence indicates that human beings remain the sole source of high-level creativity.”

Several observations:

- Today’s best-bet approach is the Google-type LLM. It has creative limits as well as two other problems: selling advertising like old-fashioned Google search and outputting incorrect answers.

- The method itself erects a creative barrier. This is good for humans who can be creative when they are not doom scrolling.

- A paradigm shift could make those giant data centers extremely large white elephants which lenders are not very good at herding along.

Net net: I liked the angle of the article. I am not convinced I should drop my teen impression of psychology. I am a dinobaby, and I like land line phones with rotary dials.

Stephen E Arnold, November 26, 2025

Why the BAIT Outfits Are Drag Netting for Users

November 25, 2025

This essay is the work of a dumb dinobaby. No smart software required.


Have you wondered why the BAIT (big AI tech) companies are pumping cash into what looks to many like a cash bonfire? Here’s one answer, and I think it is a reasonably good one. Navigate to “Best Case: We’re in a Bubble. Worst Case: The People Profiting Most Know Exactly What They’re Doing.” I want to highlight several passages and then offer my usually-ignored observations.

Thanks, Venice.ai. Good enough, but I am not sure how many AI execs wear old-fashioned camping gear.

I noted this statement:

The best case scenario is that AI is just not as valuable as those who invest in it, make it, and sell it believe.

My reaction to this bubble argument is that the BAIT outfits realized, after Microsoft said “AI in Windows,” that a monopoly-type outfit was making a move. Was AI the next oil or railroad play? Then, when Google did its really professional and carefully-planned Code Red or Yellow or whatever, the hair-on-fire moment arrived. Now, almost three years later, the hot air from the flaming coifs is equaled by the fumes of incinerating bank notes.

The write up offers this comment:

My experience with AI in the design context tends to reflect what I think is generally true about AI in the workplace: the smaller the use case, the larger the gain. The larger the use case, the larger the expense. Most of the larger use cases that I have observed — where AI is leveraged to automate entire workflows, or capture end to end operational data, or replace an entire function — the outlay of work is equal to or greater than the savings. The time we think we’ll save by using AI tends to be spent on doing something else with AI.

The experiences of my team and me support this statement. However, when I go back to the early days of online in the 1970s, the benefits of moving from print research to digital (online) research were fungible. They were quantifiable. Online is where AI lives. As a result, the technology is not global. It is a subset of functions. The more specific the problem, the more likely it is that smart software can help with a segment of the work. The idea that cobbled-together methods based on built-in guesses will be wonderful is just plain crazy. Once one thinks of AI as a utility, then it is easier to identify a use case where careful application of the technology will deliver a benefit. I think of AI as a slightly more sophisticated spell checker for writing at the eighth-grade level.

The essay points out:

The last ten years have practically been defined by filter bubbles, alternative facts, and weaponized social media — without AI. AI can do all of that better, faster, and with more precision. With a culture-wide degradation of trust in our major global networks, it leaves us vulnerable to lies of all kinds from all kinds of sources and no standard by which to vet the things we see, hear, or read.

Yep, this is a useful way to explain how flows of online information tear down social structures. What’s not mentioned, however, is that rebuilding will take a long time. Think about smashing your mom’s favorite knickknack. Were you capable of making it as good as new? Sure, a few specialists might be able to do a good job, but the time and cost mean that once something is destroyed, that something is gone. The rebuild is at best a close approximation. That’s why people who want to go back to social structures in the 1950s are chasing a fairy tale.

The essay notes:

When a private company can construct what is essentially a new energy city with no people and no elected representation, and do this dozens of times a year across a nation to the point that half a century of national energy policy suddenly gets turned on its head and nuclear reactors are back in style, you have a sudden imbalance of power that looks like a cancer spreading within a national body.

My view is that the BAIT outfits want to control, dominate, and cash in. Hey, if you have cancer and one company has the alleged cure, are you going to take the drug or just die?

Several observations are warranted:

- BAIT outfits want to be the winner and be the only alpha dog. Ruthless behavior will be the norm for these firms.

- AI is the next big thing. The idea is that if one wishes it, thinks it, or invests in it, AI will be. My hunch is that the present methodologies are on the path to becoming the equivalent of a dial-up modem.

- The social consequences of the AI utility added to social media are either ignored or not understood. AI is the catalyst needed to turn one substance into an explosion.

Net net: Good essay. I think the downsides referenced in the essay understate the scope of the challenge.

Stephen E Arnold, November 25, 2025