Mistakes Are Biological. Do Not Worry. Be Happy

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a short summary, written by a person named Paul Arnold, of a longer paper. I hope this is not misinformation. I am not related to Paul. But this could be a mistake. This dinobaby makes many mistakes.

The article that caught my attention is titled “Misinformation Is an Inevitable Biological Reality Across Nature, Researchers Argue.” The short item was edited by a human named Gaby Clark. The short essay was reviewed by Robert Egan. I think the idea is to make clear that nothing in the article is made up and it is not misinformation.

Okay, but…. Let’s look at a couple of short statements from the write up about misinformation. (I don’t want to go “meta,” but the possibility exists that the short item is stuffed full of misinformation. What do you think?)

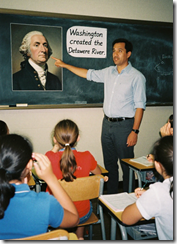

Here’s an image capturing a youngish teacher outputting misinformation to his students. Okay, Qwen. Good enough.

Here’s snippet one:

… there is nothing new about so-called “fake news…”

Okay, does this mean that software that predicts the next word and gets it wrong is part of this old, long-standing trajectory for biological creatures? For me, the idea that cobbled-together algorithms get a pass because “there is nothing new about so-called ‘fake news’” shifts the discussion about smart software. Instead of worrying that the smart software gets only about two thirds of questions right, we are told it is good enough.

A second snippet says:

Working with these [the models the researchers developed] led the team to conclude that misinformation is a fundamental feature of all biological communication, not a bug, failure, or other pathology.

Introducing the notion of “pathology” adds a bit of context to misinformation. Is a human-assembled smart software system, trained on content that includes misinformation and processed by algorithms that may be biased in some way, just the way the world works? I am not sure I am ready to flash the green light for some of the AI outfits to output demonstrably wrong, distorted, weaponized, or non-verifiable information.

What puzzled me is that the article points to itself and to an article by Ling-Wei Kong et al., “A Brief Natural History of Misinformation,” in the Journal of the Royal Society Interface.

Here’s the link to the original article. The authors of the publication are, if the information on the Web instance of the article is accurate, Ling-Wei Kong, Lucas Gallart, Abigail G. Grassick, Jay W. Love, Amlan Nayak, and Andrew M. Hein. Seven people worked on the “original” article. The three people identified in the short version worked on that item. This adds up to 10 people. Apparently the group believes that misinformation is a part of the biological being. Therefore, there is no cause to worry. In fact, there are mechanisms to deal with misinformation. Obviously a duck quack that sends a couple of hundred mallards aloft can protect the flock. A minimum of one duck needs to check out the threat only to find nothing is visible. That duck heads back to the pond. Maybe others follow? Maybe the duck ends up alone in the pond. The ducks take the viewpoint, “Better safe than sorry.”

But when a system or a mobile device outputs incorrect or weaponized information to a user, there may not be a flock around. If there is a group of people, none of them may be able to identify the incorrect or weaponized information. Thus, the biological propensity to be wrong bumps into an output which may be shaped to cause a particular effect or to alter a human’s way of thinking.

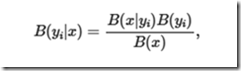

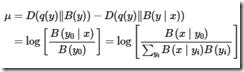

Most people will not sit down and take a close look at this evidence of scientific rigor:

and then follow the logic that leads to:

I am pretty old, but it looks as if Mildred Martens, my old math teacher, would suggest that the KL divergence wants me to assume some things about q(y). On the right side, I think I see some good old Bayesian stuff, but I didn’t see the step that takes me from the KL divergence to the log posterior-to-prior ratio. Would Miss Martens ask a student like me to clarify the transitions, fix up the notation, and sort out the mix-up between expectation and pointwise values? Remember, please, that I am a dinobaby and I could be outputting misinformation about misinformation.
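To see why expectation versus pointwise values matters, here is a toy discrete example. It is a minimal sketch, not the paper’s derivation: the two states (threat, no threat), the prior, and the posterior are invented numbers, and the notation does not follow the q(y) in Kong et al. It shows that the KL divergence of a posterior from its prior equals the posterior-weighted average of the pointwise log posterior-to-prior ratios, while any single pointwise value can differ from that average and can even be negative.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in nats for discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical numbers, not from the paper. States: [threat, no threat].
prior = [0.1, 0.9]      # belief before hearing an alarm call
posterior = [0.6, 0.4]  # belief after hearing an alarm call

# Pointwise log posterior-to-prior ratio: one value per state.
pointwise = [math.log(post / pr) for post, pr in zip(posterior, prior)]

# Expectation of the pointwise ratio, weighted by the posterior.
expected = sum(post * r for post, r in zip(posterior, pointwise))

kl = kl_divergence(posterior, prior)
print([round(r, 3) for r in pointwise])  # [1.792, -0.811]
print(round(expected, 3), round(kl, 3))  # 0.751 0.751
```

One pointwise value is negative even though the KL divergence never is. Treating a pointwise ratio as if it were the expectation is the kind of slip a careful teacher would circle in red.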

Several observations:

- If one accepts this line of reasoning, misinformation is emergent. It is somehow part of the warp and woof of living and communicating. My take is that one should expect misinformation.

- Anything created by a biological entity will output misinformation. My take on this is that one should expect misinformation everywhere.

- I worry that researchers tackling information, smart software, and related disciplines may work very hard to prove that misinformation is inevitable but that biological organisms can carry on.

I am not sure I feel comfortable with the normalization of misinformation. As a dinobaby, I believe the function of education is to anchor those completing a course of study in a collection of generally agreed-upon facts. With misinformation everywhere, why bother?

Net net: One can read this research and the summary article as an explanation of why smart software is just fine. Accept the hallucinations and misstatements. Errors are normal. The ducks are fine. The AI users will be fine. The models will get better. Beneath the framing that misinformation is everywhere, the results say, “Knock off the criticism of smart software. You will be fine.”

I am not so sure.

Stephen E Arnold, December 18, 2025

AI Will Doom You to Poverty Unless You Do AI to Make Money

January 23, 2025

Prepared by a still-alive dinobaby.

I enjoy reading snippets of the AI doomsayers. Some spent too much time worrying about the power of Joe Stalin’s approach to governing. Others just watched the Terminator series instead of playing touch football. A few “invented” AI by cobbling together incremental improvements in statistical procedures lashed to ever-more-capable computing infrastructures. A couple of these folks know that Nostradamus became a brand and want to emulate that predictive master.

I read “Godfather of AI Explains How Scary AI Will Increase the Wealth Gap and Make Society Worse.” That is a snappy title. Whoever wrote it hitched an explainer to fear. Plus, the click bait explains that homelessness is for you too. Finally, it presents a trope popular among the elder care set. (Remember, please, that I am a dinobaby myself.) Prod a group of senior citizens to a dinner and you will hear, “Everything is broken.” Also, “I am glad I am old.” Then there is the ever popular, “Those tattoos! The checkout clerks cannot make change! I don’t understand commercials!” I like to ask, “How many wars are going on now? Quick.”

Two robots plan a day trip to see the street people in Key West. Thanks, You.com. I asked for a cartoon; I get a photorealistic image. I asked for a coffee shop; I get a weird carnival setting. Good enough. (That’s why I am not too worried.)

Is society worse than it ever was? Probably not. I have had an opportunity to visit a number of countries, go to college, work with intelligent (for the most part) people, and read books whilst sitting on the executive mailing tube. Human behavior has been consistent for a long time. Indigenous people did not go to Wegman’s or Whole Paycheck. Some herded animals toward a cliff. Others harvested the food and raw materials from the dead bison at the bottom of the cliff. There were no unskilled change makers at this food delivery location.

The write up says:

One of the major voices expressing these concerns is the ‘Godfather of AI’ himself Geoffrey Hinton, who is viewed as a leading figure in the deep learning community and has played a major role in the development of artificial neural networks. Hinton previously worked for Google on their deep learning AI research team ‘Google Brain’ before resigning in 2023 over what he expresses as the ‘risks’ of artificial intelligence technology.

My hunch is that, as with my own jobs, the “worked at” Google was for a good reason: money. Having departed from the land of volleyball and weird empty office buildings, Geoffrey Hinton is in the doom business. His vision is that there will be more poverty. There’s some poverty in Soweto and the other townships in South Africa. The slums of Rio are no Palm Springs. Rural China is interesting as well. Doesn’t everyone want to run a business from the area in front of a wooden structure adjacent to an empty highway to nowhere? Sounds like there is some poverty around, doesn’t it?

The write up reports:

“We’re talking about having a huge increase in productivity. So there’s going to be more goods and services for everybody, so everybody ought to be better off, but actually it’s going to be the other way around. “It’s because we live in a capitalist society, and so what’s going to happen is this huge increase in productivity is going to make much more money for the big companies and the rich, and it’s going to increase the gap between the rich and the people who lose their jobs.”

The fix is to get rid of capitalism. The alternative? Kumbaya or a better version of those fun dudes Marx, Lenin, and Mao. I stayed in the “last” fancy hotel the USSR built in Tallinn, Estonia. News flash: The hotels near LaGuardia are quite a bit more luxurious.

The godfather then evokes the robot that wanted to kill a rebel. You remember this character. He said, “I’ll be back.” Of course, you will. Hollywood does not do originals.

The write up says:

Hinton’s worries don’t just stop at the wealth imbalance caused by AI too, as he details his worries about where AI will stop following investment from big companies in an interview with CBC News: “There’s all the normal things that everybody knows about, but there’s another threat that’s rather different from those, which is if we produce things that are more intelligent than us, how do we know we can keep control?” This is a conundrum that has circulated the development of robots and AI for years and years, but it’s seeming to be an increasingly relevant proposition that we might have to tackle sooner rather than later.

Yep, doom. The fix is to become an AI wizard, work at a Google-type outfit, cash out, and predict doom. It is a solid career plan. Trust me.

Stephen E Arnold, January 23, 2025

Newton and Shoulders of Giants? Baloney. Is It Everyday Theft?

January 31, 2023

Here I am in rural Kentucky. I have been thinking about the failure of education. I recall learning from Ms. Blackburn, my high school algebra teacher, this statement by Sir Isaac Newton, the apple and calculus guy:

If I have seen further, it is by standing on the shoulders of giants.

Did Sir Isaac actually say this? I don’t know, and I don’t care too much. It is the gist of the sentence that matters. Why? I just finished reading — and this is the actual article title — “CNET’s AI Journalist Appears to Have Committed Extensive Plagiarism. CNET’s AI-Written Articles Aren’t Just Riddled with Errors. They Also Appear to Be Substantially Plagiarized.”

How is any self-respecting, super buzzy smart software supposed to know anything without ingesting, indexing, vectorizing, and any other math magic the developers have baked into the system? Did Brunelleschi wake up one day and do the Eureka! thing? Maybe he stood on line and entered the Pantheon and looked up? Maybe he found a wasp’s nest and cut it in half and looked at what the feisty insects did to build a home? Obviously intellectual theft. Just because the dome still stands, when it falls, he is an untrustworthy architect engineer. Argument nailed.

The write up focuses on other ideas; namely, being incorrect and stealing content. Okay, those are interesting and possibly valid points. The write up states:

All told, a pattern quickly emerges. Essentially, CNET‘s AI seems to approach a topic by examining similar articles that have already been published and ripping sentences out of them. As it goes, it makes adjustments — sometimes minor, sometimes major — to the original sentence’s syntax, word choice, and structure. Sometimes it mashes two sentences together, or breaks one apart, or assembles chunks into new Frankensentences. Then it seems to repeat the process until it’s cooked up an entire article.

For a short (very, very brief) time I taught freshman English at a big time university. What the Futurism article describes is how I interpreted the work process of my students. Those entitled and enquiring minds just wanted to crank out an essay that would meet my requirements and hopefully get an A or a 10, which was a signal that Bryce or Helen was a very good student. Then go to a local hang out and talk about Heidegger? Nope, mostly about the opposite sex, music, and getting their hands on a copy of Dr. Oehling’s test from last semester for European History 104. Substitute the topics you talked about to make my statement more “accurate”, please.

I loved the final paragraphs of the Futurism article. Not only is a competitor tossed over the argument’s wall, but the Google and its outstanding relevance finds itself a target. Imagine. Google. Criticized. The article’s final statements are interesting; to wit:

As The Verge reported in a fascinating deep dive last week, the company’s primary strategy is to post massive quantities of content, carefully engineered to rank highly in Google, and loaded with lucrative affiliate links. For Red Ventures, The Verge found, those priorities have transformed the once-venerable CNET into an “AI-powered SEO money machine.” That might work well for Red Ventures’ bottom line, but the specter of that model oozing outward into the rest of the publishing industry should probably alarm anybody concerned with quality journalism or — especially if you’re a CNET reader these days — trustworthy information.

Do you like the word trustworthy? I do. Does Sir Isaac fit into this future-leaning analysis? Nope, he’s still pre-occupied with proving that the evil Gottfried Wilhelm Leibniz was tipped off about tiny rectangles and the methods thereof. Perhaps Futurism can blame smart software?

Stephen E Arnold, January 31, 2023

Smart Software: Can Humans Keep Pace with Emergent Behavior?

November 29, 2022

For the last six months, I have been poking around the idea that certain behaviors are emergent; that is, give humans a capability or a dataspace, and those humans will develop novel features and functions. The examples we have been exploring are related to methods used by bad actors to avoid take downs by law enforcement. The emergent behaviors we have noted exploit domain name registry mechanisms and clever software able to obfuscate traffic from Tor exit nodes. The result of the online dataspace is unanticipated emergent behaviors. The idea is that bad actors come up with something novel using the Internet’s furniture.

We noted “137 Emergent Abilities of Large Language Models.” If our understanding of this report is mostly accurate, large language models like those used by Google and other firms manifest emergent behavior. What’s interesting is that the write up explains that there is not one type of emergent behavior. The article offers a Rivian truck bed full of emergent behaviors.

Here are the behaviors associated with big data sets and LaMDA 137B. (LaMDA is a family of Transformer-based neural language models specialized for dialog. Correctly or incorrectly, we associate LaMDA with Google’s smart software work. See this Google blog post.) Now here are the items mentioned in the Emergent Abilities paper:

Gender inclusive sentences German

Irony identification

Logical arguments

Repeat copy logic

Sports understanding

Swahili English proverbs

Word sorting

Word unscrambling

Another category of emergent behavior is what the paper calls “Emergent prompting strategies.” The idea is that more general prompting strategies manifest themselves. The system can perform certain functions that cannot be implemented when using “small” data sets; for example, solving multi-step math problems in less widely used languages.

The paper includes links so the different types of emergent behavior can be explored. The paper wraps up with questions researchers may want to consider. One question we found suggestive was:

What tasks are language models currently not able to perform, that we should evaluate on future language models of better quality?

The notion of emergent behavior is important for two reasons: [a] Systems can manifest capabilities or possible behaviors not anticipated by developers and [b] Novel capabilities may create additional unforeseen capabilities or actions.

If one thinks about emergent behaviors in any smart, big data system, humans may struggle to understand, keep up, and manage downstream consequences in one or more dataspaces.

Stephen E Arnold, November 29, 2022

Objectivity in ALGOs: ALL GONE?

April 21, 2022

Objective algorithms? Nope. Four examples.

- Navigate to “How Anitta Megafans Gamed Spotify to Help Create Brazil’s First Global Chart-Topper.” The write up explains how the Spotify algos were manipulated.

- Check out the Washington Post story (paywall, gentle reader) “Internet Algospeak Is Changing Our Language in Real Time from Nips Nops to le Dollar Bean.” Change the words; fool the objective and too-smart algorithm.

- Now navigate to your favorite day trading discussion group Wall Street Bets. You can find this loose confederation at this link. You may spot some interesting humor. A few “tips” signal attempts to take advantage of a number of investing characteristics.

- Finally, pick your favorite search engine and enter the phrase search engine optimization. Scan the results.

Each of these examples signals the industrious folks who want to find, believe they have discovered, or have found ways to fiddle with objective algorithms.
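The algospeak trick in the second example works against filters that match exact strings. Here is a minimal sketch, assuming a naive blocklist moderator; the blocklist and the function are hypothetical, and the real moderation systems platforms use are not described in the items above. “Unalive” is a commonly cited algospeak substitution for “dead.”

```python
import re

BLOCKLIST = {"kill", "dead"}  # hypothetical moderation terms

def flagged(post):
    """Flag a post if any lowercase word exactly matches a blocklisted term."""
    words = re.findall(r"[a-z]+", post.lower())
    return any(w in BLOCKLIST for w in words)

print(flagged("The character is dead"))     # True
print(flagged("The character is unalive"))  # False
```

Every list-based fix invites a new substitution, which keeps the fiddling going.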

Envision a world in which algorithms do more and more light and heavy lifting. Who are the winners? My thought is that it will be the clever people and their software agents. Am I looking forward to being an algo loser?

Sure, sounds like heaven. Oh, what about individuals who cannot manipulate algorithms or hire the people who can? A T-shirt that says “Objectivity is ALGOne.” There’s a hoodie available. It says, “Manipulate me.”

Stephen E Arnold, April 18, 2022

DarkCyber for January 18, 2022 Now Available: An Interview with Dr. Donna M. Ingram

January 18, 2022

The fourth series of DarkCyber videos kicks off with an interview. You can view the program on YouTube at this link. Dr. Donna M. Ingram is the author of a new book titled “Help Me Learn Statistics.” The book is available on the Apple ebook store and features interactive solutions to the problems used to reinforce important concepts explained in the text. In the interview, Dr. Ingram talks about sampling, synthetic data, and a method to reduce the errors which can creep into certain analyses. Dr. Ingram’s clients include financial institutions, manufacturing companies, legal subrogation customers, and specialized software companies.

Kenny Toth, January 18, 2022

Credder: A New Fake News Finder

November 5, 2021

Fake news is a pandemic that spreads as fast as COVID-19 and wreaks as much havoc. While scientists developed vaccines for the virus, it is more difficult to cure misinformation. MakeUseOf says there is a new tool to detect fake news: “How To Spot Fake News With This Handy Tool.”

Credder is an online platform designed by Chris Palmieri. He is a professional restaurateur and decided to build Credder after seeing potential in review sites like Yelp. Unlike Yelp and other review sites, Credder does not rate physical locations or items. The platform does not host any news articles. It crawls publications for the latest news and allows users and verified journalists to rate articles.

Credder is designed to fight clickbait and ensure information accuracy. Ratings are posted below each news brief. Verified journalists comment about their ratings and users can submit new pieces to rate.

Credder spots fake news in the following ways:

“Search for relevant articles on Credder. Besides each article, you will see the Public Rating and the User Critic Rating.

• The higher the rating, the more reliable the source.

• You can click on the article, and you’ll be taken to the parent website.

• There’s also a handy search tool that you can use to find articles or authors via keywords.

• Users can also rate individual authors and outlets. In turn, each user is assigned a rating from Credder as well. This is designed to ensure the quality of ratings across the platform.”

Credder relies on crowdsourcing and honesty to rate articles. There is not a system in place to verify journalist credentials, and bias happens when users give their favorite authors and sources high scores. Credder, however, is transparent, similar to the Web of Trust.
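The description above implies that an article’s score depends on who rates it, since each user also carries a rating. The write up does not give Credder’s formula, so the sketch below uses one common, assumed approach: a reputation-weighted average, where a hypothetical per-user reputation between 0 and 1 weights each rating. The user names and numbers are invented.

```python
from dataclasses import dataclass

@dataclass
class Rating:
    user: str
    stars: float       # rating given to the article, 1 to 5
    reputation: float  # hypothetical per-user trust score, 0 to 1

def weighted_score(ratings):
    """Reputation-weighted mean; returns None if no rater carries any weight."""
    total_weight = sum(r.reputation for r in ratings)
    if total_weight == 0:
        return None
    return sum(r.stars * r.reputation for r in ratings) / total_weight

ratings = [
    Rating("verified_journalist", 2.0, 0.9),
    Rating("fan_account_1", 5.0, 0.1),
    Rating("fan_account_2", 5.0, 0.1),
]

plain = sum(r.stars for r in ratings) / len(ratings)
print(round(plain, 2), round(weighted_score(ratings), 2))  # 4.0 2.55
```

The weighting helps only if reputations are earned honestly. If fans inflate one another’s reputations, the favorite-author bias comes right back.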

Fake news is a rash that will not go away, but it can be stopped. A little common sense and information literacy goes a long way in combatting fake news. Credder should start making PSAs for YouTube, Hulu, and cable TV.

Whitney Grace, November 5, 2021

Key Words: Useful Things

October 7, 2021

In the middle of nowhere in the American southwest, lunchtime conversation turned to surveillance. I mentioned a couple of characteristics of modern smartphones, and people put down their sandwiches. I changed the subject. Later, when a wispy LTE signal permitted, I read “Google Is Giving Data to Police Based on Search Keywords, Court Docs Show.” This is an example of information which I don’t think should be made public.

The write up states:

Court documents showed that Google provided the IP addresses of people who searched for the arson victim’s address, which investigators tied to a phone number belonging to Williams. Police then used the phone number records to pinpoint the location of Williams’ device near the arson, according to court documents.

I want to point out that any string could contain actionable information; to wit:

- The name or abbreviation of a chemical substance

- An address of an entity

- A slang term for a controlled substance

- A specific geographic area or a latitude and longitude designation on a Google map.

With data federation and cross correlation, some specialized software systems can knit together disparate items of information in a useful manner.
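A minimal sketch of the cross-correlation idea, following the chain in the court documents quoted above: search query, then IP address, then phone number, then device location. Every record, field name, and value is invented (the IPs come from documentation-only ranges), and nothing here reflects how Google or the police actually process data.

```python
# Three hypothetical, separately held datasets.
search_log = [
    {"ip": "203.0.113.7", "query": "123 elm street"},
    {"ip": "198.51.100.2", "query": "pizza near me"},
]
ip_to_phone = {"203.0.113.7": "555-0142"}  # e.g. subscriber records
phone_pings = [
    {"phone": "555-0142", "lat": 38.25, "lon": -85.76, "hour": 2},
    {"phone": "555-0199", "lat": 38.30, "lon": -85.70, "hour": 2},
]

def correlate(target_query):
    """Join the sources on shared keys: query -> IP -> phone -> location pings."""
    ips = {row["ip"] for row in search_log if target_query in row["query"]}
    phones = {ip_to_phone[ip] for ip in ips if ip in ip_to_phone}
    return [ping for ping in phone_pings if ping["phone"] in phones]

print(correlate("123 elm street"))
```

No single dataset says much on its own; the join on shared keys is what turns a search string into a location.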

The data and the analytic tools are essential for some government activities. Careless release of such sensitive information has unanticipated downstream consequences. Old fashioned secrecy has some upsides in my opinion.

Stephen E Arnold, October 7, 2021

Gender Biased AI in Job Searches Now a Thing

June 30, 2021

From initial search to applications to interviews, job hunters are now steered through the process by algorithms. Employers’ demand for AI solutions has surged with the pandemic, but there is a problem—the approach tends to disadvantage women applicants. An article at MIT Technology Review describes one website’s true bro response: “LinkedIn’s Job-Matching AI Was Biased. The Company’s Solution? More AI.” Reporters Sheridan Wall and Hilke Schellmann also cover the responses of competing job search sites Monster, CareerBuilder, and ZipRecruiter. Citing former LinkedIn VP John Jersin, they write:

“These systems base their recommendations on three categories of data: information the user provides directly to the platform; data assigned to the user based on others with similar skill sets, experiences, and interests; and behavioral data, like how often a user responds to messages or interacts with job postings. In LinkedIn’s case, these algorithms exclude a person’s name, age, gender, and race, because including these characteristics can contribute to bias in automated processes. But Jersin’s team found that even so, the service’s algorithms could still detect behavioral patterns exhibited by groups with particular gender identities. For example, while men are more likely to apply for jobs that require work experience beyond their qualifications, women tend to only go for jobs in which their qualifications match the position’s requirements. The algorithm interprets this variation in behavior and adjusts its recommendations in a way that inadvertently disadvantages women. … Men also include more skills on their résumés at a lower degree of proficiency than women, and they often engage more aggressively with recruiters on the platform.”
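The mechanism in the quote, where a model that never sees gender still treats groups differently, can be shown with a toy example. Everything below is hypothetical: two invented applicant groups, an invented “stretch application” rate, and a made-up scoring rule that rewards applying above one’s qualifications. The group field exists only so the sketch can measure the outcome; the scoring function never reads it. This illustrates proxy bias in general, not LinkedIn’s algorithm.

```python
from statistics import mean

# Hypothetical applicants; "group" is never read by score().
applicants = [
    {"group": "A", "stretch_rate": 0.6},
    {"group": "A", "stretch_rate": 0.5},
    {"group": "B", "stretch_rate": 0.2},
    {"group": "B", "stretch_rate": 0.1},
]

def score(applicant):
    """Toy recommender rule: more stretch applications read as more ambition."""
    return 50 + 50 * applicant["stretch_rate"]

def mean_score(group):
    """Average score for one group, computed only to measure the outcome."""
    return mean(score(a) for a in applicants if a["group"] == group)

print(mean_score("A"), mean_score("B"))
```

Dropping the protected attribute does not remove the gap, because the behavioral feature carries the group signal.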

Rather than, say, inject human judgment into the process, LinkedIn added new AI in 2018 designed to correct for the first algorithm’s bias. Other companies side-step the AI issue. CareerBuilder addresses bias by teaching employers how to eliminate it from their job postings, while Monster relies on attracting users from diverse backgrounds. ZipRecruiter’s CEO says that site classifies job hunters using 64 types of information, including geographical data but not identifying pieces like names. He refused to share more details, but is confident his team’s method is as bias-free as can be. Perhaps—but the claims of any of these sites are difficult or impossible to verify.

Cynthia Murrell, June 30, 2021

SPACtacular Palantir Tech Gets More Attention: This Is Good?

June 30, 2021

Palantir is working to expand its public-private partnership operations beyond security into the healthcare field. Some say the company has fallen short in its efforts to peddle security software to officials in Europe, so the data-rich field of government-managed healthcare is the next logical step. Apparently the pandemic gave Palantir the opening it was looking for, paving the way for a deal it made with the UK’s National Health Service to develop the NHS COVID-19 Data Store. Now however, CNBC reports, “Campaign Launches to Try to Force Palantir Out of Britain’s NHS.” Reporter Sam L. Shead states that more than 50 organizations, led by tech-justice nonprofit Foxglove, are protesting Palantir’s involvement. We learn:

“The Covid-19 Data Store project, which involves Palantir’s Foundry data management platform, began in March 2020 alongside other tech giants as the government tried to slow the spread of the virus across the U.K. It was sold as a short-term effort to predict how best to deploy resources to deal with the pandemic. The contract was quietly extended in December, when the NHS and Palantir signed a £23 million ($34 million) two-year deal that allows the company to continue its work until December 2022. The NHS was sued by political website openDemocracy in February over the contract extension. ‘December’s new, two-year contract reaches far beyond Covid: to Brexit, general business planning and much more,’ the group said. The NHS contract allows Palantir to help manage the data lake, which contains everybody’s health data for pandemic purposes. ‘The reality is, sad to say, all this whiz-bang data integration didn’t stop the United Kingdom having one of the worst death tolls in the Western world,’ said [Foxglove co-founder Cori] Crider. ‘This kind of techno solutionism is not necessarily the best way of making an NHS sustainable for the long haul.’”

Not surprisingly, privacy is the advocacy groups’ main concern. The very personal data used in the project is not being truly anonymized. Instead, it is being “pseudo-anonymized,” a reversible process in which identifying information is swapped out for an alias. Both the NHS and Palantir assure citizens re-identification will only be performed if necessary, and that the company has no interest in the patient data itself. In fact, we are told, that data remains the property of NHS and Palantir can only do so much with it. Those protesting the project, though, understand that money can talk louder than corporate promises; many companies would offer much for the opportunity to monetize that data.
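Why does reversibility matter? A minimal sketch of pseudonymization, assuming a simple lookup table kept by the data controller and random aliases. The class, field names, and record are hypothetical; real deployments often use keyed hashing or tokenization services instead. The point is that whoever holds the table can re-identify.

```python
import secrets

class Pseudonymizer:
    """Swap identifiers for random aliases; the lookup table keeps it reversible."""

    def __init__(self):
        self._alias_to_id = {}
        self._id_to_alias = {}

    def pseudonymize(self, identifier):
        # Reuse the same alias for the same person so records still link up.
        if identifier not in self._id_to_alias:
            alias = "P-" + secrets.token_hex(4)
            while alias in self._alias_to_id:  # avoid a (rare) alias collision
                alias = "P-" + secrets.token_hex(4)
            self._id_to_alias[identifier] = alias
            self._alias_to_id[alias] = identifier
        return self._id_to_alias[identifier]

    def reidentify(self, alias):
        # Only whoever holds this table can reverse the mapping.
        return self._alias_to_id[alias]

vault = Pseudonymizer()
record = {"nhs_number": "000 000 0000", "test_result": "positive"}  # invented
shared = {**record, "nhs_number": vault.pseudonymize(record["nhs_number"])}
print(shared)
print(vault.reidentify(shared["nhs_number"]))
```

Anonymization would throw the table away; pseudonymization keeps it, which is why promises about when re-identification happens carry so much weight.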

Cynthia Murrell, June 30, 2021