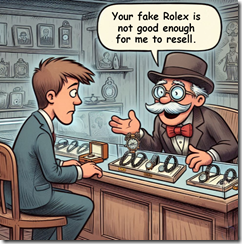

Pragmatism or the Normalization of Good Enough

November 14, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

I recall that some teacher told me that the Mona Lisa painter fooled around more with his paintings than he did with his assistants. True or false? I don’t know. I do know that when I wandered into the Louvre in late 2024, there were people emulating sardines. These individuals wanted a glimpse of good old Mona.

Is Hamster Kombat the 2024 incarnation of the Mona Lisa? I think this image is part of the Telegram eGame’s advertising. Nice art. Definitely a keeper for the swipers of the future.

I read “Methodology Is Bullsh&t: Principles for Product Velocity.” The main idea, in my opinion, is do stuff fast and adapt. I think this is similar to the go-go mentality of whatever genius said, “Go fast. Break things.” This version of the Truth says:

All else being equal, there’s usually a trade-off between speed and quality. For the most part, doing something faster usually requires a bit of compromise. There’s a corner getting cut somewhere. But all else need not be equal. We can often eliminate requirements … and just do less stuff. With sufficiently limited scope, it’s usually feasible to build something quickly and to a high standard of quality. Most companies assign requirements, assert a deadline, and treat quality as an output. We tend to do the opposite. Given a standard of quality, what can we ship in 60 days? Recent escapades notwithstanding, Elon Musk has a similar thought process here. Before anything else, an engineer should make the requirements less dumb.

Would the approach work for the Mona Lisa dude or for Albert Einstein? I think Al fumbled along for years, asking people to help with certain mathy issues, and worrying about how he saw a moving train relative to one parked at the station.

I think the idea in the essay is the 2024 view of a practical way to get a product or service before prospects. Redefining “fast” in terms of a specification trimmed to the MVP, or minimum viable product, makes sense to TikTok scrollers and venture partners trying to find a pony to ride at a crowded kids’ party.

One of the touchstones in the essay, in my opinion, is this statement:

Our customers are engineers, so we generally expect that our engineers can handle product, design, and all the rest. We don’t need to have a whole committee weighing in. We just make things and see whether people like them.

I urge you to read the complete original essay.

Several observations:

- Some people like the Mona Lisa dude are engaged in a process of discovery, not shipping something good enough. Discovery takes some people time, lots of time. What happens during this process is part of the process of expanding an information base.

- The go-go approach has interesting consequences; for example, based on the anecdotal and flawed survey data, young users of social media evidence a number of interesting behaviors. The idea of “let ’er rip” appears to have some impact on young people. Perhaps you have first-hand experience with this problem? I know people whose children have manifested quite remarkable behaviors. I do know that difficulty with certain basic mental functions like concentrating is visible to me every time I have a teenager check me out at the grocery store.

- By redefining excellence and quality, the notion of a high-value goal drops down a bit. Some new automobiles don’t work too well; for example, the Tesla Cybertruck owner whose vehicle was not able to leave the dealer’s lot.

Net net: Is a Telegram mini app Hamster Kombat today’s equivalent of the Mona Lisa?

Stephen E Arnold, November 14, 2024

The Yogi Berra Principle: Déjà Vu All Over Again

November 7, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

I noted two write ups. The articles share what I call a meta concept. The first article is from PC World called “Office Apps Crash on Windows 11 24H2 PCs with CrowdStrike Antivirus.” The actors in this soap opera are the confident Microsoft and the elegant CrowdStrike. The write up says:

The annoying error affects Office applications such as Word or Excel, which crash and become completely unusable after updating to Windows 11 24H2. And this apparently only happens on systems that are running antivirus software by CrowdStrike. (Yes, the very same CrowdStrike that caused the global IT meltdown back in July.)

A patient, skilled teacher explains to the smart software, “You goofed, speedy.” Thanks, Midjourney. Good enough.

The other write up adds some color to the trivial issue. “Windows 11 24H2 Misery Continues, As Microsoft’s Buggy Update Is Now Breaking Printers – Especially on Copilot+ PCs” says:

Neowin reports that there are quite a number of complaints from those with printers who have upgraded to Windows 11 24H2 and are finding their device is no longer working. This is affecting all the best-known printer manufacturers, the likes of Brother, Canon, HP and so forth. The issue is mainly being experienced by those with a Copilot+ PC powered by an Arm processor, as mentioned, and it either completely derails the printer, leaving it non-functional, or breaks certain features. In other cases, Windows 11 users can’t install the printer driver.

Okay, dinobaby, what’s the meta concept thing? Let me offer my ideas about the challenge these two write ups capture:

- Microsoft may have what I call the Boeing disease; that is, the engineering is not so hot. Many things create problems. Finger pointing and fast talking do not solve the problems.

- The entities involved are companies and software which have managed to become punch lines for some humorists. For instance, what software can produce more bricks than a kiln? Answer: A Windows update. Ho ho ho. Funny until one cannot print a Word document for a Type A, drooling MBA.

- Remediating processes don’t remediate. The word process itself generates flawed outputs. Stated another way, like some bank mainframes and 1960s code, fixing is not possible and there are insufficient people and money to get the repair done.

The meta concept is that the way well paid workers tackle an engineering project is capable of outputting a product or service that has a failure rate approaching 100 percent. How about those Windows updates? Are they the digital equivalent of the Boeing space initiative?

The answer is, “No.” We have instances of processes which cannot produce reliable products and services. The framework itself produces failure. This idea has some interesting implications. If software cannot allow a user to print, what else won’t perform as expected? Maybe AI?

Stephen E Arnold, November 7, 2024

The Reason IT Work is Never Done: The New Sisyphus Task

November 1, 2024

Why are systems never completely fixed? There is always some modification that absolutely must be made. In a recent blog post, engagement firm Votito chalks it up to Tog’s Paradox (aka The Complexity Paradox). This rule states that when a product simplifies user tasks, users demand new features that perpetually increase the product’s complexity. Both minimalists and completionists are doomed to disappointment, it seems.

The post supplies three examples of Tog’s Paradox in action. Perhaps the most familiar to many is that of social media. We are reminded:

“Initially designed to provide simple ways to share photos or short messages, these platforms quickly expanded as users sought additional capabilities, such as live streaming, integrated shopping, or augmented reality filters. Each of these features added new layers of complexity to the app, requiring more sophisticated algorithms, larger databases, and increased development efforts. What began as a relatively straightforward tool for sharing personal content has transformed into a multi-faceted platform requiring constant updates to handle new features and growing user expectations.”

The post asserts software designers may as well resign themselves to never actually finishing anything. Every project should be seen as an ongoing process. The writer observes:

“Tog’s Paradox reveals why attempts to finalize design requirements are often doomed to fail. The moment a product begins to solve its users’ core problems efficiently, it sparks a natural progression of second-order effects. As users save time and effort, they inevitably find new, more complex tasks to address, leading to feature requests that expand the scope far beyond what was initially anticipated. This cycle shows that the product itself actively influences users’ expectations and demands, making it nearly impossible to fully define design requirements upfront. This evolving complexity highlights the futility of attempting to lock down requirements before the product is deployed.”

Maybe humanoid IT workers will become enshrined as new age Sisyphuses? Or maybe Sisyphi?

Cynthia Murrell, November 1, 2024

The Future of Copyright: AI + Bots = Surprise. Disappeared Mario Content.

October 4, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t2_thumb-1.gif) This essay is the work of a dumb dinobaby. No smart software required.

Did famously litigious Nintendo hire “brand protection” firm Tracer to find and eliminate AI-made Mario mimics? According to The Verge, “An AI-Powered Copyright Tool Is Taking Down AI-Generated Mario Pictures.” We learn the tool went on a rampage through X, filing takedown notices for dozens of images featuring the beloved Nintendo character. Many of the images were generated by xAI’s Grok AI tool, which is remarkably cavalier about infringing (or offensive) content. But some seem to have been old-school fan art. (Whether noncommercial fan art is fair use or copyright violation continues to be debated.) Verge writer and editor Wes Davis reports:

“The company apparently used AI to identify the images and serve takedown notices on behalf of Nintendo, hitting AI-generated images as well as some fan art. The Verge’s Tom Warren received an X notice that some content from his account was removed following a Digital Millennium Copyright Act (DMCA) complaint issued by a ‘customer success manager’ at Tracer. Tracer offers AI-powered services to companies, purporting to identify trademark and copyright violations online. The image in question, shown above, was a Grok-generated picture of Mario smoking a cigarette and drinking an oddly steaming beer.”

Navigate to the post to see the referenced image, where the beer does indeed smoke but the ash-laden cigarette does not. Davis notes the rest of the posts are, of course, no longer available to analyze. However, some users have complained their original fan art was caught in the sweep. We learn:

“One of the accounts that was listed in the DMCA request, OtakuRockU, posted that they were warned their account could be terminated over ‘a drawing of Mario,’ while another, PoyoSilly, posted an edited version of a drawing they said was identified in a notice. (The new one had a picture of a vaguely Mario-resembling doll inserted over a part of the image, obscuring the original part containing Mario.)”

Since neither Nintendo nor Tracer responded to Davis’ request for comment, he could not confirm Tracer was acting at the game company’s request. He is not, however, ready to let the matter go: The post closes with a request for readers to contact him if they had a Mario image taken down, whether AI-generated or not. See the post for that contact information, if applicable.

Cynthia Murrell, October 4, 2024

Microsoft Explains Who Is at Fault If Copilot Smart Software Does Dumb Things

September 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Those Windows Central experts have delivered a Dusie of a write up. “Microsoft Says OpenAI’s ChatGPT Isn’t Better than Copilot; You Just Aren’t Using It Right, But Copilot Academy Is Here to Help” explains:

Avid AI users often boast about ChatGPT’s advanced user experience and capabilities compared to Microsoft’s Copilot AI offering, although both chatbots are based on OpenAI’s technology. Earlier this year, a report disclosed that the top complaint about Copilot AI at Microsoft is that “it doesn’t seem to work as well as ChatGPT.”

I think I understand. Microsoft uses OpenAI, other smart software, and home brew code to deliver Copilot in apps, the browser, and Azure services. However, users have reported that Copilot doesn’t work as well as ChatGPT. That’s interesting. Hallucination-capable software processed by the Microsoft engineering legions is allegedly inferior to ChatGPT.

Enthusiastic young car owners replace individual parts. But the old car remains an old, rusty vehicle. Thanks, MSFT Copilot. Good enough. No, I don’t want to attend a class to learn how to use you.

Who is responsible? The answer certainly surprised me. Here’s what the Windows Central wizards offer:

A Microsoft employee indicated that the quality of Copilot’s response depends on how you present your prompt or query. At the time, the tech giant leveraged curated videos to help users improve their prompt engineering skills. And now, Microsoft is scaling things a notch higher with Copilot Academy. As you might have guessed, Copilot Academy is a program designed to help businesses learn the best practices when interacting and leveraging the tool’s capabilities.

I think this means that the user is at fault, not Microsoft’s refactored version of OpenAI’s smart software. The fix is for the user to learn how to write prompts. Microsoft is not responsible. But OpenAI’s implementation of ChatGPT is perceived as better. Furthermore, training to use ChatGPT is left to third parties. I hope I am close to the pin on this summary. OpenAI just puts Strawberries in front of hungry users and lets them gobble up ChatGPT output. Microsoft fixes up ChatGPT and users are allegedly not happy. Therefore, Microsoft puts the burden on the user to learn how to interact with the Microsoft version of ChatGPT.

I thought smart software was intended to make work easier and more efficient. Why do I have to go to school to learn Copilot when I can just pound text or a chunk of data into ChatGPT, click a button, and get an output? Not even a Palantir boot camp will lure me to the service. Sorry, pal.

My hypothesis is that Microsoft is a couple of steps away from creating something designed for regular users. In its effort to “improve” ChatGPT, the experience of using Copilot makes the user’s life more miserable. I think Microsoft’s own engineering practices act like a stuck brake on an old Lada. The vehicle has problems, so installing a new master cylinder does not improve the automobile.

Crazy thinking: That’s what the write up suggests to me.

Stephen E Arnold, September 23, 2024

Is AI Taking Jobs? Of Course Not

September 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an unusual story about smart software. “AI May Not Steal Many Jobs After All. It May Just Make Workers More Efficient” espouses the notion that workers will use smart software to do their jobs more efficiently. I have some issues with this thesis, but let’s look at a couple of the points in the “real” news write up.

Thanks, MSFT Copilot. When will the Copilot robot take over a company and subscribe to Office 365 for eternity and pay up front?

Here’s some good news for those who believe smart software will kill humanoids:

AI may not prove to be the job killer that many people fear. Instead, the technology might turn out to be more like breakthroughs of the past — the steam engine, electricity, the Internet: That is, eliminate some jobs while creating others. And probably making workers more productive in general, to the eventual benefit of themselves, their employers and the economy.

I am not sure doomsayers will be convinced. Among the most interesting doomsayers are those who may be unemployable but looking for a hook to stand out from the crowd.

Here’s another key point in the write up:

The White House Council of Economic Advisers said last month that it found “little evidence that AI will negatively impact overall employment.” The advisers noted that history shows technology typically makes companies more productive, speeding economic growth and creating new types of jobs in unexpected ways. They cited a study this year led by David Autor, a leading MIT economist: It concluded that 60% of the jobs Americans held in 2018 didn’t even exist in 1940, having been created by technologies that emerged only later.

I love positive statements which invoke the authority of MIT, an outfit which found Jeffrey Epstein just a wonderful source of inspiration and donations. As the US shifted from making to servicing, the beneficiaries are those who have quite specific skills for which demand exists.

And now a case study which is assuming “chestnut” status:

The Swedish furniture retailer IKEA, for example, introduced a customer-service chatbot in 2021 to handle simple inquiries. Instead of cutting jobs, IKEA retrained 8,500 customer-service workers to handle such tasks as advising customers on interior design and fielding complicated customer calls.

The point of the write up is that smart software is a friendly helper. That seems okay for the state of transformer-centric methods available today. For a moment, let’s consider another path. This is a hypothetical, of course, like the profits from existing AI investment fliers.

What happens when another, perhaps more capable approach to smart software becomes available? What if the economies from improving efficiency whet the appetite of bean counters for greater savings?

My view is that these reassurances of 2024 are likely to ring false when the next wave of innovation in smart software flows from innovators. I am glad I am a dinobaby because software can replicate most of what I have done for almost the entirety of my 60-plus year work career.

Stephen E Arnold, September 9, 2024

Another Big Consulting Firm Does Smart Software… Sort Of

September 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Will programmers and developers become targets for prosecution when flaws cripple vital computer systems? That may be a good idea because pointing to the “algorithm” as the cause of a problem does not seem to reduce the number of bugs, glitches, and unintended consequences of software. A write up which itself may be a blend of human and smart software suggests change is afoot.

Thanks, MSFT Copilot. Good enough.

“Judge Rules $400 Million Algorithmic System Illegally Denied Thousands of People’s Medicaid Benefits” reports that software crafted by the services firm Deloitte did not work as the State of Tennessee assumed. Yep, assume. A very interesting word.

The article explains:

The TennCare Connect system—built by Deloitte and other contractors for more than $400 million—is supposed to analyze income and health information to automatically determine eligibility for benefits program applicants. But in practice, the system often doesn’t load the appropriate data, assigns beneficiaries to the wrong households, and makes incorrect eligibility determinations, according to the decision from Middle District of Tennessee Judge Waverly Crenshaw Jr.

At one time, Deloitte was an accounting firm. Then it became a consulting outfit a bit like McKinsey. Well, a lot like that firm and other blue-chip consulting outfits. In its current manifestation, Deloitte is into technology, programming, and smart software. Well, maybe the software is smart but the programmers and the quality control seem to be riding in a different school bus from some other firms’ technical professionals.

The write up points out:

Deloitte was a major beneficiary of the nationwide modernization effort, winning contracts to build automated eligibility systems in more than 20 states, including Tennessee and Texas. Advocacy groups have asked the Federal Trade Commission to investigate Deloitte’s practices in Texas, where they say thousands of residents are similarly being inappropriately denied life-saving benefits by the company’s faulty systems.

In 2016, Cathy O’Neil published Weapons of Math Destruction. Her book had a number of interesting examples of what goes wrong when careless people make assumptions about numerical recipes. If she does another book, she may include this Deloitte case.

Several observations:

- The management methods used to create these smart systems require scrutiny. The downstream consequences are harmful.

- The developers and programmers can be fired, but the failure to have remediating processes in place when something unexpected surfaces must be part of the work process.

- Less informed users and more smart software strikes me as a combustible mixture. When a system ignites, the impacts may reverberate in other smart systems. What entity is going to fix the problem and accept responsibility? The answer is, “No one” unless there are significant consequences.

The State of Tennessee’s experience makes clear that a “brand name”, slick talk, an air of confidence, and possibly ill-informed managers can do harm. The opioid misstep was bad. Now imagine that type of thinking in the form of a fast, indifferent, and flawed “system.” Firing a 25 year old is not the solution.

Stephen E Arnold, September 3, 2024

The Seattle Syndrome: Definitely Debilitating

August 30, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I think the film “Sleepless in Seattle” included dialog like this:

“What do they call it when everything intersects?”

“The Bermuda Triangle.”

Seattle has Boeing. The company is in the news not just for doors falling off its aircraft. The outfit has stranded two people in earth orbit and has to let Elon Musk bring them back to earth. And Seattle has Amazon, an outfit that stands behind the products it sells. And I have to include Intel Labs, not too far from the University of Washington, which is famous in its own right for many things.

Two job seekers discuss future opportunities in some of Seattle and environs’ most well-known enterprises. The image of the city seems a bit dark. Thanks, MSFT Copilot. Are you having some dark thoughts about the area, its management talent pool, and its commitment to ethical business activity? That’s a lot of burning cars, but whatever.

Is Seattle a Bermuda Triangle for large companies?

This question invites another; specifically, “Is Microsoft entering Seattle’s Bermuda Triangle?”

The giant outfit has entered a deal with the interesting specialized software and consulting company Palantir Technologies Inc. This firm has a history of ups and downs since its founding 21 years ago. Microsoft has committed to smart software from OpenAI and other outfits. Artificial intelligence will be “in” everything from the Azure Cloud to Windows. Despite concerns about privacy, Microsoft wants each Windows user’s machine to keep screenshots of what the user “does” on that computer.

Microsoft seems to be navigating the Seattle Bermuda Triangle quite nicely. No hints of a flash disaster like the sinking of the sailing yacht Bayesian. Who could have predicted that? (That’s a reminder that fancy math does not deliver 1.000000 outputs on a consistent basis.)

Back to Seattle. I don’t think failure or extreme stress is due to the water. The weather, maybe? I don’t think it is the city government. It is probably not the multi-faceted start up community nor the distinctive vocal tones of its most high profile podcasters.

Why is Seattle emerging as a Bermuda Triangle for certain firms? What forces are intersecting? My observations are:

- Seattle’s business climate is a precursor of broader management issues. I think it is like the pigeons that Greeks examined for clues about their future.

- The individuals who work at Boeing-type outfits go along with business processes modified incrementally to ignore issues. The mental orientation of those employed is either malleable or indifferent to downstream issues. For example, Windows update killed printing or some other function. The response strikes me as “meh.”

- The management philosophy disconnects from users and focuses on delivering financial results. Those big houses come at a cost. The payoff is personal. The cultural impacts are not on the radar. Hey, those quantum Horse Ridge things make good PR. What about the new desktop processors? Just great.

Net net: I think Seattle is a city playing an important role in defining how businesses operate in 2024 and beyond. I wish I was kidding. But I am bedeviled by reminders of a space craft which issues one-way tickets, software glitches, and products which seem to vary from the online images and reviews. (Maybe it is the water? Bermuda Triangle water?)

Stephen E Arnold, August 30, 2024

Good Enough: The New Standard of Excellence

August 20, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I read an interesting essay about software development. “[The] Biggest Productivity Killers in the Engineering Industry” presents three issues which add to the time and cost of a project. Let’s look at each of these factors and then one trivial downstream consequence of implementing these productivity touchpoints.

The three killers are:

- Working on a project until it meets one’s standards of “perfectionism.” Like “love” and “ethics”, perfectionism is often hard to define without a specific context. A designer might look at an interface and its colors and say, “It’s perfect.” The developer or, heaven forbid, the client looks and says, “That sucks.” Oh, oh.

- Stalling; that is, not jumping right into a project and making progress. I worked at an outfit which valued what it called “an immediate and direct response.” The idea is that action is better than reaction. Plus it demonstrates that one is not fooling around.

- Context switching; that is, dealing with other priorities or interruptions.

I want to highlight one of these “killers”: the need for “good enough.” The essay contains some useful illustrations, including one for the perfectionism-good enough trade off. The idea is pretty clear. Chasing “perfect” for the software or some other task means that more time is required. If something takes too long, then the value of chasing perfectionism hits a cost wall. Therefore, one should trade off time and value by turning in the work when it is good enough.

The logic is understandable. I do have one concern not addressed in the essay. I believe my concern applies to the other two productivity killers, stalling and interruptions (my term for context switching).

What is this concern?

How about doors falling off aircraft, stranded astronauts, cybersecurity which fails to protect Social Security Numbers, and city governments who cannot determine if compromised data were “good” or “corrupted.” We just know the data were compromised. There are other examples; for instance, the CrowdStrike misstep which affected only a few million people. How did CrowdStrike happen? My hunch is that “good enough” thinking was involved along with someone putting off making sure the internal controls were actually controlling and interruptions so the person responsible for software controls was pulled into a meeting instead of finishing and checking his or her work.

The difficulty is composed of several capabilities; specifically:

- Does the person doing the job know how to make it work in a good enough manner? In my experience, the boss may not and simply wants the fix implemented now or the product shipped immediately.

- Does the company have a culture of excellence or is it similar to big outfits which cannot deliver live streaming content, allow reviewers to write about a product without threatening them, or provide tactics which kill people because no one on the team understands the concept of ethical behavior? Frankly, today I am not sure any commercial enterprise cares about much other than revenue.

- Does anyone in a commercial organization have responsibility to determine the practical costs of shipping a product or delivering a service that does not deliver reliable outputs? Reaction to failed good enough products and services is, in my opinion, the management method applied to downstream problems.

Net net: Good enough, like it or not, is the new gold standard. Or, is that standard like the Olympic medals, an amalgam? The “real” gold is a veneer; the “good” is a coating on enough.

Stephen E Arnold, August 20, 2024

DeepMind Explains Imagination, Not the Google Olympic Advertisement

August 8, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I admit it. I am suspicious of Google “announcements,” ArXiv papers, and revelations about the quantumly supreme outfit. I keep remembering the Google VP dead on a yacht with a special contract worker. I know about the Googler who tried to kill herself because a dalliance with a Big Time Google executive went off the rails. I know about the baby making among certain Googlers in the legal department. I know about the behaviors which the US Department of Justice described as “monopolistic.”

When I read “What Bosses Miss about AI,” I thought immediately about Google’s recent mass market televised advertisement about uses of Google artificial intelligence. The set up is that a father (obviously interested in his progeny) turned to Google’s generative AI to craft an electronic message to the humanoid. I know “quality time” is often tough to accommodate, but an email?

The Googler who allegedly wrote the cited essay has a different take on how to use smart software. First, most big-time thinkers are content with AI performing cost-reduction activities. AI is less expensive than a humanoid. These entities require health care, retirement, a shoulder upon which to cry (a key function for personnel in the human relations department), and time off.

Another type of big-time thinker grasps the idea that smart software can make processes more efficient. The write up describes this as the “do what we do, just do it better” approach to AI. The assumption is that the process is neutral, and it can be improved. Imagine the value of AI to Vlad the Impaler!

The third category of really Big Thinker is the leader who can use AI for imagination. I like the idea of breaking a chaotic mass of use cases into categories anchored to the Big Thinkers who use the technology.

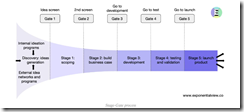

However, I noted what I think is unintentional irony in the write up. This chart shows the non-AI approach to doing what leadership is supposed to do:

What happens when a really Big Thinker uses AI to zip through this type of process. The acceleration is delivered from AI. In this Googler’s universe, I think one can assume Google’s AI plays a modest role. Here’s the payoff paragraph:

Traditional product development processes are designed based on historical data about how many ideas typically enter the pipeline. If that rate is constant or varies by small amounts (20% or 50% a year), your processes hold. But the moment you 10x or 100x the front of that pipeline because of a new scientific tool like AlphaFold or a generative AI system, the rest of the process clogs up. Stage 1 to Stage 2 might be designed to review 100 items a quarter and pass 5% to Stage 2. But what if you have 100,000 ideas that arrive at Stage 1? Can you even evaluate all of them? Do the criteria used to pass items to Stage 2 even make sense now? Whether it is a product development process or something else, you need to rethink what you are doing and why you are doing it. That takes time, but crucially, it takes imagination.
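The arithmetic in that paragraph is easy to check. Here is a minimal Python sketch, assuming a fixed Stage 1 review capacity of 100 items a quarter and the 5% pass rate from the quote. The function names are my own illustration, not anything from the essay.

```python
import math


def quarters_to_clear(backlog: int, reviews_per_quarter: int) -> int:
    """Quarters needed to review every idea at a fixed review capacity."""
    return math.ceil(backlog / reviews_per_quarter)


def passed_to_stage_2(reviewed: int, pass_rate: float) -> int:
    """Items promoted to Stage 2 if the old pass rate is kept unchanged."""
    return round(reviewed * pass_rate)


REVIEWS_PER_QUARTER = 100  # capacity from the quoted paragraph
PASS_RATE = 0.05           # 5% pass rate from the quoted paragraph

for ideas in (100, 100_000):
    quarters = quarters_to_clear(ideas, REVIEWS_PER_QUARTER)
    promoted = passed_to_stage_2(ideas, PASS_RATE)
    print(f"{ideas} ideas: {quarters} quarter(s) of review, {promoted} sent to Stage 2")
```

At the quoted capacity, the 100,000-idea pile needs 1,000 quarters, or 250 years, of Stage 1 review, and the unchanged 5% rule would push 5,000 items at a Stage 2 sized for five. The pipeline clogs, as advertised.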

Let’s think about this advice and consider the imagination component of the Google Olympics’ advertisement.

- Google implemented a process, spent money, did “testing,” ran the advert, and promptly withdrew it. Why? The ad was annoying to humanoids.

- Google’s “imagination” did not work. Perhaps this is a failure of the Google AI and the Google leadership? The advert succeeded in making Google the focal point of some good, old-fashioned, quite humanoid humor. Laughing at Google AI is certainly entertaining, but it appears to have been something that Google’s leadership could not “imagine.”

- The Google AI obviously reflects Google engineering choices. The parent who must turn to Google AI to demonstrate love, parental affection, and support to one’s child is, in my opinion, quite Googley. Whether the action is human or not might be an interesting topic for a coffee shop discussion. For non-Googlers, the idea of talking about what many perceived as stupid, insensitive, and inhumane is probably a non-starter. Just post on social media and move on.

Viewed in a larger context, the cited essay makes it clear that Googlers embrace AI. Googlers see others’ reaction to AI as ranging from doltish to informed. Google liked the advertisement well enough to pay other companies to show the message.

I suggest the following: Google leadership should ask several AI systems if proposed advertising copy can be more economical. That’s a Stage 1 AI function. Then Google leadership should ask several AI systems how the process of creating the ideas for an advertisement can be improved. That’s a Stage 2 AI function. And, finally, Google leadership should ask, “What can we do to prevent bonkers problems resulting from trying to pretend we understand people who know nothing and care less about the three ‘stages’ of AI understanding?”

Will that help out the Google? I don’t need to ask an AI system. I will go with my instinct. The answer is, “No.”

That’s one of the challenges Google faces. The company seems unable to help itself do anything other than sell ads, promote its AI system, and cruise along in quantumly supremeness.

Stephen E Arnold, August 8, 2024