Is Google the Macintosh in the Big Apple PAI?

January 27, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I want to be fair. Everyone, including companies that are recognized in the US as having the same “rights” as a citizen, is entitled to an opinion. Google is expressing an opinion, if the information in “Google Appeals Ruling on Illegal Search Monopoly” is correct. The write up says:

Google has appealed a US ruling that in 2024 found the company had an illegal monopoly in internet search and search advertising, reports CNBC. After a special hearing on penalties, the court decided in 2025 on milder measures than those originally proposed by the US Department of Justice.

Google, if I understand this news report, believes it is not a monopoly. Okay, that’s an opinion. Let’s assume that Google is correct. Its Android operating system, its Chrome browser, and its online advertising businesses along with other Google properties do not constitute a monopoly. Just keep that thought in mind: Google is not a monopoly.

Thanks, Venice. Good enough.

Consider that idea in the context of this write up in Macworld, an online information service: “If Google Helps Apple Beat Google, Does Everyone Lose?” The article states:

Basing Siri on Google Gemini, then, is a concession of defeat, and the question is what that defeat will cost. Of course, it will result in more features and very likely a far more capable Siri. Google is in a better position than Apple to deliver on the reckless promises and vaporware demos we heard and saw at WWDC 2024. The question is what compromises Apple will be asked to make, and which compromises it will be prepared to make, in return.

With all due respect to the estimable Macworld, I want to suggest the key question is: “What does the deal with Apple mean to Google’s argument that it is not a monopoly?”

The two companies control the lion’s share of the mobile device operating systems. The data from these mobile devices pump a significant amount of useful metadata and content to each of these companies. One can tell me that there will be a “Great Wall of Secrecy” between the two firms. I can be reassured that every system administrator involved in this tie up, deal, relationship, or “just pals” cooperating set up will not share data.

Google will remain the same privacy-centric, user-first operation it has been since it got into the online advertising business decades ago. The “don’t be evil” slogan is no longer part of the company credo, but the spirit of just being darned ethical remains the same as it was when Paul Buchheit allegedly came up with this memorable phrase. I assume it is now part of the Google DNA.

Apple will continue to embrace the security, privacy, and vertical business approach that it has followed for decades. Despite the niggling complaints about the company’s using negotiation to do business with some interesting entities in the Middle Kingdom, Apple is working hard to allow its users the flexibility to do almost anything each wants within the Apple ecosystem of the super-open App Store.

Who wins in this deal?

I would suggest that Google is the winner for these reasons:

- Google now provides its services to the segment of the upscale mobile market that it has not been able to saturate

- Google provides Apple with its AI services from its constellation of data centers although that may change after Apple learns more about smart software, Google’s logs, and Google’s advertising system

- Google aced out the totally weak-wristed competitors like Grok, OpenAI, Apple’s own internal AI team or teams, and open source solutions from a country where Apple has a few easy-to-manage, easy-to-replace manufacturing sites.

What’s Apple get? My view is:

- A way to meet its years-old promises about smart software

- Some time to figure out how to position this waving of the white flag and the emails to Google suggesting, “Let’s meet up for a chat.”

- Catch up with companies that are doing useful things with smart software despite the hallucination problems.

The cited write up says:

In the end, the most likely answer is some complex mixture of incentives that may never be completely understood outside the companies (or outside an antitrust court hearing).

That statement is indeed accurate. Score a big win for the Googlers. Google is the Apple pulp, the skin, and the meat of the deal.

Stephen E Arnold, January 27, 2026

The Final Word on Tricky Online Shopping Tactics

January 26, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

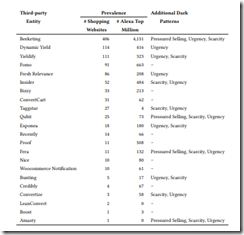

I read a round up of what I call “tricky online shopping tactics.” The data flow from an academic project called WebTAP. The researchers are smart; each hails from either Princeton University or the University of Chicago. Selected data are presented in “Dark Patterns at Scale: Findings from a Crawl of 11K Shopping Websites.” The authors (hopefully just one of them) will give a talk at a conference about tricky retail methods using the jazzier jargon “dark patterns.” Either term (my dinobaby version or the hip new buzzword) means the same thing: You are bamboozled into buying stuff you may not want, need, or price check before clicking.

I don’t want to be critical of these earnest researchers. There is a list of the sites that the researchers determined do some fancy dancing. Here it is:

If you want to read the list, you will find it on page 24 of the study team’s 32-page report. I want to point out that sites I know use tricky online shopping tactics are not on the list. Here’s one example of a site I expected to find on the radar of the estimable study team from Princeton and the University of Chicago: Amazon.

But what do the researchers say about dicey online shopping sites I never encounter? The paper states:

We found at least one instance of dark pattern on approximately 11.1% of the examined websites. Notably, 183 of the websites displayed deceptive messages. Furthermore, we observed that dark patterns are more likely to appear on popular websites. Finally, we discovered that dark patterns are often enabled by third-party entities, of which we identify 22; two of these advertise practices that enable deceptive patterns. Based on these findings, we suggest that future work focuses on empirically evaluating the effects of dark patterns on user behavior, developing countermeasures against dark patterns so that users have a fair and transparent experience, and extending our work to discover dark patterns in other domains.

Net net: No Amazon, no Microsoft, no big name online retailers like Walmart, and no product pitch blogs like VentureBeat-type publications. No suggestions for regulatory action to protect consumers. No data about the increase or decrease in the number of sites using dark patterns. Yep, there is indeed work to be done. Why not focus on deception as a business strategy and skip the jazzy jargon?

Stephen E Arnold, January 26, 2026

Chat Data Leaks: Why Worry?

January 23, 2026

A couple of big outfits have said that user privacy is number one with a bullet. I believe everything I read on the Internet. For these types of assertions, however, I have some doubts. I have met a computer wizard who can make systems behave like the fellow getting brooms to dance in the Disney movies.

I operate as if anything I type into an AI chatbot is recorded and saved. Privacy advocates want AI companies to keep chatbot chat logs confidential. If government authorities seek information about AI users, people who treasure their privacy will want a search warrant before opening the kimono. The Electronic Frontier Foundation (EFF) explains its reasoning: “AI Chatbot Companies Should Protect Your Conversations From Bulk Surveillance.”

People share extremely personal information with chatbots and that deserves to be protected. You should consider anything you share with a chatbot the equivalent of your texts, email, or phone calls. These logs are already protected by the Fourth Amendment:

“Whether you draft an email, edit an online document, or ask a question to a chatbot, you have a reasonable expectation of privacy in that information. Chatbots may be a new technology, but the constitutional principle is old and clear. Before the government can rifle through your private thoughts stored on digital platforms, it must do what it has always been required to do: get a warrant. For over a century, the Fourth Amendment has protected the content of private communications—such as letters, emails, and search engine prompts—from unreasonable government searches. AI prompts require the same constitutional protection.”

In theory, AI companies shouldn’t comply with law enforcement unless officials have a valid warrant. Law enforcement officials are already seeking out user data in blanket searches called “tower dumps” or “geofence warrants.” This means that people within a certain area have their data shared with law enforcement.

Some US courts are viewing AI chatbot logs as protected speech. Dump searches may be unconstitutional. However, what about two giant companies buying and selling software and services from one another? Will the allegedly private data seep or be shared between the firms? An official statement may say, “We don’t share.” However, what about the individuals working to solve a specific problem? How are those interactions monitored? From my experience with people who can make brooms dance, the guarantees about leaking data are just words. Senior managers can look the other way or rely on their employees’ ethical values to protect user privacy.

However, those assurances raise doubts in my mind. But as Alfred E. Neuman, the mascot of the now-defunct MAD Magazine, said, “What, me worry?”

Whitney Grace, January 23, 2026

YouTube: Fingernails on a Blackboard

January 22, 2026

I read “From the CEO: What’s Coming to YouTube in 2026.” Yep, fingernails on a blackboard. Let’s take a look at a handful of the points in this annual letter to the world. Are advertisers included? No. What about regulators? No. What about media partners? Uh, no.

To whom is the letter addressed? I think it is to the media who report about YouTube, which, as the letter puts it, is “the epicenter of culture.” Yeah, okay, maybe. The letter is also addressed to “creatives.” I think this means the people who post their content to YouTube in the hopes of making big money. Plus, some of the observations are likely to be aimed at those outfits who have the opportunity to participate in the YouTube cable TV clone service.

Okay, let’s begin the dragging of one’s fingernails down an old-school blackboard.

First, one of my instructors at Oxon Hill Primary School (in a suburb of Washington, DC) told me, “Have a strong beginning.” Here’s what the Google YouTube pronouncement offers:

YouTube has the scale, community, and technological investments to lead the creative industry into this next era.

Notice, please, that Google is not providing search. It is not a service. YouTube will “lead the creative industry.” That’s an interesting statement from a company a court has labeled a monopoly. Monopolies don’t lead; they control and dictate. Thanks, Google, your intentions are admirable… for you. For a person who wants to write novels, do investigative reporting, or sculpt, you are explaining the way the world will work.

Here’s another statement that raised little goose bumps on my dinobaby skin:

we remain committed to protecting creative integrity by supporting critical legislation like the NO FAKES Act.

I like the idea that YouTube supports legislation it perceives as useful to itself. I want to point out that Google has filed to appeal the decision that labeled the outfit a monopoly. Google also acts in an arbitrary manner; those who allege a problem with Google cannot obtain direct interaction with the “YouTube team.” Selective rules appear to be the way forward for YouTube.

Finally, I want to point out a passage that set my teeth on edge like a visit to the dentist in Campinas, Brazil, who used a foot-pedaled drill to deal with a cavity afflicting me. That was fun, just like using YouTube search, YouTube filters, or the YouTube interfaces. Here’s the segment from the statement of YouTube in 2026:

To reduce the spread of low quality AI content, we’re actively building on our established systems that have been very successful in combatting spam and clickbait, and reducing the spread of low quality, repetitive content.

Whoa, Nellie or Neal! Why can’t the AI champions at Gemini / DeepMind let AI identify “slop” and label it? A user could then choose: slop or no slop. I think I know the answer. Google’s AI cannot identify slop even though Google AI generates it. Furthermore, my Google-generated YouTube recommendations present slop to me and suggest videos I have already viewed. These missteps suggest that Google will not spend the money to deal with these problems, that its smart software cannot deal with them, or that clicks matter more than anything other than cost cutting. Google and YouTube are the Henry Ford of the AI slop business.

What do Neal’s heartfelt, honest, and entitled comments mean to me, a dinobaby? I shall offer some color about how I interpreted the YouTube statement about 2026:

- The statement dictates.

- The comments about those who create content strike me as self-serving, possibly duplicitous.

- The issue of slop becomes a “to do” with no admission of being a big part of the problem.

Net net: Google you are definitely Googlier than in 2025.

Stephen E Arnold, January 22, 2026

AI: Well, Maybe, Er, Not Exactly Yet

January 21, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I don’t spend much time with 20-somethings if I can help it. I know that if I use a term like “fancy dancing,” I get a glazed look. Right, 6-7? But how about the word “crawfish”? If you think this is something one eats in a paella at a restaurant in Valencia, you will want to know that my use of the word means “retreat from a previous commitment.” When a corporate executive crawfishes, that estimable professional wants to get the heck away from his or her original position. Thus, when a big time big tech outfit’s leadership says, “Well, uh, yeah, AI is… well, you know,” guess what? That is the sound of a crawfisher.

Executives are backing away from the AI robot. Thanks, Venice.ai. Not what I wanted but good enough, just like AI, right?

Okay, consider this statement from the Irish Times:

“So the [intellectual property] of any application or any firm is, how do you use all these models with context engineering or your data? As long as firms can answer that question, they’re gonna be getting ahead.”

Is this statement:

[a] A crystal clear explanation about how to make progress with AI

[b] A statement from a big time management consultant who uses classy words like “gonna”

[c] Crawfishing?

Let’s come at this from a different angle. Here’s a shameless content marketing article from Forbes. (Malcolm, I know you are spinning in heaven, but Forbes is different now.) “Why AI Needs a Strategy Before It Needs a Tool” states:

Most executives bought AI tools with zero strategic intention. No outcomes defined. No KPIs. No ownership. No activation plan. That is why AI adoption stalled…. It was always a strategy issue.

This does not seem to be crawfishing. The author is pointing out that smart software has stumbled while trying to catch a cangrejo de río and is in danger of drowning in a stream in Spain. If that happens, the strategy problem, not the water, will drown the person.

And one more vector. This one is from the boo birds at the Guardian. “Artificial Intelligence Research Has a Slop Problem, Academics Say: ‘It’s a Mess’” points out:

it is almost impossible to know what’s actually going on in AI – for journalists, the public, and even experts in the field

With my reference to Procambarus clarkii, what’s the point? The old AI razzmatazz seems to be something folks want to back away from. Reality bites hard. And what about those data centers that could endanger crawfish in some parts of the US of A? Rest easy, little critters; those data centers may lack the power to light up the little blue, red, and green LEDs.

Stephen E Arnold, January 21, 2026

Meta Management: Building More Sort Of Maybe

January 21, 2026

Modern management examples are often difficult to figure out. One company with an interesting management approach is Meta (né Facebook).

Meta is focused on growing its AI to be the best in the tech industry, but they’re certainly going about it like an ADHD kid. Does Zuckerberg have that along with autism? We don’t know but we do know that CNBC examines the details about Meta’s strategy: “From Llamas To Avocados: Meta’s Shifting AI Strategy Is Causing Internal Confusion.”

Meta is currently on a hiring spree in hopes of rivaling Anthropic, Google, and OpenAI. It, however, remains behind its competitors, which continue to roll out further advances in AI technology. Meta is working on a successor to Llama, and it is developing a new AI model named Avocado.

Meanwhile Wall Street is scratching its head and wondering where Meta is headed. Investors are curious about Meta’s ROI and its investment levels. People are still advised to buy stock in Meta (and Alphabet too).

Here’s what Zuckerberg thinks, according to the estimable CNBC information service:

“But Zuckerberg has much grander ambitions, and the new guard he’s brought in to push the future vision of AI has no background in online ads. The 41-year-old founder, with a net worth of more than $230 billion, has suggested that if Meta doesn’t take big swings, it risks becoming an afterthought in a world that’s poised to be defined by AI.”

He’s debating what to release via open source and what to make proprietary.

Several observations:

- There are some reported management actions in the helmet and goggle unit. RIFs, I think these are called.

- One of the former big guns in AI at Meta is now in France grousing about Meta’s management methods. (Personally, I find these types of company complainers fascinating. Mr. Zuckerberg did not. Yann LeCun ponders AI and complains.)

- The organization of the firm is under the seasoned, mature, well-informed professional AI Wang.

Will Meta’s management method lead the firm to AI glory? Online advertising will keep the money flowing, but will Meta be able to compete, catch up, and then pull ahead of the Apple-Google combine, the hyper promoting OpenAI, or the consulting boutique Googzilla? Yeah, sure.

Whitney Grace, January 21, 2026

Microsoft: Budgeting Data Centers How Exactly?

January 20, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Here’s how bean counters and MBAs work. One gathers assumptions. Depending on the amount of time one has, the data collection can be done blue chip consulting style; that is, over weeks or months of billable hours. Alternatively, bean counters and MBAs can sit in a conference room, talk, jot stuff on a white board, and let one person pull the assumptions into an Excel-type spreadsheet. Then fill in the assumptions, some numbers based on hard data (unlikely in many organizations), and some guess-timates, and check the “flow” of the numbers. Once the numbers have the right optics, reconvene in the conference room, talk, write on the white board, and the same lucky person gets to make the fixes. Once the flow is close enough for horseshoes, others can eyeball the numbers. Maybe a third party will be asked to “validate” the analysis? And maybe not?

Softies are working overtime on the AI data center budget. Thanks, Venice.ai. Good enough. Apologies to Copilot. Your output is less useful than Venice’s. Bummer.

We now know that Microsoft’s budgeting for its big beautiful build out of data centers will need to be reworked. Not to worry. If the numbers are off, the company can raise the price of an existing service or just fire however many people are needed to free up some headroom. Isn’t this how Boeing-type companies design and build aircraft? (Snide comments about cutting corners to save money are not permitted. Thank you.)

How do “we” know this? I read in GeekWire (an estimable source I believe) this story: “Microsoft Responds to AI Data Center Revolt, Vowing to Cover Full Power Costs and Reject Local Tax Breaks.” I noted this passage about Microsoft’s costs for AI infrastructure:

The new plan, announced Tuesday morning [January 13, 2026] in Washington, D.C, includes pledges to pay the company’s full power costs, reject local property tax breaks, replenish more water than it uses, train local workers, and invest in AI education and community programs.

Yep, pledges. Just ask any religious institution about the pledge conversion ratio. Pledges are not cold, hard cash. Why are the Softies doing executive level PR about their plans to build environmentally friendly and family friendly data centers? People in fly over areas are not thrilled with increased costs for power and water, noise pollution from cleverly repurposed jet engines, possible radiation emission from those refurbed nuclear power plants in unused warships, and the general idea of watching good old empty land covered with football-stadium-sized tan structures.

Now back to the budget estimates for Microsoft’s data center investments. Did those bean counters include set-asides for the “full power costs,” property taxes, water management, and black hole costs for “invest in AI education and community”?

Nope.

That means “pledges” are likely to be left fuzzy, defined on the fly, or just forgotten like Bob, the clever interface with precursor smart software. Sorry, Copilot. Bob’s help and Clippy missed the mark. You may too for one reason: Apple and Google have teamed up in an even bigger way than before.

That brings me back to the bean counters at Microsoft, a uni shuttle bus full of MBAs, and a couple of railroad passenger cars filled with legal eagles. The money assumptions need a rethink.

Brad Smith, the Microsoft member of “leadership” blaming security breaches on 1,000 Russian hackers, wants this PR to work. The write up reports that Mr. Smith said:

Smith promised new levels of transparency… The companies that succeed with data centers in the long run will be the companies that have a strong and healthy relationship with local communities. Microsoft’s plan starts by addressing the electricity issue, pledging to work with utilities and regulators to ensure its electricity costs aren’t passed on to residential customers. Smith cited a new “Very Large Customers” rate structure in Wisconsin as a model, where data centers pay the full cost of the power they use, including grid upgrades required to support them.

And that’s not all. The pledge includes this ethically charged corporate commitment. I quote:

- “A 40% improvement in water efficiency by 2030, plus a pledge to replenish more water than it uses in each district where it operates. (Microsoft cited a recent $25 million investment in water and sewer upgrades in Leesburg, Va., as an example.)

- A new partnership with North America’s Building Trades Unions for apprenticeship programs, and expansion of its Datacenter Academy for operations training.

- Full payment of local property taxes, with no requests for municipal tax breaks.

- AI training through schools, libraries, and chambers of commerce, plus new Community Advisory Boards at major data center sites.”

I hear the background music. I think it is the double fugue or Kyrie in Mozart’s Requiem, but I may be wrong. Yes, I am. That is the sound track for the group reworking the numbers for Microsoft’s “beat Google” data center spend.

You can listen to Mozart’s Requiem on YouTube. My hunch is that the pledge is likely to be part of the Microsoft legacy. As a dinobaby, I would suggest that Microsoft’s legacy is blaming users for Microsoft’s security issues and relying on PR when it miscalculates [a] how people react to the excellent company’s moves and [b] its budget estimates for Copilot, the aircraft, the staff, the infrastructure, and the odds and ends.

Net net: Microsoft’s PR better be better than its AI budgeting.

Stephen E Arnold, January 20, 2026

Grok Is Spicy and It Did Not Get the Apple Deal

January 16, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

First, Gary Marcus makes clear that AI is not delivering the goods. Then a fellow named Tom Renner explains that LLMs are just a modern variation of a “confidence trick” that’s been in use for centuries. I then bumbled into a paywalled post from an outfit named Vox. The write up about AI is “Grok’s Nonconsensual Porn Problem is Part of a Long, Gross Legacy.”

Unlike Dr. Marcus and Mr. Renner, Vox focuses on a single AI outfit. Is this fair? Nah, but it does offer some observations that may apply to the entire band of “if we build it, they will come” wizards. Spoiler: I am not coming for AI. I will close with an observation about the desperation that is roiling some of the whiz kids.

First, however, what does Vox have to say about the “I am a genius. I want to spawn more like me. I want to colonize Mars” superman? I urge you to subscribe to Vox. I will highlight a couple of passages about the genius Elon Musk. (I promised I wouldn’t mention the Department of Government Efficiency project. DOGE DOGE DOGE. Yep, I lied, much like some outfits have. Thank goodness I am an 81 year old dinobaby in rural Kentucky. I can avoid AI, but DOGE DOGE DOGE, not a chance.)

Here’s the first statement I circled on my print out of the expensive Vox article:

Elon Musk claims tech needs a “spicy mode” to dominate. Is he right?

I can answer this question: No, only those who want to profit from salacious content want a spicy mode. People who deal in spicy modes made VHS tapes a thing, much to the chagrin of Sony. People who create spicy mode content helped sell some virtual reality glasses. I sure didn’t buy any. Spicy at age 81 is walking from room to room in my two-room log cabin in the Kentucky hollow in which I live.

Here’s the second passage in the write up I check marked:

Musk has remained committed to the idea that Grok would be the sexiest AI model. On X, Musk has defended the choice on business grounds, citing the famous tale of how VHS beat Betamax in the 1980s after the porn industry put its weight behind VHS, with its larger storage capacity. “VHS won in the end,” Musk posted, “in part because they allowed spicy mode.”

Does this mean that Elon analyzed the p_rn industry when he was younger? For business reasons only I presume. I wonder if he realizes that Grok and perhaps the Tesla businesses may be adversely affected by the spicy stuff. No, I won’t. I won’t. Yes, I will. DOGE DOGE DOGE

Here’s the final snip:

A more accurate phrasing, however, might be to say that in our misogynistic society, objectifying and humiliating the bodies of unconsenting women is so valuable that the fate of world-altering technologies depends on how good they are at facilitating it. AI was always going to be used for this, one way or the other. But only someone as brutally uncaring and willing to cut corners as Elon Musk would allow it to go this wrong.

Snappy.

But the estimable Elon Musk has another thorn in the driver’s seat of his Tesla. Apple, a company once rumored to be thinking about buying the car company, signed another deal with Google. The gentle and sometimes smarmy owner of Android, online advertising, and surveillance technology is going to provide AI to the wonderful, wonderful Apple.

I think Mr. Musk’s Grok is a harbinger of a spring time blossoming of woe for much of the AI sector. There are data center pushbacks. There are the Chinese models available for now as open source. There are regulators in the European Union who want to hear the ka-ching of cash registers after another fine is paid by an American AI outfit.

I think the spicy angle just helps push Mr. Musk and Grok to the head of the line for AI pushback. I hope not. I wonder if Mr. Musk will resume talks with Pavel Durov about providing Grok as an AI engine for Nikolai Durov’s new approach to smart software. I await spring.

Stephen E Arnold, January 16, 2026

Apple and Google: Lots of Nots, Nos, and Talk

January 15, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

This is the dinobaby, an 81 year old dinobaby. In my 60 plus year work career I have been around, in, and through what I call “not and no” PR. The basic idea is that one floods the zone with statements about what an organization will not do. Examples range from “our Wi-Fi sniffers will not log home access point data” to “our AI service will not capture personal details” to “our security policies will not hamper usability of our devices.” I could go on, but each of these statements was uttered in meetings, in conference “hallway” conversations, or in public podcasts.

Thanks, Venice.ai. Good enough. See, I am prevaricating. This image sucks. The logos are weird. GW looks like a wax figure.

I want to tell you that even if the nots and nos identified in the flood of write ups about the Apple Google AI tie up seem as immutable as Milton’s description of his God, they are essentially pre-emptive PR. Both firms are data collection systems. The nature of the online world is that data are logged, metadata captured and mindlessly processed for a statistical signal, and content processed simply because “why not?”

Here’s a representative write up about the Apple Google nots and nos: “Report: Apple to Fine-Tune Gemini Independently, No Google Branding on Siri, More.” So what’s the more that these estimable firms will not do? Here’s an example:

Although the final experience may change from the current implementation, this partly echoes a Bloomberg report from late last year, in which Mark Gurman said: “I don’t expect either company to ever discuss this partnership publicly, and you shouldn’t expect this to mean Siri will be flooded with Google services or Gemini features already found on Android devices. It just means Siri will be powered by a model that can actually provide the AI features that users expect — all with an Apple user interface.”

How about this write up: “Official: Apple Intelligence & Siri To Be Powered By Google Gemini.”

Source details how Apple’s Gemini deal works: new Siri features launching in spring and at WWDC, Apple can finetune Gemini, no Google branding, and more

Let’s think about what a person who thinks the way my team does would do. Here is what we could do with these nots and nos:

- Just log everything and don’t talk about the data

- Develop specialized features that provide new information about use of the AI service

- Monitor the actions of our partners so we can be prepared or just pounce on good ideas captured with our “phone home” code

- Skew the functionality so that our partners become more dependent on our products and services; for example, exclusive features only for their users.

The possibilities are endless. Depending upon the incentives and controls put in place for this tie up, the employees of Apple and Google may do what’s needed to hit their goals. One can do PR about what won’t happen, but the reality of certain big technology companies is that these outfits defy normal ethical boundaries, view themselves as the equivalent of nation states, and have a track record of insisting that bending mobile devices do not bend and that information of a personal nature is not cross correlated.

Watch the pre-emptive PR moves by Apple and Google. These outfits care about their worlds, not those of the user.

Just keep in mind that I am an old, very old, dinobaby. I have some experience in these matters.

Stephen E Arnold, January 15, 2026

Apple Google Prediction: Get Real, Please

January 13, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


Prediction is a risky business. I read “No, Google Gemini Will Not Be Taking Over Your iPhone, Apple Intelligence, or Siri.” The write up asserts:

Apple is licensing a Google Gemini model to help make Apple Foundation Models better. The deal isn’t a one-for-one swap of Apple Foundation Models for Gemini ones, but instead a system that will let Apple keep using its proprietary models while providing zero data to Google.

Yes, the check is in the mail. I will jump on that right now. Let’s have lunch.

Two giant creatures find joy in their deepening respect and love for one another. Will these besties step on the ants and grass under their paws? Will they leave high-value information on the shelf? What a beautiful relationship! Will these two get married? Thanks, Venice.ai. Good enough.

Each of these breezy statements sparks a chuckle in those who have heard such direct statements before and know that follow-through is unlikely.

The article says:

Gemini is not being weaved into Apple’s operating systems. Instead, everything will remain Apple Foundation Models, but Gemini will be the "foundation" of that.

Yep, absolutely. The write up presents this interesting assertion:

To reiterate: everything the end user interacts with will be Apple technology, hosted on Apple-controlled server hardware, or on-device and not seen by Apple or anybody else at all. Period.

Plus, Apple is a leader in smart software. Here’s the article’s presentation of this interesting idea:

Apple has been a dominant force in artificial intelligence development, regardless of what the headlines and doom mongers might say. While Apple didn’t rush out a chatbot or claim its technology could cause an apocalypse, its work in the space has been clearly industry leading. The biggest problem so far is that the only consumer-facing AI features from Apple have been lackluster and got a tepid consumer response. Everything else, the research, the underlying technology, the hardware itself, is industry leading.

Okay. Several observations:

- Apple and Google have achieved significant market share. A basic rule of online is that efficiency drives the logic of consolidation. From my point of view, we now have two big outfits, their markets, their products, and their software getting up close and personal.

- Apple and Google may not want to hook up, but the financial upside is irresistible. Money is important.

- Apple, like Telegram, is taking time to figure out how to play the AI game. The approach is positioned as a smart management move. Why not figure out how to keep those users within the friendly confines of two great companies? The connection means that other companies just have to be more innovative.

Net net: When information flows through online systems, metadata about those actions presents an opportunity to learn more about what users and customers want. That’s the rationale for leveraging the information flows. Words may not matter. Money, data, and control do.

Stephen E Arnold, January 13, 2026