Meta 2026: Grousing Baby Dinobabies and Paddling Furiously

January 7, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I am an 81-year-old dinobaby. I get a kick out of a baby dinobaby complaining about young wizards. Imagine how that looks to me. A pretend dinobaby with many years to rock and roll complaining about how those “young people” are behaving. What a hoot!

A dinobaby explains to a young, brilliant entrepreneur, “You are clueless.” My response: Yeah, but who has a job? Thanks, Qwen. Good enough.

Why am I thinking about age classification of dinobabies? I read “Former Meta Scientist Says Mark Zuckerberg’s New AI Chief Is ‘Young’ And ‘Inexperienced’—Warns ‘Lot Of People’ Who Haven’t Yet Left Meta ‘Will Leave’.” Now this is a weird newsy story or a story presented as a newsy release by an outfit called Benzinga. I don’t know much about Benzinga. I will assume it is a version of the estimable Wall Street Journal or the more estimable New York Times. With that nod to excellence in mind, what is this write up about?

Answer: A staff change and what I call departure grief. People may hate their job. However, when booted from that job, a problem looms. No matter how important a person’s family, no matter how many technical accolades a person has accrued, and no matter the sense of self-worth, the newly RIFed, terminated, departed, or fired person feels bad.

Many Xooglers fume online after Mother Google kicks them out of the quiet pod, the beanbag, or the table tennis room and they lose their status as Googlers. These essays are amusing to me. I think that’s what this Benzinga “zinger” of a write up conveys.

Let’s take a quick look, shall we?

First, the write up reports that the French-born Yann LeCun is allegedly 65 years old. I noted this passage about Alexandr [sic] Wang, the top dog in Meta’s Superintelligence Labs (MSL) or “MISSILE,” I assume. That’s quite a metaphor. Missiles are directed or autonomous. Sometimes they work and sometimes they explode at wedding parties in some countries. Whoops. Now what does the baby dinobaby Mr. LeCun say about the 28-year-old sprout Alexandr [sic] Wang, founder of Scale AI? Keep in mind that the genius Mark Zuckerberg paid $14 billion for this company in the middle of 2025.

Alexandr Wang is intelligent and learns quickly, but does not yet grasp what attracts — or alienates — top researchers.

Okay, we have a baby dinobaby complaining about the younger generation. Nothing new here except that Mr. Wang is still employed by the genius Mark Zuckerberg. Mr. LeCun is not as far as I know.

Second, the article notes:

According to LeCun, internal confidence eroded after Meta was criticized for allegedly overstating benchmark results tied to Llama 4. He said the controversy angered Zuckerberg and led him to sideline much of Meta’s existing generative AI organization.

And why? According to the zinger write up:

LLMs [are] a dead end.

But was Mr. LeCun involved in these LLMs and was he tainted by the failure that appears to have prompted the genius Mark Zuckerberg to pay $14 billion for an indexing and content-centric company? I would assume that the answer is, “Yep, Mr. LeCun was in his role for about 13 years.” And the result of that was a “dead end.”

I would suggest that the former Facebook and Meta employee was not able to get the good ship Facebook and its support boats Instagram and WhatsApp on course despite 156 months of navigation, charting, and inputting.

Several observations:

- Real dinobabies and pretend dinobabies complain. No problem. Are the complaints valid? One must know about the mental wiring of said dinobaby. Young dinobabies may be less mature complainers.

- Geniuses with a lot of money can be problematic. Mr. LeCun may not appreciate the wisdom of this dinobaby’s statement … yet.

- The genius Mr. Zuckerberg is going to spend his way back into contention in the AI race.

Net net: Meta (Facebook) appears to have floundered with the virtual worlds thing and now is paddling furiously as the flood of AI solutions rushes past it. Can geniuses paddle harder or just buy bigger and more powerful boats? Yep, zinger.

Stephen E Arnold, January 7, 2026

ChatGPT Channels Telegram

January 7, 2026

Just what everyone needs: Telegram type apps on the Sam AI-Man platform. What will bad actors do? Obviously nothing. Apps will be useful, do good, and make the world a better place.

ChatGPT now connects to apps without leaving the AI interface. According to Mashable, “ChatGPT Launches Apps Beta: 8 Big Apps You Can Now Use In ChatGPT.” ChatGPT wants its users to easily access apps or take suggestions during conversations with the AI. The idea is that ChatGPT will be augmented by apps and extend conversations.

App developers will also be able to use ChatGPT to build chat-native experiences to bring context and action directly into conversations.

The new app integration is described as:

“While some commentators have referred to the new Apps beta as a ChatGPT app store, at this time, it’s more of an app directory. However, in the “Looking Ahead” section of its announcement post, OpenAI does note that this tool could eventually ‘expand the ways developers can reach users and monetize their work.’”

The apps that are integrated into ChatGPT are Zillow, Target, Expedia, Tripadvisor, Instacart, DoorDash, Apple Music, and Spotify. This sounds similar to what Telegram did. Does this mean OpenAI is on the road to Telegram-like services?

Just doing good. Bad actors will pay no attention.

Whitney Grace, January 7, 2026

Telegram Notes: Is ATON an Inside Joke?

January 2, 2026

![green dinobaby](https://www.arnoldit.com/wordpress/wp-content/uploads/2026/01/green-dino_thumb_thumb3_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

On New Year’s Day, I continued to sift through my handwritten notecards. (I am a dinobaby. No Obsidian for me!) I came across a couple of items that I thought were funny or at least “inside jokes” related to the beep beep Road Runner spin of a company involved (allegedly) in pharma research into a distributed AI mining company. Yeah, I am not sure what that means either. I did notice a link to an outfit with a confusing deal to manage the company named AlphaTON, captained by a former Cambridge Analytica professional, and featuring a semi-famous person in Moscow named Yuri Mitin of the Red Shark Ventures outfit.

Several financial wizards, eager to tap into US financial markets, toss around names for the NASDAQ’s official ticker symbol. Thanks, Venice.ai. Good enough.

What’s the joke? I am not sure you will laugh out loud, but I know of a couple of people who would slap their knee and chortle.

Joke 1: The ticker symbol for the beep beep outfit is ATON. That is the “name” of a famous or infamous Russian bank. Naming a company listed on the US NASDAQ as ATON is a bit like using the letters CIA or NSA on a company listed on the MOEX.

Joke 2: The name “Red Shark” suggests an intelligence operation; for example, there was an Israeli start up called “Sixgill.” That’s a shark reference too. Quite a knee slapper because the “old” Red Shark Ventures is now RSV Capital, and it has a semi luminary in Moscow’s financial world as its chief business development officer. Yuri Mitin. Do you have tears in your eyes yet?

Joke 3: AlphaTON’s SEC S-3/F-3 shelf registration is for this dollar amount: US$420.69 million. If you are into the cannabis world, “420” means either partaking of cannabis at 4:20 pm each afternoon or holding a protest on April 20th each year. Don’t feel bad. I did not get this either, but Perplexity was able to explain this in great detail. And the “69” is supposed to be a signal to crypto bros that this stock is going to be a roller coaster. I think “69” has another slang reference, but I don’t recall that. You may, however.

Joke 4: The name Alpha may be a favorite of the financial wizard Enzo Villani involved with AlphaTON, but one of my long time clients (a former CIA operations officer) told me that “A” was an easy way to refer to those entertaining people in the Russian Spetsgruppa “A,” the FSB’s elite counter-terrorism unit.

Whether these are making you laugh, I don’t know. I wonder if these references are a coincidence. Probably not. AlphaTON, supporting the Telegram TON Foundation’s AI initiative, is just a coincidence. I would hazard a guess that a fellow named Andrei Grachev understands the attempts at humor. The wet blanket is the stock price and the potential for some turbulence.

Financial wizards engaged in crypto centric activities do have a sense of humor — until they lose money. Those two empty chairs in the cartoon suggest people have left the meeting.

PS. The Telegram Notes information service is beginning to take shape. If you want more information about our research into Telegram, the TON Foundation, TON Strategy Company, and AlphaTON, just write kentmaxwell at proton dot me.

Stephen E Arnold, January 2, 2026

Telegram Notes: AI Slop Analyzes TON Strategy and Demonstrates Shortcomings

January 1, 2026

![green dinobaby](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb-2.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

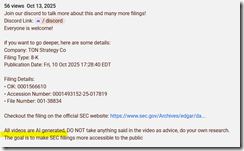

If you want to get a glimpse of AI financial analysis, navigate to “My Take on TON Strategy’s NASDAQ Listing Warning.” The video is ascribed to Corporate Decoder. But there is no “my” in the old-fashioned humanoid sense. Click on the “more” link in the YouTube video, and you will see this statement:

The highlight points to the phrase, “All videos are AI generated…”

The six-minute video does not point out some of the interesting facets of the Telegram / TON Foundation inspired “beep beep zoom” approach to getting a “rules observing listing” on the US NASDAQ. Yep, beep beep. A Road Runner inspired public listing with an alleged decade of history. I find this fascinating.

The video calls attention to superficial aspects of the beep beep Road Runner company spun up in a matter of weeks in late 2025. The company is TON Strategy captained by Manny Stotz, formerly the president or CEO or temporary top dog at the TON Foundation. That’s the outfit Pavel Durov thought would be a great way to convert GRAMcoin into TONcoin, gift the Foundation with the TON blockchain technology, and allow the Foundation to handle the marketing. (Telegram itself does no marketing except to leverage the image of Pavel Durov, the self-proclaimed GOAT of Russian technology culture.) In addition to being the GOAT, Mr. Durov is reporting to his nanny in the French judiciary as he awaits a criminal trial on nine or ten serious offenses. But who is counting?

What does the AI video do other than demonstrate that YouTube does not make its AI labels easy to spot?

The video does not provide any insight into some questions my team and I had about TON Strategy Company, its executive chairperson Manuel “Manny” Stotz, or the $400 million plus raised to get the outfit afloat. The video does not call attention to the presence of some big, legitimate brands in the world of crypto like Blockchain.com.

The video tries to explain that the firm is trying to become an asset outfit like Michael Saylor’s Strategy Company. But the key difference is not pointed out; that is, Mr. Saylor bet on Bitcoin. Mr. Stotz is all in on TONcoin. He believes in TONcoin. He wanted to move fast. He is going to have to work hard to overcome what might be some modest potholes as his crypto vehicle chugs along the Information Highway.

The first crack in the asphalt is the TONcoin itself. Mr. Stotz “bought” TONcoins at about a value point of $5.00. That was several weeks ago. Those same TONcoins can be had for $1.62 at about noon on December 31, 2025. Source, you ask? Okay, here’s the service I consulted: https://www.tradingview.com/symbols/TONUSD/

The second little dent in the road is the price of the TON Strategy Company’s NASDAQ stock. At about noon on December 31, 2025, it was going for $2.00 a share. What did the TONX stock cost in September 2025? According to Google Finance it was in the $21.00 range. Is this a problem? Probably not for Mr. Stotz because his Kingsway Capital is separate from TON Strategy Company. Plus, Kingsway Capital is separate from its “owner” Koenigsweg Holdings. Will someone care if TONX gets delisted? Yep, but I am not prepared to talk about the outfits who have an interest in Koenigsweg and Kingsway. My hunch is that a couple of these outfits may want to take a ride with Manny to talk, to share ideas, and to make sure everyone is on the same page. In Russian, Google Translate says this sequence of words might pop up during the ride: ?? ?????????, ??????
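For readers who want the size of those two dents as percentages, here is a minimal back-of-the-envelope sketch in Python. It assumes only the approximate prices quoted above (TONcoin at about $5.00 when bought versus $1.62 on December 31, 2025; TONX at about $21.00 in September 2025 versus $2.00 on December 31, 2025). The helper name `percent_decline` is hypothetical, and the numbers are not independently verified.

```python
def percent_decline(start: float, end: float) -> float:
    """Return the drop from start to end as a percentage of start."""
    if start <= 0:
        raise ValueError("start price must be positive")
    return (start - end) / start * 100


# Approximate prices quoted in the post (assumptions, not verified quotes).
prices = {
    "TONcoin": (5.00, 1.62),  # purchase price vs. about noon, Dec 31, 2025
    "TONX": (21.00, 2.00),    # September 2025 vs. about noon, Dec 31, 2025
}

for name, (start, end) in prices.items():
    print(f"{name}: down about {percent_decline(start, end):.1f}%")
```

Run as is, it prints a drop of about 67.6 percent for TONcoin and about 90.5 percent for TONX. A single percentage per asset is a blunt instrument; it says nothing about the path the prices took in between.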

What are some questions the AI system did not address? Here are a few:

- How does the current market value of TONcoin affect the information in the SEC document the AI analyzed?

- Where do these companies fit into the TON Strategy outfit? Why the beep beep approach when two established outfits like Kingsway Capital and Koenigsweg Holdings could have handled the deal?

- What connections exist between the TON Foundation and Mr. Stotz? What connection does the deal have to the TON Foundation’s ardent supporter, DWF Labs (a crypto market maker of repute)?

- Who is president of the TON Strategy Company? What is the address of the company for the SEC document? Where does Veronika Kapustina reside in the United States? Why do Rory Cutaia, Veronika Kapustina, and TON Strategy Company share a residential address in Las Vegas?

- What role does Sarah Olsen, a former Rockefeller financial analyst, play in the company from her home, possibly in Miami, Florida?

- What is the Plan B if the VERB-to-TON Strategy Company continues to suffer from the down market in crypto? What will outfits like DWF Labs do? What will the TON Foundation do? What will Pavel Durov, the GOAT, do in addition to wait for the big, sluggish wheels of the French judicial system to grind forward?

The AI did not probe the way an over-achieving MBA at an investment firm would to keep her job. Nope. Hit the pause switch and use whatever the AI system generates. Good enough, right?

What does this AI generated video reveal about smart software playing the role of a human analyst? Our view is:

- Quick and sloppy content

- Failure to chase obvious managerial and financial loose ends

- Ignoring obvious questions about how a “sophisticated” pivot can garner two notes from Mother SEC in a couple of weeks.

Net net: AI is not ready for some types of intellectual work.

Want more Telegram Notes content? Watch for more information about our Telegram-related information service in the new year.

Stephen E Arnold, January 1, 2026

Salesforce: Winning or Losing?

December 29, 2025

![green dinobaby](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/12/green-dino_thumb_thumb3_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

One of the people on my team told me at our holiday staff lunch (I had a coupon, thank you) that Salesforce signed up thousands of new customers because of AI. Lunch included one holiday beverage, so I dismissed this statement as one of those wild and crazy end-of-year statements from a person who had just consumed one burrito deluxe and a chicken fajita. Let’s assume the number is correct.

That means whatever the leadership of Salesforce is doing, that team is winning. AI may be one reason for the sales success if true. On the other hand, I had the small chicken burrito and an iced tea. I said, “Maybe Microsoft is screwing up with AI so much, commercial outfits are turning to Salesforce?”

The answer for many in Silicon Valley is, “Go in.” Forget the Chinese focus on practical applications of smart software. Go in, win big. Thanks, Venice.ai. Good enough.

Of course, no one agreed. The conversation turned to the hopelessness of catching flights home and the lack of people in my favorite coupon issuing Mexican themed restaurant in rural Kentucky. I stopped thinking about Salesforce until…

I read “Salesforce Regrets Firing 4000 Experienced Staff and Replacing Them with AI.” The write up is interesting. If the statements in the document are accurate, AI may become a bit of a problem for some outfits in 2026. Be sure to read the original essay by an entity identified as Maarthandam. I am only going to highlight a couple of sentences.

The first passage I noted is:

You cannot put a bot where a human is supposed to be. “We assumed the technology was further along than it actually was.”

Well, that makes sense. High technology folks read science fiction instead of Freddy the Pig books when in middle school. The efforts to build smart software, data centers in space, ray guns, etc. etc. are works in progress. Plus the old admonition of high school teachers, “Never assume, or you will make an ‘ass’ of ‘u’ and ‘me,’” seems to have been ignored. Yeah. Wizards.

The second passage of interest to me was:

Executives now concede that removing large numbers of trained support staff created gaps that AI could not immediately fill, forcing teams to reassign remaining employees and increase human oversight of automated systems.

The main idea for me is that humans have to do extra work to keep AI from creating more problems. These are problems unique to a situation. Therefore, something more than today’s AI systems is needed. The “something” is humans who can keep the smart software from wandering into the no man’s land of losing a customer or having a smart self driving car kill the Mission District’s most loved stray cat. Yeah. More humans, expensive ones at that.

The final passage I circled was:

Salesforce has now begun reframing its AI strategy, shifting away from the language of replacement toward what executives call “rebalancing.”

This passage means to this dinobaby, “We screwed up, have paid a consultant to craft some smarmy words, and are trying to contain the damage.” I may be wrong, but the Salesforce situation seems to be a mess. Yeah, leadership Silicon Valley style.

Net net: Is that a blimp labeled “Enron” circling the Salesforce offices?

Stephen E Arnold, December 29, 2025

Telegram Notes: Manny, Snoop, and Millions in Minutes

December 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In the mass of information my team and I gathered for my new study “The Telegram Labyrinth,” we saw several references to what may be an interesting intersection of Manuel (Manny) Stotz, a hookah company in the Middle East, Snoop Dogg (the musical luminary), and Telegram.

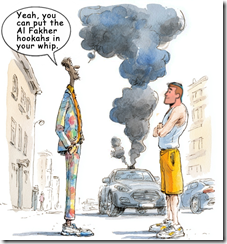

At some point in Mr. Stotz’ financial career, he acquired an interest in a company doing business as Advanced Inhalation Rituals or AIR. This firm owned or had an interest in a hookah manufacturer doing business as Al Fakher. By chance, Mr. Stotz interacted with Mr. Snoop Dogg. As the two professionals discussed modern business, Mr. Stotz suggested that Mr. Snoop Dogg check out Telegram.

Thanks, Venice.ai. I needed smoke coming out of the passenger side window, but smoke exiting through the roof is about right for smart software.

Telegram allowed Messenger users to create non-fungible tokens. Mr. Snoop Dogg thought this was a very interesting idea. In July 2025, Mr. Snoop Dogg partnered with Telegram on a digital collectibles drop.

I found the anecdotal Manny Stotz information in social media and crypto centric online services suggestive but not particularly convincing and rarely verifiable.

One assertion did catch my attention. The Snoop Dogg NFT allegedly generated US$12 million in 30 minutes. Is the number in “Snoop Dogg Rakes in $12M in 30 Minutes with Telegram NFT Drop” on the money? I have zero clue. I don’t even know if the release of the NFT or drop took place. Let’s go to the write up:

Snoop Dogg is back in the web3 spotlight, this time partnering with Telegram to launch the messaging app’s first celebrity digital collectibles drop. According to Telegram CEO Pavel Durov, the launch generated $12 million in sales, with nearly 1 million items sold out in just 30 minutes. While the items aren’t minted yet, users purchased the collectibles internally on Telegram, with minting on The Open Network (TON) scheduled to go live later this month [July 2025].

Is this important? It depends on one’s point of view. As an 81-year-old dinobaby, I find the comments online about this alleged NFT for a popular musician not too surprising. I have several other dinobaby observations to offer, of course:

- Mr. Stotz allegedly owns shares in a company (possibly 50 percent or more of the outfit) that does business in the UAE and other countries where hookahs are popular. That’s AIR.

- Mr. Stotz worked for a short time as a senior manager at the TON Foundation. That’s an organization allegedly 100 percent separate from Telegram. That’s the totally independent, Swiss registered TON Foundation, not to be confused with the other TON Foundation in Abu Dhabi. (I wonder why there are two Telegram-linked foundations. Maybe someone will look into that? Perhaps these are legal conventions or something akin to Trojan horses? This dinobaby does not know.)

- By happenstance, Mr. Snoop Dogg learned about Telegram NFTs at the same time Mr. Stotz was immersed in activities related to the Foundation and its new NASDAQ-listed property TON Strategy Company. The NFT allegedly spun up and then moved forward.

- Does a regulatory entity monitor and levy tax on the sale of NFTs within Telegram? I mean Mr. Snoop Dogg resides in America. Mr. Stotz resides allegedly in London. The TON Foundation which “runs” the TON blockchain is in United Arab Emirates, and Mr. Pavel Durov is an AirBnB type of entrepreneur — this question of paying taxes is probably above my pay grade which is US$0.00.

One simple question I have is, “Does Mr. Snoop Dogg have an Al Fakher hookah?”

This is an example of one semi-interesting activity involving Mr. Stotz, his companies (Koenigsweg Holdings Ltd and its limited liability unit Kingsway Capital), and the Telegram / TON Foundation. These interactions cross borders, business types, and cultural boundaries. Crypto seems to be a magnetic agent.

As Mr. Snoop Dogg sang in 1994:

“With so much drama in the LBC, it’s kinda hard being Snoop D-O-double-G.” (“Gin and Juice,” 1994)

For those familiar with NFT but not LBC, the “LBC” refers to Long Beach, California. There is much mystery surrounding many words and actions in Telegram-related activities.

PS. My team and I are starting an information service called “Telegram Notes.” We have a URL. Some of the items will be posted to LinkedIn and to the cyber crime groups which allowed me to join. We are not sure what other outlets will accept these Telegram-related essays. It’s kinda hard being a double DINO-B-A-BEEE.

Stephen E Arnold, December 24, 2025

How to Get a Job in the Age of AI?

December 23, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Two interesting employment related articles appeared in my newsfeeds this morning. Let’s take a quick look at each. I will try to add some humor to these write ups. Some may find them downright gloomy.

The first is “An OpenAI Exec Identifies 3 Jobs on the Cusp of Being Automated.” I want to point out that the OpenAI wizard’s own job seems to be secure from his point of view. The write up points out:

Olivier Godement, the head of product for business products at the ChatGPT maker, shared why he thinks a trio of jobs — in life sciences, customer service, and computer engineering — is on the cusp of automation.

Let’s think about each of these broad categories. I am not sure what life sciences means in OpenAI world. The term is like a giant umbrella. Customer service makes some sense. For years, companies have tried to ignore, terminate, and prevent any money sucking operation related to answering customers’ questions and complaints. No matter how lousy an AI model is, my hunch is that it will be slapped into a customer service role even if it is arguably worse than trying to understand the accent of a person who speaks English as a second or third language.

Young members of “leadership” realize that the AI system used to replace lower-level workers has taken their jobs. Selling crafts on Etsy.com is a career option. Plus, there is politics and maybe Epstein, Epstein, Epstein related careers for some. Thanks, Qwen, you just output a good enough image but you are free at this time (December 13, 2025).

Now we come to computer engineering. I assume the OpenAI person will position himself as an AI adept, which fits under the umbrella of computer engineering. My hunch is that the reference is to coders who do grunt work. The only problem is that the large language model approach to pumping out software can be problematic in some situations. That’s why the OpenAI person is probably not worrying about his job. An informed human has to be involved in the process of producing machine-generated code. LLMs do make errors. If the software is autogenerated for one of those newfangled portable nuclear reactors designed to power football field sized data centers, someone will want to have a human check that software. Traditional or next generation nuclear reactors can create some excitement if the software makes errors. Do you want a thorium reactor next to your domicile? What about one run entirely by smart software?

What’s amusing about this write up is that the OpenAI person seems blissfully unaware of the precarious financial situation that Sam AI-Man has created. When and if OpenAI experiences a financial hiccup, will those involved in business products keep their jobs? Olivier might want to consider that eventuality. Some investors are thinking about their options for Sam AI-Man related activities.

The second write up is the type I absolutely get a visceral thrill writing. A person with a connection (probably accidental or tenuous) lets me trot out my favorite trope, “Epstein, Epstein, Epstein,” as a way to capture the peculiarity of modern America. This article is “Bill Gates Predicts That Only Three Jobs Will Be Safe from Being Replaced by AI.” My immediate assumption upon spotting the article was that the type of work Epstein, Epstein, Epstein did would not be replaced by smart software. I think that impression is accurate, but, alas, the write up did not include Epstein, Epstein, Epstein work in its story.

What are the safe jobs? The write up identifies three:

- Biology. Remember OpenAI thinks life sciences are toast. Okay, which is correct?

- Energy expertise

- Work that requires creative and intuitive thinking. (Do you think that this category embraces Epstein, Epstein, Epstein work? I am not sure.)

The write up includes a statement from Bill Gates:

“You know, like baseball. We won’t want to watch computers play baseball,” he said. “So there’ll be some things that we reserve for ourselves, but in terms of making things and moving things, and growing food, over time, those will be basically solved problems.”

Several observations:

- AI will cause many people to lose their jobs

- Young people will have to make knick knacks to sell on Etsy or find equally creative ways of supporting themselves

- The assumption that people will have “regular” jobs, buy houses, go on vacations, and do the other stuff organization man type thinking assumed was operative, is a goner.

Where’s the humor in this? Epstein, Epstein, Epstein and OpenAI debt, OpenAI debt, and OpenAI debt. Ho ho ho.

Stephen E Arnold, December 23, 2025

How Do You Get Numbers for Copilot? Microsoft Has a Good Idea

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In the past couple of days, I tested some of the latest and greatest from the big tech outfits destined to control information flow. I uploaded text to Gemini, asked it a question answered in the text, and it spit out the incorrect answer. Score one for the Googlers. Then I selected an output from ChatGPT and asked it to determine who was really innovating in a very, very narrow online market space. ChatGPT did not disappoint. It just made up a non-existent person. Okay, Sam AI-Man, I think you and Microsoft need to do some engineering.

Could a TV maker charge users to uninstall a high value service like Copilot? Could Microsoft make the uninstall app available for a fee via its online software store? Could both the TV maker and Microsoft just ignore the howls of the demented few who don’t love Copilot? Yeah, I go with ignore. Thanks, Venice.ai. Good enough.

And what did Microsoft do with its Copilot online service? According to Engadget, “LG quietly added an unremovable Microsoft Copilot app to TVs.” The write up reports:

Several LG smart TV owners have taken to Reddit over the past few days to complain that they suddenly have a Copilot app on the device

But Microsoft has a seductive way about its dealings. Engadget points out:

[LG TV owners] cannot uninstall it.

Let’s think about this. Most smart TVs come with baloney applications that are highly valuable to the TV maker. These can be uninstalled if one takes the time. I don’t watch TV very much, so I just leave the set the way it was. I routinely ignore pleas to update the software. I listen, so I don’t care if weird reminders obscure the visuals.

The Engadget article states:

LG said during the 2025 CES season that it would have a Copilot-powered AI Search in its next wave of TV models, but putting in a permanent AI fixture is sure to leave a bad taste in many customers’ mouths, particularly since Copilot hasn’t been particularly popular among people using AI assistants.

Okay, Microsoft has a vision for itself. It wants to be the AI operating system just as Google and other companies desire. Microsoft has been a bit pushy. I suppose I would come up with ideas that build “numbers” and provide fodder for the Microsoft publicity machine. If I hypothesize myself in a meeting at Microsoft (where I have been but that was years ago), I would reason this way:

- We need numbers.

- Why not pay a TV outfit to install Copilot?

- Then either pay more or provide some inducements to our TV partner to make Copilot permanent; that is, the TV owner has no choice.

The pushback for this hypothetical suggestion would be:

- How much?

- How many for sure?

- How much consumer backlash?

I further hypothesize that I would say:

- We float some trial balloon numbers and go from there.

- We focus on high end models because those people are more likely to be willing to pay for additional Microsoft services.

- Who cares about consumer backlash? These are TVs and we are cloud and AI people.

Obviously my hypothetical suggestion or something similar to it took place at Microsoft. Then LG saw the light or more likely the check with some big numbers imprinted on it, and the deal was done.

The painful reality of consumer-facing services is that something like 95 percent of consumers do not change the defaults. Making an app impossible to uninstall will not even register as a problem for most consumers.

Therefore, the logic of the LG play is rock solid. Microsoft can add the LG TVs with Copilot to its confirmed Copilot user numbers. Win.

Microsoft is not in the TV business, so this is just advertising. Win.

Microsoft is not a consumer product company like a TV set company. Win.

As a result, the lack of an uninstall option makes sense. If a lawyer or some other important entity complains, making Copilot something a user can remove eliminates the problem.

Love those LGs. Next up: microwaves, freezers, smart lights, and possibly electric blankets. Numbers are important. Users are proof that Microsoft is on the right path.

But what about revenue from Copilot? No problem. Raise the cost of other services. Charging Outlook users per message seems like an idea worth pursuing. My hypothetical self would argue for this type of toll or taxi meter approach. A per pixel charge in Paint seems plausible as well.

The reality is that I believe LG will backtrack. Does it need the grief?

Stephen E Arnold, December 22, 2025

First, Virtual AI Compute and Now a Virtual Supercomputation Complex

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Do you remember the good old days at AT&T? No Judge Greene. No Baby Bells. Just Ma Bell. Devices were boxes or plastic gizmos. Western Electric paid people to throw handsets out of a multi story building to make sure the stuff was tough. That was the old Ma Bell. Today one has virtual switches, virtual exchanges, and virtual systems. Software has replaced quite a bit of the hardware.

A few days ago, Pavel Durov rolled out his Cocoon. This is a virtual AI complex or VAIC. Skip that build out of data centers. Telegram is using software to provide an AI compute service to anyone with a mobile device. I learned today (December 6, 2025) that Stephen Wolfram has rolled out “instant supercompute.”

When those business plans don’t work out, the buggy whip boys decide to rent out their factory and machines. Too bad about those new fangled horseless carriages. Will the AI data center business work out? Stephen Wolfram and Pavel Durov seem to think that excess capacity is a business opportunity. Thanks, Venice.ai. Good enough.

A Mathematica user wants to run a computation at scale. According to “Instant Supercompute: Launching Wolfram Compute Services”:

Well, today we’ve released an extremely streamlined way to do that. Just wrap the scaled up computation in RemoteBatchSubmit and off it’ll go to our new Wolfram Compute Services system. Then—in a minute, an hour, a day, or whatever—it’ll let you know it’s finished, and you can get its results. For decades I’ve often needed to do big, crunchy calculations (usually for science). With large volumes of data, millions of cases, rampant computational irreducibility, etc. I probably have more compute lying around my house than most people—these days about 200 cores worth. But many nights I’ll leave all of that compute running, all night—and I still want much more. Well, as of today, there’s an easy solution—for everyone: just seamlessly send your computation off to Wolfram Compute Services to be done, at basically any scale.

And the payoff to those using Mathematica for big jobs:

One of the great strengths of Wolfram Compute Services is that it makes it easy to use large-scale parallelism. You want to run your computation in parallel on hundreds of cores? Well, just use Wolfram Compute Services!

One major point in the announcement is:

Wolfram Compute Services is going to be very useful to many people. But actually it’s just part of a much larger constellation of capabilities aimed at broadening the ways Wolfram Language can be used…. An important direction is the forthcoming Wolfram HPCKit—for organizations with their own large-scale compute facilities to set up their own back ends to RemoteBatchSubmit, etc. RemoteBatchSubmit is built in a very general way, that allows different “batch computation providers” to be plugged in.

Does this suggest that Supercompute is walking down the same innovation path as Pavel and Nikolai Durov? I see some similarities, but there are important differences. Telegram’s reputation is enhanced by some features of considerable value to a certain demographic. Wolfram Compute Services is closely associated with heavy duty math. Pavel Durov awaits trial in France on more than a dozen charges of untoward online activities. Stephen Wolfram collects awards and gives enthusiastic, if often incomprehensible, talks on esoteric subjects.

But the technology path is similar in my opinion. Both of these organizations want to use available compute resources; they are not too keen on buying GPUs, building data centers, and spending time in meetings about real estate.

The cost of running a job on the Supercompute system depends on a number of factors. A user buys “credits” and pays for a job with those. No specific pricing details are available to me at this time: 0800 US Eastern on December 6, 2025.

Net net: Two very intelligent people — Stephen Wolfram and Pavel Durov — seem to think that the folks with giant data centers will want to earn some money. Messrs. Wolfram and Durov are resellers of excess computing capacity. Will Amazon, Google, Microsoft, et al be signing up if the AI demand does not meet the somewhat robust expectations of big AI tech companies?

Stephen E Arnold, December 19, 2025

Windows Strafed by Windows Fanboys: Incredible Flip

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

When the Windows folding phone came out, I remember hunting around for blog posts, podcasts, and videos about this interesting device. Following links, I bumbled onto the Windows Central Web site. The two fellows who seemed to be front and center had a podcast (a quite irregularly published podcast, I might add). I was amazed at the pro-folding-gizmo enthusiasm. One of the write ups was panting with excitement. I thought then and think now that figuring out how to fold a screen is a laboratory exercise, not something destined to be part of my mobile phone experience.

I forgot about Windows Central and the unflagging ability to find something wonderfully bigly about the Softies. Then I followed a link to this story: “Microsoft Has a Problem: Nobody Wants to Buy or Use Its Shoddy AI Products — As Google’s AI Growth Begins to Outpace Copilot Products.”

An athlete failed at his Dos Santos II exercise. The coach, a tough love type, offers the injured gymnast a path forward with Mistral AI. Thanks, Qwen, do you phone home?

The cited write up struck me as a technology aficionado pulling off what is called a Dos Santos II. (If you are not into gymnastics, this exercise “trick” involves starting backward with a half twist into a double front in the layout position.) Boom. Perfect 10. From folding phone to “shoddy AI products.”

If I were curious, I would dig into the reasons for this change in tune, instruments, and concert hall. My hunch is that a new manager replaced a person who was talking (informally, of course) to individuals who provided the information without identifying the source. Reuters, the trust outfit, does this on occasion as do other “real” journalists. I prefer to say, here are my observations or my hypotheses about Topic X. Others just do the “anonymous” and move forward in life.

Here are a couple of snips from the write up that I find notable. These are not quite at the “shoddy AI products” level, but I find them interesting.

Snippet 1:

If there’s one thing that typifies Microsoft under CEO Satya Nadella‘s tenure: it’s a general inability to connect with customers. Microsoft shut down its retail arm quietly over the past few years, closed up shop on mountains of consumer products, while drifting haphazardly from tech fad to tech fad.

I like the idea that Microsoft is not sure what it is doing. Furthermore, I don’t think Microsoft ever connected with its customers. Connections come from the Certified Partners, the media lap dogs fawning over Microsoft CEO antics, and brilliant statements about how many Russian programmers it takes to hack into a Windows product. (Hint: The answer is a couple, if the Telegram posts I have read are semi accurate.)

Snippet 2:

With OpenAI’s business model under constant scrutiny and racking up genuinely dangerous levels of debt, it’s become a cascading problem for Microsoft to have tied up layer upon layer of its business in what might end up being something of a lame duck.

My interpretation of this comment is that Microsoft hitched its wagon to one of AI’s Cybertrucks, and the buggy isn’t able to pull the Softie’s one-horse shay. The notion of a “lame duck” is that Microsoft cannot easily extricate itself from the money, the effort, the staff, and the weird “swallow your AI medicine, you fool” approach the estimable company has adopted for Copilot.

Snippet 3:

Microsoft’s “ship it now fix it later” attitude risks giving its AI products an Internet Explorer-like reputation for poor quality, sacrificing the future to more patient, thoughtful companies who spend a little more time polishing first. Microsoft’s strategy for AI seems to revolve around offering cheaper, lower quality products at lower costs (Microsoft Teams, hi), over more expensive higher-quality options its competitors are offering. Whether or not that strategy will work for artificial intelligence, which is exorbitantly expensive to run, remains to be seen.

A less civilized editor would have dropped in the industry buzzword “crapware.” But we are stuck with “ship it now fix it later” (or maybe fix it never). So far we have customer issues, the OpenAI technology as a lame duck, and now the lousy software criticism.

Okay, that’s enough.

The question is, “Why the Dos Santos II at this time?” I think citing the third party “Information” is a convenient technique in blog posts. Heck, Beyond Search uses this method almost exclusively, except I position what I do as an abstract with critical commentary.

Let me hypothesize (no anonymous “source” is helping me out):

- Someone at Windows Central was annoyed by a Softie with power and is responding to this perceived injustice.

- The people at Windows Central woke up one day and heard a little voice say, “Your cheerleading is out of step with how others view Microsoft.” The folks at Windows Central listened and, thus, the Dos Santos II.

- Windows Central did what the author says in the article; that is, used multiple AI services each day. The Windows Central professional realized that Copilot was not as helpful for writing “real” news as some of the other services.

Which of these is closer to the pin? I have no idea. Today (December 12, 2025) I used Qwen, Anthropic, ChatGPT, and Gemini. I want to tell you that these four services did not provide accurate output.

Windows Central gets a 9.0 for its flooring Microsoft exercise.

Stephen E Arnold, December 19, 2025