The Starlink Angle: Yacht, Contraband, and Global Satellite Connectivity

December 16, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

I have followed the ups and downs of Starlink satellite Internet connectivity in the Russian special operation. I have not paid much attention to more routine criminal use of the Starlink technology. Before I direct your attention to a write up about this Elon Musk enterprise, I want to mention that this use case for satellites caught my attention with Equatorial Communications’ innovations in 1979. Kudos to that outfit!

“Police Demands Starlink to Reveal Buyer of Device Found in $4.2 Billion Drug Bust” has a snappy subtitle:

Smugglers were caught with 13,227 pounds of meth

Hmmm. That works out to 6,000 kilograms or 6.6 short tons of meth worth an estimated $4 billion on the open market. And it is the government of India chasing the case. (Starlink is not yet licensed for operation in that country.)
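For those who like to check a dinobaby's arithmetic, here is a minimal sketch of the conversion. The only input from the write up is the 13,227 pound figure; the function and variable names are my own for illustration, and the conversion factors are the standard definitions of the avoirdupois pound and the US short ton.

```python
# Standard conversion factors (exact by definition).
KG_PER_POUND = 0.45359237        # one avoirdupois pound in kilograms
POUNDS_PER_SHORT_TON = 2000      # one US short ton in pounds


def pounds_to_kilograms(pounds: float) -> float:
    """Convert avoirdupois pounds to kilograms."""
    return pounds * KG_PER_POUND


def pounds_to_short_tons(pounds: float) -> float:
    """Convert avoirdupois pounds to US short tons."""
    return pounds / POUNDS_PER_SHORT_TON


# Figure quoted in the article's subtitle.
seizure_lb = 13_227

seizure_kg = pounds_to_kilograms(seizure_lb)
seizure_short_tons = pounds_to_short_tons(seizure_lb)

print(f"{seizure_lb:,} lb is about {seizure_kg:,.0f} kg")
print(f"{seizure_lb:,} lb is about {seizure_short_tons:.1f} short tons")
```

The rounded results line up with the 6,000 kilogram and 6.6 short ton figures above.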

The write up states:

officials have sent Starlink a police notice asking for details about the purchaser of one of its Starlink Mini internet devices that was found on the boat. It asks for the buyer’s name and payment method, registration details, and where the device was used during the smugglers’ time in international waters. The notice also asks for the mobile number and email registered to the Starlink account.

The write up points out:

Starlink has spent years trying to secure licenses to operate in India. It appeared to have been successful last month when the country’s telecom minister said Starlink was in the process of procuring clearances. The company has not yet secured these licenses, so it might be more willing than usual to help the authorities in this instance.

Starlink is interesting because it is a commercial enterprise operating in a freewheeling manner. Starlink’s response is not known as of December 12, 2024.

Stephen E Arnold, December 16, 2024

Amazon: Black FridAI for Smart Software Arrives

December 9, 2024

This write up was created by an actual 80-year-old dinobaby. If there is art, assume that smart software was involved. Just a tip.

Five years ago, give or take a year, my team and I were giving talks about Amazon. Our topics touched on Amazon’s blockchain patents, particularly some interesting cross blockchain filings, and Amazon’s idea for “off the shelf” smart software. At the time, we compared the blockchain patents to examining where data resided across different public ledgers. We also showed pictures of Lego blocks. The idea was that a customer of Amazon Web Services could select a data package, a model, and some other Amazon technologies and create amazing AWS-infused online confections.

Thanks, MidJourney. Good enough.

Well, as it turned out the ideas were interesting, but Amazon just did not have the crate engine stuffed in its digital flea market to make the ideas go fast. The fix has been Amazon’s injections of cash and leadership attention into Anthropic and a sweeping concept of partnering with other AI outfits. (Hopefully one of these ideas will make Amazon’s Alexa into more than a kitchen timer. Well, we’ll see.)

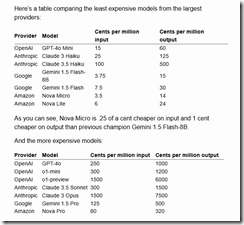

I read “First Impressions of the New Amazon Nova LLMs (Via a New LLM-Bedrock Plugin).” I am going to skip the Amazon jargon and focus on one key point in the rah rah write up:

This is a nicely presented pricing table. You can work through the numbers and figure out how much Amazon will “save” some AI-crazed customer. I want to point out that Amazon is bringing price cutting to the world of smart software. Every day will be a Black FridAI for smart software.

That’s right. Amazon is cutting prices for AI, and that is going to set the stage for a type of competitive joust most of the existing AI players were not expecting to confront. Sure, there are “free” open source models, but you have to run them somewhere. Amazon wants to be that “where”.

If Amazon pulls off this price cutting tactic, some customers will give the system a test drive. Amazon offers a wide range of ways to put one’s toes in the smart software swimming pool. There are training classes; there will be presentations at assorted Amazon events; and there will be a slick way to make Amazon’s smart software marketing make money. Not too many outfits can boost advertising prices and Prime membership fees as part of the smart software campaign.

If one looks at Amazon’s game plan over the last quarter century, the consequences are easy to spot: No real competition for digital books or for the semi-affluent demographic’s desire to have Amazon trucks arrive multiple times a day. There are essentially no quality or honesty controls on some of the “partners” in the Amazon ecosystem. And, I personally received a pair of large red women’s underpants instead of an AMD Ryzen CPU. I never got the CPU, but Amazon did not allow me to return the unused thong. Charming.

Now it is possible that this cluster of retail tactics will be coming to smart software. Am I correct, or am I just reading into the play book which has made Amazon a fave among so many vendors of so many darned products?

Worth watching because price matters.

Stephen E Arnold, December 9, 2024

Smart Software Is Coming for You. Yes, You!

December 9, 2024

This write up was created by an actual 80-year-old dinobaby. If there is art, assume that smart software was involved. Just a tip.

“Those smart software companies are not going to be able to create a bot to do what I do.” — A CPA who is awash with clients and money.

Now that is a practical, me-me-me idea. However, the estimable Organization for Economic Co-Operation and Development (OECD, a delightful acronym) has data suggesting a slightly different point of view: Robots will replace workers who believe themselves irreplaceable. (The same idea is often held by head coaches of sports teams losing games.)

Thanks, MidJourney. Good enough.

The report is titled in best organizational group think: Job Creation and Local Economic Development 2024; The Geography of Generative AI.

I noted this statement in the beefy document, presumably written by real, live humanoids and not a ChatGPT type system:

In fact, the finance and insurance industry is the tightest industry in the United States, with 2.5 times more vacancies per filled position than the regional average (1.6 times in the European Union).

I think this means that financial institutions will be eager to implement smart software to become “workers.” If that works, the confident CPA quoted at the beginning of this blog post is going to get a pink slip.

The OECD report believes that AI will have a broad impact. The most interesting assertion / finding in the report is that one-fifth of the tasks a worker handles can be handled by smart software. This figure is interesting because smart software hallucinates and is carrying the hopes and dreams of many venture outfits and forward leaning wizards on its digital shoulders.

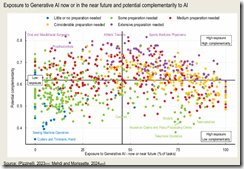

And what’s a bureaucratic report without an almost incomprehensible chart like this one from page 145 of the report?

Look closely and you will see that sewing machine operators are more likely to retain jobs than insurance clerks.

Like many government reports, the document focuses on the benefits of smart software. These include (cue the theme from Star Wars, please) more efficient operations, employees who do more work and theoretically less looking for side gigs, and creating ways for an organization to get work done without old-school humans.

Several observations:

- Let’s assume smart software is almost good enough, errors and all. The report makes it clear that it will be grabbed and used for a plethora of reasons. The main one is money. This is an economic development framework for the research.

- The future is difficult to predict. After scanning the document, I was thinking that a couple of college interns and an account to You.com would be able to generate a reasonable facsimile of this report.

- Agents can gather survey data. One hopes this use case takes hold in some quasi government entities. I won’t trot out my frequently stated concerns about “survey” centric reports.

Stephen E Arnold, December 9, 2024

Google and 2025: AI Scurrying and Lawsuits. Lots of Lawsuits

December 6, 2024

This is the work of a dinobaby. Smart software helps me with art, but the actual writing? Just me and my keyboard.

I think there are 193 nations which are members of the UN. Two more entities can be counted as observers but are what one might call specialty equipment organizations: The Holy See aka Vatican City and the State of Palestine. The 193 members are “recognized,” mostly pay their UN dues, and have legal systems of varying quality and diligence.

I read “Google Earns Fresh Competition Scrutiny from Two Nations on a Single Day.” The write up said:

In India – the most populous nation on Earth – the Competition Commission ordered [PDF] a probe after a developer called WinZo – which promotes itself with the chance to “Play Mobile Games & Win Cash” – complained that Google Play won’t host games that offer real money as prizes, only allowing sideloading onto Android devices.

Then it added:

Advertising is the reason for the other Google probe announced Thursday, by the Competition Bureau of Canada – the world’s second-largest country by area. The Bureau announced its investigations found Google’s ads biz “abused its dominant position through conduct intended to ensure that it would maintain and entrench its market power” and “engaged in conduct that reduces the competitiveness of rival ad tech tools and the likelihood of new entrants in the market.” The Bureau thinks the situation can be addressed if Google sells two of its ads tools – but the filing in which the identity of those two products will be revealed is yet to appear on the site of the Competition Tribunal.

Whether Google is good or evil is, in my opinion, irrelevant. With the US, the EU, Canada, and India chasing Google for its alleged misbehavior, other nations are going to pay attention.

Does that mean that another 100 or more nations will launch their own investigations and initiate legal action related to the lovable Google’s approach to business? In practical terms what does this mean?

- Google will be hiring lawyers and retaining firms. This is definitely good for legal eagles.

- Google will win some, delay some, and lose some cases. The losses, however, will result in consequences. Some of these will require Google to write checks for penalties. These can add up.

- Conflicting decisions are likely to result in delays. Those delays mean that Google will be more Googley. The number of ads in YouTube will increase. The mysterious revenue payments will become more quirky. Commissions on various user-customer-Google touch points will increase.

Net net: We have a good example of what a failure to regulate high technology companies for a couple of decades creates. Kicking the can down the road has done what exactly?

Stephen E Arnold, December 6, 2024

Googlers Face Another Ka-Ching Moment in the United Kingdom

December 5, 2024

This write up is from a real and still-alive dinobaby. If there is art, smart software has been involved. Dinobabies have many skills, but Gen Z art is not one of them.

Mr. Harold Carlin, my high school history teacher, made us learn about the phrase “The sun never sets on the British empire.” It has, and Mr. Carlin like many old-school teachers forced our class to read about protectionism, subjugation of people who did not enjoy beef Wellington, or assorted monopolies.

Two intelligent entities discuss how to resolve legal problems. Thanks, MidJourney. Good enough.

Now Google may want to think about the phrase, “The sun never sets on Google legal matters related to its alleged behavior in the datasphere.”

“Google Must Face £7B UK Class Action over Search Engine Dominance” reported:

The complaint centers around Google shutting out competition for mobile search, resulting in higher prices for advertisers, which were allegedly passed on to consumers. According to consumer rights campaigner Nikki Stopford, who is bringing the claim on behalf of UK consumers, Android device makers that wanted access to Google’s Play Store had to accept its search service. The ad slinger also paid Apple billions to have Google Search as the default for the Safari browser in iOS.

The write up noted:

According to Stopford [the consumer rights campaigner bringing the claim], Google used its position to up prices paid by advertisers, resulting in higher costs to consumers. “What we’re trying to achieve with this claim is essentially compensate consumers,” she said.

Google has moved some of its smart software activities to the UK. One would think that with Google’s cash resources, its attorneys, and its smart software — mere government officials would have zero chance of winning this now repetitive allegation that dear Google has behaved in an untoward way.

If I were a government litigator, I would just drop the suit, Jack Smith style.

Will the sun set on these allegations against the “do no evil” outfit?

Nope, not as long as the opportunity for a payout exists. Google may have been too successful in its decades long rampage through traditional business practices. The good news is that Google has an almost limitless supply of money. The bad news is that countries have an almost limitless supply of regulators. But Google has smart software. Remember the film “The Terminator”? Winner: Google.

Stephen E Arnold, December 5, 2024

China Seeks to Curb Algorithmic Influence and Manipulation

December 5, 2024

Someone is finally taking decisive action against unhealthy recommendation algorithms, AI-driven price optimization, and exploitative gig-work systems. That someone is China. “China Sets Deadline for Big Tech to Clear Algorithm Issues, Close ‘Echo Chambers’,” reports the South China Morning Post. Ah, the efficiency of a repressive regime. Writer Hayley Wong informs us:

“Tech operators in China have been given a deadline to rectify issues with recommendation algorithms, as authorities move to revise cybersecurity regulations in place since 2021. A three-month campaign to address ‘typical issues with algorithms’ on online platforms was launched on Sunday, according to a notice from the Communist Party’s commission for cyberspace affairs, the Ministry of Industry and Information Technology, and other relevant departments. The campaign, which will last until February 14, marks the latest effort to curb the influence of Big Tech companies in shaping online views and opinions through algorithms – the technology behind the recommendation functions of most apps and websites. System providers should avoid recommendation algorithms that create ‘echo chambers’ and induce addiction, allow manipulation of trending items, or exploit gig workers’ rights, the notice said.

They should also crack down on unfair pricing and discounts targeting different demographics, ensure ‘healthy content’ for elderly and children, and impose a robust ‘algorithm review mechanism and data security management system’.”

Tech firms operating within China are also ordered to conduct internal investigations and improve algorithms’ security capabilities by the end of the year. What happens if firms fail? Reeducation? A visit to the death van? Or an opportunity to herd sheep in a really nice area near Xian? The brief write-up does not specify.

We think there may be a footnote to the new policy; for instance, “Use algos to advance our policies.”

Cynthia Murrell, December 5, 2024

Legacy Code: Avoid, Fix, or Flee (Two Out of Three Mean Forget It)

December 4, 2024

In his Substack post, “Legacy Schmegacy,” software engineer David Reis offers some pointers on preventing and coping with legacy code. We found this snippet interesting:

“Someone must fix the legacy code, but it doesn’t have to be you. It’s far more honorable to switch projects or companies than to lead a misguided rewrite.”

That’s the spirit: quit and let someone else deal with it. But not everyone is in the position to cut and run. For those actually interested in addressing the problem, Reis has some suggestions. First, though, the post lists factors that can prevent legacy code in the first place:

- “The longer a programmer’s tenure the less code will become legacy, since authors will be around to appreciate and maintain it.

- The more code is well architected, clear and documented the less it will become legacy, since there is a higher chance the author can transfer it to a new owner successfully.

- The more the company uses pair programming, code reviews, and other knowledge transfer techniques, the less code will become legacy, as people other than the author will have knowledge about it.

- The more the company grows junior engineers the less code will become legacy, since the best way to grow juniors is to hand them ownership of components.

- The more a company uses simple standard technologies, the less likely code will become legacy, since knowledge about them will be widespread in the organization. Ironically if you define innovation as adopting new technologies, the more a team innovates the more legacy it will have. Every time it adopts a new technology, either it won’t work, and the attempt will become legacy, or it will succeed, and the old systems will.”

Reis’s number one suggestion to avoid creating legacy code is, “don’t write crappy code.” Noted. Also, stick with tried and true methods unless a shiny new tech is definitely the best option. Perhaps most importantly, coders should teach others in the organization how their code works and leave behind good documentation. So, common sense and best practices. Brilliant!

When confronted with a predecessor’s code, he advises one to “delegacify” it. That is a word he coined to mean: Take time to understand the code and see if it can be improved over time before tossing it out entirely. Or, as noted above, just run away. That can be an option for some.

Cynthia Murrell, December 4, 2024

FOGINT: Telegram and Its Race Against Time

December 3, 2024

The article is a product of the humans working on the FOGINT team. The image is from Gifr.com.

The Financial Times recently stirred debate in the cryptocurrency community with its article, “Telegram Finances Propped Up by Crypto Gains As Founder Fights Charges.” Telegram’s ambitions for an initial public offering (IPO) hinge on proving it has a sustainable, profitable business model.

According to the FT, Telegram sold off cryptocurrency holdings to shore up its balance sheet, reporting revenue of $525 million. The financials, based on unaudited statements, framed the crypto sale as a “tactical” move, with Durov’s confinement in France having no material impact on the company.

CCN added flair with its piece, “Pavel Durov’s Telegram Nets $335M Windfall: Can It Ride a Crypto Bull to a $30B IPO by 2026,” highlighting that while crypto revenues and TON reserves have helped Telegram stay afloat, the firm still faces substantial debt and operating losses—$259 million in 2023 alone.

Crypto.news zeroed in on debt in its article, “How Telegram Made Over Half a Billion Dollars Thanks to Crypto?” Telegram, wholly owned by Durov, has raised $2.4 billion in debt financing, with repayment looming in 2026. In September, it used part of its crypto proceeds to repurchase $124.5 million in bonds. (Note: None of the news sources we reviewed noted that Telegram is using a variant of the MicroStrategy strategy of acquiring crypto currency to pump up the company’s “value.” See DLNews for more detail.)

The FOGINT research team identifies three key dynamics:

- Cash Flow Through Crypto Sales: Telegram’s crypto transactions inject much-needed liquidity, transforming a significant 2023 loss into a manageable red blot on its financial history.

- Tight Links to The Open Network Foundation (TON): Despite TON’s ostensible independence, the Foundation is deeply intertwined with Telegram. This relationship traces back to the U.S. SEC’s 2019 intervention against GRAM (now TON), which forced Telegram to offload its blockchain to the “independent” Foundation in 2023. Regulators in the U.S., UAE, and Switzerland appear to tolerate this arrangement for now.

- Racing Against the Clock: Telegram is fast-tracking innovations, like the BotFather’s high-speed processing and partnerships with firms such as Ku Group. Its developer meetups and funding programs are designed to rapidly build out its ecosystem. The urgency stems from a softening stance toward law enforcement—Telegram now appears more willing to share user data, potentially feeding into global investigative pipelines.

This newfound openness aligns with Telegram’s aggressive push to monetize its TON blockchain. At the November 1-2 Gateway Conference in Dubai, Telegram launched an all-out campaign to promote its crypto ecosystem. From YouTube videos and meetups to venture fund pitches, the effort signals a company operating in overdrive.

Blockchain researcher Sean Brizendine said: “The Telegram hype is definitely real, and the Durov brothers’ future is on the line. Now is the time to pay attention as Telegram’s moves are breaking fast and furious.”

Why the rush? Telegram’s 900 million users and its wildly popular crypto games have been critical growth engines. But with its ethos of “do what you want” giving way to “use our crypto platform,” the stakes are higher than ever. The “leader” is in the grasp of French authorities. Fast-fashion-like life cycles of its crypto ventures underline a harsh reality: For Telegram, succeeding in crypto is no longer optional—it’s a turning point for the company and its affiliated organizations. Cornered animals can be more dangerous than some people think.

Stephen E Arnold, December 3, 2024

Directories Have Value

November 29, 2024

Why would one build an online directory—to create a helpful reference? Or for self aggrandizement? Maybe both. HackerNoon shares a post by developer Alexander Isora, “Here’s Why Owning a Directory = Owning a Free Infinite Marketing Channel.”

First, he explains why users are drawn to a quality directory on a particular topic: because humans are better than Google’s algorithm at determining relevant content. No argument here. He uses his own directory of Stripe alternatives as an example:

“Why my directory is better than any of the top pages from Google? Because in the SERP [Search Engine Results Page], you will only see articles written by SEO experts. They have no idea about billing systems. They never managed a SaaS. Their set of links is 15 random items from Crunchbase or Product Hunt. Their article has near 0 value for the reader because the only purpose of the article is to bring traffic to the company’s blog. What about mine? I tried a bunch of Stripe alternatives myself. Not just signed up, but earned thousands of real cash through them. I also read 100s of tweets about the experiences of others. I’m an expert now. I can even recognize good ones without trying them. The set of items I published is WAY better than any of the SEO-optimized articles you will ever find on Google. That is the value of a directory.”

Okay, so that is why others would want a subject-matter expert to create a directory. But what is in it for the creator? Why, traffic, of course! A good directory draws eyeballs to one’s own products and services, the post asserts, or one can sell ads for a passive income. One could even sell a directory (to whom?) or turn it into its own SaaS if it is truly popular.

Perhaps ironically, Isora’s next step is to optimize his directories for search engines. Sounds like a plan.

Cynthia Murrell, November 29, 2024

Apple: Another Problem Becoming Evident

November 25, 2024

Apple is a beast in Big Tech with its cult of loyal devotees, technology advancement (especially in mobile devices), and Apple TV+. Apple invested big money in developing original content for its streaming service and has garnered many accolades, but the service remains a minor player in the entertainment industry. Why? ArsTechnica has the lowdown on that: “Apple TV+ Spent $20B On Original Content. If Only People Actually Watched.”

Apple spent $20 billion to make a name for itself in the streaming wars. While its original shows have loyal followings, Nielsen says the service has attracted only 0.3% of US eyeballs. Bloomberg wrote: “Apple TV+ generates less viewing in one month than Netflix does in one day.”

Ouch! Here are some numbers to support that statement:

“Apple doesn’t provide subscriber numbers for Apple TV+, but it’s estimated to have 25 million subscribers. That would make it one of the smallest mainstream streaming services. For comparison, Netflix has about 283 million, and Prime Video has over 200 million. Smaller services like Peacock (about 28 million) and Paramount+ (about 72 million) best Apple TV+’s subscriber count, too.”
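To make the gap concrete, here is a rough sketch that turns the quoted subscriber estimates into multiples of the Apple TV+ figure. Every number comes from the quote above; the Apple TV+ count is an estimate, the Prime Video figure is a floor ("over 200 million"), and the variable names are my own.

```python
# Subscriber estimates in millions, taken from the ArsTechnica quote.
# Apple TV+ is an estimate; Prime Video is a lower bound ("over 200 million").
subscribers_millions = {
    "Apple TV+": 25,
    "Peacock": 28,
    "Paramount+": 72,
    "Prime Video": 200,
    "Netflix": 283,
}

apple_base = subscribers_millions["Apple TV+"]

# How many times larger each service is than Apple TV+.
multiples = {
    service: count / apple_base
    for service, count in subscribers_millions.items()
    if service != "Apple TV+"
}

for service, multiple in sorted(multiples.items(), key=lambda item: item[1]):
    print(f"{service}: about {multiple:.1f}x the Apple TV+ subscriber estimate")
```

On these estimates, Netflix comes out at roughly eleven times the Apple TV+ subscriber count, and even Peacock edges ahead.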

Apple only has 259 shows compared to Netflix’s 18,000. Also, Apple’s marketing efforts are minimal, although the company has used big names like Leonardo DiCaprio, Reese Witherspoon, Idris Elba, and Martin Scorsese. Here are some more numbers for comparison’s sake:

“To put this into perspective, Apple spent $14.9 million on commercials for Apple TV+ in October 2019 versus $28.6 million on the iPhone, per iSpot.TV data cited by The New York Times. Online, Apple paid for 139 unique digital ads for Apple TV+ in October 2019 compared to 245 for the iPhone (about $1.7 million versus about $2.3 million), per data from advertising analytics platform Pathmatics cited by The Times.”

Apple plans to raise its viewership by licensing its content to foreign marketplaces and adopting more common streaming practices. These include bundling through Comcast and Amazon Prime Video.

Apple had smart intentions, but its lackluster performance calls into question its intelligence in the entertainment department. Apple sure didn’t replicate the success Steve Jobs had by investing in Pixar.

Whitney Grace, November 25, 2024