AI and the Obvious: Hire Us and Pay Us to Tell You Not to Worry

December 26, 2023

This essay is the work of a dumb dinobaby. No smart software required.

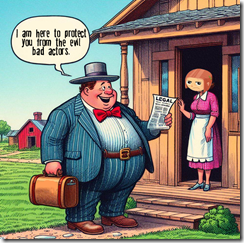

I read “Accenture Chief Says Most Companies Not Ready for AI Rollout.” The paywalled write up is an opinion from one of Captain Obvious’ closest advisors. The CEO of Accenture (a general purpose business expertise outfit) reveals some gems about artificial intelligence. Here are three which caught my attention.

#1 — “Sweet said executives were being ‘prudent’ in rolling out the technology, amid concerns over how to protect proprietary information and customer data and questions about the accuracy of outputs from generative AI models.”

The secret to AI consulting success: Cost, fear of failure, and uncertainty or CFU. Thanks, MSFT Copilot. Good enough.

Arnold comment: Yes, caution is good because selling caution consulting generates juicy revenues. Implementing something that crashes and burns is a generally bad idea.

#2 — “Sweet said this corporate prudence should assuage fears that the development of AI is running ahead of human abilities to control it…”

Arnold comment: The threat, in my opinion, comes from a handful of large technology outfits and from the legions of smaller firms working overtime to apply AI to anything that strikes the fancy of the entrepreneurs. These outfits think about sizzle first, consequences maybe later. Much later.

#3 — “‘There are no clients saying to me that they want to spend less on tech,’ she said. ‘Most CEOs today would spend more if they could. The macro is a serious challenge. There are not a lot of green shoots around the world. CEOs are not saying 2024 is going to look great. And so that’s going to continue to be a drag on the pace of spending.’”

Arnold comment: Great opportunity to sell studies, advice, and recommendations when customers are “not saying 2024 is going to look great.” Hey, what’s “not going to look great” mean?

The obvious is — obvious.

Stephen E Arnold, December 26, 2023

Quantum Supremacy in Management: A Google Incident

December 25, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I spotted an interesting story about an online advertising company which has figured out how to get great PR in respected journals. But this maneuver is a 100-yard touchdown run for visibility. “Hundreds Gather at Google’s San Francisco Office to Protest $1.2 Billion Contract with Israel” reports:

More than 400 protesters gathered at Google’s San Francisco office on Thursday to demand the tech company cut ties with Israel’s government.

Some managers and techno wizards envy companies which have the knack for attracting crowds and getting free publicity. Thanks, MSFT Copilot. Close enough for horseshoes.

The demonstration, according to the article, was a response to Google and its new BFF’s project for Israel. The SFGate article contains some interesting photographs. One is a pretend dead person wrapped in a shroud with the word “Genocide” in bright, cheerful Google logo colors. I wanted to reproduce it, but I am not interested in having copyright trolls descend on me like a convocation of legal eagles. “Project Nimbus” (nimbus is a type of cloud which I learned about in the fifth or sixth grade) “provides the country with local data centers and cloud computing services.”

The article contains a word which causes OpenAI’s art generators to become uncooperative. That banned word is “genocide.” The news story adds some color to the fact of the protest on December 14, 2023:

Multiple speakers mentioned an article from The Intercept, which reported that Nimbus delivered Israel the technology for “facial detection, automated image categorization, object tracking, and even sentiment analysis.” Others referred to an NPR investigation reporting that Israel says it is using artificial intelligence to identify targets in Gaza, though the news outlet did not link the practice to Google’s technology.

Ah, ha. Cloud services plus useful technologies. (I wonder if the facial recognition system allegedly becoming available to the UK government is included in the deal?) The story added a bit of spice too:

For most of Thursday’s protest, two dozen people lay wrapped in sheets — reading “Genocide” in Google’s signature rainbow lettering — in a “die-in” performance. At the end, they stood to raise up white kites, as a speaker read Refaat Alareer’s “If I must die,” written just over a month before the Palestinian poet was killed by an Israeli airstrike.

The article included a statement from a spokesperson, possibly from Google. This individual said:

“We have been very clear that the Nimbus contract is for workloads running on our commercial platform by Israeli government ministries such as finance, healthcare, transportation, and education,” she said. “Our work is not directed at highly sensitive or classified military workloads relevant to weapons or intelligence services.”

Does this sound a bit like an annoyed fifth- or sixth-grade teacher interrupted by a student who said out loud, “Clouds are hot air”? The remark was not technically accurate, and the student was sent to the principal’s office. What will happen in this situation?

Some organizations know how to capture users’ attention. Will the company be able to monetize it via a YouTube Short or a more lengthy video? Google is quite skilled at making videos which purport to show reality as Google wants it to be. The “real” reality may be different. Revenue is important, particularly as regulatory scrutiny remains popular in the EU and the US.

Stephen E Arnold, December 25, 2023

A Grade School Food Fight Could Escalate: Apples Could Become Apple Sauce

December 25, 2023

This essay is the work of a dumb dinobaby. No smart software required.

A squabble is blowing up into a court fight. “Beeper vs Apple Battle Intensifies: Lawmakers Demand DOJ Investigation” reports:

US senators have urged the DOJ to probe Apple’s alleged anti-competitive conduct against Beeper.

Apple killed a messaging service in the name of protecting apple pie, mom, love, truth, justice, and the American way. Ooops, sorry. That’s something from the Superman comix.

“You squashed my apple. You ruined my lunch. You ruined my life. My mommy will call your mommy, and you will be in trouble,” says the older, more mature child. The principal appears and points out that screeching is not comely. Thanks, MSFT Copilot. Close enough for horseshoes.

The article said:

The letter to the DOJ is signed by Minnesota Senator Amy Klobuchar, Utah Senator Mike Lee, Congressman Jerry Nadler, and Congressman Ken Buck. They have urged the law enforcement body to investigate “whether Apple’s potentially anti-competitive conduct against Beeper violates US antitrust laws.” Apple has been constantly trying to block Beeper Mini and Beeper Cloud from accessing iMessage. The two Beeper messaging apps allow Android users to interact with iPhone users through iMessage — an interoperability Apple has been opposed to for a long time now.

As if law enforcement did not have enough to think about. Now an alleged monopolist is engaged in a grade school cafeteria spat with a younger, much smaller entity. By golly, that big outfit is threatened by the jejune, immature, and smaller service.

How will this play out?

- A payday for Beeper when Apple makes the owners of Beeper an offer that would be tough to refuse. Big piles of money can alter one’s desire to fritter away one’s time in court.

- The dust up spirals upwards. What if the attitude toward Apple’s approach to its competitors becomes a crusade to encourage innovation in a tough environment for small companies? Containment may be difficult.

- The jury decision against Google may kindle more enthusiasm for another probe of Apple and its posture in some tricky political situations; for example, the iPhone in China, the non-repairability issues, and Apple’s mesh of inter-connected services which may be seen as digital barriers to user choice.

In 2024, Apple may find that some government agencies are interested in the fruit growing on the company’s many trees.

Stephen E Arnold, December 25, 2023

Google AI and Ads: Beavers Do What Beavers Do

December 20, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Consider this. Take a couple of beavers. Put them in the Cloud Room near the top of the Chrysler Building in Manhattan. Shut the door. Come back in a day. What have the beavers done? They have started building a dam. Beavers do what beavers do. That’s a comedian’s way of explaining that some activities are hard wired into an organization. Therefore, beavers do what beavers do.

I read the paywalled article “Google Plans Ad Sales Restructuring as Automation Booms” and the other versions of the story on the shoulder of the Information Superhighway; for example, the trust outfit’s recycling of the Information’s story. The giant quantum supremacy, protein folding, and all-round advertising company is displaying beaver-like behavior. Smart software will be used to sell advertising.

That ad DNA? Nope, the beavers do what beavers do. Here’s a snip from the write up:

The planned reorganization comes as Google is relying more on machine-learning techniques to help customers buy more ads on its search engine, YouTube and other services…

Translating: Google wants fewer people to present information to potential and actual advertisers. The idea is to reduce costs and sell more advertising. I find it interesting that the quantum supremacy hoo-hah boils down to … selling ads and eliminating unreliable, expensive, vacation-taking, and latte consuming humans.

Two real beavers are surprised to learn that a certain large and dangerous creature also has DNA. Notice that neither of the beavers asks the large reptile to join them for lunch. The large reptile may, in fact, view the beavers as something else; for instance, lunch. Thanks, MSFT Copilot. Good enough.

Are there other ad-related changes afoot at the Google? “Google Confirms It Is Testing Ad Copy Variation in Live Ads” points out:

Google quietly started placing headlines in ad copy description text without informing advertisers

No big deal. Just another “test”, I assume. Search Engine Land (a publication founded, nurtured, and shaped into the search engine optimization information machine by Danny Sullivan, now a Googler) adds:

Changing the rules without informing advertisers can make it harder for them to do their jobs and know what needs to be prioritized. The impact is even more significant for advertisers with smaller budgets, as assessing the changes, especially with responsive search ads, becomes challenging, adding to their workload.

Google wants to reduce its workload. In pursuing that noble objective, Google, if Search Engine Land is correct, may increase the workload of advertisers. But never fear, the change is trivial, “a small test.”

What was that about beavers? Oh, right. Certain behaviors are hard wired into the DNA of a corporate entity, which, someone once told me, is a “person” under US law.

Let me share with you several observations based on my decades-long monitoring of the Google.

- Google does what Google wants and then turns over the explanation to individuals who say what is necessary to deflect actual intent, convert actions into fuzzy Google speech, and keep customer and user pushback to a minimum. (Note: The tactic does not work with 100 percent reliability as the recent loss to US state attorneys general illustrates.)

- Smart software is changing rapidly. What appears to be one application may (could) morph into more comprehensive functionality. Predicting the future of AI and Google’s actions is difficult. Google will play the odds, which means that what the “entity” does will favor its objectives and goals.

- The quaint notion of a “small test” is the core of optimization for some methods. Who doesn’t love “quaint” as a method for de-emphasizing the significance of certain actions? The “small test” is often little more than one component of a larger construct. Dismissing the small is to ignore the larger component’s functionality; for example, data control and highly probable financial results.

Let’s flash back to the beavers in the Cloud Room. Imagine the surprise of someone who opens the door and sees gnawed-off portions of chairs, towels, and a chunk of unidentifiable gook piled between two tables.

Those beavers and their beavering can create an unexpected mess. The beavers, however, are proud of their work because they qualify under an incentive plan for a bonus. Beavers do what beavers do.

Stephen E Arnold, December 20, 2023

Is Google Really Clever and Well Managed or the Other Way Round?

December 19, 2023

This essay is the work of a dumb dinobaby. No smart software required.

“Google Will Pay $700 Million to Settle a Play Store Antitrust Lawsuit with All 50 US States” reports that Google put up a blog post. (You can read that at this link. The title of the post is worth the click.) Neowin.net reported that Google will “make some changes.”

“What’s happened to our air vent?” asks one government regulatory professional. Thanks, MSFT Copilot. Good enough.

Change is good. It is better if that change is organic in my opinion. But change is change. I noted this statement in the Neowin.net article:

The public reveal of this settlement between Google and the US state attorney generals comes just a few days after a jury ruled against Google in a similar case with developer Epic Games. The jury agreed with Epic’s view that Google was operating an illegal monopoly with its Play Store on Android devices. Google has stated it will appeal the jury’s decision.

Yeah, timing.

Several observations:

- It appears that some people perceive Google as exercising control over their decisions and the framing of those decisions.

- The business culture creating the need to pay a $700 million penalty is likely to persist because the people who write checks at Google are not the people facilitating the behaviors creating the legal issue, in my opinion.

- The payday, when distributed, is not the remedy for some of those snared in the Googley approach to business.

Net net: Other nation states may look at the $700 million number and conclude, “Let’s take another look at that outfit.”

Stephen E Arnold, December 19, 2023

Why Humans Follow Techno Feudal Lords and Ladies

December 19, 2023

This essay is the work of a dumb dinobaby. No smart software required.

“Seduced By The Machine” is an interesting blend of humans’ willingness to follow the leader and Silicon Valley revisionism. The article points out:

We’re so obsessed by the question of whether machines are rising to the level of humans that we fail to notice how the humans are becoming more like machines.

I agree. The write up offers an explanation — it’s arriving a little late because the Internet has been around for decades:

Increasingly we also have our goals defined for us by technology and by modern bureaucratic systems (governments, schools, corporations). But instead of providing us with something equally rich and well-fitted, they can only offer us pre-fabricated values, standardized for populations. Apps and employers issue instructions with quantifiable metrics. You want good health – you need to do this many steps, or achieve this BMI. You want expertise? You need to get these grades. You want a promotion? Hit these performance numbers. You want refreshing sleep? Better raise your average number of hours.

A modern high-tech pied piper leads many to a sanitized Burning Man? Sounds like fun. Look at the funny outfit. The music is a TikTok hit. The followers are looking forward to their next “experience.” Thanks, MSFT Copilot. One try for this cartoon. Good enough again.

The idea is that technology offers a short cut. Who doesn’t like a short cut? Do you want to write music in the manner of Herr Bach or do you want to do the loop and sample thing?

The article explores the impact of metrics; that is, the idea of letting Spotify make clear what a hit song requires. Now apply that malleability and success incentive to getting fit, getting start up funding, or any other friction-filled task. Let’s find some Teflon, folks.

The write up concludes with this:

Human beings tend to prefer certainty over doubt, comprehensibility to confusion. Quantified metrics are extremely good at offering certainty and comprehensibility. They seduce us with the promise of what Nguyen calls “value clarity”. Hard and fast numbers enable us to easily set goals, justify decisions, and communicate what we’ve done. But humans reach true fulfilment by way of doubt, error and confusion. We’re odd like that.

Hot button alert! Uncertainty means risk. Therefore, reduce risk. Rely on an “authority,” “data,” or “research.” What if the authority sells advertising? What if the data are intentionally poisoned (a somewhat trivial task according to watchers of disinformation outfits)? What if the research is made up? (I am thinking of the Stanford University president and the Harvard ethics whiz. Both allegedly invented data; both found themselves in hot water. But no one seems to have cared.)

With smart software (despite its hyperbolic marketing and its role as the next really Big Thing) finding its way into a wide range of business and specialized systems, the implicit advice is to just trust the machine output. I went for a routine check up. One machine reported I was near death. The doctor was recommending a number of immediate remediation measures. I pointed out that the data came from a single somewhat older device. No one knew who verified its accuracy. No one knew if the device was repaired. I noted that I was indeed still alive and asked if the somewhat nervous looking medical professional would get a different device to gather the data. Hopefully that will happen.

Is it a positive when the new pied piper of Hamelin wants to have control in order to generate revenue? Is it a positive when education produces individuals who do not ask, “Is the output accurate?” Some day, dinobabies like me will indeed be dead. Will the willingness of humans to follow the pied piper be different?

Absolutely not. This dinobaby is alive and kicking, no matter what the aged diagnostic machine said. Gentle reader, can you identify fake, synthetic, or just plain wrong data? If you answer yes, you may be in the top tier of actual thinkers. Those who are gatekeepers of information will define reality and take your money whether you want to give it up or not.

Stephen E Arnold, December 19, 2023

Facing an Information Drought Tech Feudalists Will Innovate

December 18, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The Exponential View (Azeem Azhar) tucked an item in his “blog.” The item is important, but I am not familiar with the cited source of the information in “LLMs May Soon Exhaust All Available High Quality Language Data for Training.” The main point is that the go-to method for smart software requires information in volume to [a] be accurate, [b] remain up to date, and [c] be sufficiently useful to pay for the digital plumbing.

Oh, oh. The water cooler is broken. Will the Pilates teacher ask the students to quench their thirst with synthetic water? Another option is for those seeking refreshment to rejuvenate tired muscles with more efficient metabolic processes. The students are not impressed with these ideas? Thanks, MSFT Copilot. Two tries and close enough.

One datum indicates / suggests that the Big Dogs of AI will run out of content to feed into their systems in either 2024 or 2025. The date is less important than the idea of a hard stop.

What will the AI companies do? The essay asserts:

OpenAI has shown that it’s willing to pay eight figures annually for historical and ongoing access to data — I find it difficult to imagine that open-source builders will…. There are ways other than proprietary data to improve models, namely synthetic data, data efficiency, and algorithmic improvements – yet it looks like proprietary data is a moat open-source cannot cross.

Several observations:

- New methods of “information” collection will be developed and deployed. Some of these will be “off the radar” of users by design. One possibility is mining the changes to draft content in certain systems. Changes or deltas can be useful to some analysts.

- The synthetic data angle will become a go-to method using data sources which, by themselves, are not particularly interesting. However, when cross correlated with other information, “new” data emerge. The new data can be aggregated and fed into other smart software.

- Rogue organizations will acquire proprietary data and “bitwash” the information. Like money laundering systems, the origin of the data are fuzzified or obscured, making figuring out what happened expensive and time consuming.

- Techno feudal organizations will explore new non-commercial entities to collect certain data; for example, the non-governmental organizations in a niche could be approached for certain data provided by supporters of the entity.

Net net: Running out of data is likely to produce one high probability event: Certain companies will begin taking more aggressive steps to make sure their digital water cooler is filled and working for their purposes.

Stephen E Arnold, December 18, 2023

Google and Its Epic Magic: Will It Keep on Thrilling?

December 17, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The Financial Times (the orange newspaper) published a paywalled essay/interview with Epic Games’s CEO Tim Sweeney. The hook for the sit-down was the decision in a court proceeding that Google had acted illegally. How? Google developed Android, then Google used that mobile system as a platform for revenue generation. The revenue methods appear to have involved one-off special deals with some companies and a hefty commission on sales made via the Google Play Store.

Will the magic show continue to surprise and entertain the innocent at the party? Thanks, MSFT Copilot. Close enough for horseshoes, but I wanted a Godzilla monster in a tuxedo doing the tricks. But that’s forbidden.

Several items struck me in the article “Epic Games Chief Concerned Google Will Get Away with App Store Charges.”

First, the trial made clear that Google was unable to back up certain data. Here’s how the Financial Times’s story phrased this matter:

The judge in the case, US district judge James Donato, also criticized the company for its failure to preserve evidence, with internal policies for deleting chats. He instructed the jury that they were free to conclude Google’s chat deletion policies were designed to conceal incriminating evidence. “The Google folks clearly knew what they were doing,” Sweeney said. “They had very lucid writings internally as they were writing emails to each other, though they destroyed most of the chats.” “And then there was the massive document destruction,” Sweeney added. “It’s astonishing that a trillion-dollar corporation at the pinnacle of the American tech industry just engages in blatantly dishonest processes, such as putting all of their communications in a form of chat that is destroyed every 24 hours.” Google has since changed its chat deletion policy.

Taking steps to obscure evidence suggests to me that Google operates in an ethical zone which the judge and I find uncomfortable. The behavior also implies that Google professionals are not just clever, but that they do what pays off within a governance system which is comfortable with a philosophy of entitlement. Google does what Google does. Oh, that is a problem for others. Well, that’s too bad.

Second, according to the article, Google would pursue “alternative payment methods.” The online ad giant would then slap a fee to list a product in the Google Play Store. The method has a number of variations, ranging from a fee for promoting a product to different-size listings. The idea is similar to a grocery chain charging a manufacturer to put annoying free standing displays of breakfast foods in the center of a high traffic aisle.

Third, Mr. Sweeney seems happy with the evidence about payola which emerged during the trial. Google appears to have paid Samsung to sell its digital goods via the Google Play Store. The pay-to-play model apparently prevented the South Korean company from setting up an alternative store for Android equipped mobile devices.

Several observations:

- The trial, unlike the proceedings in the DC monopoly probe, produced details about what Google does to generate lock in, money, and Googliness.

- The destruction of evidence makes clear a disdain for behavior which preserves the trust and integrity of certain norms of behavior.

- The trial makes clear that Google wants to preserve its dominant position and will pay to remain Number One.

Net net: Will Google’s magic wow everyone as it did when the company was gaining momentum? For some, yes. For others, no, sorry. I think the costume Google has worn for decades is now weakening at the seams. But the show must go on.

Stephen E Arnold, December 17, 2023

Microsoft Snags Cyber Criminal Gang: Enablers Finally a Target

December 14, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Earlier this year at the National Cyber Crime Conference, we shared some of our research about “enablers.” The term is our shorthand for individuals, services, and financial outfits providing the money, services, and management support to cyber criminals. Online crime comes, like Baskin-Robbins ice cream, in a mind-boggling range of “flavors.” To make big bucks, funding and infrastructure are needed. The reasons include amped up enforcement from the US Federal Bureau of Investigation, Europol, and cooperating law enforcement agencies. The cyber crime “game” is a variation of a cat-and-mouse game. With each technological advance, bad actors try out the latest and greatest. Then enforcement agencies respond and neutralize the advantage. The bad actors then scan the technology horizon, innovate, and law enforcement responds. There are many implications of this innovate-react-innovate cycle. I won’t go into those in this short essay. Instead I want to focus on a Microsoft blog post called “Disrupting the Gateway Services to Cybercrime.”

Industrialized cyber crime uses existing infrastructure providers. That’s a convenient, easy, and economical means of hiding. Modern obfuscation technology adds to law enforcement’s burden. Perhaps some oversight and regulation of these nearly invisible commercial companies is needed? Thanks, MSFT Copilot. Close enough and I liked the investigators on the roof of a typical office building.

Microsoft says:

Storm-1152 [the enabler?] runs illicit websites and social media pages, selling fraudulent Microsoft accounts and tools to bypass identity verification software across well-known technology platforms. These services reduce the time and effort needed for criminals to conduct a host of criminal and abusive behaviors online.

What moved Microsoft to take action? According to the article:

Storm-1152 created for sale approximately 750 million fraudulent Microsoft accounts, earning the group millions of dollars in illicit revenue, and costing Microsoft and other companies even more to combat their criminal activity.

Just 750 million? One question which struck me was: “With the updating, the telemetry, and the bits and bobs of Microsoft’s ‘security’ measures, how could nearly a billion fake accounts be allowed to invade the ecosystem?” I thought a smaller number might have been the tipping point.

Another interesting point in the essay is that Microsoft identifies the third party Arkose Labs as contributing to the action against the bad actors. The company is one of the firms engaged in cyber threat intelligence and mitigation services. The question I had was, “Why are the other threat intelligence companies not picking up signals about such a large, widespread criminal operation?” Also, “What is Arkose Labs doing that other sophisticated companies and OSINT investigators are not doing?” Google and In-Q-Tel invested in Recorded Future, a go to threat intelligence outfit. I don’t recall seeing it reported, but I heard that Microsoft invested in the company, joining SoftBank’s Vision Fund and PayPal, among others.

I am delighted that “enablers” have become a more visible target of enforcement actions. More must be done, however. Poke around in ISP land and what do you find? As my lecture pointed out, “Respectable companies in upscale neighborhoods harbor enablers, so one doesn’t have to travel to Bulgaria or Moldova to do research. Silicon Valley is closer and stocked with enablers; the area is a hurricane of crime.”

In closing, I ask, “Why are discoveries of this type of industrialized criminal activity unearthed by only one outfit?” And, “What are the other cyber threat folks chasing?”

Stephen E Arnold, December 14, 2023

Apple Harvests Old Bell Tel Ideas

December 14, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I am not a Bell head. True, my team did work at Bell Labs. In mid project, Judge Greene’s order was enforced; therefore, the project morphed into a Bellcore job. I had opportunities to buy a Young Pioneer T shirt. Apple’s online store has “matured” that idea. The computer platform was one of those inviolate things. Apple is into digital chastity belts too I believe. Lose your iTunes password, and you are instantly transferred back to the world of Bell Tel hell if you “lost” your Western Electric 202 handset.

So what?

I read “Apple Shutters Third-Party Apps That Enabled iMessage on Android.” In my opinion, the write up says, “Apple killed a cross platform messaging application.” This is no surprise to anyone who had the experience of attending pre-Judge Greene meetings. May I illustrate? In Manhattan, the firm with which I was affiliated attended a meeting to explain a proposal and the fee for professional services. I don’t recall what my colleagues and I were pitching; I just remember the reaction to the fee. I am a dinobaby, but the remark ran along this railroad line:

A Fruit Company executive visits a user. The visit is intended to make clear that the user will suffer penalties if she continues to operate outside the rules of the orchard. Thanks, MSFT Copilot. Only three tries today to get one good enough cartoon.

That’s a big number. We may have to raise the price of long-distance calls. But you guys won’t get paid until we get enough freight cars organized. We will deliver the payment in nickels, dimes, and quarters.

Yep, a Bell head joke, believe it or not. Ho, ho, ho. Railcars filled with coins.

The write up states:

The iPhone maker said in a statement it “took steps to protect our users by blocking techniques that exploit fake credentials in order to gain access to iMessage.” It added that “these techniques posed significant risks to user security and privacy, including the potential for metadata exposure and enabling unwanted messages, spam, and phishing attacks.” The company said it would continue to make changes in the future to protect its users.

If you remember the days when a person tried to connect a non-Western Electric device to the Bell phone system, the comments were generally similar. Unauthorized devices could imperil national security or cause people to die. There you go.

As a resident of Kentucky, I am delighted that big companies want to protect me. Those Kentuckians unfortunate enough to have gobbled a certain pharma company’s medications may not believe the “protect users” argument.

As a dinobaby, I see Apple’s “protect users” play as little more than an overt and somewhat clumsy attempt to kill cross platform messaging. The motives are easy to identify:

- Protect the monopoly until Apple-pleasing terms can be put in place

- Demonstrate that the company is more powerful than an upstart innovator

- Put the government on notice that it will control its messaging platform

Oh, I almost forgot. Apple wants to “protect users.” Bell/AT&T thinking has fertilized the soil in the Apple orchard in my view. I feel more protected already even though a group fired mortars at a certain meeting’s attendees, causing me to hide in a basement until the supply of shells was exhausted.

Oh, yeah, there were people who were supposed to protect me and others at the meeting. How did that work out?

Stephen E Arnold, December 13, 2023