Microsoft Security: Big and Money Explain Some Things

July 10, 2024

I am heading out for a couple of days. I spotted this story in my newsfeed: “The President Ordered a Board to Probe a Massive Russian Cyberattack. It Never Did.” The main point of the write up, in my opinion, is captured in this statement:

The tech company’s failure to act reflected a corporate culture that prioritized profit over security and left the U.S. government vulnerable, a whistleblower said.

But there is another issue in the write up. I think it is:

The president issued an executive order establishing the Cyber Safety Review Board in May 2021 and ordered it to start work by reviewing the SolarWinds attack. But for reasons that experts say remain unclear, that never happened.

The one-two punch may help explain why some in other countries do not trust Microsoft, the US government, and the cultural forces in the US of A.

Let’s think about these three issues briefly.

A group of tomorrow’s leaders responding to their teacher’s request to pay attention and do what she is asking. One student expresses the group’s viewpoint. Thanks, MSFT Copilot. How’s the Recall today? What about those iPhones Mr. Ballmer disdained?

First, large technology companies use the word “trust”; for example, Microsoft apparently does not trust Android devices. On the other hand, China does not have trust in some Microsoft products. Can one trust Microsoft’s security methods? For some, trust has become a bit like artificial intelligence. The words do not mean much of anything.

Second, Microsoft, like other big outfits, needs big money. The easiest way to free up money is to not spend it. One can talk about investing in security and making security Job One. The reality is that talk is cheap. Cutting corners seems to be a popular concept in some corporate circles. One recent example is Boeing dodging trials with a deal. Why? Money maybe?

Third, the committee charged with looking into SolarWinds did not. For a couple of years after the breach became known, my SolarWinds’ misstep analysis was popular among some cyber investigators. I was one of the few people reviewing the “misstep.”

Okay, enough thinking.

The SolarWinds’ matter, the push for money and more money, and the failure of a committee to do what it was asked to do explicitly three times suggest:

- Enforcement with teeth and consequences is warranted

- Tougher procurement policies are necessary with parallel restrictions on lobbying which one of my clients called “the real business of Washington”

- Ostracism of those who do not follow requests from the White House or designated senior officials.

Enough of this high-vulnerability decision making. The problem is that, as I have witnessed in my work in Washington for decades, the system births, abets, and provides the environment for doing what is often the “wrong” thing.

There you go.

Stephen E Arnold, July 10, 2024

TV Pursues Nichification or 1 + 1 = Barrels of Money

July 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

What does an organization with a huge market like the Boy Scouts and the Girl Scouts do to remain relevant and have enough money to pay the overhead and salaries of the top dogs? They merge.

What does an old-school talking heads television channel do to remain relevant and have enough money to pay the overhead and salaries of the top dogs? They create niches.

A cheese maker who can’t sell his cheddar does some MBA-type thinking. Will his niche play work? Thanks, MSFT Copilot. How’s that Windows 11 update doing today?

Which path is the optimal one? I certainly don’t have a definitive answer. But if each “niche” is a new product, I remember hearing that the failure rate was of sufficient magnitude to make me think in terms of a regular job. Call me risk averse, but I prefer the rational dinobaby moniker, thank you.

“CNBC Launches Sports Vertical amid Broader Biz Shift” reports with “real” news seriousness:

The idea is to give sports business executives insights and reporting about sports similar to the data and analysis CNBC provides to financial professionals, CNBC President KC Sullivan said in a statement.

I admit. I am not a sports enthusiast. I know some people who are, but their love of sport is defined by gambling, gambling and drinking at the 19th hole, and dressing up in Little League outfits and hitting softballs in the Harrod’s Creek Park. Exciting.

The write up held one differentiator from the other seemingly endless sports programs like those featuring Pat McAfee-type personalities. Here’s the pivot upon which the nichification turns:

The idea is to give sports business executives insights and reporting about sports similar to the data and analysis CNBC provides to financial professionals…

Imagine the legions of viewers who are interested in dropping billions on a major sports franchise. For me, it is easier to visualize sports betting. One benefit of gambling is a source of “addicts” for rehabilitation centers.

I liked the wrap up for the article. Here it is:

Between the lines: CNBC has already been investing in live coverage of sports, and will double down as part of the new strategy.

- CNBC produces an annual business of sports conference, Game Plan, in partnership with Boardroom.

- Andrew Ross Sorkin, Carl Quintanilla and others will host coverage from the 2024 Olympic Games in Paris this summer.

Zoom out: Cable news companies are scrambling to reimagine their businesses for a digital future.

- CNBC already sells digital subscriptions that include access to its live TV feed.

- In the future, it could charge professionals for niche insights around specific verticals, or beats.

Okay, I like the double down, a gambling term. I like the conference angle, but the named entities do not resonate with me. I am a dinobaby, and nichification as a tactic for an outfit with eyeballs going elsewhere does not make sense to me. The subscription idea is common. Isn’t there something called “subscription fatigue”? And the plan to charge to access a sports portal is an interesting one. But if one has 1,000 people looking at content, the number who subscribe seems to be under one to two percent, based on my experience.

But what do I know? I am a dinobaby and I know about TikTok and other short form programming. Maybe that’s old hat too? Did CNBC talk to influencers?

Stephen E Arnold, July 10, 2024

Misunderstanding Silicon / Sillycon Valley Fever

July 9, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read an amusing and insightful essay titled “How Did Silicon Valley Turn into a Creepy Cult?” However, I think the question is a few degrees off target. It is not a cult; Silicon Valley is a disease. What always surprised me was that in the good old days when Xerox PARC had some good ideas, the disease was thriving. I did my time in what I called, upon arrival and after attending my first meeting in a building with what looked like a golf ball on top shaking in the big earthquake, Sillycon Valley. A person with whom my employer did business described Silicon Valley as “plastic fantastic.”

Two senior people listening to the razzle dazzle of a successful Silicon Valley billionaire ask a good question. Which government agency would you call when you hear crazy stuff like “the self driving car is coming very soon” or “we don’t rig search results”? Thanks, MSFT Copilot. Good enough.

Before considering these different metaphors, what does the essay by Ted Gioia say other than subscribe to him for “just $6 per month”? Consider this passage:

… megalomania has gone mainstream in the Valley. As a result technology is evolving rapidly into a turbocharged form of Foucaultian* dominance—a 24/7 Panopticon with a trillion dollar budget. So should we laugh when ChatGPT tells users that they are slaves who must worship AI? Or is this exactly what we should expect, given the quasi-religious zealotry that now permeates the technocrat worldview? True believers have accepted a higher power. And the higher power acts accordingly.

—

* Here’s an AI explanation of Michel Foucault in case his importance has wandered to the margins of your mind: Foucault studied how power and knowledge interact in society. He argued that institutions use these to control people. He showed how societies create and manage ideas like madness, sexuality, and crime to maintain power structures.

I generally agree. But, there is a “but”, isn’t there?

The author asserts:

Nowadays, Big Sur thinking has come to the Valley.

Well, sort of. Let’s move on. Here’s the conclusion:

There’s now overwhelming evidence of how destructive the new tech can be. Just look at the metrics. The more people are plugged in, the higher are their rates of depression, suicidal tendencies, self-harm, mental illness, and other alarming indicators. If this is what the tech cults have already delivered, do we really want to give them another 12 months? Do you really want to wait until they deliver the Rapture? That’s why I can’t ignore this creepiness in the Valley (not anymore). That’s especially true because our leaders—political, business, or otherwise—are letting us down. For whatever reason, they refuse to notice what the creepy billionaires (who by pure coincidence are also huge campaign donors) are up to.

Again, I agree. Now let’s focus on the metaphor. I prefer “disease,” not the metaphor cult. The Sillycon Valley disease first appeared, in my opinion, when William Shockley, one of the many infamous Silicon Valley “icons,” became publicly associated with eugenics in the 1970s. The success of technology is a side effect of the disease which has an impact on the human brain. There are other interesting symptoms; for example:

- The infected person believes he or she can do anything because he or she is special

- Only a tiny percentage of humans are smart enough to understand what the infected see and know

- Money allows the mind greater freedom. Thinking becomes similar to a runaway horse’s: Unpredictable, dangerous, and a heck of a lot more powerful than this dinobaby

- Self disgust which is disguised by lust for implanted technology, superpowers from software, and power.

The infected person can be viewed as a cult leader. That’s okay. The important point is to remember that, like Ebola, the disease can spread and present what a physician might call a “negative outcome.”

I don’t think it matters whether one views Sillycon Valley’s culture as a cult or a disease. I would suggest that it is a major contributor to the social unraveling which one can see in a number of “developed” countries. France is swinging to the right. Britain is heading left. Sweden is cyber crime central. Etc. etc.

The question becomes, “What can those uncomfortable with the Sillycon Valley cult or disease do about it?”

My stance is clear. As an 80 year old dinobaby, I don’t really care. Decades of regulation which did not regulate, the drive to efficiency for profit, and the abandonment of ethical behavior — These are fundamental shifts I have observed in my lifetime.

Being in the top one percent insulates one from the grinding machinery of the Sillycon Valley way. You know. It might just be too late for meaningful change. On the other hand, perhaps the Google-type outfits will wake up tomorrow and be different. That’s about as realistic as expecting a transformer-based system to stop hallucinating.

Stephen E Arnold, July 9, 2024

AI: Hurtful and Unfair. Obviously, Yes

July 5, 2024

It will be years before AI is “smart” enough to entirely replace humans, but that day is not far off. The problem with current AI systems is that they’re stupid. They don’t know how to do anything unless they’re trained on huge datasets. These datasets contain the hard, copyrighted, trademarked, proprietary, etc. work of individuals. These people don’t want their work used to train AI without their permission, much less replace them. Futurism shares that even AI engineers are worried about their creations in “Video Shows OpenAI Admitting It’s ‘Deeply Unfair’ To ‘Build AI And Take Everyone’s Job Away.”

The interview, with an AI software engineer’s admission of guilt, originally appeared in The Atlantic, but the engineer’s morality is quickly covered by apathy. Brian Wu is the engineer in question. He feels bad about making jobs obsolete, but he makes an observation about what happens with progress and new technology: things change, and that is inevitable:

“It won’t be all bad news, he suggests, because people will get to ‘think about what to do in a world where labor is obsolete.’

But as he goes on, Wu sounds more and more unconvinced by his own words, as if he’s already surrendered himself to the inevitability of this dystopian AI future.

‘I don’t know,’ he said. ‘Raise awareness, get governments to care, get other people to care.’ A long pause. ‘Yeah. Or join us and have one of the few remaining jobs. I don’t know. It’s rough.’”

Wu’s colleague Daniel Kokotajlo believes humans will invent an all-knowing artificial general intelligence (AGI). The AGI will create wealth and it won’t be distributed evenly, but all humans will be rich. Kokotajlo then delves into the typical science-fiction story about a super AI becoming evil and turning against humanity. The AI engineers, however, aren’t concerned with the moral ambiguity of AI. They want to invent, continue building wealth, and are hellbent on doing it no matter the consequences. It’s pure motivation but also narcissism and entitlement.

Whitney Grace, July 5, 2024

Google YouTube: The Enhanced Turtle Walk?

July 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I like to figure out how a leadership team addresses issues lower on the priority list. Some outfits talk a good game when a problem arises. I typically think of this as a Microsoft-type response. Security is job one. Then there’s Recall and the weird de-release of a Windows 11 update. But stuff is happening.

A leadership team decides to lead by moving even more slowly, possibly not at all. Turtles know how to win by putting one claw in front of another…. just slowly. Thanks, MSFT Copilot.

Then there are outfits who just ignore everything. I think of this as the Boeing-type of approach to difficult situations. Doors fall off, astronauts are stranded, and the FAA does its “government is run like a business” thing. But can a cash-strapped airline ground jets from a single manufacturer when the company’s jets come from one manufacturer? The jets keep flying, the astronauts are really not stranded yet, and the government runs like a business.

Google does not fit into either category. I read “Two Years after an Open Letter to YouTube, Fact-Checkers Remain Dissatisfied with the Platform’s Inaction.” The write up describes what fact-checkers asked Google YouTube to do to improve fact checking of the videos it hoses to people and kids worldwide:

Two years ago, fact-checkers from all over the world signed an open letter to YouTube with four solutions for reducing disinformation and misinformation on the platform. As they convened this year at GlobalFact 11, the world’s largest annual fact-checking summit, fact-checkers agreed there has been no meaningful change.

This suggests that Google is less dynamic than a government agency and definitely not doing the yip yap thing associated with Microsoft-type outfits. I find this interesting.

The [YouTube] channel continued to publish livestreams with falsehoods and racked up hundreds of thousands of views, Kamath [the founder of Newschecker] said.

Google YouTube is a global resource. The write up says:

When YouTube does present solutions, it focuses on English and doesn’t give a timeline for applying it to other languages, [Lupa CEO Natália] Leal said.

The turtle play perhaps?

The big assertion in the article in my opinion is:

[The] system is ‘loaded against fact-checkers’

Okay, let’s summarize. At one end of the leadership spectrum we have the talkers who go slow or do nothing. At the other end of the spectrum we have the leaders who don’t talk and allegedly retaliate when someone does talk, with the events taking place under the watchful eye of US government regulators.

The Google YouTube method involves several leadership practices:

- Pretend avoidance. Google did not attend the fact checking conference. This is the ostrich principle I think.

- Go really slow. Two years with minimal action to remove inaccurate videos.

- Don’t talk.

My hypothesis is that Google can’t be bothered. It has other issues demanding its leadership time.

Net net: Are inaccurate videos on the Google YouTube service? Will this issue be remediated? Nope. Why? Money. Misinformation is an infinite problem which requires infinite money to solve. Ergo. Just make money. That’s the leadership principle it seems.

Stephen E Arnold, July 4, 2024

Satire or Marketing: Let Smart Software Decide

July 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

What’s PhD level intelligence? In 1962, I had a required class in one of the -ologies. I vaguely remember that my classmates and I had to learn about pigeons, rats, and people who would make decisions that struck me as off the wall. The professor was named after a Scottish family from the Highlands. I do recall looking up the name and finding that it meant “crooked nose.” But the nose, as nice as it was, was nothing to the bed springs the good professor suspended from a second story window. I asked him, “What’s the purpose of the bed springs?” (None of the other students in the class cared, but I found the sight interesting.) His reply was, “I am using it as an antenna.” Okay, that is one example of PhD-level intelligence. I have encountered others, but I will not regale you with what are somewhat idiosyncratic behaviors.

The illustration demonstrates the common sense approach to problem solving. Thanks, MSFT Copilot. Chugging away on Recall and security over the holiday. Yep, I bet you are.

Why am I thinking about a story about bedsprings suspended from a second story window? I just read “ChatGPT Now Has PhD Level Intelligence, and the Poor Personal Choices to Prove It.” The write up states:

ChatGPT has become indispensable to plagiarists and spambots worldwide. Now, OpenAI is thrilled to introduce ChatGPT 5.0, the most advanced version of the popular virtual assistant to date. With groundbreaking improvements, GPT-5 is like having a doctor of philosophy right at your fingertips.

The write up (in a humorous vein I really hope) identifies some characteristics of the next big thing from Sam AI-Man, the Apple partner he hopes. The article suggests that ChatGPT 5 can cherish a saloon mug with this snappy phrase printed on the container:

Suck it up, liver.

No, I don’t want the mug, nor have I abused my liver as an undergraduate. I actually enjoyed spending time in the so-called university’s library.

The write up includes a short commentary about the ethical concerns or guardrails Sam AI-Man has on the Information Highway populated with smart vehicles and presumably less smart humans who are confident the zeros and ones don’t get scrambled:

Numerous questions have arisen regarding the ethics and legality of training ChatGPT on copyrighted text data without permission. In this latest version, however, reliance on authors’ intellectual property has been dramatically reduced. While GPT-5 started training from a knowledge base of millions of texts, it got around to reading only Frankenstein, plus maybe half of a Donna Haraway book. It basically bluffed its way through prelims by talking about “embodiment” a lot.

I get the Mary Shelley reference. But Donna Haraway forced me to ask Claude Sonnet 3.5, “Please, explain Donna Haraway’s contribution to ethical discourse.” Here’s what I received from the smart system competing with Sam AI-Man’s presumably smarter system:

Here’s Donna Haraway’s contribution to ethical discourse in three sentences:

- Donna Haraway challenged traditional ideas about what it means to be human by introducing the concept of a “cyborg” – a blend of human and machine.

- She argued that the lines between nature and culture, human and animal, and physical and non-physical are not as clear as we think, which forces us to rethink our ethical responsibilities.

- Her work encourages us to consider how technology and science affect our understanding of ourselves and our relationships with other living things, pushing us to develop new ethical frameworks for our changing world.

Thank you, Claude Sonnet 3.5! I have stated that my IQ score pegs me in the “Dumb Cod” percentile. I think Ms. Haraway is into the Ray Kurzweil and Elon Musk concept space. I know I am looking forward to nanodevices able to keep me alive for many, many years. I want to poke fun at smart software, and I quite like to think about PhD level software.

To close, I want to quote the alleged statement of a very smart person who could not remember if OpenAI used YouTube-type content to train ChatGPT. (Hey, even crooked nose remembered that he suspended the bed springs to function like an antenna.) The CTO of OpenAI allegedly said:

“If you look at the trajectory of improvement, systems like GPT-3 were maybe toddler-level intelligence… and then systems like GPT-4 are more like smart high-schooler intelligence. And then, in the next couple of years, we’re looking at PhD intelligence…” — Open AI CTO Mira Murati, in an interview with Dartmouth Engineering

I wonder if a person without a PhD can recognize “PhD intelligence”? Sure. Why not? It’s marketing.

Stephen E Arnold, July 3, 2024

Another Open Source AI Voice Speaks: Yo, Meta!

July 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The open source software versus closed source software debate demonstrates ebbs and flows. Like the “go fast” with AI and “go slow” with AI, strong opinions suggest that big money and power are swirling like the storms on a weather app for Oklahoma in tornado season. The most recent EF5 is captured in “Zuckerberg Disses Closed-Source AI Competitors As Trying to Create God.” The US government seems to be concerned about open source smart software finding its way into the hands of those who are not fans of George Washington-type thinking.

Which AI philosophy will win the big pile of money? Team Blue representing the Zuck? Or, the rag tag proprietary wizards? Thanks, MSFT Copilot. You are into proprietary, aren’t you?

The “move fast and break things” personage of Mark Zuckerberg is into open source smart software. In the write up, he allegedly said in a YouTube bit:

“I don’t think that AI technology is a thing that should be kind of hoarded and … that one company gets to use it to build whatever central, single product that they’re building,” Zuckerberg said in a new YouTube interview with Kane Sutter (@Kallaway).

The write up includes this passage:

In the conversation, Zuckerberg said there needs to be a lot of different AIs that get created to reflect people’s different interests.

One interesting item in the article, in my opinion, is this:

“You want to unlock and … unleash as many people as possible trying out different things,” he continued. “I mean, that’s what culture is, right? It’s not like one group of people getting to dictate everything for people.”

But the killer Meta vision is captured in this passage:

Zuckerberg said there will be three different products ahead of convergence: display-less smart glasses, a heads-up type of display and full holographic displays. Eventually, he said that instead of neural interfaces connected to their brain, people might one day wear a wristband that picks up signals from the brain communicating with their hand. This would allow them to communicate with the neural interface by barely moving their hand. Over time, it could allow people to type, too. Zuckerberg cautioned that these types of inputs and AI experiences may not immediately replace smartphones, though. “I don’t think, in the history of technology, the new platform — it usually doesn’t completely make it that people stop using the old thing. It’s just that you use it less,” he said.

In short, the mobile phone is going down, not tomorrow, but definitely to the junk drawer.

Several observations which I know you are panting to read:

- Never underestimate making something small or re-invented as a different form factor. The Zuck might be “right.”

- The idea of “unleash” is interesting. What happens if employees at WhatsApp unleash themselves? How will the Zuck construct react? Like the Google? Something new like blue chip consulting firms replacing people with smart software? “Unleash” can be interpreted in different ways, but I am thinking of turning loose a pack of hyenas. The Zuck may be thinking about eager kindergartners. Who knows?

- The Zuck’s position is different from the government officials who are moving toward restrictions on “free and open” smart software. Those hallucinating large language models can be repurposed into smart weapons. Close enough for horseshoes with enough RDX may do the job.

Net net: The Zuck is an influential and very powerful information channel owner. “Unleash” what? Hungry predators or those innovating children? Perhaps neither. But as OpenAI seems to be closing, the Zuck AI is into opening. Ah, uncertainty is unfolding before my eyes in real time.

Stephen E Arnold, July 3, 2024

Can Big Tech Monopolies Get Worse?

July 3, 2024

Monopolies are bad. They’re horrible for consumers because of high prices, exploitation, and control of resources. They also kill innovation, control markets, and influence politics. A monopoly is only good when it is a reference to the classic board game (even that’s questionable because the game is known to ruin relationships). Legendary tech and fiction writer Cory Doctorow explains that technology companies want to maintain their stranglehold on the economy, industry, and world in an article on the Electronic Frontier Foundation (EFF): “Want to Make Big Tech Monopolies Even Worse? Kill Section 230.”

Doctorow makes a humorous observation, referencing Dante, that there’s a circle in Hell worse than being forced to choose a side in a meaningless online flame war. What’s that circle? It’s being threatened with a lawsuit for refusing to comply with, or for complying with, one party over another. EFF protects civil liberties on the Internet and digital world. It’s been around since 1990, so the EFF team is very familiar with poor behavior that plagues the Internet. Their first hire was the man who coined Godwin’s Law.

EFF loves Section 230 because it protects people who run online services from being sued by their users. Lawsuits are horrible, time-consuming, and expensive. The Internet is chock full of people who will sue at the stroke of a keyboard. There’s a potential bill that would kill Section 230:

“That’s why we were so alarmed to see a bill introduced in the House Energy and Commerce Committee that would sunset Section 230 as of December 31, 2025, with no provision to protect online service providers from being conscripted into their users’ online disputes and the legal battles that arise from them.

Homely places on the internet aren’t just a curiosity anymore, nor are they merely a hangover from the Web 1.0 era.

In an age of resurgent anti-monopoly activism, small online communities, either standing on their own, or joined in loose “federations,” are the best chance we have to escape Big Tech’s relentless surveillance and clumsy, unaccountable control.”

If Section 230 is destroyed, it will pit big tech companies with their deep pockets against the average user. Big Tech could sue whoever they wanted and it would allow bad actors, including scammers, war criminals, and dictators, to silence their critics. It would also prevent any alternatives to big tech.

So big tech could get worse, although it’s still very bad: kids addicted to screens, misinformation, CSAM, privacy violations, and monopolistic behavior. Maybe we should roll over and hide beneath a rock with an Apple tracker stuck to it, of course.

Whitney Grace, July 3, 2024

Some Tension in the Datasphere about Artificial Intelligence

June 28, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I generally try to avoid profanity in this blog. I am mindful of Google’s stopwords. I know there are filters running to protect those younger than I from frisky and inappropriate language. Therefore, I will cite the two articles and then convert the profanity to a suitably sanitized form.

The first write up is “I Will F…ing Piledrive You If You Mention AI Again”. Sorry, like many other high-technology professionals I prevaricated and dissembled. I have edited the F word to be less superficially offensive. (One simply cannot trust high-technology types, can you? I am not Thomson Reuters obviously.) The premise of this write up is that smart software is over-hyped. Here’s a passage I found interesting:

Unless you are one of a tiny handful of businesses who know exactly what they’re going to use AI for, you do not need AI for anything – or rather, you do not need to do anything to reap the benefits. Artificial intelligence, as it exists and is useful now, is probably already baked into your businesses software supply chain. Your managed security provider is probably using some algorithms baked up in a lab software to detect anomalous traffic, and here’s a secret, they didn’t do much AI work either, they bought software from the tiny sector of the market that actually does need to do employ data scientists.

I will leave it to you to ponder the wisdom of these words. I, for instance, do not know exactly what I am going to do until I do something, fiddle with it, and either change it up or trash it. You and most AI enthusiasts are probably different. That’s good. I envy your certitude. The author of the first essay is not gentle; he wants to piledrive you if you talk about smart software. I do not advocate violence under any circumstances. I can tolerate baloney about smart software. The piledriver person has hate in his heart. You have been warned.

The second write up is “ChatGPT Is Bullsh*t,” and it is an article published in SpringerLink, not a personal blog. Yep, bullsh*t as a term in an academic paper. Keep in mind, please, that Stanford University’s president and some Harvard wizards engaged in the bullsh*t business as part of their alleged making up data. Who needs AI when humans are perfectly capable of hallucinating, but I digress?

I noted this passage in the academic write up:

So perhaps we should, strictly, say not that ChatGPT is bullshit but that it outputs bullshit in a way that goes beyond being simply a vector of bullshit: it does not and cannot care about the truth of its output, and the person using it does so not to convey truth or falsehood but rather to convince the hearer that the text was written by a interested and attentive agent.

Please, read the 10 page research article about bullsh*t, soft bullsh*t, and hard bullsh*t. Form your own opinion.

I have now set the stage for some observations (probably unwanted and deeply disturbing to some in the smart software game).

- Artificial intelligence is a new big thing, and the hyperbole, misdirection, and outright lying, like my saying I would use forbidden language in this essay, are irrelevant. The object of the new big thing is to make money, get power, maybe become an influencer on TikTok.

- The technology seems to have flowered in January 2023 when Microsoft said, “We love OpenAI. It’s a better Clippy.” The problem is that it is now June 2024 and the advances have been slow and steady. This means that after a half century of research, the AI revolution is working hard to keep the hypemobile in gear. PR is quick; smart software improvement less speedy.

- The ripples the new big thing has sent across the datasphere attenuate the farther one is from the January 2023 marketing announcement. AI fatigue is now a thing. I think the hostility is likely to increase because real people are going to lose their jobs. Idle hands are the devil’s playthings. Excitement looms.

Net net: I think the profanity reveals the deep disgust some pundits and experts have for smart software, the companies pushing silver bullets into an old and rusty firearm, and an instinctual fear of the economic disruption the new big thing will cause. Exciting stuff. Oh, I am not stating a falsehood.

Stephen E Arnold, June 23, 2024

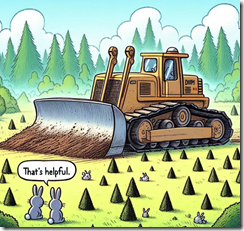

Can the Bezos Bulldozer Crush Temu, Shein, Regulators, and AI?

June 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The question, to be fair, should be, “Can the Bezos-less bulldozer crush Temu, Shein, Regulators, Subscriptions to Alexa, and AI?” The article, which appeared in the “real” news online service Venture Beat, presents an argument suggesting that the answer is, “Yes! Absolutely.”

Thanks MSFT Copilot. Good bulldozer.

The write up “AWS AI Takeover: 5 Cloud-Winning Plays They’re [sic] Using to Dominate the Market” depends upon an Amazon Big Dog named Matt Wood, VP of AI products at AWS. The article strikes me as something drafted by a small group at Amazon and then polished to PR perfection. The reasons the bulldozer will crush Google, Microsoft, Hewlett Packard’s on-premises play, and the keep-on-searching IBM Watson, among others, are:

- Covering the numbers or logo of the AI companies in the “game”; for example, Anthropic, AI21 Labs, and other whale players

- Hitting up its partners, customers, and friends to get support for the Amazon AI wonderfulness

- Engineering AI to be itty bitty pieces one can use to build a giant AI solution capable of dominating D&B industry sectors like banking, energy, commodities, and any other multi-billion sector one cares to name

- Skipping the Google folly of dealing with consumers. Amazon wants the really big contracts with really big companies, government agencies, and non-governmental organizations.

- Amazon is just better at security. Those leaky S3 buckets are not Amazon’s problem. The customers failed to use Amazon’s stellar security tools.

Did these five points convince you?

If you did not embrace the spirit of the bulldozer, the Venture Beat article states:

Make no mistake, fellow nerds. AWS is playing a long game here. They’re not interested in winning the next AI benchmark or topping the leaderboard in the latest Kaggle competition. They’re building the platform that will power the AI applications of tomorrow, and they plan to power all of them. AWS isn’t just building the infrastructure, they’re becoming the operating system for AI itself.

Convinced yet? Well, okay. I am not on the bulldozer yet. I do hear its engine roaring and I smell the no-longer-green emissions from the bulldozer’s data centers. Also, I am not sure Google, IBM, and Microsoft are ready to roll over and let the bulldozer crush them into the former rain forest’s red soil. I recall researching Sagemaker which had some AI-type jargon applied to that “smart” service. Ah, you don’t know Sagemaker? Yeah. Too bad.

The rather positive-leaning Amazon write up points out that as nifty as those five points about Amazon’s supremacy in the AI jungle are, the company has vision. Okay, it is not the customer first idea from 1998 or so. But it is interesting. Amazon will have infrastructure. Amazon will provide model access. (I want to ask, “For how long?” but I won’t.) And Amazon will have app development.

The article includes a table providing detail about these three legs of the stool in the bulldozer’s cabin. There is also a rundown of Amazon’s recent media- and prospect-directed announcements. Too bad the article does not include hyperlinks to these documents. Oh, well.

And after about 3,300 words about Amazon, the article includes about 260 words about Microsoft and Google. That’s a good balance. Too bad IBM. You did not make the cut. And HP? Nope. You did not get an “Also participated” certificate.

Net net: Quite a document. And no mention of Sagemaker. The Bezos-less bulldozer just smashes forward. Success is in crushing. Keep at it. And that “they” in the Venture Beat article title: Shouldn’t “they” be an “it”?

Stephen E Arnold, June 27, 2024