Content Conversion: Search and AI Vendors Downplay the Task

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Marketers and PR people often have degrees in political science, communications, or art history. This academic foundation means that some of these professionals can listen to a presentation and struggle to figure out what’s a horse, what’s horse feathers, and what’s horse output.

Consequently, many organizations engaged in “selling” enterprise search, smart software, and fusion-capable intelligence systems downplay or just fib about how darned easy it is to take “content” and shove it into the Fancy Dan smart software. The pitch goes something like this: “We have filters that can handle 90 percent of the organization’s content. Word, PowerPoint, Excel, Portable Document Format (PDF), HTML, XML, and data from any system that can export tab delimited content. Just import and let our system increase your ability to analyze vast amounts of content. Yada yada yada.”

Thanks, Midjourney. Good enough.

The problem is that real life content is often a problem. I am not going to trot out my list of content problem children. Instead I want to ask a question: If dealing with content is a slam dunk, why do companies like IBM and Oracle sustain specialized tools to convert Content Type A into Content Type B?

The answer is that content processing is an essential step because [a] structured and unstructured content can exist in different versions. Figuring out the one that is least wrong and most timely is tricky. [b] Humans love mobile devices, laptops, home computers, photos, videos, and audio. Furthermore, how does one get those types of content from a source not located in an organization’s office (assuming it has one) into the content processing system? The answer is, “Money, time, persuasion, and knowledge of what employee has what.” Finding a unicorn at the Kentucky Derby is more likely. [c] Specialized systems employ lingo like “Export as” and provide some file types. Yeah. The problem is that the output may not contain everything that is in the specialized software program. Examples range from computational chemistry systems to those nifty AutoCAD type drawing systems to slick electronic trace routing solutions to DaVinci Resolve video systems which can happily pull “content” from numerous places on a proprietary network set up. Yeah, no problem.

Evidence of how big this content conversion issue is appears in the IBM write up “A New Tool to Unlock Data from Enterprise Documents for Generative AI.” If the content conversion work is trivial, why is IBM wasting time and brainpower figuring out something like making a PowerPoint file smart software friendly?

The reason is that as big outfits get “into” smart software, the people working on the project find that the exception folder gets filled up. Some documents and content types don’t convert. If a boss asks, “How do we know the data in the AI system are accurate?”, the hapless IT person looking at the exception folder either lies or says in a professional voice, “We don’t have a clue?”

IBM’s write up says:

IBM’s new open-source toolkit, Docling, allows developers to more easily convert PDFs, manuals, and slide decks into specialized data for customizing enterprise AI models and grounding them on trusted information.

But one piece of software cannot do the job. That’s why IBM reports:

The second model, TableFormer, is designed to transform image-based tables into machine-readable formats with rows and columns of cells. Tables are a rich source of information, but because many of them lie buried in paper reports, they’ve historically been difficult for machines to parse. TableFormer was developed for IBM’s earlier DeepSearch project to excavate this data. In internal tests, TableFormer outperformed leading table-recognition tools.

Why are these tools needed? Here’s IBM’s rationale:

Researchers plan to build out Docling’s capabilities so that it can handle more complex data types, including math equations, charts, and business forms. Their overall aim is to unlock the full potential of enterprise data for AI applications, from analyzing legal documents to grounding LLM responses on corporate policy documents to extracting insights from technical manuals.

Based on my experience, the paragraph translates as, “This document conversion stuff is a killer problem.”

When you hear a trendy enterprise search or enterprise AI vendor talk about the wonders of its system, be sure to ask about document conversion. Here are a few questions to put the spotlight on what often becomes a black hole of costs:

- If I process 1,000 pages of PDFs, mostly text but with some charts and graphs, what’s the error rate?

- If I process 1,000 engineering drawings with embedded product and vendor data, what percentage of the content is parsed for the search or AI system?

- If I process 1,000 non text objects like videos and iPhone images, what is the time required and the metadata accuracy for the converted objects?

- Where do unprocessable source objects go? An exception folder, the trash bin, or to my in box for me to fix up?
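To make the exception-folder question concrete, here is a minimal Python sketch of a batch conversion run that records what converted, what did not, and why. Everything in it is an assumption for illustration: `convert_batch`, `ConversionReport`, and `fake_pdf_converter` are hypothetical names, and the "converter" is a stand-in, not any vendor's filter or IBM's Docling.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class ConversionReport:
    """Tracks which source objects converted and which landed in exceptions."""
    converted: List[str] = field(default_factory=list)
    exceptions: Dict[str, str] = field(default_factory=dict)  # name -> reason

    @property
    def failure_rate(self) -> float:
        total = len(self.converted) + len(self.exceptions)
        return len(self.exceptions) / total if total else 0.0


def convert_batch(names: Iterable[str],
                  converters: Dict[str, Callable[[str], str]]) -> ConversionReport:
    """Run each file through the converter registered for its extension."""
    report = ConversionReport()
    for name in names:
        ext = name.rsplit(".", 1)[-1].lower() if "." in name else ""
        converter = converters.get(ext)
        if converter is None:
            # No filter for this content type: straight to the exception folder.
            report.exceptions[name] = f"no converter for '.{ext}'"
            continue
        try:
            converter(name)  # a real pipeline would store the converted output
            report.converted.append(name)
        except Exception as exc:
            report.exceptions[name] = f"{type(exc).__name__}: {exc}"
    return report


def fake_pdf_converter(name: str) -> str:
    """Hypothetical stand-in: pretends scanned PDFs have no text layer."""
    if "scanned" in name:
        raise ValueError("image-only PDF, no text layer")
    return f"text of {name}"


if __name__ == "__main__":
    batch = ["policy.pdf", "scanned_invoice.pdf", "bracket.dwg", "notes.pdf"]
    report = convert_batch(batch, {"pdf": fake_pdf_converter})
    print(f"failure rate: {report.failure_rate:.0%}")
    for name, reason in report.exceptions.items():
        print(f"  {name}: {reason}")
```

The point of the sketch is the report, not the converter: a vendor that cannot produce the equivalent of `report.exceptions` for a test batch probably cannot answer the questions above either.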

Have fun asking questions.

Stephen E Arnold, November 19, 2024

The Internet as a Library and Archive? Ho Ho Ho

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I know that I find certain Internet-related items a knee slapper. Here’s an example: “Millions of Research Papers at Risk of Disappearing from the Internet.” A number of individuals, young at heart and allegedly-informed seniors alike, think the “Internet” is a library or better yet an archive like the Library of Congress’ collection of “every” book.

A person deleting data with some degree of fierceness. Yep, thanks MSFT Copilot. After three tries, this is the best of the lot for a prompt asking for an illustration of data being deleted from a personal computer. Not even good enough but I like the weird orange coloration.

Here are some basics of how “Internet” services work:

- Every year the cost of storage goes up for old and usually never or rarely accessed data. A bean counter calls a meeting and asks, “Do we need to keep paying for ping, power, and pipes?” Someone points out, “Usage of X percent of the data described as ‘old’ is 0.0003 percent,” or whatever number the bright young sprout has guess-timated. The decision is, as you might guess, dump the old files and reduce other costs immediately.

- Doing “data” or “online” is expensive, and the costs associated with each are very difficult, if not impossible, to control. Neither government agencies, non-governmental outfits, the United Nations, a library in Cleveland, nor the estimable Harvard University has sufficient money to make information available or keep it at hand. Thus, stuff disappears.

- Well-intentioned outfits like the Internet Archive or Project Gutenberg are in the same accountant ink pot. Not every Web site is indexed and archived comprehensively. Not every book can be digitized and converted to a format someone thinks will be “forever.” As a result, one has a better chance of discovering new information browsing through donated manuscripts at the Vatican Library than running an online query.

- If something unique is online “somewhere,” that item may be unfindable. Hey, what about Duke University’s collection of “old” books from the 17th century? Who knew?

- Will a government agency archive digital content in a comprehensive manner? Nope.

The article about “risks of disappearing” is a hoot. Notice this passage:

“Our entire epistemology of science and research relies on the chain of footnotes,” explains author Martin Eve, a researcher in literature, technology and publishing at Birkbeck, University of London. “If you can’t verify what someone else has said at some other point, you’re just trusting to blind faith for artefacts that you can no longer read yourself.”

I like that word “epistemology.” Just one small problem: Trust. Didn’t the president of Stanford University have an opportunity to find his future elsewhere due to some data wonkery? Google wants to earn trust. Other outfits don’t fool around with trust; these folks gather data, exploit it, and resell it. Archiving and making it findable to a researcher or law enforcement? Not without friction, lots and lots of friction. Why verify? Estimates of non-reproducible research range from 15 percent to 40 percent of scientific, technical, and medical peer reviewed content. Trust? Hello, it’s time to wake up.

Many estimate how much new data are generated each year. I would suggest that data falling off the back end of online systems has been an active process. The first time an accountant hears the IT people say, “We can just roll off the old data and hold storage stable” is right up there with avoiding an IRS audit, finding a life partner, and billing an old person for much more than the accounting work is worth.

After 25 years, there is “risk.” Wow.

Stephen E Arnold, March 8, 2024

Big Wizards Discover What Some Autonomy Users Knew 30 Years Ago. Remarkable, Is It Not?

April 14, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

What happens if one assembles a corpus, feeds it into a smart software system, and turns it on after some tuning and optimizing for search or a related process like indexing? After a few months, the precision and recall of the system degrade. What’s the fix? Easy. Assemble a corpus. Feed it into the smart software system. Turn it on after some tuning and optimizing. The approach works and would keep the Autonomy neuro linguistic programming system working quite well.

Not only was Autonomy ahead of the information retrieval game in the late 1990s, I have made the case that its approach was one of the enablers for the smart software in use today at outfits like BAE Systems.

There were a couple of drawbacks with the Autonomy approach. The principal one was the expensive and time intensive job of assembling a training corpus. The narrower the domain, the easier this was. The broader the domain — for instance, general business information — the more resource intensive the work became.

The second drawback was that as new content was fed into the black box, the internals recalibrated to accommodate new words and phrases. Because the initial training set did not know about these words and phrases, the precision and recall from the point of view of the user would degrade. From the engineering point of view, the Autonomy system was behaving in a known, predictable manner. The drawback was that users did not understand what I call “drift”, and the licensees’ accountants did not want to pay for the periodic and time consuming retraining.
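One crude way to put a number on this kind of “drift” is to measure how much of the incoming content uses terms the training corpus never contained. The Python sketch below does only that. It is an assumption-laden proxy, not a description of how Autonomy’s internals worked, and `build_vocabulary` and `unseen_term_rate` are hypothetical helper names.

```python
import re
from typing import Iterable, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocabulary(corpus: Iterable[str]) -> Set[str]:
    """Collect every term that appears in the training corpus."""
    vocab: Set[str] = set()
    for doc in corpus:
        vocab.update(tokenize(doc))
    return vocab


def unseen_term_rate(vocab: Set[str], new_docs: Iterable[str]) -> float:
    """Fraction of tokens in new content that the training set never saw."""
    tokens = [t for doc in new_docs for t in tokenize(doc)]
    if not tokens:
        return 0.0
    unseen = sum(1 for t in tokens if t not in vocab)
    return unseen / len(tokens)


if __name__ == "__main__":
    training = ["enterprise search indexes documents",
                "search precision and recall"]
    incoming = ["chatbots hallucinate documents"]
    rate = unseen_term_rate(build_vocabulary(training), incoming)
    print(f"unseen term rate: {rate:.2f}")
```

A rising rate over successive months would be one signal that retraining is due. The threshold at which the accountants must be asked for money is a judgment call the sketch does not make.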

What’s changed since the late 1990s? First, there are methods — not entirely satisfactory from my point of view — like the Snorkel-type approach. A system is trained once and then it uses methods that do retraining without expensive subject matter experts and massive time investments. The second method is the use of ChatGPT-type approaches which get trained on large volumes of content, not the comparatively small training sets feasible decades ago.

Are there “drift” issues with today’s whiz bang methods?

Yep. For supporting evidence, navigate to “91% of ML Models Degrade in Time.” The write up reports that big brains at “MIT, Harvard, The University of Monterrey, and other top institutions” learned about model degradation. On one hand, that’s good news. A bit of accuracy about magic software is helpful. On the other hand, the failure of big brain institutions to note the problem and then look into it is troubling. I am not going to discuss why experts don’t know what high profile advanced systems actually do. I have done that elsewhere in my monographs and articles.

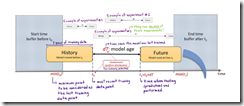

I found this “explanatory diagram” in the write up interesting:

What was the authors’ conclusion other than not knowing what was common knowledge among Autonomy-type system users in the 1990s?

You need to retrain the model! You need to embrace low cost Snorkel-type methods for building training data! You have to know what subject matter experts know even though SMEs are an endangered species!

I am glad I am old and heading into what Dylan Thomas called “that good night.” Why? The “drift” is just one obvious characteristic. There are other, more sinister issues just around the corner.

Stephen E Arnold, April 14, 2023

A Legal Information Truth Inconvenient, Expensive, and Dangerous

December 5, 2022

The Wall Street Journal published “Justice Department Prosecutors Swamped with Data As Cases Leave Long Digital Trails.” The write up addressed a problematic reality without craziness. The basic idea is that prosecutors struggle with digital information. The consequences are higher costs and in some cases allowing potentially problematic individuals to go to Burger King or corporate practices to chug along with felicity.

The article states:

Federal prosecutors are swamped by data, as the way people communicate and engage in behavior scrutinized by investigators often leaves long and complicated digital trails that can outpace the Justice Department’s technology.

What’s the fix? This is a remarkable paragraph:

The Justice Department has been working on ways to address the problem, including by seeking additional funding for electronic-evidence technology and staffing for US attorney’s offices. It is also providing guidance in an annual training for prosecutors to at times collect less data.

Okay, more money which may or may not be spent in a way to address the big data issues, more lawyers (hopefully skilled in manipulating content processing systems functions), annual training, and gather less information germane to a legal matter. I want to mention that misinformation, reformation of data, and weaponized data are apparently not present in prosecutors’ data sets or not yet recognized as a problem by the Justice Department.

My response to this interesting article includes:

- This is news? The issue has been problematic for many years. The vendors of specialized systems to manage evidence, index and make searchable content from disparate sources, and output systems which generate a record of what lawyer accessed what and when are asserting their systems can handle this problem. Obviously licensees discover that the systems either don’t work like the demos or cannot handle large flows of disparate content.

- The legal industry is not associated with groundbreaking information innovation. I may be biased, but I think of lawyers knowing more about billing for their time than making use of appropriate, reliable technology for managing evidence. Excel timesheets are one thing. Dark Web forum content, telephone intercepts, and context free email and chat messages are quite different. Annual training won’t change the situation. The problem has to be addressed by law schools and lawyer certification systems. Licensing a super duper search system won’t deal with the problem no matter what consultants, vendors, and law professors say.

- The issue of “big data” is real, particularly when there are so many content objects available to a legal team, its consultants, and the government professionals working on a case or a particular matter. It is just easier to gather and then try to make sense of the data. When the necessary information is not available, time or money runs out and everyone moves on. Big data becomes a process that derails some legal proceedings.

My view is that similar examples of “data failure” will surface. The meltdown of crypto? Yes, too much data. The downstream consequences of certain medical products? Yes, too much data and possibly the subtle challenge of data shaping by certain commercial firms? The interlocks among suppliers of electrical components? Yes, too much data and possibly information weaponization by parties to a legal matter?

When online meant keyword indexing and search, old school research skills and traditional data collection were abundant. Today, short cuts and techno magic are daily fare.

It is time to face reality. Some technology is useful, but human expertise and judgment remain essential. Perhaps that will be handled in annual training, possibly on a cruise ship with colleagues? A vendor conference offering continuing education credits might be a more workable solution than smart software with built in workflow.

Stephen E Arnold, December 5, 2022

Amazon and Fake Reviews: Ah, Ha, Fake Reviews Exist

September 5, 2022

I read “Amazon’s Delay for the Rings of Power Reviews on Prime Video Part of New Initiative to Filter Out Trolls.” The write up makes reasonably official the factoid that Amazon reviews are, in many cases, more fanciful than the plot of Rings of Power.

The write up states:

The series appears to have been review bombed — when trolls flood intentionally negative reviews for a show or film — on other sites like Rotten Tomatoes, where it has an 84% rating from professional critics, but a 37% from user-submitted reviews. “The Rings of Power” has been fending off trolls for months, especially ones who take issue with the decision to cast actors of color as elves, dwarves, harfoots and other folk of Tolkien’s fictional Middle-earth.

Amazon wants to be a good shepherd for truth. The write up says:

Amazon’s new initiative to review its reviews, however, is designed to weed out ones that are posted in bad faith, deadening their impact. In the case of “A League of Their Own,” it appears to have worked: To date, the show has an average 4.3 out of 5 star rating on Prime Video, with 80% of users rating the show with five stars and 14% with one star.

Interesting. My view is that Amazon hand waves about fake reviews except for those which could endanger its own video product. Agree with me or not, Amazon is revealing that fake reviews are an issue. What about those reviews for Chinese shirts which appear to have been fabricated for folks in the seventh grade? SageMaker, what’s up?

Stephen E Arnold, September 12, 2022

Bots Are Hot

September 2, 2022

Developer Michael I Lewis had noble intentions when he launched searchmysite.net in 2020. Because Google and other prominent search engines have become little more than SEO and advertising ambushes, he worked evenings and weekends to create a search engine free from both ads and search engine optimization. The site indexes only user-submitted personal and independent sites and leaves content curation up to its community. Naturally, the site also emphasizes privacy and is open source. To keep the lights on, Lewis charges a modest listing fee. Alas, even this principled platform has failed to escape the worst goblins of the SEO field. Lewis laments, “Almost All Searches on my Independent Search Engine Are Now from SEO Spam Bots.”

SEO spam lowers the usual SEO trickery into the realm of hacking. Its black hat practitioners exploit weaknesses, like insecure passwords or out-of-date plugins, in any website they can penetrate and plant their own keywords, links, and other dubious content. That spam then rides its target site up the search rankings as long as it can, ripping off marks along the way. If the infiltration goes on for long, the reputation and ranking of the infected website will tank, leaving its owner wondering what went awry. The results can be devastating for affected businesses.

In spring of 2022, Lewis detected a suspicious jump in non-human visitors on searchmysite.net. He writes:

“I’ve always had some activity from bots, but it has been manageable. However, in mid-April 2022, bot activity started to increase dramatically. I didn’t notice at first because the web analytics only shows real users, and the unusual activity could only be seen by looking at the server logs. I initially suspected that it was another search engine scraping results and showing them on their results page, because the IP addresses, user agents and search queries were all different. I then started to wonder if it was a DDoS attack, as the scale of the problem and the impact it was having on the servers (and therefore running costs) started to become apparent. After some deeper investigation, I noticed that most of the search queries followed a similar pattern. … It turns out that these search patterns are ‘scraping footprints’. These are used by the SEO practitioners, when combined with their search terms, to search for URLs to target, implying that searchmysite.net has been listed as a search engine in one or more SEO tools like ScrapeBox, GSA SEO or SEnuke. It is hard to imagine any legitimate white-hat SEO techniques requiring these search results, so I would have to imagine it is for black-hat SEO operations.”
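A rough Python sketch of how one might spot such footprints in logged search queries follows. It assumes footprints are fixed phrases embedded in the query text. The phrases in `HYPOTHETICAL_FOOTPRINTS` are invented placeholders, not the patterns Lewis found (the excerpt above elides those), and the function names are illustrative only.

```python
from collections import Counter
from typing import Iterable, List, Optional, Tuple

# Invented placeholder phrases; real footprint lists ship with the SEO tools.
HYPOTHETICAL_FOOTPRINTS = (
    "powered by examplecms",
    "leave a reply",
    "add new comment",
)


def match_footprint(query: str,
                    footprints: Tuple[str, ...] = HYPOTHETICAL_FOOTPRINTS
                    ) -> Optional[str]:
    """Return the first footprint phrase found in the query, if any."""
    lowered = query.lower()
    for footprint in footprints:
        if footprint in lowered:
            return footprint
    return None


def summarize_queries(queries: Iterable[str]) -> Tuple[float, List[Tuple[str, int]]]:
    """Return the share of flagged queries and a count per footprint."""
    counts: Counter = Counter()
    total = 0
    for query in queries:
        total += 1
        footprint = match_footprint(query)
        if footprint is not None:
            counts[footprint] += 1
    flagged = sum(counts.values())
    share = flagged / total if total else 0.0
    return share, counts.most_common()


if __name__ == "__main__":
    logged = [
        '"powered by examplecms" garden tools',
        "Leave a Reply cheap loans",
        "history of typewriters",
    ]
    share, per_footprint = summarize_queries(logged)
    print(f"flagged share: {share:.0%}")
    print(per_footprint)
```

Substring matching is deliberately simple. Because the IP addresses and user agents varied in Lewis’ logs, the query text is the only signal this sketch relies on.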

Meanwhile, Lewis’ site has seen very little traffic from actual humans. Though it might be tempting to accuse major search engines of deliberately downplaying the competition, he suspects the site is simply drowning in a sea of SEO spam. Are real people browsing the Web anymore, as opposed to lapping up whatever social media sites choose to dish out? A few, but they are increasingly difficult to detect within the crowd of bots looking to make a buck.

Cynthia Murrell, September 2, 2022

Scraping By: A Winner Business Model

May 23, 2022

Will Microsoft-owned LinkedIn try, try, try again? The platform’s latest attempt to protect its users’ data from being ransacked has been thwarted, TechCrunch reveals in, “Web Scraping Is Legal, US Appeals Court Reaffirms.” The case reached the Supreme Court last year, but SCOTUS sent it back down to the Ninth Circuit Court of Appeals for a re-review. That court reaffirmed its original finding: scraping publicly accessible data is not a violation of the decades-old Computer Fraud and Abuse Act (CFAA). It is a decision to celebrate or to lament, depending on one’s perspective. Though a threat to the privacy of those who use social media and other online services, the practice is integral to many who preserve, analyze, and report information. Writer Zack Whittaker explains:

“The Ninth Circuit’s decision is a major win for archivists, academics, researchers and journalists who use tools to mass collect, or scrape, information that is publicly accessible on the internet. Without a ruling in place, long-running projects to archive websites no longer online and using publicly accessible data for academic and research studies have been left in legal limbo. But there have been egregious cases of web scraping that have sparked privacy and security concerns. Facial recognition startup Clearview AI claims to have scraped billions of social media profile photos, prompting several tech giants to file lawsuits against the startup. Several companies, including Facebook, Instagram, Parler, Venmo and Clubhouse have all had users’ data scraped over the years. The case before the Ninth Circuit was originally brought by LinkedIn against Hiq Labs, a company that uses public data to analyze employee attrition. LinkedIn said Hiq’s mass web scraping of LinkedIn user profiles was against its terms of service, amounted to hacking and was therefore a violation of the CFAA.”

The Ninth Circuit disagreed. Twice. In the latest decision, the court pointed to last year’s Supreme Court ruling which narrowed the scope of the CFAA to those who “gain unauthorized access to a computer system,” as opposed to those who simply exceed their authorization. A LinkedIn spokesperson expressed disappointment, stating the platform will “continue to fight” for its users’ rights over their data. Stay tuned.

Cynthia Murrell, May 23, 2022

UK Bill Would Require Age Verification

February 25, 2022

It might seem like a no-brainer—require age verification to protect children from adult content wherever it may appear online. But The Register insists it is not so simple in, “UK.gov Threatens to Make Adults Give Credit Card Details for Access to Facebook or TikTok.” The UK’s upcoming Online Safety Bill will compel certain websites to ensure users are 18 or older, a process often done using credit card or other sensitive data. Though at first the government vowed this requirement would only apply to dedicated porn sites, a more recent statement from the Department for Digital, Culture, Media, and Sport indicates social media companies will be included. The statement notes research suggests such sites are common places for minors to access adult material.

Writer Gareth Corfield insists the bill will not even work because teenagers are perfectly capable of using a VPN to get around age verification measures. Meanwhile, adults following the rules will have to share sensitive data with third-party gatekeepers just to keep up with friends and family on social media. Then there is the threat to encryption, which would have to be discontinued to enable the bill’s provision for scanning social media posts. Civil liberties groups have expressed concern, just as they did the last time around. Corfield observes:

“Prior efforts for mandatory age verification controls were originally supposed to be inserted into Digital Economy Act but were abandoned in 2019 after more than one delay. At that time, the government had designated the British Board of Film Classification, rather than Ofcom, as the age verification regulator. In 2018, it estimated that legal challenges to implementing the age check rules could cost it up to £10m in the first year alone. As we pointed out at the time, despite what lawmakers would like to believe – it’s not a simple case of taking offline laws and applying them online. There are no end of technical and societal issues thrown up by asking people to submit personal details to third parties on the internet. … The newer effort, via the Online Safety Bill, will possibly fuel Britons’ use of VPNs and workarounds, which is arguably equally as risky: free VPNs come with a lot of risks and even paid products may not always work as advertised.”

So if this measure is not viable, what could be the solution to keeping kids away from harmful content? If only each child could be assigned one or more adults responsible for what their youngsters access online. We could call them “caregivers,” “guardians,” or “parents,” perhaps.

Cynthia Murrell, February 25, 2022

Coalesce: Tackling the Bottleneck Few Talk About

February 1, 2022

Coalesce went stealth, the fancier and more modern techno slang for “going dark,” to work on projects in secret. The company has returned to the light, says Crowd Fund Insider, with a robust business plan and product, plus loads of funding: “Coalesce Debuts From Stealth, Attracts $5.92M For Analytics Platform.”

Coalesce is run by a former Oracle employee and it develops products and services similar to Oracle, but with a MarkLogic spin. That is one way to interpret how Coalesce announced its big return with its Coalesce Data Transformation platform that offers modeling, cleansing, governance, and documentation of data with analytical efficiency and flexibility. Do not forget that 11.2 Capital and GreatPoint Ventures raised $5.92 million in seed funding for the new data platform. Coalesce plans to use the funding for engineering functions, developing marketing strategy, and expanding sales.

Coalesce noticed that there is a weak link between organizations’ cloud analytics and actively making use of data:

“‘The largest bottleneck in the data analytics supply chain today is transformations. As more companies move to the cloud, the weaknesses in their data transformation layer are becoming apparent,’ said Armon Petrossian, the co-founder and CEO of Coalesce. ‘Data teams are struggling to keep up with the demands from the business, and this problem has only continued to grow with the volumes and complexity of data combined with the shortage of skilled people. We are on a mission to radically improve the analytics landscape by making enterprise-scale data transformations as efficient and flexible as possible.’”

Coalesce might be duplicating Oracle and MarkLogic, but if they have discovered a niche market in cloud analytics then they are about to rocket from their stealth. Hopefully the company will solve the transformation problem instead of issuing marketing statements as many other firms do.

Whitney Grace, February 1, 2022

Anonymized Location Data: an Oxymoron?

May 13, 2020

Location data. To many the term sounds innocuous, boring really. Perhaps that is why society has allowed apps to collect and sell it with no significant regulation. An engaging (and well-illustrated) piece from Norway’s NRK News, “Revealed by Mobile,” shares the minute details journalists were able to put together about one citizen from location data purchased on the open market. Graciously, this man allowed the findings to be published as a cautionary tale. We suggest you read the article for yourself to absorb the chilling reality. (The link we share above runs through Google Translate.)

Vendors of location data would have us believe the information is completely anonymized and cannot be tied to the individuals who generated it. It is only good for general uses like statistics and regional marketing, they assert. Intending to put that claim to the test, NRK purchased a batch of Norwegian location data from the British firm Tamoco. Their investigation shows anonymization is an empty promise. Though the data is stripped of directly identifying information, buyers are a few Internet searches away from correlating location patterns with individuals. Journalists Trude Furuly, Henrik Lied, and Martin Gundersen tell us:

“All modern mobile phones have a GPS receiver, which with the help of satellite can track the exact position of the phone with only a few meters distance. The position data NRK acquired consisted of a table with four hundred million map coordinates from mobiles in Norway. …

“All the coordinates were linked to a date, time, and specific mobile. Thus, the coordinates showed exactly where a mobile or tablet had been at a particular time. NRK coordinated the mobile positions with a map of Norway. Each position was marked on the map as an orange dot. If a mobile was in a location repeatedly and for a long time, the points formed larger clusters. Would it be possible for us to find the identity of a mobile owner by seeing where the phone had been, in combination with some simple web searches? We selected a random mobile from the dataset.

“NRK searched the address where the mobile had left many points about the nights. The search revealed that a man and a woman lived in the house. Then we searched their Facebook profiles. There were several pictures of the two smiling together. It seemed like they were boyfriend and girlfriend. The man’s Facebook profile stated that he worked in a logistics company. When we searched the company in question, we discovered that it was in the same place as the person used to drive in the morning. Thus, we had managed to trace the person who owned the cell phone, even though the data according to Tamoco should have been anonymized.”
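The first step the journalists describe, finding where a device spends its nights, takes very little code. The Python sketch below is an illustration under stated assumptions, not NRK’s method: the `Ping` record layout, the 22:00 to 06:00 night window, and the grid size are all made up, as are the coordinates in the usage example.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Optional, Tuple

# Assumed record layout: (device_id, timestamp, latitude, longitude)
Ping = Tuple[str, datetime, float, float]


def likely_home_cell(pings: Iterable[Ping],
                     device_id: str,
                     night_start: int = 22,
                     night_end: int = 6,
                     precision: int = 3) -> Optional[Tuple[float, float]]:
    """Return the grid cell where the device appears most often at night.

    Rounding to 3 decimal places gives cells roughly 100 meters tall.
    """
    cells: Counter = Counter()
    for dev, ts, lat, lon in pings:
        if dev != device_id:
            continue
        # Keep only pings inside the assumed overnight window.
        if ts.hour >= night_start or ts.hour < night_end:
            cells[(round(lat, precision), round(lon, precision))] += 1
    if not cells:
        return None
    return cells.most_common(1)[0][0]


if __name__ == "__main__":
    sample = [
        ("device-a", datetime(2020, 1, 1, 23, 5), 59.9139, 10.7522),
        ("device-a", datetime(2020, 1, 2, 2, 30), 59.9141, 10.7519),
        ("device-a", datetime(2020, 1, 2, 13, 0), 59.9500, 10.8000),
        ("device-b", datetime(2020, 1, 2, 3, 0), 60.3913, 5.3221),
    ]
    print(likely_home_cell(sample, "device-a"))
```

Once a cell like this is in hand, the remaining work is the “few Internet searches” the article mentions: an address lookup and a social media profile. That is why stripping names from the table does not anonymize it.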

The journalists went on to put together a detailed record of that man’s movements over several months. It turns out they knew more about his trip to the zoo, for example, than he recalled himself. When they revealed their findings to their subject, he was shocked and immediately began deleting non-essential apps from his phone. Read the article; you may find yourself doing the same.

Cynthia Murrell, May 12, 2020