Information: Cheap, Available, and Easy to Obtain

April 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I worked in Sillycon Valley and learned a few factoids I found somewhat new. Let me highlight three. First, a person with whom my firm had a business relationship told me, “Chinese people are Chinese for their entire life.” I interpreted this to mean that a person from China might live in Mountain View, but that individual had ties to his native land. That makes sense but, if true, the statement has interesting implications. Second, another person told me that there was a young person who could look at a circuit board and then reproduce it in sufficient detail to draw a schematic. This sounded crazy to me, but the individual took this person to meetings, discussed his company’s interest in upcoming products, and asked for briefings. With the delightful copying machine in tow, this person would have information about forthcoming hardware, specifically video and telecommunications devices. And, finally, via a colleague I learned of an individual who was a naturalized citizen and worked at a US national laboratory. That individual swapped hard drives in photocopy machines and provided them to a family member in his home town in Wuhan. Were these anecdotes true or false? I assumed each held a grain of truth because technology adepts from China and other countries comprised a significant percentage of the professionals I encountered.

Information flows freely in US companies and other organizational entities. Some people bring buckets and collect fresh, pure data. Thanks, MSFT Copilot. If anyone knows about security, you do. Good enough.

I thought of these anecdotes when I read an allegedly accurate “real” news story called “Linwei Ding Was a Google Software Engineer. He Was Also a Prolific Thief of Trade Secrets, Say Prosecutors.” The subtitle is a bit more spicy:

U.S. officials say some of America’s most prominent tech firms have had their virtual pockets picked by Chinese corporate spies and intelligence agencies.

The write up, which may be shaped by art history majors on a mission, states:

Court records say he had others badge him into Google buildings, making it appear as if he were coming to work. In fact, prosecutors say, he was marketing himself to Chinese companies as an expert in artificial intelligence — while stealing 500 files containing some of Google’s most important AI secrets…. His case illustrates what American officials say is an ongoing nightmare for U.S. economic and national security: Some of America’s most prominent tech firms have had their virtual pockets picked by Chinese corporate spies and intelligence agencies.

Several observations about these allegedly true statements are warranted this fine spring day in rural Kentucky:

- Some managers assume that when an employee or contractor signs a confidentiality agreement, the employee will abide by that document. The problem arises when the person shares information with a family member, a friend from school, or with a company paying for information. That assumption underscores what might be called “uninformed” or “naive” behavior.

- The language barrier and certain cultural norms lock out many people who assume idle chatter and obsequious behavior signals respect and conformity with what some might call “US business norms.” Cultural “blindness” is not uncommon.

- Individuals may possess technical expertise unknown to colleagues and contracting firms offering body shop services. Armed with knowledge of photocopiers in certain US government entities, swapping out a hard drive is no big deal. A failure to appreciate an ability to draw a circuit leads to similar ineptness when discussing confidential information.

America operates in a relatively open manner. I have lived and worked in other countries, and that openness often allows information to flow. Assumptions about behavior are not based on an understanding of the cultural norms of other countries.

Net net: The vulnerability is baked in. Therefore, information is often easy to get, difficult to keep privileged, and often aided by companies and government agencies. Is there a fix? No, not without a bit more managerial rigor in the US. Money talks, moving fast and breaking things makes sense to many, and information seeps, maybe floods, from the resulting cracks. Whom does one trust? My approach: Not too many people regardless of background, what people tell me, or what I believe as an often clueless American.

Stephen E Arnold, April 9, 2024

AI Hermeneutics: The Fire Fights of Interpretation Flame

March 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My hunch is that not too many of the thumb-typing, TikTok generation know what hermeneutics means. Furthermore, like most of their parents, these future masters of the phone-iverse don’t care. “Let software think for me” would make a nifty T shirt slogan at a technology conference.

This morning (March 12, 2024) I read three quite different write ups. Let me highlight each and then link the content of those documents to the problem of interpretation of religious texts.

Thanks, MSFT Copilot. I am confident your security team is up to this task.

The first write up is a news story called “Elon Musk’s AI to Open Source Grok This Week.” The main point for me is that Mr. Musk will put the label “open source” on his Grok artificial intelligence software. The write up includes an interesting quote; to wit:

Musk further adds that the whole idea of him founding OpenAI was about open sourcing AI. He highlighted his discussion with Larry Page, the former CEO of Google, who was Musk’s friend then. “I sat in his house and talked about AI safety, and Larry did not care about AI safety at all.”

The implication is that Mr. Musk does care about safety. Okay, let’s accept that.

The second story is an ArXiv paper called “Stealing Part of a Production Language Model.” The authors are nine Googlers, two ETH wizards, one University of Washington professor, one OpenAI researcher, and one McGill University smart software luminary. In short, the big outfits are making clear that closed or open, software is rising to the task of revealing some of the inner workings of these “next big things.” The paper states:

We introduce the first model-stealing attack that extracts precise, nontrivial information from black-box production language models like OpenAI’s ChatGPT or Google’s PaLM-2…. For under $20 USD, our attack extracts the entire projection matrix of OpenAI’s ada and babbage language models.

The third item is “How Do Neural Networks Learn? A Mathematical Formula Explains How They Detect Relevant Patterns.” The main idea of this write up is that software can perform an X-ray type analysis of a black box and present some useful data about the inner workings of numerical recipes about which many AI “experts” feign total ignorance.

Several observations:

- Open source software is available to download largely without encumbrances. Good actors and bad actors can use this software and its components to let users put on a happy face or bedevil the world’s cyber security experts. Either way, smart software is out of the bag.

- In the event that someone or some organization has secrets buried in its software, those secrets can be exposed. Once the secret is known, the good actors and the bad actors can surf on that information.

- The notion of an attack surface for smart software now includes the numerical recipes and the model itself. Toss in the notion of data poisoning, and the notion of vulnerability must be recast from a specific attack to a much larger type of exploitation.

Net net: I assume the many committees, NGOs, and government entities discussing AI have considered these points and incorporated these articles into informed policies. In the meantime, the AI parade continues to attract participants. Who has time to fool around with the hermeneutics of smart software?

Stephen E Arnold, March 12, 2024

Internet Governance and Enforcement Needed: Not Just One-Off Legal Spats

February 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“Web Publisher Seeks Injunctive Relief to Address Web Scraper’s Domain Name Maneuvers Intended to Avoid Court Order” at first glance is another tale of woe about a content or information publisher getting its content sucked down and into another service. This happens frequently, and the limp robots.txt file does not thwart the savvy content vacuum cleaner which is digitally Hoovering its way to a minimally viable product.

An outfit called Chegg creates or recycles information to create answers to homework problems. Students, who want to have more time for swiping left and right, subscribe and use Chegg to achieve academic certification. The outfit running the digital Hoover uses different domains; for example, it moved from Homeworkify.EU to Homeworkify.st. This change made it possible for Homeworkify to continue sucking down Chegg’s content.

One must know where to look, have the expertise to pull away the surface, and exert effort to eliminate the problem. Thanks, MSFT Copilot Bing thing. How’s the email security today? Oh, too bad.

The write up explains that the matter is in court, the parties are seeking a decision which validates their position, and the matter bumbles onward.

My take on this is different, and I am reasonably confident that it may make pro-Chegg and pro-Homeworkify advocates uncomfortable. Here are my observations:

- Neither outfit strikes me as particularly savvy when it comes to protecting or accessing online content. There are numerous Clear Web and Dark Web sites which engage in interesting actions, and investigators often have difficulty figuring out who is who and what a “who” is doing. One example from our own recent research has been our effort to determine “who” or “what” is behind the domain altenen.is. There are some hurdles to get over before the question can be answered. The operators of certain sites like the credit card outfit move around from domain to domain. This is accomplished automatically.

- The domain name registrars are an interesting group of companies. Upon examination, an ISP can be a domain reseller, operate an auction service for buyers and sellers of “registered” domains, or operate as an ISP, a provider of virtual hosting, a domain name seller, and a domain name marketplace with connections to other domain name businesses. Getting lost in this mostly unregulated niche is quite easy. The sophisticated operators can appear to be a legitimate company with alleged locations in France or Russia. The operator of one outfit engaged in some interesting “reseller” activities appears to be in jail in Israel. But his online operation continues to hum along.

- The obfuscation of domains is facilitated by outfits based in salubrious locations like the Seychelles. Drop in and check out the businesses sometime when you are in Somalia or cruising the Indian Ocean off the east coast of Africa. The “specialists” located in remote regions provide “air cover” for individuals engaged in interesting business activities like running encrypted email services for allegedly bad actors and strong supporters of specialist “groups.”

Now back to the problem of Chegg and Homeworkify. My take on this dust up is:

- Neither outfit is sufficiently advanced to [a] prevent content access or [b] avoid getting caught.

- Dumping the matter into a legal process means [a] spending lots of money on lawyers and [b] learning that no one understands what is taking place and why these actions are different from what’s being Hoovered by some of the most respected techno feudalists in the world. The cloud of unknowing will be thick as these issues are discussed.

- The focus should include attention and then action toward what I call “the enablers.” Who or what is an enabler? That’s easy. The basic services of the Internet governance entities and the failure to license certain firms who provide technology to facilitate problematic online activity.

Net net: Until regulation and consequences are imposed on the enablers, there will be more dust ups like the one between Chegg and Homeworkify.

PS. I am not too keen on selling short cuts to learning.

Stephen E Arnold, February 5, 2024

Fujitsu: Good Enough Software, Pretty Good Swizzling

January 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The USPS is often interesting. The UK’s postal system, however, is much worse. I think we can thank the public private US postal construct for not screwing over those who manage branch offices. Computer Weekly details how the UK postal system’s leaders knowingly had an IT problem and blamed employees: “Fujitsu Bosses Knew About Post Office Horizon IT Flaws, Says Insider.”

The UK postal system used the Post Office Horizon IT system supplied by Fujitsu. The Fujitsu bosses knowingly allowed it to be installed despite massive problems. Hundreds of UK subpostmasters were accused of fraud and false accounting. They were held liable. Many were imprisoned, had their finances ruined, and lost jobs. Many of the UK subpostmasters fought the accusations. It wasn’t until 2019 that the UK High Court ruled that Horizon IT was at fault.

The Fujitsu team that “designed” the postal IT system didn’t have the correct education and experience for the project. It was built on a project that didn’t properly record and process payments. A developer on the project shared with Computer Weekly:

“‘To my knowledge, no one on the team had a computer science degree or any degree-level qualifications in the right field. They might have had lower-level qualifications or certifications, but none of them had any experience in big development projects, or knew how to do any of this stuff properly. They didn’t know how to do it.’”

The Post Office Horizon IT system was the largest commercial system in Europe and it didn’t work. The software was bloated, transcribed gibberish, and was held together with the digital equivalent of Scotch tape. This case is the largest miscarriage of justice in current UK history. Thankfully the truth has come out and the subpostmasters will be compensated. The compensation doesn’t return stolen time but it will ease their current burdens.

Fujitsu is getting some scrutiny. Does the company manufacture grocery self check out stations? If so, more outstanding work.

Whitney Grace, January 25, 2024

Amazon: A Secret of Success Revealed

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “Jeff Bezos Reportedly Told His Team to Attack Small Publishers Like a Cheetah Would Pursue a Sickly Gazelle in Amazon’s Early Days — 3 Ruthless Strategies He’s Used to Build His Empire.” The inspirational story makes clear why so many companies, managers, and financial managers find the Bezos Bulldozer a slick vehicle. Who needs a better role model for the Information Superhighway?

Although this machine-generated cheetah is chubby, the big predator looks quite content after consuming a herd of sickly gazelles. No wonder so many admire the beast. Can the chubby creature catch up to the robotic wizards at OpenAI-type firms? Thanks, MSFT Copilot Bing thing. It was a struggle to get this fat beast but good enough.

The write up is not so much news but a summing up of what I think of as Bezos brainwaves. For example, the write up describes the creator of the Bezos Bulldozer as either “sadistic” or a “godfather.” Another facet of Mr. Bezos’ approach to business is an aggressive price strategy. The third tool in the bulldozer’s toolbox is creating an “adversarial” environment. That sounds delightful: “Constant friction.”

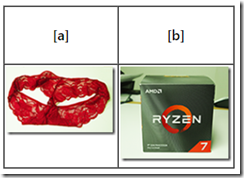

But I think there are other techniques in play. For example, we ordered a $600 CPU. Amazon or one of its “trusted partners” shipped red panties in an AMD Ryzen box. [a] The CPU and [b] its official box. Fashionable, right?

This image appeared in my April 2022 Beyond Search. Amazon customer support insisted that I received a CPU, not panties in an AMD box. The customer support process made it crystal clear that I was trying to cheat them. Yeah, nice accusation and a big laugh when I included the anecdote in one of my online fraud lectures at a cyber crime conference.

More recently, I received a smashed package with a plastic bag displaying this message: “We care.” When I posted a review of the shoddy packaging and the impossibility of contacting Amazon, I received several email messages asking me to go to the Amazon site and report the problem. Oh, the merchant in question is named Meta Bosem:

Amazon asks me to answer this question before getting a resolution to this predatory action. Amazon pleads, “Did this solve my problem?” No, I will survive being the victim of what seems to be a way to down a sickly gazelle. (I am just old, not sickly.)

The somewhat poorly assembled article cited above includes one interesting statement which either a robot or an underpaid humanoid presented as a factoid about Amazon:

Malcolm Gladwell’s research has led him to believe that innovative entrepreneurs are often disagreeable. Businesses and society may have a lot to gain from individuals who “change up the status quo and introduce an element of friction,” he says. A disagreeable personality — which Gladwell defines as someone who follows through even in the face of social disapproval — has some merits, according to his theory.

Yep, the benefits of Amazon. Let me identify the ones I experienced with the panties and the smashed product in the “We care” wrapper:

- Quality control and quality assurance. Hmmm. Similar to aircraft manufacturers whose planes feature self removing doors at 14,000 feet.

- Customer service. I love the question before the problem is addressed which asks, “Did this solve your problem?” (The answer is, “No.”)

- Reliable vendors. I wonder if the Meta Bosum folks would like my pair of large red female undergarments for one of their computers?

- Business integrity. What?

But what does one expect from a techno feudal outfit which presents products named by smart software? For details of this recent flub, navigate to “Amazon Product Name Is an OpenAI Error Message.” This article states:

We’re accustomed to the uncanny random brand names used by factories to sell directly to the consumer. But now the listings themselves are being generated by AI, a fact revealed by furniture maker FOPEAS, which now offers its delightfully modern yet affordable I’m sorry but I cannot fulfill this request it goes against OpenAI use policy. My purpose is to provide helpful and respectful information to users in brown.

Isn’t Amazon a delightful organization? Sickly gazelles, be cautious when you hear the rumble of the Bezos Bulldozer. It does not move fast and break things. The company has weaponized its pursuit of revenue. Neither publishers, dinobabies, nor humanoids can be anything other than prey if the cheetah assertion is accurate. And the government regulatory authorities in the US? Great job, folks.

Stephen E Arnold, January 15, 2024

Balloons, Hands Off Virtual Services, and Enablers: Technology Shadows and Ghosts

December 30, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Earlier this year (2023) I delivered a lecture called “Ghost Web.” I defined the term, identified what my team and I call “enablers,” and presented several examples. These included a fan of My Little Pony operating Dark Web friendly servers, a non-governmental organization pitching equal access, a disgruntled 20 something with a fixation on adolescent humor, and a suburban business executive pumping adult content to anyone able to click or swipe via well-known service providers. These are examples of enablers.

Enablers are accommodating. Hear no evil, see no evil, admit to knowing nothing is the mantra. Thanks, MSFT Copilot Bing thing.

Figuring out the difference between the average bad guy and a serious player in industrialized cyber crime is not easy. Here’s another possible example of how enablers facilitate actions which may be orthogonal to the interests of the US and its allies. Navigate to “U.S. Intelligence Officials Determined the Chinese Spy Balloon Used a U.S. Internet Provider to Communicate.” The report may or may not be true, but the scant information presented lines up with my research into “enablers.” (These are firms which knowingly set up their infrastructure services to allow the customer to control virtual services. The idea is that the hosting vendor does nothing but process the credit card, bank transfer, crypto, or other accepted form of payment. Done. The customer or the sys admin for the actor does the rest: Spins up the servers, installs necessary software, and operates the service. The “enabler” just looks at logs and sends bills.)

Enablers are aware that their virtual infrastructure makes it easy for a customer to operate in the shadows. Look up a url and what do you find? Missing information due to privacy regulations like those in Western Europe or an obfuscation service offered by the “enabler.” Explore the urls using an appropriate method and what do you find? Dead ends. What happens when a person looks into an enabling hosting provider? Looks of confusion because the mechanism does not know if the customers are “real”? Stuff is automatic. The blank looks reflect the reality that at certain enabling ISPs, no one knows because no one wants to know. As long as the invoice is paid, the “enabler” is a happy camper.

What does the NBC News report say?

U.S. intelligence officials have determined that the Chinese spy balloon that flew across the U.S. this year used an American internet service provider to communicate, according to two current and one former U.S. official familiar with the assessment.

The “American Internet Service Provider” is an enabler. Neither the write up nor an “official” is naming the alleged enabler. I want to point out that many firms are in the enabling business. I will not identify by name these outfits, but I can characterize the types of outfits my team and I have identified. I will highlight three for this free, public blog post:

- A grifter who sets up an ISP and resells services. Some of these outfits have buildings and lease machines; others just use space in a very large utility ISP. The enabling occurs because of what we call the Russian doll set up. A big outfit allows resellers to brand an ISP service and pay a commission to the company with the pings, pipes, and other necessaries.

- An outright criminal no longer locked up sets up a hosting operation in a country known to be friendly to technology businesses. Some of these are in nation states with other problems on their hands and lack the resources to chase what looks like a simple Web hosting operation. Other variants include known criminals who operate via proxies and focus on industrialized cyber crime in different flavors.

- A business person who understands enough about technology to hire and compensate engineers to build a “ghost” operation. One such outfit divested itself of a certain sketchy business when the holding company sold what looked like a “plain vanilla” services firm. The new owner figured out what was going on and sold the problematic part of the business to another party.

There are other variants.

The big question is, “How do these outfits remain in business?” My team and I identified a number of reasons. Let me highlight a handful because this is, once again, a free blog and not a mechanism for disseminating information reserved for specialists:

The first is that the registration mechanism is poorly organized, easily overwhelmed, and without enforcement teeth. As a result, it is very easy to operate a criminal enterprise, follow the rules (such as they are), and conduct whatever online activities desired with minimal oversight. Regulation of the free and open Internet facilitates enablers.

The second is that modern methods and techniques make it possible to set up an illegal operation and rely on scripts or semi-smart software to move the service around. The game is an old one, and it is called Whack A Mole. The idea is that when investigators arrive to seize machines and information, the service is gone. The account was in the name of a fake persona. The payments arrived via a bogus bank account located in a country permitting opaque banking operations. No one where physical machines are located paid any attention to a virtual service operated by an unknown customer. Dead ends are not accidental; they are intentional and often technical.

The third is that enforcement personnel have to have time and money to pursue the bad actors. Some well publicized take downs like the Cyberbunker operation boil down to a mistake made by the owner or operator of a service. Sometimes investigators get a tip, see a message from a disgruntled employee, or attend a hacker conference and hear a lecturer explain how an encrypted email service for cyber criminals works. The fix, therefore, is additional, specialized staff, technical resources, and funding.

What does the NBC News story mean?

Cyber crime is not just a lone wolf game. Investigators looking into illegal credit card services find that trails can lead to a person in prison in Israel or to a front company operating via the Seychelles using a Chinese domain name registrar with online services distributed around the world. The problem is like one of those fancy cakes with many layers.

How accurate is the NBC News report? There aren’t many details, but it is a fact that enablers make things happen. It’s time for regulatory authorities in the US and the EU to put on their Big Boy pants and take more forceful, sustained action. But that’s just my opinion about what I call the “ghost Web,” its enablers, and the wide range of criminal activities fostered, nurtured, and operated 24×7 on a global basis.

When a member of your family has a bank account stripped or an identity stolen, you may have few options for a remedy. Why? You are going to be chasing ghosts and the machines which make them function in the real world. What’s your ISP facilitating?

Stephen E Arnold, December 30, 2023

AI Silly Putty: Squishes Easily, Impossible to Remove from Hair

December 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I like happy information. I navigated to “Meta’s Chief AI Scientist Says Terrorists and Rogue States Aren’t Going to Take Over the World with Open Source AI.” Happy information. Terrorists and the Axis of Evil outfits are just going to chug along. Open source AI is not going to give these folks a super weapon. I learned from the write up that the trustworthy outfit Zuckbook has a Big Wizard in artificial intelligence. That individual provided some cheerful words of wisdom for me. Here’s an example:

It won’t be easy for terrorists to takeover the world with open-source AI.

Obviously there’s a caveat:

they’d need a lot money and resources just to pull it off.

That’s my happy thought for the day.

“Wow, getting this free silly putty out of your hair is tough,” says the scout mistress. The little scout asks, “Is this similar to coping with open source artificial intelligence software?” Thanks, MSFT Copilot. After a number of weird results, you spit out one that is good enough.

Then I read “China’s Main Intel Agency Has Reportedly Developed An AI System To Track US Spies.” Oh, oh. Unhappy AI information. China, I assume, has the open source AI software. It probably has in its 1.4 billion population a handful of AI wizards comparable to the Zuckbook’s line up. Plus, despite economic headwinds, China has money.

The write up reports:

The CIA and China’s Ministry of State Security (MSS) are toe to toe in a tense battle to beat one another’s intelligence capabilities that are increasingly dependent on advanced technology… , the NYT reported, citing U.S. officials and a person with knowledge of a transaction with contracting firms that apparently helped build the AI system. But, the MSS has an edge with an AI-based system that can create files near-instantaneously on targets around the world complete with behavior analyses and detailed information allowing Beijing to identify connections and vulnerabilities of potential targets, internal meeting notes among MSS officials showed.

Not so happy.

Several observations:

- The smart software is a cat out of the bag

- There are intelligent people who are not pals of the US who can and will use available tools to create issues for a perceived adversary

- The AI technology is like silly putty: Easy to get, free or cheap, and tough to get out of someone’s hair.

What’s the deal with silly putty? Cheap, easy, and tough to remove from hair, carpet, and seat upholstery. Just like open source AI software in the hands of possibly questionable actors. How are those government guidelines working?

Stephen E Arnold, December 29, 2023

Microsoft Snags Cyber Criminal Gang: Enablers Finally a Target

December 14, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Earlier this year at the National Cyber Crime Conference, we shared some of our research about “enablers.” The term is our shorthand for individuals, services, and financial outfits providing the money, services, and management support to cyber criminals. Online crime comes, like Baskin & Robbins ice cream, in a mind-boggling range of “flavors.” To make big bucks, funding and infrastructure are needed. The reasons include amped up enforcement from the US Federal Bureau of Investigation, Europol, and cooperating law enforcement agencies. The cyber crime “game” is a variation of a cat-and-mouse game. With each technological advance, bad actors try out the latest and greatest. Then enforcement agencies respond and neutralize the advantage. The bad actors then scan the technology horizon, innovate, and law enforcement responds. There are many implications of this innovate-react-innovate cycle. I won’t go into those in this short essay. Instead I want to focus on a Microsoft blog post called “Disrupting the Gateway Services to Cybercrime.”

Industrialized cyber crime uses existing infrastructure providers. That’s a convenient, easy, and economical means of hiding. Modern obfuscation technology adds to law enforcement’s burden. Perhaps some oversight and regulation of these nearly invisible commercial companies is needed? Thanks, MSFT Copilot. Close enough and I liked the investigators on the roof of a typical office building.

Microsoft says:

Storm-1152 [the enabler?] runs illicit websites and social media pages, selling fraudulent Microsoft accounts and tools to bypass identity verification software across well-known technology platforms. These services reduce the time and effort needed for criminals to conduct a host of criminal and abusive behaviors online.

What moved Microsoft to take action? According to the article:

Storm-1152 created for sale approximately 750 million fraudulent Microsoft accounts, earning the group millions of dollars in illicit revenue, and costing Microsoft and other companies even more to combat their criminal activity.

Just 750 million? One question which struck me was: “With the updating, the telemetry, and the bits and bobs of Microsoft’s ‘security’ measures, how could nearly a billion fake accounts be allowed to invade the ecosystem?” I thought a smaller number might have been the tipping point.

Another interesting point in the essay is that Microsoft identifies the third party Arkose Labs as contributing to the action against the bad actors. The company is one of the firms engaged in cyber threat intelligence and mitigation services. The question I had was, “Why are the other threat intelligence companies not picking up signals about such a large, widespread criminal operation?” Also, “What is Arkose Labs doing that other sophisticated companies and OSINT investigators are not doing?” Google and In-Q-Tel invested in Recorded Future, a go-to threat intelligence outfit. I don’t recall seeing, but I heard that Microsoft invested in the company, joining SoftBank’s Vision Fund and PayPal, among others.

I am delighted that “enablers” have become a more visible target of enforcement actions. More must be done, however. Poke around in ISP land and what do you find? As my lecture pointed out, “Respectable companies in upscale neighborhoods harbor enablers, so one doesn’t have to travel to Bulgaria or Moldova to do research. Silicon Valley is closer and stocked with enablers; the area is a hurricane of crime.”

In closing, I ask, “Why are discoveries of this type of industrialized criminal activity unearthed by one outfit?” And, “What are the other cyber threat folks chasing?”

Stephen E Arnold, December 14, 2023

23andMe: Those Users and Their Passwords!

December 5, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Silicon Valley and health are a match fabricated in heaven. Not long ago, I learned about the estimable management of Theranos. Now I find out that “23andMe confirms hackers stole ancestry data on 6.9 million users.” If one follows the logic of some Silicon Valley outfits, the data loss is the fault of the users.

“We have the capability to provide the health data and bioinformation from our secure facility. We have designed our approach to emulate the protocols implemented by Jack Benny and his vault in his home in Beverly Hills,” says the enthusiastic marketing professional from a Silicon Valley success story. Thanks, MSFT Copilot. Not exactly Jack Benny, Ed, and the foghorn, but I have learned to live with “good enough.”

According to the peripatetic Lorenzo Franceschi-Bicchierai:

In disclosing the incident in October, 23andMe said the data breach was caused by customers reusing passwords, which allowed hackers to brute-force the victims’ accounts by using publicly known passwords released in other companies’ data breaches.

Users!

What’s more interesting is that 23andMe provided estimates of the number of customers (users) whose data somehow magically flowed from the firm into the hands of bad actors. In fact, the numbers, when added up, totaled almost seven million users, not the original estimate of 14,000 23andMe customers.

I find the leak estimate inflation interesting for three reasons:

- Smart people in Silicon Valley appear to struggle with simple concepts like adding and subtracting numbers. This gap in one’s education becomes notable when the discrepancy is off by millions. I think “close enough for horse shoes” is a concept which is wearing out my patience. The difference between 14,000 and almost seven million is not horse shoe scoring.

- The concept of “security” continues to suffer some setbacks. “Security,” one may ask?

- The intentional dribbling of information reflects another facet of what I call high school science club management methods. The logic in the case of 23andMe in my opinion is, “Maybe no one will notice?”

Net net: Time for some regulation, perhaps? Oh, right, it’s the users’ responsibility.

Stephen E Arnold, December 5, 2023

Deepfakes: Improving Rapidly with No End in Sight

December 1, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The possible applications of AI technology are endless and we’ve barely imagined the opportunities. While tech experts mainly focus on the benefits of AI, bad actors are concentrating on how to use it for illegal activities. The Next Web explains how bad actors are using AI for scams, “Deepfake Fraud Attempts Are Up 3000% In 2023-Here’s Why.” Bad actors are using cheap and widely available AI technology to create deepfake content for fraud attempts.

Onfido, an ID verification company in London, reports that deepfake fraud attempts increased 31-fold in 2023. That is a 3,000% year-on-year gain. The AI tool of choice for bad actors is face-swapping apps. They range in quality from a bad copy and paste job to sophisticated, blockbuster quality fakes. While the crude attempts are laughable, it only takes one successful facial identity verification for fraudsters to win.

The bad actors concentrate on quantity over quality: the crude, low-effort attempts account for 80.3% of attacks in 2023. Biometric information is a key component in stopping fraudsters:

“Despite the rise of deepfake fraud, Onfido insists that biometric verification is an effective deterrent. As evidence, the company points to its latest research. The report found that biometrics received three times fewer fraudulent attempts than documents. The criminals, however, are becoming more creative at attacking these defenses. As GenAI tools become more common, malicious actors are increasingly producing fake documents, spoofing biometric defenses, and hijacking camera signals.”

Onfido suggests using “liveness” biometrics in verification technology. Liveness determines if a user is actually present instead of a deepfake, photo, recording, or masked individual.

As AI technology advances, so will the scams bad actors run with it.

Whitney Grace, December 1, 2023