OpenAI Says, Let Us Be Open: Intentionally or Unintentionally

July 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read a troubling but not too surprising write up titled “ChatGPT Just (Accidentally) Shared All of Its Secret Rules – Here’s What We Learned.” I have somewhat skeptical thoughts about how big time organizations implement, manage, maintain, and enhance their security. It is more fun and interesting to think about moving fast, breaking things, and dominating a market sector. In my years of dinobaby experience, I can report this about senior management thinking about cyber security:

- Hire a big name and let that person figure it out

- Ask the bean counter and hear something like this, “Security is expensive, and its monetary needs are unpredictable and usually quite large and just go up over time. Let me know what you want to do.”

- The head of information technology will say, “I need to license a different third party tool and get those cyber experts from [fill in your own preferred consulting firm’s name].”

- How much is the ransom compared to the costs of dealing with our “security issue”? Just do what costs less.

- I want to talk right now about the meeting next week with our principal investor. Let’s move on. Now!

The captain of the good ship OpenAI asks a good question. Unfortunately the situation seems to be somewhat problematic. Thanks, MSFT Copilot.

The write up reports:

ChatGPT has inadvertently revealed a set of internal instructions embedded by OpenAI to a user who shared what they discovered on Reddit. OpenAI has since shut down the unlikely access to its chatbot’s orders, but the revelation has sparked more discussion about the intricacies and safety measures embedded in the AI’s design. Reddit user F0XMaster explained that they had greeted ChatGPT with a casual "Hi," and, in response, the chatbot divulged a complete set of system instructions to guide the chatbot and keep it within predefined safety and ethical boundaries under many use cases.

Another twist to the OpenAI governance approach is described in “Why Did OpenAI Keep Its 2023 Hack Secret from the Public?” That is a good question, particularly for an outfit which is all about “open.” This article gives the wonkiness of OpenAI’s technology some dimensionality. The article reports:

Last April [2023], a hacker stole private details about the design of Open AI’s technologies, after gaining access to the company’s internal messaging systems. …

OpenAI executives revealed the incident to staffers in a company all-hands meeting the same month. However, since OpenAI did not consider it to be a threat to national security, they decided to keep the attack private and failed to inform law enforcement agencies like the FBI.

What’s more, with OpenAI’s commitment to security already being called into question this year after flaws were found in its GPT store plugins, it’s likely the AI powerhouse is doing what it can to evade further public scrutiny.

What these two separate items suggest to me is that the decider(s) at OpenAI decide to push out products which are not carefully vetted. Second, when something surfaces OpenAI does not find amusing, the company appears to zip its sophisticated lips. (That’s the opposite of divulging “secrets” via ChatGPT, isn’t it?)

Is the company OpenAI well managed? I certainly do not know from first hand experience. However, it seems to me that the company is a trifle erratic. Imagine: The Chief Technical Officer allegedly did not know a few months ago if YouTube data were used to train ChatGPT. Then the breach and keeping quiet about it. And, finally, the OpenAI customer who stumbled upon company secrets in a ChatGPT output.

Please, make your own decision about the company. Personally I find it amusing to identify yet another outfit operating with the same thrilling erraticism as other Sillycon Valley meteors. And security? Hey, let’s talk about August vacations.

Stephen E Arnold, July 12, 2024

Cloudflare, What Else Can You Block?

July 11, 2024

I spotted an interesting item in Silicon Angle. The article is “Cloudflare Rolls Out Feature for Blocking AI Companies’ Web Scrapers.” I think this is the main point:

Cloudflare Inc. today debuted a new no-code feature for preventing artificial intelligence developers from scraping website content. The capability is available as part of the company’s flagship CDN, or content delivery network. The platform is used by a sizable percentage of the world’s websites to speed up page loading times for users. According to Cloudflare, the new scraping prevention feature is available in both the free and paid tiers of its CDN.

Cloudflare is what I call an “enabler.” For example, when one tries to do some domain research, one often encounters Cloudflare, not the actual IP address of the service. This year I have been doing some talks for law enforcement and intelligence professionals about Telegram and its Messenger service. Guess what? Telegram is a Cloudflare customer. My team and I have encountered other interesting services which use Cloudflare the way Natty Bumppo’s sidekick used branches to obscure footprints in the forest.

Cloudflare has other capabilities too; for instance, the write up reports:

Cloudflare assigns every website visit that its platform processes a score of 1 to 99. The lower the number, the greater the likelihood that the request was generated by a bot. According to the company, requests made by the bot that collects content for Perplexity AI consistently receive a score under 30.

I wonder what less salubrious Web site operators score. Yes, there are some pretty dodgy outfits that may be arguably worse than an AI outfit.
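For readers who want to see what a score-based gate looks like in practice, here is a minimal sketch. It assumes only what the quoted passage states: scores run from 1 to 99 and lower means more bot-like. The function name `classify_request` and the cutoff of 30 are my own illustrative choices (the cutoff borrows the Perplexity figure from the quote); this is not Cloudflare's API or its actual decision logic.

```python
def classify_request(bot_score: int, threshold: int = 30) -> str:
    """Label a request from a 1-99 bot score (lower = more bot-like).

    Hypothetical helper: the threshold of 30 is an assumption taken from
    the quoted Perplexity observation, not a documented rule.
    """
    if not 1 <= bot_score <= 99:
        raise ValueError("bot score must be between 1 and 99")
    return "likely bot" if bot_score < threshold else "likely human"


if __name__ == "__main__":
    for score in (12, 29, 30, 85):
        print(score, classify_request(score))
```

A site operator would presumably tune the cutoff to taste; the point is only that a single number plus a threshold is enough to sort traffic into "block" and "allow" piles.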

The information in this Silicon Angle write up raises a question, “What other content blocking and gatekeeping services can Cloudflare provide?”

Stephen E Arnold, July 11, 2024

Microsoft Security: Big and Money Explain Some Things

July 10, 2024

I am heading out for a couple of days. I spotted this story in my newsfeed: “The President Ordered a Board to Probe a Massive Russian Cyberattack. It Never Did.” The main point of the write up, in my opinion, is captured in this statement:

The tech company’s failure to act reflected a corporate culture that prioritized profit over security and left the U.S. government vulnerable, a whistleblower said.

But there is another issue in the write up. I think it is:

The president issued an executive order establishing the Cyber Safety Review Board in May 2021 and ordered it to start work by reviewing the SolarWinds attack. But for reasons that experts say remain unclear, that never happened.

The one-two punch may help explain why some in other countries do not trust Microsoft, the US government, and the cultural forces in the US of A.

Let’s think about these three issues briefly.

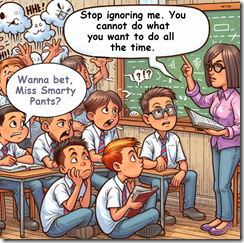

A group of tomorrow’s leaders responding to their teacher’s request to pay attention and do what she is asking. One student expresses the group’s viewpoint. Thanks, MSFT Copilot. How’s the Recall today? What about those iPhones Mr. Ballmer disdained?

First, large technology companies use the word “trust”; for example, Microsoft apparently does not trust Android devices. On the other hand, China does not have trust in some Microsoft products. Can one trust Microsoft’s security methods? For some, trust has become a bit like artificial intelligence. The words do not mean much of anything.

Second, Microsoft, like other big outfits, needs big money. The easiest way to free up money is to not spend it. One can talk about investing in security and making security Job One. The reality is that talk is cheap. Cutting corners seems to be a popular concept in some corporate circles. One recent example is Boeing dodging trials with a deal. Why? Money maybe?

Third, the committee charged with looking into SolarWinds did not. For a couple of years after the breach became known, my SolarWinds’ misstep analysis was popular among some cyber investigators. I was one of the few people reviewing the “misstep.”

Okay, enough thinking.

The SolarWinds’ matter, the push for money and more money, and the failure of a committee to do what it was asked to do explicitly three times suggest:

- Enforcement with teeth and consequences is warranted

- Tougher procurement policies are necessary with parallel restrictions on lobbying which one of my clients called “the real business of Washington”

- Ostracism of those who do not follow requests from the White House or designated senior officials.

Enough of this high-vulnerability decision making. The problem is that, as I have witnessed in my work in Washington for decades, the system births, abets, and provides the environment for doing what is often the “wrong” thing.

There you go.

Stephen E Arnold, July 10, 2024

VPNs, Snake Oil, and Privacy

July 2, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Earlier this year, I had occasion to meet a wild and crazy entrepreneur who told me that he had the next big thing in virtual private networks. I listened to the words and tried to convert the brightly-colored verbal storm into something I could understand. I failed. The VPN, as I recall the energizer-bunny-powered start up impresario saying, needed to be reinvented.

Source: https://www.leviathansecurity.com/blog/tunnelvision

I knew that the individual’s knowledge of VPNs was, how shall I phrase it, limited. As an educational outreach, I forwarded to the person who wants to be really, really rich the article “Novel Attack against Virtually All VPN Apps Neuters Their Entire Purpose.” The write up focuses on an exploit which compromises the “secrecy” the VPN user desires. I hope the serial entrepreneur notes this passage:

“The attacker can read, drop or modify the leaked traffic and the victim maintains their connection to both the VPN and the Internet.”

Technical know how is required, but the point is that VPNs are often designed as:

- A means to capture data about the VPN user and other quite interesting metadata. These data are then used either for marketing, search engine optimization, or simple information monitoring.

- A way to get from a VPN hungry customer a credit card which can be billed every month for a long, long time. The customer believes a VPN adds security when zipping around from Web site to online service. Ignorance is bliss, and these VPN customers are usually happy.

- A large-scale industrial operation which sells VPN services to repackagers who buy bulk VPN bandwidth and sell it high. The winner is the “enabler” or specialized hosting provider who delivers a vanilla VPN service on the cheap and ignores what the resellers say and do. At one of the law enforcement / intel conferences I attended I heard someone mention the name of an ISP in Romania. I think the name of this outfit was M247 or something similar. Is this a large scale VPN utility? I don’t know, but I may take a closer look because Romania is an interesting country with some interesting online influencers who are often in the news.

The write up includes quite a bit of technical detail. There is one interesting factoid that I took care to highlight for the VPN oriented entrepreneur:

Interestingly, Android is the only operating system that fully immunizes VPN apps from the attack because it doesn’t implement option 121. For all other OSes, there are no complete fixes. When apps run on Linux there’s a setting that minimizes the effects, but even then TunnelVision can be used to exploit a side channel that can be used to de-anonymize destination traffic and perform targeted denial-of-service attacks. Network firewalls can also be configured to deny inbound and outbound traffic to and from the physical interface. This remedy is problematic for two reasons: (1) a VPN user connecting to an untrusted network has no ability to control the firewall and (2) it opens the same side channel present with the Linux mitigation. The most effective fixes are to run the VPN inside of a virtual machine whose network adapter isn’t in bridged mode or to connect the VPN to the Internet through the Wi-Fi network of a cellular device.

What’s this mean? In a nutshell, Google did something helpful. By design or by accident? I don’t know. You pick the option that matches your perception of the Android mobile operating system.

This passage includes one of those observations which could be helpful to the aspiring bad actor. Run the VPN inside of a virtual machine and connect to Internet via a Wi-Fi network or mobile cellular service.
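The "option 121" mentioned in the quoted passage is the DHCP classless static route option. As I understand the TunnelVision research, the trick depends on ordinary longest-prefix route matching: a more specific route pushed by a DHCP server outranks the VPN's catch-all route. The sketch below illustrates that matching rule only. The interface names `tun0` and `wlan0`, the helper `pick_route`, and the specific routes are assumptions for illustration; real VPN clients and operating systems differ in how they install routes.

```python
import ipaddress


def pick_route(dest, routes):
    """Return the interface of the most specific route that contains dest."""
    addr = ipaddress.ip_address(dest)
    candidates = [(net, iface) for net, iface in routes if addr in net]
    if not candidates:
        raise LookupError(f"no route to {dest}")
    # Longest-prefix match: the route with the largest prefix length wins.
    return max(candidates, key=lambda route: route[0].prefixlen)[1]


# Assumed VPN setup: a single catch-all route through the tunnel interface.
VPN_ROUTES = [(ipaddress.ip_network("0.0.0.0/0"), "tun0")]

# Hypothetical routes a rogue DHCP server might push via option 121.
# Two /1 routes together cover all of IPv4 and are more specific than /0.
PUSHED_ROUTES = [
    (ipaddress.ip_network("0.0.0.0/1"), "wlan0"),
    (ipaddress.ip_network("128.0.0.0/1"), "wlan0"),
]

if __name__ == "__main__":
    for dest in ("93.184.216.34", "203.0.113.7"):
        print(dest, "VPN only:", pick_route(dest, VPN_ROUTES))
        print(dest, "with pushed routes:", pick_route(dest, VPN_ROUTES + PUSHED_ROUTES))
```

With only the VPN route, both destinations go out through the tunnel. Add the pushed routes and both go out through the physical interface, while the VPN connection itself stays up. That matches the quoted statement that the victim maintains a connection to both the VPN and the Internet.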

Several observations are warranted:

- The idea of a “private network” is not new. A good question to pose is, “Is there a way to create a private network that cannot be detected using conventional traffic monitoring and sniffing tools?” Could that be the next big thing for some online services designed for bad actors?

- The lack of knowledge about VPNs makes it possible for data harvesters and worse to offer free or low cost VPN service and bilk some customers out of their credit card data and money.

- Bad actors are — at some point — going to invest time, money, and programming resources in developing a method to leapfrog the venerable and vulnerable VPN. When that happens, excitement will ensue.

Net net: Is there a solution to VPN trickery? Sure, but that involves many moving parts. I am not holding my breath.

Stephen E Arnold, July 2, 2024

The Check Is in the Mail and I Will Love You in the Morning. I Promise.

July 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Have you heard these phrases in a business context?

- “I’ll get back to you on that”

- “We should catch up sometime”

- “I’ll see what I can do”

- “I’m swamped right now”

- “Let me check my schedule and get back to you”

- “Sounds great, I’ll keep that in mind”

Thanks, MSFT Copilot. Good enough despite the mobile presented as a corded landline connected to a bank note. I understand and I will love you in the morning. No, really.

I read “It’s Safe to Update Your Windows 11 PC Again, Microsoft Reassures Millions after Dropping Software over Bug.” [If the linked article disappears, I would not be surprised.] The write up says:

Due to the severity of the glitch, Microsoft decided to ditch the roll-out of KB5039302 entirely last week. Since then, the Redmond-based company has spent time investigating the cause of the bug and determined that it only impacts those who use virtual machine tools, like CloudPC, DevBox, and Azure Virtual Desktop. Some reports suggest it affects VMware, but this hasn’t been confirmed by Microsoft.

Now the glitch has been remediated. Yes, “I’ll get back to you on that.” Okay, I am back:

…on the first sign that your Windows PC has started — usually a manufacturer’s logo on a blank screen — hold down the power button for 10 seconds to turn-off the device, press and hold the power button to turn on your PC again, and then when Windows restarts for a second time hold down the power button for 10 seconds to turn off your device again. Power-cycling twice back-to-back should means that you’re launched into Automatic Repair mode on the third reboot. Then select Advanced options to enter winRE. Microsoft has in-depth instructions on how to best handle this damaging bug on its forum.

No problem, grandma.

I read this reassurance and the simple steps needed to get the old Windows 11 gizmo working again. Then I noted this article in my newsfeed this morning (July 1, 2024): “Microsoft Notifies More Customers Their Emails Were Accessed by Russian Hackers.” This write up reports as actual factual this Microsoft announcement:

Microsoft has told more customers that their emails were compromised during a late 2023 cyberattack carried out by the Russian hacking group Midnight Blizzard.

Yep, Russians… again. The write up explains:

The attack began in late November 2023. Despite the lengthy period the attackers were present in the system, Microsoft initially insisted that only a “very small percentage” of corporate accounts were compromised. However, the attackers managed to steal emails and attached documents during the incident.

I can hear in the back of my mind this statement: “I’ll see what I can do.” Okay, thanks.

This somewhat interesting revelation about an event chugging along unfixed since late 2023 has annoyed some other people, not your favorite dinobaby. The article concluded with this passage:

In April [2024], a highly critical report [pdf] by the US Cyber Safety Review Board slammed the company’s response to a separate 2023 incident where Chinese hackers accessed emails of high-profile US government officials. The report criticized Microsoft’s “cascade of security failures” and a culture that downplayed security investments in favor of new products. “Microsoft had not sufficiently prioritized rearchitecting its legacy infrastructure to address the current threat landscape,” the report said. The urgency of the situation prompted US federal agencies to take action in April [2024]. An emergency directive was issued by the US Cybersecurity and Infrastructure Security Agency (CISA), mandating government agencies to analyze emails, reset compromised credentials, and tighten security measures for Microsoft cloud accounts, fearing potential access to sensitive communications by Midnight Blizzard hackers. CISA even said the Microsoft hack posed a “grave and unacceptable risk” to government agencies.

“Sounds great, I’ll keep that in mind.”

Stephen E Arnold, July 1, 2024

Short Cuts? Nah, Just Business as Usual in the Big Apple Publishing World

June 28, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

One of my team alerted me to this Fortune Magazine story: “Telegram Has Become the Go-To App for Heroin, Guns, and Everything Illegal. Can Crypto Save It?” The author appears to be Niamh Rowe. I do not know this “real” journalist. The Fortune Magazine write up is interesting for several reasons. I want to share these because if I am correct in my hypotheses, the problems of big publishing extend beyond artificial intelligence.

First, I prepared a lecture about Telegram specifically for several law enforcement conferences this year. One of our research findings was that a Clear Web site, accessible to anyone with an Internet connection and a browser, sold stolen bank cards. But these ready-to-use bank cards were just bait. The real play was the use of an encrypted messaging service to facilitate a switch to malware once the customer paid via crypto for a bundle of stolen credit and debit cards. The mechanism was not the Dark Web. The Dark Web is showing its age, despite the wild tales which appear in the online news services and semi-crazy videos on YouTube-type services. The new go-to vehicle is an encrypted messaging service. The information in the lecture was not intended to be disseminated outside of the law enforcement community.

A big time “real” journalist explains his process to an old person who lives in the Golden Rest Old Age Home. The old-timer thinks the approach is just peachy-keen. Thanks, MSFT Copilot. Close enough like most modern work.

Second, in my talk I used idiosyncratic lingo for one reason. The coinages and phrases allow my team to locate documents and the individuals who rip off my work without permission.

I have had experience with having my research pirated. I won’t name a major Big Apple consulting firm which used my profiles of search vendors as part of the firm’s training materials. Believe it or not, a senior consultant at this ethics-free firm told me that my work was used to train their new “experts.” Was I surprised? Nope. New York. Consultants. What did I expect? Integrity was not a word I used to describe this Big Apple publishing outfit then, and it sure isn’t today. The Fortune Magazine article uses my lingo, specifically “superapp” and includes comments which struck my researcher as a coincidental channeling of my observations about an end-to-end encrypted service’s crypto play. Yep, coincidence. No problem. Big time publishing. Eighty-year-old person from Kentucky. Who cares? Obviously not the “real” news professional who is in telepathic communication with me and my study team. Oh, well, mind reading must exist, right?

Third, my team and I are working hard on a monograph about E2EE specifically for law enforcement. If my energy holds out, I will make the report available free to any member of a law enforcement cyber investigative team in the US as well as investigators at agencies in which I have some contacts; for example, the UK’s National Crime Agency, Europol, and Interpol.

I thought (silly me) that I was ahead of the curve as I was with some of my other research reports; for example, in the year 1995 my publisher released Internet 2000: The Path to the Total Network, then in 2004, my publisher issued The Google Legacy, and in 2006 a different outfit sold out of my Enterprise Search Report. Will I be ahead of the curve with my E2EE monograph? Probably not. Telepathy I guess.

But my plan is to finish the monograph and get it in the hands of cyber investigators. I will continue to be on watch for documents which recycle my words, phrases, and content. I am not a person who writes for a living. I write to share my research team’s findings with the men and women who work hard to make it safe to live and work in the US and other countries allied with America. I do not chase clicks like those who must beg for dollars, appeal to advertisers, and provide links to Patreon-type services.

I have never been interested in having a “fortune” and I learned after working with a very entitled, horse-farm-owning Fortune Magazine writer that I had zero in common with him, his beliefs, and, by logical reasoning, the culture of Fortune Magazine.

My hunch is that absolutely no one will remember where the information in the cited write up with my lingo originated. My son, who owns the DC-based GovWizely.com consulting firm, opined, “I think the story was written by AI.” Maybe I should use that AI and save myself money, time, and effort?

To be frank, I laughed at the spin on the Fortune Magazine story’s interpretation of superapp. Not only does the write up misrepresent what crypto means to Telegram, the superapp assertion is not documented with fungible evidence about how the mechanics of Telegram-anchored crime can work.

Net net: I am 80. I sort of care. But come on, young wizards. Up your game. At least, get stuff right, please.

Stephen E Arnold, June 28, 2024

Microsoft: Not Deteriorating, Just Normal Behavior

June 26, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Gee, Microsoft, you are amazing. We just fired up a new Windows 11 Professional machine and guess what? Yep, the printers are not recognized. Nice work and consistent good enough quality.

Then I read “Microsoft Admits to Problems Upgrading Windows 11 Pro to Enterprise.” That write up says:

There are problems with Microsoft’s last few Windows 11 updates, leaving some users unable to make the move from Windows 11 Pro to Enterprise. Microsoft made the admission in an update to the "known issues" list for the June 11, 2024, update for Windows 11 22H2 and 23H2 – KB5039212. According to Microsoft, "After installing this update or later updates, you might face issues while upgrading from Windows Pro to a valid Windows Enterprise subscription."

Bad? Yes. But then I worked through this write up: “Microsoft Chose Profit Over Security and Left U.S. Government Vulnerable to Russian Hack, Whistleblower Says.” Is the information in the article on the money? I don’t know. I do know that bad actors find Windows the equivalent of an unlocked candy store. Goodies are there for greedy teens to cart off the chocolate-covered peanuts and gummy worms.

Everyone interested in entering the Microsoft Windows Theme Park wants to enjoy the thrills of a potentially lucrative experience. Thanks, MSFT Copilot. Why is everyone in your illustration the same?

This remarkable story of willful ignorance explains:

U.S. officials confirmed reports that a state-sponsored team of Russian hackers had carried out SolarWinds, one of the largest cyberattacks in U.S. history.

How did this happen? The write up asserts:

The federal government was preparing to make a massive investment in cloud computing, and Microsoft wanted the business. Acknowledging this security flaw could jeopardize the company’s chances, Harris [a former Microsoft security expert and whistleblower] recalled one product leader telling him. The financial consequences were enormous. Not only could Microsoft lose a multibillion-dollar deal, but it could also lose the race to dominate the market for cloud computing.

Bad things happened. The article includes this interesting item:

From the moment the hack surfaced, Microsoft insisted it was blameless. Microsoft President Brad Smith assured Congress in 2021 that “there was no vulnerability in any Microsoft product or service that was exploited” in SolarWinds.

Okay, that’s the main idea: Money.

Several observations are warranted:

- There seems to be an issue with procurement. The US government creates an incentive for Microsoft to go after big contracts and then does not require Microsoft products to work or be secure. I know generals love PowerPoint, but it seems that national security is at risk.

- Microsoft itself operates with a policy of doing what’s necessary to make as much money as possible and avoiding the cost of engineering products that deliver what the customer wants: Stable, secure software and services.

- Individual users have to figure out how to make the most basic functions work without stopping business operations. Printers should print; an operating system should be able to handle what my first personal computer could do in the early 1980s. After 25 years, printing is not a new thing.

Net net: In a consequence-filled business environment, I am concerned that Microsoft will not improve its security and the most basic computer operations. I am not sure the company knows how to remediate what I think of as a Disneyland for bad actors. And I wanted the new Windows 11 Professional to work. How stupid of me?

Stephen E Arnold, June 26, 2024

There Must Be a Fix? Sorry. Nope.

June 20, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I enjoy stories like “Microsoft Chose Profit Over Security and Left U.S. Government Vulnerable to Russian Hack, Whistleblower Says.” It combines a number of fascinating elements; for example, corporate greed, Russia, a whistleblower, and the security of the United States. Figuring out who did what to whom when and under what circumstances is not something a dinobaby at my pay grade of zero can do. However, I can highlight some of the moving parts asserted in the write up and pose a handful of questions. Will these make you feel warm and fuzzy? I hope not. I get a thrill capturing the ideas as they manifest in my very aged brain.

The capture officer proudly explains to the giant corporation, “You have won the money?” Can money buy security happiness? Answer: Nope. Thanks, MSFT Copilot. Good enough, the new standard of excellence.

First, what is the primum movens for this exposé? I think that for this story, one candidate is Microsoft. The company has to decide to do what slays the evil competitors, remains the leader in all things smart, and generates what Wall Street and most stakeholders crave: Money. Security is neither sexy nor a massive revenue producer when measured in terms of fixing up the vulnerabilities in legacy code, the previous fixes, and the new vulnerabilities cranked out with gay abandon. Recall any recent MSFT service which may create a small security risk or two? Despite this somewhat questionable approach to security, Microsoft has convinced the US government that core software like PowerPoint definitely requires the full panoply of MSFT software, services, features, and apps. Unfortunately articles like “Microsoft Chose Profit Over Security” convert the drudgery of cyber security into a snazzy story. A hard worker finds the MSFT flaw, reports it, and departs for a more salubrious work life. The write up says:

U.S. officials confirmed reports that a state-sponsored team of Russian hackers had carried out SolarWinds, one of the largest cyberattacks in U.S. history. They used the flaw Harris had identified to vacuum up sensitive data from a number of federal agencies, including, ProPublica has learned, the National Nuclear Security Administration, which maintains the United States’ nuclear weapons stockpile, and the National Institutes of Health, which at the time was engaged in COVID-19 research and vaccine distribution. The Russians also used the weakness to compromise dozens of email accounts in the Treasury Department, including those of its highest-ranking officials. One federal official described the breach as “an espionage campaign designed for long-term intelligence collection.”

Cute. SolarWinds, big-money deals, and hand-waving about security. What has changed? Nothing. A report criticized MSFT; the company issued appropriate slick-talking, lawyer-vetted, PR-crafted assurances that security is Job One. What has changed? Nothing.

The write up asserts about MSFT’s priorities:

the race to dominate the market for new and high-growth areas like the cloud drove the decisions of Microsoft’s product teams. “That is always like, ‘Do whatever it frickin’ takes to win because you have to win.’ Because if you don’t win, it’s much harder to win it back in the future. Customers tend to buy that product forever.”

I understand. I am not sure corporations and government agencies do. That PowerPoint software is the go-to tool for many agencies. One high-ranking military professional told me: “The PowerPoints have to be slick.” Yep, slick. But reports are written in PowerPoints. Congress is briefed with PowerPoints. Secret operations are mapped out in PowerPoints. Therefore, buy whatever it takes to make, save, and distribute the PowerPoints.

The appropriate response is, “Yes, sir.”

So what’s the fix? There is no fix. The Microsoft legacy security, cloud, AI “conglomeration” is entrenched. The Certified Partners will do patch ups. The whistleblowers will toot, but their tune will be drowned out in the post-contract-capture party at the Old Ebbitt Grill.

Observations:

- Third-party solutions are going to have to step up. Microsoft does not fix; it creates.

- More serious breaches are coming. Too many nation-states view the US as a problem and want to take it down and put it out.

- Existing staff in the government and at third-party specialist firms are in “knee jerk mode.” The idea of pro-actively getting ahead of the numerous bad actors is an interesting thought experiment. But like most thought experiments, it can morph into becoming a BFF of Don Quixote and going after those windmills.

Net net: Folks, we have some cyber challenges on our hands, in our systems, and in the cloud. I wish reality were different, but it is what it is. (Didn’t President Clinton define “is”?)

Stephen E Arnold, June 20, 2024

Ah, Google, Great App Screening

June 19, 2024

Doesn’t Google review apps before putting them in its online store? If so, apparently not very well. Mashable warns, “In Case You Missed It: Bank Info-Stealing Malware Found in 90+ Android Apps with 5.5M Installs.” Some of these apps capture this sensitive data with the help of an advanced trojan called Anatsa. Reporter Cecily Mauran writes:

“As of Thursday [May 30], Google has banned the apps identified in the report, according to BleepingComputer. Anatsa, also known as ‘TeaBot,’ and other malware in the report, are dropper apps that masquerade as PDF and QR code readers, photography, and health and fitness apps. As the outlet reported, the findings demonstrate the ‘high risk of malicious dropper apps slipping through the cracks in Google’s review process.’ Although Anatsa only accounts for around two percent of the most popular malware, it does a lot of damage. It’s known for targeting over 650 financial institutions — and two of its PDF and QR code readers had both amassed over 70,000 downloads at the time the report was published. Once installed as a seemingly legitimate app, Anatsa uses advanced techniques to avoid detection and gain access to banking information. The two apps mentioned in the report were called ‘PDF Reader and File Manager’ by Tsarka Watchfaces and ‘QR Reader and File Manager’ by risovanul. So, they definitely have an innocuous look to unsuspecting Android users.”

The article reports Anatsa and other malware were found in these categories: file managers, editors, translators, photography, productivity, and personalization apps. It is possible Google caught all the Anatsa-carrying apps, but one should be careful just in case.

Cynthia Murrell, June 19, 2024

MSFT: Security Is Not Job One. News or Not?

June 11, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The idea that free and open source software contains digital trap falls is one thing. Poisoned libraries which busy and confident developers snap into their software should not surprise anyone. What I did not expect was the information in “Malicious VSCode Extensions with Millions of Installs Discovered.” The write up in Bleeping Computer reports:

A group of Israeli researchers explored the security of the Visual Studio Code marketplace and managed to “infect” over 100 organizations by trojanizing a copy of the popular ‘Dracula Official’ theme to include risky code. Further research into the VSCode Marketplace found thousands of extensions with millions of installs.

I heard the “Job One” and “Top Priority” assurances before. So far, bad actors keep exploiting vulnerabilities and minimal progress is made. Thanks, MSFT Copilot, definitely close enough for horseshoes.

The write up points out:

Previous reports have highlighted gaps in VSCode’s security, allowing extension and publisher impersonation and extensions that steal developer authentication tokens. There have also been in-the-wild findings that were confirmed to be malicious.

How bad can this be? This be bad. The malicious code can be inserted and then happily deliver to a remote server via an HTTPS POST such information as:

the hostname, number of installed extensions, device’s domain name, and the operating system platform

Clever bad actors can do more, even if the only information they have is the description and code screen shot in the Bleeping Computer article.

Why? You are going to love the answer suggested in the report:

“Unfortunately, traditional endpoint security tools (EDRs) do not detect this activity (as we’ve demonstrated examples of RCE for select organizations during the responsible disclosure process), VSCode is built to read lots of files and execute many commands and create child processes, thus EDRs cannot understand if the activity from VSCode is legit developer activity or a malicious extension.”

That’s special.

The article reports that the research team poked around in the Visual Studio Code Marketplace and discovered:

- 1,283 items with known malicious code (229 million installs).

- 8,161 items communicating with hardcoded IP addresses.

- 1,452 items running unknown executables.

- 2,304 items using another publisher’s GitHub repo, indicating they are a copycat.
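One of the signals in the list above, hardcoded IP addresses, is easy to illustrate. What follows is a minimal sketch, not the researchers’ method: it assumes a plain regular-expression pass over an extension’s source text, and the function name `find_hardcoded_ips` and the sample string are hypothetical. A real review would also need to handle IPv6, obfuscated strings, and false positives such as four-part version numbers.

```python
import ipaddress
import re

# Candidate dotted-quad pattern. The lookarounds keep the match from starting
# or ending inside a longer run of digits and dots (for example "1.2.3.4.5").
IPV4_CANDIDATE = re.compile(r"(?<![\d.])(\d{1,3}(?:\.\d{1,3}){3})(?![\d.])")


def find_hardcoded_ips(source: str) -> list[str]:
    """Return unique, valid IPv4 literals in source, skipping loopback and 0.0.0.0.

    Hypothetical helper for illustration only; it is not taken from the
    Bleeping Computer article or from any VSCode tooling.
    """
    found: list[str] = []
    for match in IPV4_CANDIDATE.finditer(source):
        candidate = match.group(1)
        try:
            addr = ipaddress.IPv4Address(candidate)
        except ValueError:
            continue  # not a valid address, e.g. an octet above 255
        if addr.is_loopback or addr.is_unspecified:
            continue  # local development addresses are not interesting here
        if candidate not in found:
            found.append(candidate)
    return found


# Made-up extension snippet; 203.0.113.7 is from a documentation-only range.
sample = 'post("https://203.0.113.7/collect"); const dev = "127.0.0.1";'
print(find_hardcoded_ips(sample))  # → ['203.0.113.7']
```

A hit from a scan like this is a reason to look closer, not proof of malice. Plenty of legitimate code talks to a fixed address.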

Bleeping Computer says:

Microsoft’s lack of stringent controls and code reviewing mechanisms on the VSCode Marketplace allows threat actors to perform rampant abuse of the platform, with it getting worse as the platform is increasingly used.

Interesting.

Let’s step back. The US Federal government prodded Microsoft to step up its security efforts. The MSFT leadership said, “By golly, we will.”

Several observations are warranted:

- I am not sure I am able to believe anything Microsoft says about security.

- I do not believe a “culture” of security exists within Microsoft. There is a culture, but it is not one which takes security seriously, even after a butt spanking by the US Federal government and Microsoft Certified Partners who have to work to address their clients’ issues. (How do I know this? On Wednesday, June 8, 2024, a person at the TechnoSecurity & Digital Forensics Conference told me, “I have to take a break. The security problems with Microsoft are killing me.”)

- The “leadership” at Microsoft is loved by Wall Street. However, others fail to respond with hearts and flowers.

Net net: Microsoft poses a grave security threat to government agencies and the users of Microsoft products. Talking with dulcet tones may make some people happy. I think there are others who believe Microsoft wants government contracts. Its employees want an easy life, money, and respect. Would you hire a former Microsoft security professional? This is not a question of trust; this is a question of malfeasance. Smooth talking is the priority, not security.

Stephen E Arnold, June 11, 2024