Ah, Google, Great App Screening

June 19, 2024

Doesn’t Google review apps before putting them in its online store? If so, apparently not very well. Mashable warns, “In Case You Missed It: Bank Info-Stealing Malware Found in 90+ Android Apps with 5.5M Installs.” Some of these apps capture this sensitive data with the help of an advanced trojan called Anatsa. Reporter Cecily Mauran writes:

“As of Thursday [May 30], Google has banned the apps identified in the report, according to BleepingComputer. Anatsa, also known as ‘TeaBot,’ and other malware in the report, are dropper apps that masquerade as PDF and QR code readers, photography, and health and fitness apps. As the outlet reported, the findings demonstrate the ‘high risk of malicious dropper apps slipping through the cracks in Google’s review process.’ Although Anatsa only accounts for around two percent of the most popular malware, it does a lot of damage. It’s known for targeting over 650 financial institutions — and two of its PDF and QR code readers had both amassed over 70,000 downloads at the time the report was published. Once installed as a seemingly legitimate app, Anatsa uses advanced techniques to avoid detection and gain access to banking information. The two apps mentioned in the report were called ‘PDF Reader and File Manager’ by Tsarka Watchfaces and ‘QR Reader and File Manager’ by risovanul. So, they definitely have an innocuous look to unsuspecting Android users.”

The article reports Anatsa and other malware were found in these categories: file managers, editors, translators, photography, productivity, and personalization apps. It is possible Google caught all the Anatsa-carrying apps, but one should be careful just in case.

Cynthia Murrell, June 19, 2024

MSFT: Security Is Not Job One. News or Not?

June 11, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The idea that free and open source software contains digital trap falls is one thing. Poisoned libraries which busy and confident developers snap into their software should not surprise anyone. What I did not expect was the information in “Malicious VSCode Extensions with Millions of Installs Discovered.” The write up in Bleeping Computer reports:

A group of Israeli researchers explored the security of the Visual Studio Code marketplace and managed to “infect” over 100 organizations by trojanizing a copy of the popular ‘Dracula Official’ theme to include risky code. Further research into the VSCode Marketplace found thousands of extensions with millions of installs.

I heard the “Job One” and “Top Priority” assurances before. So far, bad actors keep exploiting vulnerabilities and minimal progress is made. Thanks, MSFT Copilot, definitely close enough for horseshoes.

The write up points out:

Previous reports have highlighted gaps in VSCode’s security, allowing extension and publisher impersonation and extensions that steal developer authentication tokens. There have also been in-the-wild findings that were confirmed to be malicious.

How bad can this be? This be bad. Malicious code can be inserted into an extension, and it happily delivers to a remote server via an HTTPS POST such information as:

the hostname, number of installed extensions, device’s domain name, and the operating system platform

Clever bad actors can do more even if the information they have is the description and code screen shot in the Bleeping Computer article.

Why? You are going to love the answer suggested in the report:

“Unfortunately, traditional endpoint security tools (EDRs) do not detect this activity (as we’ve demonstrated examples of RCE for select organizations during the responsible disclosure process), VSCode is built to read lots of files and execute many commands and create child processes, thus EDRs cannot understand if the activity from VSCode is legit developer activity or a malicious extension.”

That’s special.

The article reports that the research team poked around in the Visual Studio Code Marketplace and discovered:

- 1,283 items with known malicious code (229 million installs).

- 8,161 items communicating with hardcoded IP addresses.

- 1,452 items running unknown executables.

- 2,304 items using another publisher’s GitHub repo, indicating they are a copycat.
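One of the checks in the list above, spotting items that communicate with hardcoded IP addresses, is easy to approximate. The Python sketch below is a hypothetical illustration, not the researchers’ tooling: the function name `find_hardcoded_ips` and the sample string are assumptions, and a real scanner would also need to handle IPv6, obfuscated strings, and bundled or minified code.

```python
import ipaddress
import re

# Candidate dotted-quad pattern; each candidate is validated below.
# The lookarounds reject longer dotted runs such as version strings (1.2.3.4.5).
_IPV4_CANDIDATE = re.compile(r"(?<![\d.])(\d{1,3}(?:\.\d{1,3}){3})(?![\d.])")


def find_hardcoded_ips(source_text: str) -> list[str]:
    """Return public-looking IPv4 literals found in extension source text."""
    found: list[str] = []
    for match in _IPV4_CANDIDATE.finditer(source_text):
        candidate = match.group(1)
        try:
            address = ipaddress.IPv4Address(candidate)
        except ipaddress.AddressValueError:
            continue  # e.g. 999.1.1.1 is not a valid address
        # Loopback, private, and unspecified addresses are common in benign code.
        if address.is_loopback or address.is_private or address.is_unspecified:
            continue
        if candidate not in found:
            found.append(candidate)
    return found


if __name__ == "__main__":
    # Hypothetical snippet of extension code, invented for this example.
    sample = 'fetch("https://8.8.8.8/collect"); const dev = "127.0.0.1";'
    print(find_hardcoded_ips(sample))
```

A hit from a check like this is only a lead for a human reviewer. Plenty of legitimate extensions contain IP literals, which is one reason the counts in the list should be read as indicators rather than verdicts.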

Bleeping Computer says:

Microsoft’s lack of stringent controls and code reviewing mechanisms on the VSCode Marketplace allows threat actors to perform rampant abuse of the platform, with it getting worse as the platform is increasingly used.

Interesting.

Let’s step back. The US Federal government prodded Microsoft to step up its security efforts. The MSFT leadership said, “By golly, we will.”

Several observations are warranted:

- I am not sure I am able to believe anything Microsoft says about security.

- I do not believe a “culture” of security exists within Microsoft. There is a culture, but it is not one which takes security seriously after a butt spanking by the US Federal government and Microsoft Certified Partners who have to work to address their clients’ issues. (How do I know this? On Wednesday, June 8, 2024, at the TechnoSecurity & Digital Forensics Conference, one such partner told me, “I have to take a break. The security problems with Microsoft are killing me.”)

- The “leadership” at Microsoft is loved by Wall Street. However, others fail to respond with hearts and flowers.

Net net: Microsoft poses a grave security threat to government agencies and the users of Microsoft products. Talking with dulcet tones may make some people happy. I think there are others who believe Microsoft wants government contracts. Its employees want an easy life, money, and respect. Would you hire a former Microsoft security professional? This is not a question of trust; this is a question of malfeasance. Smooth talking is the priority, not security.

Stephen E Arnold, June 11, 2024

Allegations of Personal Data Flows from X.com to Au10tix

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I work from my dinobaby lair in rural Kentucky. What the heck do I know about Hod HaSharon, Israel? The answer is, “Not much.” However, I read an online article called “Elon Musk Now Requiring All X Users Who Get Paid to Send Their Personal ID Details to Israeli Intelligence-Linked Corporation.” I am not sure if the statements in the write up are accurate. I want to highlight some items from the write up because I have not seen information about this interesting identity verification process in my other feeds. This could be the second most covered news item in the last week or two. Number one goes to Google’s telling people to eat a rock a day and its weird “not our fault” explanation of its quantumly supreme technology.

Here’s what I carried away from this X to Au10tix write up. (A side note: Intel outfits like obscure names. In this case, Au10tix is a cute conversion of the word authentic to a unique string of characters. Aw ten tix. Get it?)

Yes, indeed. There is an outfit called Au10tix, and it is based about 60 miles north of Jerusalem, not in the intelware capital of the world, Tel Aviv. The company, according to the cited write up, has a deal with Elon Musk’s X.com. The write up asserts:

X now requires new users who wish to monetize their accounts to verify their identification with a company known as Au10tix. While creator verification is not unusual for online platforms, Elon Musk’s latest move has drawn intense criticism because of Au10tix’s strong ties to Israeli intelligence. Even people who have no problem sharing their personal information with X need to be aware that the company they are using for verification is connected to the Israeli government. Au10tix was founded by members of the elite Israeli intelligence units Shin Bet and Unit 8200.

Sounds scary. But that’s the point of the article. I would like to remind you, gentle reader, that Israel’s vaunted intelligence systems failed as recently as October 2023. That event was described to me by one of the country’s former intelligence professionals as “our 9/11.” Well, maybe. I think it made clear that the intelware does not work as advertised in some situations. I don’t have first-hand information about Au10tix, but I would suggest some caution before engaging in flights of fancy.

The write up presents as actual factual information:

The executive director of the Israel-based Palestinian digital rights organization 7amleh, Nadim Nashif, told the Middle East Eye: “The concept of verifying user accounts is indeed essential in suppressing fake accounts and maintaining a trustworthy online environment. However, the approach chosen by X, in collaboration with the Israeli identity intelligence company Au10tix, raises significant concerns. “Au10tix is located in Israel and both have a well-documented history of military surveillance and intelligence gathering… this association raises questions about the potential implications for user privacy and data security.” Independent journalist Antony Loewenstein said he was worried that the verification process could normalize Israeli surveillance technology.

What the write up did not provide is significant detail. The write up reports:

Au10tix has also created identity verification systems for border controls and airports and formed commercial partnerships with companies such as Uber, PayPal and Google.

My team’s research into online gaming found suggestions that the estimable 888 Holdings may have a relationship with Au10tix. The company pops up in some of our research into facial recognition verification. The Israeli gig work outfit Fiverr.com seems to be familiar with the technology as well. I want to point out that one of the Fiverr gig workers based in the UK reported to me that she was no longer “recognized” by the Fiverr.com system. Yeah, October 2023 style intelware.

Who operates the company? Heading back into my files, I spotted a few names. These individuals may no longer be involved in the company, but several names remind me of individuals who have been active in the intelware game for a few years:

- Ron Atzmon: Chairman (Unit 8200, which was not on the ball in October 2023, it seems)

- Ilan Maytal: Chief Data Officer

- Omer Kamhi: Chief Information Security Officer

- Erez Hershkovitz: Chief Financial Officer (formerly of the very interesting intel-related outfit Voyager Labs, a company about which the Brennan Center has a tidy collection of information related to the LAPD)

The company’s technology is available in the Azure Marketplace. That description identifies three core functions of Au10tix’ systems:

- Identity verification. Allegedly the system has real-time identity verification. Hmm. I wonder why it took quite a bit of time to figure out who did what in October 2023. That question is probably unfair because it appears no patrols or systems “saw” what was taking place. But, I should not nit pick. The Azure service includes a “regulatory toolbox including disclaimer, parental consent, voice and video consent, and more.” That disclaimer seems helpful.

- Biometrics verification. Again, this is an interesting assertion. As imagery of the October 2023 event emerged, I asked myself, “How did those ID to selfie, selfie to selfie, and selfie to token matches work?” Answer: Ask the families of those killed.

- Data screening and monitoring. The system can “identify potential risks and negative news associated with individuals or entities.” That might be helpful in building automated profiles of individuals by companies licensing the technology. I wonder if this capability can be hooked to other Israeli spyware systems to provide a particularly helpful, real-time profile of a person of interest?

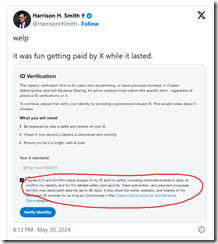

Let’s assume the write up is accurate and X.com is licensing the technology. X.com — according to “Au10tix Is an Israeli Company and Part of a Group Launched by Members of Israel’s Domestic Intelligence Agency, Shin Bet” — now includes this

The circled segment of the social media post says:

I agree to X and Au10tix using images of my ID and my selfie, including extracted biometric data to confirm my identity and for X’s related safety and security, fraud prevention, and payment purposes. Au10tix may store such data for up to 30 days. X may store full name, address, and hashes of my document ID number for as long as I participate in the Creator Subscription or Ads Revenue Share program.
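The consent text mentions storing “hashes of my document ID number.” Neither the post nor the write up says which hash function, salt, or key X uses, so the Python sketch below is an illustration built on assumptions: SHA-256 is assumed, the five-digit ID is hypothetical, and the function names are invented. It shows why a plain hash of a short numeric identifier is weak protection if a server is breached, and how a keyed hash (HMAC) raises the bar.

```python
import hashlib
import hmac
from typing import Optional


def unsalted_hash(id_number: str) -> str:
    """SHA-256 of the raw ID number (assumed scheme, for illustration only)."""
    return hashlib.sha256(id_number.encode("utf-8")).hexdigest()


def keyed_hash(id_number: str, secret_key: bytes) -> str:
    """HMAC-SHA-256 with a server-side secret; guessing now requires the key."""
    return hmac.new(secret_key, id_number.encode("utf-8"), hashlib.sha256).hexdigest()


def brute_force(target_hash: str, digits: int) -> Optional[str]:
    """Try every numeric ID of the given length against an unsalted hash."""
    for n in range(10 ** digits):
        guess = str(n).zfill(digits)
        if unsalted_hash(guess) == target_hash:
            return guess
    return None


if __name__ == "__main__":
    leaked = unsalted_hash("04217")  # hypothetical five-digit document number
    print(brute_force(leaked, digits=5))
```

Real document numbers are longer than five digits, but they are still drawn from a small, structured space, so the same enumeration scales with modest hardware. Whether X’s stored hashes are exposed in this way depends on implementation details that are not public.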

This dinobaby followed the October 2023 event with shock and surprise. The dinobaby has long been a champion of Israel’s intelware capabilities, and I have done some small projects for firms which I am not authorized to identify. Now I am skeptical and more critical. What if X’s identity service is compromised? What if the servers are breached and the data exfiltrated? What if the system does not work and downstream financial fraud is enabled by X’s push beyond short text messaging? Much intelware is little more than glorified and old-fashioned search and retrieval.

Does Mr. Musk or other commercial purchasers of intelware know about cracks and fissures in intelware systems which allowed the October 2023 event to be undetected until live-fire reports arrived? This tie up is interesting and is worth monitoring.

Stephen E Arnold, June 4, 2024

Telegram: No Longer Just Mailing It In

May 29, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Allegedly about 900 million people “use” Telegram. More are going to learn about the platform as the company comes under more European Union scrutiny, kicks the tires for next-generation obfuscation technology, and becomes a best friend of Microsoft… for now. “Telegram Gets an In-App Copilot Bot” reports:

Microsoft has added an official Copilot bot within the messaging app Telegram, which lets users search, ask questions, and converse with the AI chatbot. Copilot for Telegram is currently in beta but is free for Telegram users on mobile or desktop. People can chat with Copilot for Telegram like a regular conversation on the messaging app. Copilot for Telegram is an official Microsoft bot (make sure it’s the one with the checkmark and the username @CopilotOfficialBot).

You can “try it now.” Just navigate to Microsoft “Copilot for Telegram.” At this location, you can:

Meet your new everyday AI companion: Copilot, powered by GPT, now on Telegram. Engage in seamless conversations, access information, and enjoy a smarter chat experience, all within Telegram.

A dinobaby lecturer explains the Telegram APIs and its bot function for automating certain operations within the Telegram platform. Some in the class are looking at TikTok, scrolling Instagram, or reading about a breakthrough in counting large numbers of objects using a unique numerical recipe. But Telegram? WhatsApp and Signal are where the action is, right? Thanks, MSFT Copilot. You are into security and now Telegram. Keep your focus, please.

Next week, I will deliver a talk about Telegram and some related information about obfuscated messaging at the TechnoSecurity & Digital Forensics Conference. I no longer do too many lectures because I am an 80 year old dinobaby, and I hate flying and standing around talking to people 50 years younger than I. However, my team’s research into end-to-end encrypted messaging yielded some interesting findings. At the 2024 US National Cyber Crime Conference about 260 investigators listened to my 75 minute talk, and a number of them said, “We did not know that.” I will also do a Telegram-centric lecture at another US government event in September. But in this short post, I want to cover what the “deal” with Microsoft suggests.

Let’s get to it.

Telegram operates out of Dubai. The distributed team of engineers has been adding features and functions to what began as a messaging app in Russia. The “legend” of Telegram is an interesting story, but I remain skeptical about the company, its links with a certain country, and the direction in which the firm is headed. If you are not familiar with the service, it has morphed into a platform with numerous interesting capabilities. For some actors, Telegram’s services can replace, and have replaced, the Dark Web. Examples include contraband, “personal” services, and streaming video to thousands of people. Some Telegram users pay to get “special” programs. (Please, use your imagination.) Note: Messages on Telegram are not end-to-end encrypted by default as they are on some other E2EE messaging applications.

Why is Telegram undergoing this shift from humble messaging app to a platform? Our research suggests that there are three reasons. I want to point out that Pavel Durov does not have a public profile on the scale of a luminary like Elon Musk or Sam AI-Man, but he is out and about. He conducted an “exclusive” and possibly red-herring discussion with Tucker Carlson in April 2024. After the interview, Mr. Durov took direct action to block certain message flows from Ukraine into Russia. That may be one reason: Telegram is actively steering information about Ukraine’s view of Mr. Putin’s special operation. Yep, freedom.

Are there others? Let me highlight three:

- Mr. Durov and his brother, who allegedly is like a person with two PhDs, see an opportunity to make money. The Durovs, however, are not hurting for cash.

- American messaging apps have been fat and lazy. Mr. Durov is an innovator, and he wants to make darned sure that he runs rings around Signal, WhatsApp, and a number of other outfits. Ego? My team thinks that is part of Mr. Durov’s motivation.

- Telegram is expanding because it may not be an independent, free-wheeling outfit. Several on my team think that Mr. Durov answers to a higher authority. Is that authority aligned with the US? Probably not.

Now the Microsoft deal?

Several questions may get your synapses in gear:

- Where are the data flowing through Telegram located / stored geographically? The service can regenerate some useful information for a user with a new device.

- Why tout freedom and free speech in April 2024 and several weeks later apply restrictions on data flow? Does this suggest a capability to monitor by user, by content type, and by other metadata?

- Why is Telegram exploring additional network enhancements? My team thinks that Mr. Durov has some innovations in obfuscation planned. If the company does implement certain technologies freely disclosed in US patents, what will that mean for analysts and investigators?

- Why a tie up with Microsoft? Whose idea was this? Who benefits from the metadata? What happens if Telegram has some clever ideas about smart software and the Telegram bot function?

Net net: Not too many people in Europe’s regulatory entities have paid much attention to Telegram. The entities of interest have been bigger fish. Now Telegram is growing faster than a Chernobyl boar stuffed on radioactive mushrooms. The EU is recalibrating for Telegram at this time. In the US, the “I did not know” reaction provides some insight into general knowledge about Telegram’s more interesting functions. Think pay-to-view streaming video about certain controversial subjects. Free storage and data transfer is provided by Telegram, a company which does not embrace the Netflix approach to entertainment. Telegram is, as I explain in my lectures, interesting, very interesting.

Stephen E Arnold, May 29, 2024

Google Dings MSFT: Marketing Motivated by Opportunism

May 21, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

While not as exciting as Jake Paul versus Mike Tyson, the dust up is interesting. The developments leading up to this report about Google criticizing Microsoft’s security methods have a bit of history:

- Microsoft embraced OpenAI, Mistral, and other smart software because regulators are in meetings about regulating.

- Google learned that after tire kicking, Apple found OpenAI (Microsoft’s pal) more suitable to the now innovation challenged iPhone. Google became a wallflower, a cute one, but a wallflower nevertheless.

- Google faces trouble on three fronts: [a] Its own management of technology and its human resources; [b] threats to its online advertising and brokering business; and [c] challenges in cost control. (Employees get fired, and CFOs leave for a reason.)

Google is not a marketing outfit nor is it one that automatically evokes images associated with trust, data privacy, and people sensitivity. Google seized an opportunity to improve Web search. When forced to monetize, the company found inspiration in the online advertising “pay to play” ideas of Yahoo (Overture and GoTo). There was a legal dust up and Google paid up for that Eureka! moment. Then Google rode the demand for matching ads to queries. After 25 years, Google remains dependent on its semi-automated ad business. Now that business must be supplemented with enterprise cloud revenue.

Two white collar victims of legal witch hunts discuss “trust”. Good enough, MSFT Copilot.

How does the company market while the Red Alert klaxon blares into the cubicles, Google Meet sessions, and the Foosball game areas?

The answer appears in “Google Attacks Microsoft Cyber Failures in Effort to Steal Customers.” I wonder if Foundem and the French taxation authority might find Google’s bandying about the word “steal” ironic? I don’t know the answer to this question. The title indicates that Microsoft’s security woes, recently publicized by the US government, provide a marketing opportunity.

The article reports Google’s grand idea this way:

Government agencies that switch 500 or more users to Google Workspace Enterprise Plus for three years will get one year free and be eligible for a “significant discount” for the rest of the contract, said Andy Wen, the senior director of product management for Workspace. The Alphabet Inc. division is offering 18 months free to corporate customers that sign a three-year contract, a hefty discount after that and incident response services from Google’s Mandiant security business. All customers will receive free consulting services to help them make the switch.

The idea that Google is marketing is an interesting one. Like Telegram, Google has not been a long-time advocate of Madison Avenue advertising, marketing, and salesmanship. I was once retained by a US government agency to make a phone call to one of my “interaction points” at Google so that the director of the agency could ask a question about the quite pricey row of yellow Google Search Appliances. I made the call and obtained the required information. I also got paid. That’s some marketing in my opinion: an old person from rural Kentucky intermediating between a senior government official and a manager in one of Google’s mind boggling puzzle palaces.

I want to point out that Google’s assertions about security may be overstated. One recent example is the Register’s report “Google Cloud Shows It Can Break Things for Lots of Customers – Not Just One at a Time.” Is this a security issue? My hunch is that whenever something breaks, security becomes an issue. Why? Rushed fixes may introduce additional vulnerabilities on top of the “good enough” engineering approach implemented by many high-flying, boastful, high-technology outfits. The Register says:

In the week after its astounding deletion of Australian pension fund UniSuper’s entire account, you might think Google Cloud would be on its very best behavior. Nope.

So what? When one operates at Google scale, the “what” is little more than users of 33 Google Cloud services were needful of some of that YouTube TV Zen moment stuff.

My reaction is that these giant outfits, which are making clear that single points of failure are the norm in today’s online environment, may not do the “fail over” or “graceful recovery” processes with the elegance of Mikhail Baryshnikov’s turning point solo move. Google is obviously still struggling with the after effects of Microsoft’s OpenAI announcement and the flops like the Sundar & Prabhakar Comedy Show in Paris and the “smart software” producing images orthogonal to historical fact.

Online advertising expertise may not correlate with marketing finesse.

Stephen E Arnold, May 21, 2024

Germany Has Had It with Some Microsoft Products

May 20, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Can Schleswig-Holstein succeed where Munich and Lower Saxony failed? Those two German governments tried switching their official IT systems from Microsoft to open source software but were forced to reverse course. Emboldened by Microsoft’s shove to adopt Windows 11 and Office 365, informed by its neighbors’ defeats, and armed with three years of planning, Germany’s northernmost state is forging ahead. The Register frames the initiative as an epic battle in “Open Source Versus Microsoft: The New Rebellion Begins.”

With cries of “Digital Sovereignty,” Schleswig-Holstein shakes its fist at its corporate overlord. Beginning with the aptly named LibreOffice suite, these IT warriors plan to replace Microsoft products top to bottom with open source alternatives. Writer Rupert Goodwins notes open source software has improved since Munich and Lower Saxony were forced to retreat, but will that be enough? He considers:

“Microsoft has a lot of cards to play here. Schleswig-Holstein will have to maintain compatibility with Windows within its own borders, with the German federation, with Europe, and the rest of the world. If a change to Windows happens to break that compatibility, guess who picks up the pain and the bills. Microsoft wouldn’t dream of doing that deliberately, no matter how high the stakes, yet these things happen. Freedom to innovate, don’t you know. If in five years the transition is a success, the benefits to the state, the people, and open source will be immeasurable. As well as bringing data protection back to those charged with providing it, it will give European laws new teeth. It will increase expertise, funding, and opportunities for open source. Schleswig-Holstein itself will become a new hub of technical excellence in an area that intensely interests the rest of the world, in public and private organizations. Microsoft cannot afford to let this happen. Schleswig-Holstein cannot back down, now it’s made it a battle for independence.”

See the write-up for more warfare language as well as Goodwins’ likening of user agreements to the classic suzerain-vassal relationship. Will Schleswig-Holstein emerge victorious, or will mighty Microsoft prevail? Governments depend on Microsoft. The US is now putting pressure on the Softies to do something more than making Windows 11 more annoying and creating a Six Flags Over Cyber Crime with their security methods. Will anything change? Nah.

Cynthia Murrell, May 22, 2024

Researchers Reveal Vulnerabilities Across Pinyin Keyboard Apps

May 9, 2024

Conventional keyboards were designed for languages based on the Roman alphabet. Fortunately, apps exist to adapt them to languages with other writing systems, like Chinese, Japanese, and Korean. Unfortunately, such tools can pave the way for bad actors to capture sensitive information. Researchers at the Citizen Lab have found vulnerabilities in many pinyin keyboard apps, which let users type Chinese characters by entering their romanized (pinyin) spellings. Gee, how could those have gotten there? The post, “The Not-So-Silent Type,” presents their results. Writers Jeffrey Knockel, Mona Wang, and Zoë Reichert summarize the key findings:

- “We analyzed the security of cloud-based pinyin keyboard apps from nine vendors — Baidu, Honor, Huawei, iFlytek, OPPO, Samsung, Tencent, Vivo, and Xiaomi — and examined their transmission of users’ keystrokes for vulnerabilities.

- Our analysis revealed critical vulnerabilities in keyboard apps from eight out of the nine vendors in which we could exploit that vulnerability to completely reveal the contents of users’ keystrokes in transit. Most of the vulnerable apps can be exploited by an entirely passive network eavesdropper.

- Combining the vulnerabilities discovered in this and our previous report analyzing Sogou’s keyboard apps, we estimate that up to one billion users are affected by these vulnerabilities. Given the scope of these vulnerabilities, the sensitivity of what users type on their devices, the ease with which these vulnerabilities may have been discovered, and that the Five Eyes have previously exploited similar vulnerabilities in Chinese apps for surveillance, it is possible that such users’ keystrokes may have also been under mass surveillance.

- We reported these vulnerabilities to all nine vendors. Most vendors responded, took the issue seriously, and fixed the reported vulnerabilities, although some keyboard apps remain vulnerable.”

See the article for all the details. It describes the study’s methodology, gives specific findings for each of those app vendors, and discusses the ramifications of the findings. Some readers may want to skip to the very detailed Summary of Recommendations. It offers suggestions to fellow researchers, international standards bodies, developers, app store operators, device manufacturers, and, finally, keyboard users.

The interdisciplinary Citizen Lab is based at the Munk School of Global Affairs & Public Policy, University of Toronto. Its researchers study the intersection of information and communication technologies, human rights, and global security.

Cynthia Murrell, May 9, 2024

Google Stomps into the Threat Intelligence Sector: AI and More

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Before commenting on Google’s threat services news, I want to remind you of the link to the list of Google initiatives which did not survive. You can find the list at Killed by Google. I want to mention this resource because Google’s product innovation and management methods are interesting to say the least. Operating in Code Red or Yellow Alert or whatever the Google crisis buzzword is, generating sustainable revenue beyond online advertising has proven to be a bit of a challenge. Google is more comfortable using such methods as [a] buying something and trying to scale it, [b] imitating another firm’s innovation, and [c] dumping big money into secret projects in the hopes that what comes out will not result in the firm’s getting its “glass” kicked to the curb.

Google makes a big entrance at the RSA Conference. Thanks, MSFT Copilot. Have you considered purchasing Google’s threat intelligence service?

With that as background, Google has introduced an “unmatched” cyber security service. The information was described at the RSA security conference and in a quite Googley blog post “Introducing Google Threat Intelligence: Actionable threat intelligence at Google Scale.” Please, note the operative word “scale.” If the service does not make money, Google will “not put wood behind” the effort. People won’t work on the project, and it will be left to dangle in the wind or just shot like Cricket, a now famous example of animal husbandry. (Google’s Cricket was the Google Appliance. Remember that? Take over the enterprise search market. Nope. Bang, hasta la vista.)

Google’s new service aims squarely at the comparatively well-established and now maturing cyber security market. I have to check to see who owns what. Venture firms and others with money have been buying promising cyber security firms. Google owned a piece of Recorded Future. Now Recorded Future is owned by a third party outfit called Insight. Darktrace has been or will be purchased by Thoma Bravo. Consolidation is underway. Thus, it makes sense to Google to enter the threat intelligence market, using its Mandiant unit as a springboard, one of those home diving boards, not the cliff in Acapulco diving platform.

The write up says:

we are announcing Google Threat Intelligence, a new offering that combines the unmatched depth of our Mandiant frontline expertise, the global reach of the VirusTotal community, and the breadth of visibility only Google can deliver, based on billions of signals across devices and emails. Google Threat Intelligence includes Gemini in Threat Intelligence, our AI-powered agent that provides conversational search across our vast repository of threat intelligence, enabling customers to gain insights and protect themselves from threats faster than ever before.

Google to its credit did not trot out the “quantum supremacy” lingo, but the marketers did assert that the service offers “unmatched visibility in threats.” I like the “unmatched.” Not supreme, just unmatched. The graphic below illustrates the elements of the unmatchedness:

Credit to the Google 2024

But where is artificial intelligence in the diagram? Don’t worry. The blog explains that Gemini (Google’s AI “system”) delivers

AI-driven operationalization

But the foundation of the new service is Gemini, which does not appear in the diagram. That does not matter, the Code Red crowd explains:

Gemini 1.5 Pro offers the world’s longest context window, with support for up to 1 million tokens. It can dramatically simplify the technical and labor-intensive process of reverse engineering malware — one of the most advanced malware-analysis techniques available to cybersecurity professionals. In fact, it was able to process the entire decompiled code of the malware file for WannaCry in a single pass, taking 34 seconds to deliver its analysis and identify the kill switch. We also offer a Gemini-driven entity extraction tool to automate data fusion and enrichment. It can automatically crawl the web for relevant open source intelligence (OSINT), and classify online industry threat reporting. It then converts this information to knowledge collections, with corresponding hunting and response packs pulled from motivations, targets, tactics, techniques, and procedures (TTPs), actors, toolkits, and Indicators of Compromise (IoCs). Google Threat Intelligence can distill more than a decade of threat reports to produce comprehensive, custom summaries in seconds.

I like the “indicators of compromise.”

Several observations:

- Will this service be another Google Appliance-type play for the enterprise market? It is too soon to tell, but with the pressure mounting from regulators, staff management issues, competitors, and savvy marketers in Redmond, “indicators” of success will be known in the next six to 12 months.

- Is this a business or just another item on a punch list? The answer to the question may be provided by what the established players in the threat intelligence market do and what actions Amazon and Microsoft take. Is a new round of big money acquisitions going to begin?

- Will enterprise customers “just buy Google”? Chief security officers have demonstrated that buying multiple security systems is a “safe” approach to a job which is difficult: Protecting their employers from deeply flawed software and years of ignoring online security.

Net net: In a maturing market, three factors may signal how the big, new Google service will develop. These are [a] price, [b] perceived efficacy, and [c] avoidance of a major issue like the SolarWinds’ matter. I am rooting for Googzilla, but I still wonder why Google shifted from Recorded Future to acquisitions and me-too methods. Oh, well. I am a dinobaby and cannot be expected to understand.

Stephen E Arnold, May 7, 2024

Microsoft Security Messaging: Which Is What?

May 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I am a dinobaby. I am easily confused. I read two “real” news items and came away confused. The first story is “Microsoft Overhaul Treats Security As Top Priority after a Series of Failures.” The subtitle is interesting too because it links “security” to monetary compensation. That’s an incentive, but why isn’t security just part of work at an alleged monopoly’s products and services? I surmise the answer is, “Because security costs money, a lot of money.” That article asserts:

After a scathing report from the US Cyber Safety Review Board recently concluded that “Microsoft’s security culture was inadequate and requires an overhaul,” it’s doing just that by outlining a set of security principles and goals that are tied to compensation packages for Microsoft’s senior leadership team.

Okay. But security emerges from basic engineering decisions; for instance, does a developer spend time figuring out and resolving security when dependencies are unknown or documented only by a grousing user in a comment posted on a technical forum? Or, does the developer include a new feature and move on to the next task, assuming that someone else or an automated process will make sure everything works without opening the door to the curious bad actor? I think that Microsoft assumes it deploys secure systems and that its customers have the responsibility to ensure their systems’ security.

The cyber racoons found the secure picnic basket was easily opened. The well-fed, previously content humans seem dismayed that their goodies were stolen. Thanks, MSFT Copilot. Definitely good enough.

The write up adds that Microsoft has three security principles and six security pillars. I won’t list these because the words chosen strike me like those produced by a lawyer, an MBA, and a large language model. Remember. I am a dinobaby. Six plus three is nine things. Some car executive said a long time ago, “Two objectives is no objective.” I would add nine generalizations are not a culture of security. Nine is like Microsoft Word features. No one can keep track of them because most users use Word to produce Words. The other stuff is usually confusing, in the way, or presented in a way that finding a specific feature is an exercise in frustration. Is Word secure? Sure, just download some nifty documents from a frisky Telegram group or the Dark Web.

The write up concludes with a weird statement. Let me quote it:

I reported last month that inside Microsoft there is concern that the recent security attacks could seriously undermine trust in the company. “Ultimately, Microsoft runs on trust and this trust must be earned and maintained,” says Bell. “As a global provider of software, infrastructure and cloud services, we feel a deep responsibility to do our part to keep the world safe and secure. Our promise is to continually improve and adapt to the evolving needs of cybersecurity. This is job #1 for us.”

First, there is the notion of trust. Perhaps Edge’s persistence and advertising in the start menu, SolarWinds, and the legions of Chinese and Russian bad actors undermine whatever trust exists. Most users are clueless about security issues baked into certain systems. They assume; they don’t trust. Cyber security professionals buy third party security solutions like shopping at a grocery store. Big companies’ senior executives don’t understand why the problem exists. Lawyers and accountants understand many things. Digital security is often not a core competency. “Let the cloud handle it,” sounds pretty good when the fourth IT manager or the third security officer quit this year.

Now the second write up. “Microsoft’s Responsible AI Chief Worries about the Open Web.” First, recall that Microsoft owns GitHub, a very convenient source for individuals looking to perform interesting tasks. Some are good tasks like snagging a script to perform a specific function for a church’s database. Other software does interesting things in order to help a user shore up security. Rapid7’s metasploit-framework is an interesting example. Almost anyone can find quite a bit of useful software on GitHub. When I lectured in a central European country’s main technical university, the students were familiar with GitHub. Oh, boy, were they.

In this second write up I learned that Microsoft has released a 39 page “report” which looks a lot like a PowerPoint presentation created by a blue-chip consulting firm. You can download the document at this link, at least you could as of May 6, 2024. “Security” appears 78 times in the document. There are “security reviews.” There is “cybersecurity development” and a reference to something called “Our Aether Security Engineering Guidance.” There is “red teaming” for biosecurity and cybersecurity. There is security in Azure AI. There are security reviews. There is the use of Copilot for security. There is something called PyRIT which “enables security professionals and machine learning engineers to proactively find risks in their generative applications.” There is partnering with MITRE for security guidance. And there are four footnotes to the document about security.

What strikes me is that security is definitely a popular concept in the document. But the principles and pillars apparently require AI context. As I worked through the PowerPoint, I formed the opinion that a committee worked with a small group of wordsmiths and crafted a rather elaborate word salad about going all in with Microsoft AI. Then the group added “security” the way my mother would chop up a red pepper and put it in a salad for color.

I want to offer several observations:

- Both documents suggest to me that Microsoft is now pushing “security” as Job One, a slogan used by the Ford Motor Co. (How are those Fords faring in the reliability ratings?) Saying words and doing are two different things.

- The rhetoric of the two documents reminds me of Gertrude’s statement, “The lady doth protest too much, methinks.” (Hamlet? Remember?)

- The US government, most large organizations, and many individuals “assume” that Microsoft has taken security seriously for decades. The jargon-and-blather PowerPoint makes clear that Microsoft is trying to find a nice way to say, “We are saying we will do better already. Just listen, people.”

Net net: Bandying about the word trust or the word security puts everyone on notice that Microsoft knows it has a security problem. But the key point is that bad actors know it, exploit the security issues, and believe that Microsoft software and services will be a reliable source of opportunity for mischief. Ransomware? Absolutely. Exposed data? You bet your life. Free hacking tools? Let’s go. Does Microsoft have a security problem? The word form is incorrect. Does Microsoft have security problems? You know the answer. Aether.

Stephen E Arnold, May 6, 2024

Microsoft: Security Debt and a Cooked Goose

May 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Microsoft has a deputy security officer. Who is it? For reasons of security, I don’t know. What I do know is that our test VPNs no longer work. That’s a good way to enforce reduced security: Just break Windows 11. (Oh, the pushed messages work just fine.)

Is Microsoft’s security goose cooked? Thanks, MSFT Copilot. Keep following your security recipe.

I read “At Microsoft, Years of Security Debt Come Crashing Down.” The idea is that technical debt has little hidden chambers, in this case, security debt. The write up says:

…negligence, misguided investments and hubris have left the enterprise giant on its back foot.

How has Microsoft responded? Great financial report and this type of news:

… in early April, the federal Cyber Safety Review Board released a long-anticipated report which showed the company failed to prevent a massive 2023 hack of its Microsoft Exchange Online environment. The hack by a People’s Republic of China-linked espionage actor led to the theft of 60,000 State Department emails and gained access to other high-profile officials.

Bad? Not as bad as this reminder that there are some concerning issues

What is interesting is that big outfits, government agencies, and start ups just use Windows. It’s ubiquitous, relatively cheap, and good enough. Apple’s software is fine, but it is different. Linux has its fans, but it is work. Therefore, hello Windows and Microsoft.

The article states:

Just weeks ago, the Cybersecurity and Infrastructure Security Agency issued an emergency directive, which orders federal civilian agencies to mitigate vulnerabilities in their networks, analyze the content of stolen emails, reset credentials and take additional steps to secure Microsoft Azure accounts.

The problem is that Microsoft has been successful in becoming for many government and commercial entities the only game in town. This warrants several observations:

- The Microsoft software ecosystem may be impossible to secure due to its size and complexity.

- Government entities from America to Zimbabwe find the software “good enough.”

- Security — despite the chit chat — is expensive and often given cursory attention by system architects, programmers, and clients.

The hope is that smart software will identify, mitigate, and choke off the cyber threats. At cyber security conferences, I wonder if the attendees are paying attention to Emily Dickinson (the sporty nun of Amherst), who wrote:

Hope is the thing with feathers

That perches in the soul

And sings the tune without the words

And never stops at all.

My thought is that more than hope may be necessary. Hope in AI is the cute security trick of the day. Instead of a happy bird, we may end up with a cooked goose.

Stephen E Arnold, May 3, 2024