You Do Not Search. You Insight.

April 12, 2017

I am delighted, thrilled. I read “Coveo, Microsoft, Sinequa Lead Insight Engine Market.” What a transformation is captured in what looks to me like a content marketing write up. Key word search morphs into “insight.” For folks who do not follow the history of enterprise search with the fanaticism of those involved in baseball statistics, the use of the word “insight” to describe locating a document is irrelevant. Do you search or insight?

For me, hunkered down in rural Kentucky with my monitors flickering in the intellectual darkness, the use of the word “insight” is a linguistic singularity. Maybe not on the scale of an earthquake in Italy or a banker leaping from his apartment to the Manhattan asphalt, but a historical moment nevertheless.

Let me recap some of my perceptions of the three companies mentioned in the headline to this tsunami of jargon in the Datanami story:

- Coveo is a company which developed a search and retrieval system focused on Windows. With some marketing magic, the company repositioned keyword search as customer support, then Big Data, and now this new thing, “insight.” For those who track vendor history, the roots of Coveo reach back to a consumer interface which was designed to make search easy. Remember Copernic? Yep, Coveo has been around a long while.

- Sinequa also was a search vendor. Like Exalead, Polyspot, and other French search vendors, the company wanted to manage data, provide federation, and enable workflows. After a change of president and some executive shuffling, Sinequa emerged as a Big Data outfit with a core competency in analytics. Quite a change. How similar is Sinequa to enterprise search? Pretty similar.

- Microsoft. I enjoyed the “saved by the bell” deal in 2008 which delivered the “work in progress” Fast Search & Transfer enterprise search system to Redmond. Fast Search was one of the first search vendors to combine fast-flying jargon with a bit of sales magic. Despite the financial meltdown and an investigation of the Fast Search financials, Microsoft ponied up $1.2 billion and reinvented SharePoint search. Well, not exactly reinvented, but SharePoint is a giant hairball of content management, collaboration, business “intelligence” and, of course, search. Here’s a user friendly chart to help you grasp SharePoint search.

Flash forward to this Datanami article and what do I learn? Here’s a paragraph I noted with a smiley face and an exclamation point:

Among the areas where natural language processing is making inroads is so-called “insight engines” that are projected to account for half of analytic queries by 2019. Indeed, enterprise search is being supplanted by voice and automated voice commands, according to Gartner Inc. The market analyst released its latest “Magic Quadrant” rankings in late March that include a trio of “market leaders” along with a growing list of challengers that includes established vendors moving into the nascent market along with a batch of dedicated startups.

There you go. A trio like ZZ Top with number one hits? Hardly. A consulting firm’s “magic” plucks these three companies from a chicken farm and gives each a blue ribbon. Even though we have chickens in our backyard, I cannot tell one from another. Subjectivity, not objectivity, applies to picking good chickens, and it seems to be what New York consulting firms do too.

Are the “scores” for the objective evaluations based on company revenue? No.

Return on investment? No.

Patents? No.

IRR? No. No. No.

Number of flagship customers such as Amazon, Apple, and Google? No.

The ranking is based on “vision.” Another key factor is the “ability to execute” its “strategy.” There you go. A vision is what I want to help me make my way through Kabul. I need a strategy beyond “stay alive.”

What would I do if I had to index content in an enterprise? My answer may surprise you. I would take out my checkbook and license these systems:

- Palantir Technologies or Centrifuge Systems

- Bitext’s Deep Linguistic Analysis platform

- Recorded Future

With these three systems I would have:

- The ability to locate an entity, concept, event, or document

- The capability to process content in more than 40 languages and to perform subject-verb-object parsing and entity extraction in near real time

- Point-and-click predictive analytics

- Point-and-click visualization for financial, business, and military warfighting actions

- Numerous programming hooks for integrating other nifty things that I need to achieve an objective such as IBM’s Cybertap capability.

Why is there a logical and factual disconnect between what I would do to deliver real world, high value outputs to my employees and what the New York-Datanami folks recommend?

Well, “disconnect” may not be the right word. Have some search vendors and third-party experts embraced the concept of “fake news” or the know-how explained in Propaganda, Jacques Ellul’s important book? Is the idea something along the lines of “we just say anything and people will believe our software will work this way”?

Many vendors stick reasonably close to the factual performance of their software and systems. Let me highlight three examples.

First, Darktrace, a company crafted by Dr. Michael Lynch, is a stickler for explaining what the smart software does. In a recent exchange with Darktrace, I learned that Darktrace’s senior staff bristle when a descriptive write up strays from the actual, verified technical functions of the software system. Anyone who has worked with Dr. Lynch and his senior managers knows that these people can be very persuasive. But when it comes to Darktrace, it is “facts R us”, thank you.

Second, Recorded Future takes a similar hard stand when explaining what the Recorded Future system can and cannot do. Anyone who suggests that Recorded Future predictive analytics can identify the winner of the Kentucky Derby a day before the race will be disabused of that notion by Recorded Future’s engineers. Accuracy is the name of the game at Recorded Future, but accuracy relates to the use of numerical recipes to identify likely events and assign a probability to some events. Even though the company deals with statistical probabilities, adding marketing spice to the predictive system’s capabilities is a no-go zone.

Third, Bitext, the company that offers a Deep Linguistic Analysis platform to improve the performance of a range of artificial intelligence functions, is anchored in facts. On a recent trip to Spain, we interviewed a number of the senior developers at this company and learned that Bitext software works. Furthermore, the professionals are enthusiastic about working for this linguistics-centric outfit because it avoids marketing hyperbole. “Our system works,” said one computational linguist. This person added, “We do magic with computational linguistics and deep linguistic analysis.” I like that: magic. Oh, Bitext does sales too, with the likes of Porsche, Volkswagen, and the world’s leading vendor of mobile systems and services, among others. And from Madrid, Spain, no less. And without marketing hyperbole.

Why, then, are companies built on keyword indexing with a sprinkle of semantics and basic math repositioning themselves by chasing each new spun-sugar-encrusted trend?

I have given a tiny bit of thought to this question.

In my monograph “The New Landscape of Search” I made the point that search had become devalued, a free download in open source repositories, and a utility like cat or dir. Most enterprise search systems have failed to deliver results painted in Technicolor in sales presentations and marketing collateral.

Today, if I want search and retrieval, I just use Lucene. In fact, Lucene is more than good enough; it is comparable to most proprietary enterprise search systems. If I need support, I can ring up Elastic or one of many vendors eager to gild the open source lily.
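Stripped to its core, the keyword search that has become a utility is an inverted index: a map from each term to the set of documents containing it, plus a Boolean query over those sets. The sketch below is a toy illustration under that assumption, not Lucene’s implementation; the function names (`tokenize`, `build_index`, `search`) and the sample documents are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms (a deliberately naive analyzer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Build an inverted index: term -> set of document ids containing that term."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Boolean AND query: return ids of documents containing every query term."""
    terms = tokenize(query)
    if not terms:
        return set()
    # Copy the first postings set so the index itself is never mutated.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical sample corpus; document 3 stands in for a video with no transcript.
docs = {
    1: "Quarterly sales report",
    2: "Sales webinar recording",
    3: "",
}
idx = build_index(docs)
print(search(idx, "sales"))         # {1, 2}
print(search(idx, "sales report"))  # {1}
print(search(idx, "insight"))       # set()
```

Document 3 contributes no terms, so no keyword query can ever return it. Production engines such as Lucene add analyzers, relevance scoring, and compressed postings lists on top of this basic idea.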

The extreme value and reliability of open source search and retrieval software has, in my opinion, gutted the market for proprietary search and retrieval software. The financial follies of Fast Search & Transfer reminded some investors of the costly failures of Convera, Delphes, Entopia, among others I documented on my Xenky.com site at this link.

Recently, most of the news about enterprise search I see on my coal-fired computer in Harrod’s Creek has been about repositioning, not innovation. What’s up?

The answer seems to be that the cherished myth was that enterprise search was the one true way to make sense of digital information. What many organizations learned was that good-enough search does the basic blocking and tackling of finding a document but precious little else without massive infusions of time, effort, and resources.

But do enterprise search systems–no matter how many sparkly buzzwords–work? Not too many, no matter what publicly traded consulting firms tell me to believe.

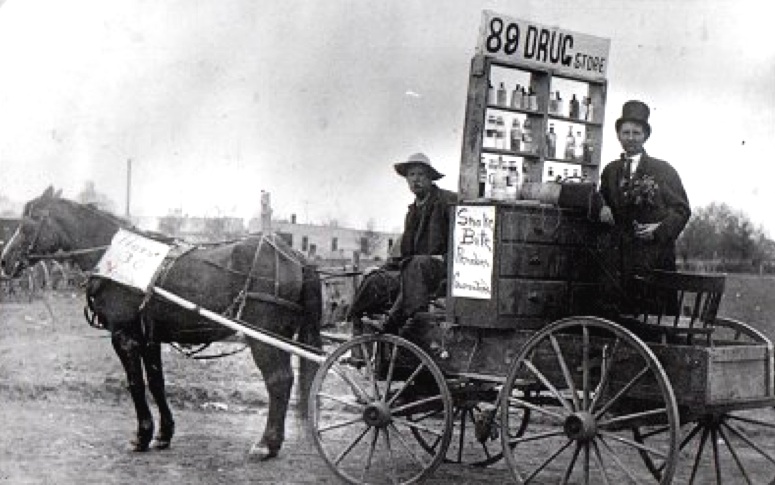

Snake oil? I don’t know. I just know my own experience, and after 45 years of trying to make digital information findable, I avoid fast talkers with covered wagons adorned with slogans.

What happens when an enterprise search system is fed videos, podcasts, telephone intercepts, flows of GPS data, and a couple of proprietary file formats?

Answer: Not much.

The search system has to be equipped with extra-cost connectors, assorted oddments, and shimware to deal with a recorded webinar and a companion deck of PowerPoint slides used by the corporate speaker.

What happens when the content stream includes email and documents in six, 12, or 24 different languages?

Answer: Mad scrambling until the proud licensee of an enterprise search system can locate a vendor able to support multiple language inputs. The real life needs of an enterprise are often different from what the proprietary enterprise search system can deal with.

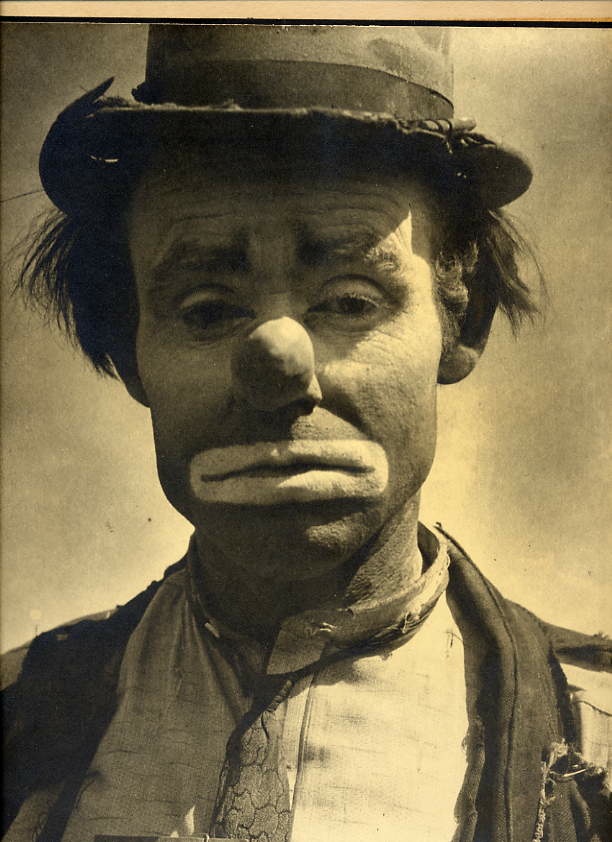

That’s why I find the repositioning of enterprise search technology a bit like a clown with a sad face. The clown is no longer funny. The unconvincing efforts to become something else clash with the sad face, the red nose, and worn shoes still popular in Harrod’s Creek, Kentucky.

When it comes to enterprise search, my litmus test is simple: If a system is keyword centric, it isn’t going to work for some of the real world applications I have encountered.

Oh, and don’t believe me, please.

Find a US special operations professional who relies on Palantir Gotham or IBM Analyst’s Notebook to determine a route through a hostile area. Ask whether a keyword search system or Palantir is more useful. Listen carefully to the answer.

No matter what keyword enthusiasts and quasi-slick New York consultants assert, enterprise search systems are not well suited for a great many real world applications. Heck, enterprise search often has trouble displaying documents which match the user’s query.

And why? Sluggish index updating, lousy indexing, wonky metadata, flawed set up, updates that kill a system, or interfaces that baffle users.

Personally, I love to browse results lists. I like old-fashioned, high-school-style research too. I like to open documents and Easter egg hunt my way to a document that answers my question. But I am in the minority. Most users expect their finding systems to work without the query-read-click-scan-read-scan-read-scan Sisyphus-emulating slog.

Ah, you are thinking I have offered no court admissible evidence to support my argument, right? Well, just license a proprietary enterprise search system and let me know how your career is progressing. Remember: when you look for a new job, you won’t search; you will insight.

Stephen E Arnold, April 12, 2017

Attivio Takes on SCOLA Repository

March 16, 2017

We noticed that Attivio is back to enterprise search, and now uses the fetching catchphrase, “data dexterity company.” Its News page announces, “Attivio Chosen as Enterprise Search Platform for World’s Largest Repository of Foreign Language Media.” We’ve been keeping an eye on Attivio as it grows. With this press release, Attivio touts a large, recent feather in its cap: providing enterprise search services to SCOLA, a non-profit dedicated to helping different peoples around the world learn about each other. This tool enables SCOLA’s subscribers to find any content in any language, we’re told. The organization regards today’s information technology as crucial to its efforts. The write-up explains:

SCOLA provides a wide range of online language learning services, including international TV programming, videos, radio, and newspapers in over 200 native languages, via a secure browser-based application. At 85 terabytes, it houses the largest repository of foreign language media in the world. With its users asking for an easier way to find and categorize this information, SCOLA chose Attivio Enterprise Search to act as the primary access point for information through the web portal. This enables users, including teachers and consumers, to enter a single keyword and find information across all formats, languages and geographical regions in a matter of seconds. After looking at several options, SCOLA chose Attivio Enterprise Search because of its multi-language support and ease of customization. ‘When you have 84,000 videos in 200 languages, trying to find the right content for a themed lesson is overwhelming,’ said Maggie Artus, project manager at SCOLA. ‘With the Attivio search function, the user only sees instant results. The behind-the-scenes processing complexity is completely hidden.’

Attivio was founded in 2007, and is headquartered in Newton, Massachusetts. The company’s client roster includes prominent organizations like UBS, Cisco, Citi, and DARPA. It is also hiring for several positions as of this writing.

Cynthia Murrell, March 16, 2017

Comprehensive, Intelligent Enterprise Search Is Already Here

February 28, 2017

The article on Sys-Con Media titled “Delivering Comprehensive Intelligent Search” examines the accomplishments of World Wide Technology (WWT) in building a better search engine for the business organization. The Enterprise Search Project Manager and Manager of Enterprise Content at WWT discovered that the average employee will waste over a full week each year looking for the information they need to do their work. The article details how they approached a solution for enterprise search:

We used the Gartner Magic Quadrants and started talks with all of the Magic Quadrant leaders. Then, through a down-selection process, we eventually landed on HPE… It wound up being that we went with the HPE IDOL tool, which has been one of the leaders in enterprise search, as well as big data analytics, for well over a decade now, because it has a very extensible platform, something that you can really scale out and customize and build on top of.

Trying to replicate what Google delivers in an enterprise is a complicated task because of how siloed data is in the typical organization. The new search solution does a far better job of presenting employees with all of the relevant information, which prevents major time waste through comprehensive and intelligent search.

Chelsea Kerwin, February 28, 2017

The Current State of Enterprise Search, by the Numbers

February 17, 2017

The article and delightful infographic on BA Insight titled “Stats Show Enterprise Search is Still a Challenge” build an interesting picture of the present challenges and opportunities surrounding enterprise search, or at least allude to them with the numbers offered. The article states:

As referenced by AIIM in an Industry Watch whitepaper on search and discovery, three out of four people agree that information is easier to find outside of their organizations than within. That is startling! With a more effective enterprise search implementation, these users feel that better decision-making and faster customer service are some of the top benefits that could be immediately realized.

What follows is a collection of random statistics about enterprise search. We would like to highlight one stat in particular: 58% of those investing in enterprise search get no payback after one year. In spite of the clear need for improvements, it is difficult to argue for a technology that is so long-term in its ROI, and so shaky where it is in place. However, there is a massive impact on efficiency when employees waste time looking for the information they need to do their jobs. In sum: you can’t live with it, and you can’t live (productively) without it.

Chelsea Kerwin, February 17, 2017

Investment Group Acquires Lexmark

February 15, 2017

We read with some trepidation the Kansas City Business Journal’s article, “Former Perceptive’s Parent Gets Acquired for $3.6B in Cash.” The parent company referred to here is Lexmark, which bought up one of our favorite search systems, ISYS Search, in 2012 and placed it under its Perceptive subsidiary, based in Lenexa, Kansas. We do hope this valuable tool is not lost in the shuffle.

Reporter Dora Grote specifies:

A few months after announcing that it was exploring ‘strategic alternatives,’ Lexmark International Inc. has agreed to be acquired by a consortium of investors led by Apex Technology Co. Ltd. and PAG Asia Capital for $3.6 billion cash, or $40.50 a share. Legend Capital Management Co. Ltd. is also a member of the consortium.

Lexmark Enterprise Software in Lenexa, formerly known as Perceptive Software, is expected to ‘continue unaffected and benefit strategically and financially from the transaction’ the company wrote in a release. The Lenexa operation — which makes enterprise content management software that helps digitize paper records — dropped the Perceptive Software name for the parent’s brand in 2014. Lexmark, which acquired Perceptive for $280 million in cash in 2010, is a $3.7 billion global technology company.

If the Lexmark Enterprise Software (formerly known as Perceptive) division will be unaffected, it seems they will be the lucky ones. Grote notes that Lexmark has announced that more than a thousand jobs are to be cut amid restructuring. She also observes that the company’s buildings in Lenexa have considerable space up for rent. Lexmark CEO Paul Rooke is expected to keep his job, and headquarters should remain in Lexington, Kentucky.

Cynthia Murrell, February 15, 2017

IDOL Is Back and with NLP

December 11, 2016

I must admit that I am confused. Hewlett Packard bought Autonomy, wrote off billions, and seemed to sell the Autonomy software (IDOL and DRE) to an outfit in England. Oh, HPE, the part of the Sillycon Valley icon which sells “enterprise” products and services, owns part of the UK outfit which owns Autonomy. Got that? I am not sure I have the intricacies of this stunning series of management moves straight in my addled goose brain.

Close enough for horseshoes, however.

I read what looks like a content marketing flufferoo called “HPE Boosts IDOL Data Analytics Engine with Natural Language Processing Tools.”

I thought that IDOL had NLP functions, but obviously I am wildly off base. To get my view of the Autonomy IDOL system, check out the free analysis at this link. (Nota bene: I have done a tiny bit of work for Autonomy and have had to wrestle with the system as a contractor on US government projects. I know that this is no substitute for the whizzy analysis included in the aforementioned write up. But, hey, it is what it is.)

The write up states in reasonably clear marketing lingo:

HPE has added natural language processing capabilities to its HPE IDOL data analytics engine, which could improve how humans interact with computers and data, the company announced Tuesday. By using machine learning technology, HPE IDOL will be able to improve the context around data insights, the company said.

A minor point: IDOL is based on machine learning processes. Those processes are the guts of the black box: the Bayesian, Laplacian, and Markovian methods inside the Dynamic Reasoning Engine, which underpins the Intelligent Data Operating Layer of the Autonomy system.

Here’s the killer statement:

… the company [I presume this outfit is Hewlett Packard Enterprise and not MicroFocus] has introduced HPE Natural Language Question Answering to its IDOL platform to help solve the problem. According to the release, the technology seeks to determine the original intent of the question and then “provides an answer or initiates an action drawing from an organization’s own structured and unstructured data assets in addition to available public data sources to provide actionable, trusted answers and business critical responses.”

I love the actionable, trusted bit. Autonomy’s core approach is based on probabilities. Trust is okay, but it is helpful to understand that probabilities are — well — probable. The notion of “trusted answers” is a quaint one to those who drink deep from the statistical springs of data.

I highlighted this quotation, presumably from the wizards at HPE:

“IDOL Natural Language Question Answering is the industry’s first comprehensive approach to delivering enterprise class answers,” Sean Blanchflower, vice president of engineering for big data platforms at HPE, said in the release. “Designed to meet the demanding needs of data-driven enterprises, this new, language-independent capability can enhance applications with machine learning powered natural language exchange.”

My hunch is that HPE or MicroFocus or an elf has wrapped a query system around the IDOL technology. The write up does not provide too many juicy details about the plumbing. I did note these features, however:

- An IDOL Answer Bank. Ah, ha. Ask Jeeves style canned questions. There is no better way to extract information than the use of carefully crafted queries. None of the variable, real life stuff that system users throw at search and retrieval systems. My experience is that maintaining canned queries can become a bit tedious and also expensive.

- IDOL Fact Bank. Ah, ha. A query that processes content to present “factoids.” Much better than a laundry list of results. What happens when the source data return factoids which are [a] not current, [b] not accurate, or [c] without context? Hey, don’t worry about the details. Take your facts and decide, folks.

- IDOL Passage Extract. Ah, ha. A snippet or key words in context! Not exactly new, but a time proven way to provide some context to the factoid. Now wasn’t that an IDOL function in 2001? Guess not.

- IDOL Answer Server. Ah, ha. A Google style wrapper; that is, leave the plumbing alone and provide a modernized paint job.
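“Key words in context” is simple enough to show in a few lines. The sketch below assumes plain text and a single query term, and returns a fixed-width window of characters on either side of each whole-word match. It is a hypothetical illustration (the `kwic` function and its `width` parameter are invented for this example), not how IDOL Passage Extract actually works.

```python
import re


def kwic(text, keyword, width=30):
    """Return key-word-in-context snippets: up to `width` characters on each
    side of every whole-word, case-insensitive match of `keyword`."""
    pattern = r"\b" + re.escape(keyword) + r"\b"
    snippets = []
    for match in re.finditer(pattern, text, flags=re.IGNORECASE):
        start = max(0, match.start() - width)
        end = min(len(text), match.end() + width)
        left = text[start:match.start()]
        right = text[match.end():end]
        # Bracket the matched term so the eye lands on it first.
        snippets.append(f"...{left}[{match.group(0)}]{right}...")
    return snippets


text = "The index was stale. Users blamed the index, not the metadata."
for snippet in kwic(text, "index", width=10):
    print(snippet)
# ...The [index] was stale...
# ...lamed the [index], not the ...
```

The word-boundary anchors keep “indexing” from matching “index.” A real passage extractor would more likely cut on sentence boundaries than on a fixed character count.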

If you compare these breakthroughs with the diagrams in the HP IDOL write up, you will note that these capabilities already appear in the IDOL/DRE system diagram and feature list.

What’s important in this content marketing piece? The write up provides a takeaway section to help out those who are unaware of the history of IDOL, which dates from the late 1990s. Here you go. Revel in new features, enjoy NLP, and recognize that HPE is competing with IBM Watson.

There you go. Factual content in action. Isn’t modern technology analysis satisfying? IBM Watson, your play.

Stephen E Arnold, December 11, 2016

MC+A Is Again Independent: Search, Discovery, and Engineering Services

December 7, 2016

Beyond Search learned that MC+A has added a turbo-charger to its impressive search, content processing, and content management credentials. The company, based in Chicago, earned a gold star from Google for MC+A’s support and integration services for the now-discontinued Google Search Appliance. After working with the Yippy implementation of Watson Explorer, MC+A retains its search and retrieval capabilities but has expanded its scope. Michael Cizmar, the company’s president, told Beyond Search, “Search is incredibly important, but customers require more multi-faceted solutions.” MC+A provides the engineering and technical capabilities to cope with Big Data, disparate content, cloud and mixed-environment platforms, and the type of information processing needed to generate actionable reports. [For more information about Cizmar’s views about search and retrieval, see “An Interview with Michael Cizmar.”]

Cizmar added:

We solve organizational problems rooted in the lack of insight and accessibility to data that promotes operational inefficiency. Think of a support rep who has to look through five systems to find an answer for a customer on the phone. We are changing the way these users get to answers by providing them better insights from existing data securely. At a higher level we provide strategy support for executives looking for guidance on organizational change.

Alphabet Google’s decision to withdraw the Google Search Appliance has left more than 60,000 licensees looking for an alternative. Since the début of the GSA in 2002, Google trimmed the product line and did not move the search system to the cloud. Cizmar’s view of the GSA’s 12-year journey reveals that:

The Google Search Appliance was definitely not a failure. The idea that organizations wanted an easy-to-use, reliable Google-style search system was ahead of its time. Current GSA customers need some guidance on planning and recommendations on available options. Our point of view is that it’s not the time to simply swap out one piece of metal for another even if vendors claim “OEM” equivalency. The options available for data processing and search today all provide tremendous capabilities, including cognitive solutions which provide amazing capabilities to assist users beyond the keyword search use case.

Cizmar sees an opportunity to provide GSA customers with guidance on planning and recommendations on available options. MC+A understands the options available for data processing and information access today. The company is deeply involved in solutions which tap “smart software” to deliver actionable information.

Cizmar said:

Keyword search is a commodity at this point, and we are helping our customers put search where the user is without breaking an established workflow. Answers, not laundry lists of documents to read, are paramount today. Customers want to solve specific problems; for example, reducing average call time in customer support using smart software or adaptive, self-service solutions. This is where MC+A’s capabilities deliver value.

MC+A is cloud savvy. The company realized that cloud and hybrid or cloud-on premises solutions were ways to reduce costs and improve system payoff. Cizmar was one of the technologists recognized by Google for innovation in cloud applications of the GSA. MC+A builds on that engineering expertise. Today, MC+A supports Google, Amazon, and other cloud infrastructures.

Cizmar revealed:

Amazon Elastic Cloud Search is probably doing as much business as Google did with the GSA but in a much different way. Many of these cloud-based offerings are generally solving the problem with the deployment complexities that go into standing up Elasticsearch, the open source version of Elastic’s information access system.

MC+A does not offer a one-size-fits-all solution. Cizmar said:

The problem still remains of what should go into the cloud, how to get a solution deployed, and how to ensure usability of the cloud-centric system. The cloud offers tremendous capabilities in running and scaling a search cluster. However, with the API consumption model that we have to operate in, getting your data out of other systems into your search clusters remains a challenge. MC+A does not make security an afterthought. Access controls and system integrity have high priority in our solutions.

MC+A takes a business approach to what many engineering firms view as a technical problem. The company’s engineers examine the business use case. Only then does MC+A determine whether the cloud is an option and, if so, which products’ or projects’ capabilities meet the general requirements. After that process, MC+A implements its carefully crafted, standard deployment process.

Cizmar noted:

If you are a customer with all of your data on premises or have a unique edge case, it may not make sense to use a cloud-based system. The search system needs to be near to the content most of the time.

MC+A offers its white-labeled search “Practice in a Box” to former Google partners and other integrators. High-profile specialist vendors like Onix in Ohio are able to resell MC+A technology backed by the MC+A engineering team.

In 2017, MC+A will roll out a search solution which is, at this time, shrouded in secrecy. This new offering will go “beyond the GSA” and offer expanded information access functionality. To support this new product, MC+A will announce a specialized search practice.

He said:

This international practice will offer depth and breadth in selling and implementing solutions around cognitive search, assist, and analytics with products other than Google throughout the Americas. I see this as beneficial to other Google and non-Google resellers because it allows them to utilize our award-winning team, our content filters, and a wealth of social proofs on a just-in-time basis.

For 2017, MC+A offers a range of products and services. Based on the limited information provided by the secrecy-conscious Michael Cizmar, Beyond Search believes that the company will offer implementation and support services for Lucene and Solr, IBM Watson, and Microsoft SharePoint. The SharePoint support will embrace some vendors supplying SharePoint-centric solutions, such as Coveo. Plus, MC+A will continue to offer software to acquire content and perform extract-transform-load functions on premises, in the cloud, or in hybrid configurations.

MC+A offers a business-technology approach to information access.

For more information about MC+A, contact sales@mcplusa.com or 312-585-6396.

Stephen E Arnold, December 7, 2016

Search Competition Is Fiercer Than You Expect

December 5, 2016

In the United States, Google dominates the Internet search market. Bing has gained some traction, but the results are still muddy. In Russia, Yandex chases Google around in circles, but what about the enterprise search market? The enterprise search market has more competition than one would think. We recently received an email from SearchBlox, a cognitive platform developed to help organizations embed information in applications using artificial intelligence and deep learning models. SearchBlox is also a player in the enterprise software market and offers text analytics and sentiment analysis tools.

Their email explained, “3 Reasons To Choose SearchBlox Cognitive Platform” and here they are:

1. EPISTEMOLOGY-BASED. Go beyond just question and answers. SearchBlox uses artificial intelligence (AI) and deep learning models to learn and distill knowledge that is unique to your data. These models encapsulate knowledge far more accurately than any rules based model can create.

2. SMART OPERATION. Building a model is half the challenge. Deploying a model to process big data can be even more challenging. SearchBlox is built on open source technologies like Elasticsearch and Apache Storm and is designed to use its custom models for processing high volumes of data.

3. SIMPLIFIED INTEGRATION. SearchBlox is bundled with over 75 data connectors supporting over 40 file formats. This dramatically reduces the time required to get your data into SearchBlox. The REST API and the security capabilities allow external applications to easily embed the cognitive processing.

To us, this sounds like what enterprise search has been offering since before big data and artificial intelligence became buzzwords. Not to mention, SearchBlox’s competitors have said the same thing. What makes SearchBlox different? The company claims to be less expensive, and it has won several accolades. SearchBlox is built on open source technology, which allows it to lower the price. Elasticsearch is the most popular open source search software, and the funny thing is that SearchBlox is like a repackaged version of Elasticsearch. Mind you, you are paying for a program that is already developed, but SearchBlox is trying to compete with other outfits like Yippy.

Whitney Grace, December 5, 2016

BA Insight and Its Ideas for Enterprise Search Success

October 25, 2016

I read “Success Factors for Enterprise Search.” The write up spells out a checklist to make certain that an enterprise search system delivers what the users want—on point answers to their business information needs. The reason a checklist is necessary after more than 50 years of enterprise search adventures is a disconnect between what software can deliver and what the licensee and the users expect. Imagine figuring out how to get across the Grand Canyon only to encounter the Iguazu Falls.

The preamble states:

I’ll start with what absolutely does not work. The “dump it in the index and hope for the best” approach that I’ve seen some companies try, which just makes the problem worse. Increasing the size of the haystack won’t help you find a needle.

I think I agree, but the challenge is multiple piles of data. Some data are in haystacks; some are in oddball piles from the AS/400 that the old guy in accounting uses for an inventory report.

Now the checklist items:

- Metadata. To me, that’s indexing. Lousy indexing produces lousy search results in many cases. But “good indexing,” like the best pie at the state fair, is a matter of opinion. When the licensee, users, and the search vendor talk about indexing, some parties in the conversation don’t know indexing from oatmeal. The cost of indexing can be high. Improving the indexing requires more money. The magic of metadata often leads back to a discussion of why the system delivers off point results. Then there is talk about improving the indexing and its cost. The cycle can be more repetitive than a Kenmore 28132’s.

- Provide the content the user requires. Yep, that’s easy to say. Yep, if the content sits on a distributed network, some of it disappears or never gets into the search system. Putting the content into a repository creates another opportunity for spending money. Enterprise search which “federates” is easy to say, but the users quickly discover what is missing from the index and what is stale.

- Deliver off point results. The results create work by not answering the user’s question. From the days of STAIRS III to the latest whiz kid solution from Sillycon Valley, users find that search and retrieval systems provide an opportunity to go back to traditional research tools such as asking the person in the next cube, calling a self-appointed expert, guessing, digging through paper documents, or hiring an information or intelligence professional to gather the needed information.

The checklist concludes with a good question, “Why is this happening?” The answer does not reside in the checklist. The answer does not reside in my Enterprise Search Report, The Landscape of Search, or any of the journal and news articles I have written in the last 35 years.

The answer is that vendors directly or indirectly reassure customers that their software will provide the information a user needs. That’s an easy hook to plant in the customer who behaves like a tuna. The customer has a search system, or experience with a search system, that does not work. Pitching a better, faster, cheaper solution can close the deal.

The reality is that even the most sophisticated search and content processing systems end up in trouble. Search remains a very difficult problem. Today’s solutions do a few things better than STAIRS III did. But in the end, search software crashes and burns when it has to:

- Work within a budget

- Deal with structured and unstructured data

- Meet user expectations for timeliness, precision, recall, and accuracy

- Be usable without specialized training

- Deliver zippy response time

- Run without crashes or downtime due to maintenance

- Output usable, actionable reports without having to involve a programmer

- Provide an answer to a question.

Smart software can solve some of these problems for specific types of queries. Enterprise search will benefit incrementally. For now, baloney about enterprise search continues to create churn. The incumbent loses the contract, and a new search vendor inks a deal. Months later, that vendor loses the contract, and the next round of vendors competes for it. This cycle has eroded the credibility of search and content processing vendors.

A checklist with three items won’t do much to change the credibility gap between what vendors say, what licensees hope will occur, and what users expect. The Grand Canyon is a big hole to fill. The Iguazu Falls can be tough to cross. Same with enterprise search.

Stephen E Arnold, October 25, 2016

Attivio: Search and Almost Everything Else

October 24, 2016

I spent a few minutes catching up with the news on the Attivio blog. As I worked through the write ups published over the past five weeks, I was struck by the diversity of Attivio’s marketing messages. Here are the ones which I noted:

- Attivio is a cognitive computing company, not a search or database company

- Attivio has an interest in governance and risk / compliance

- Attivio is involved in Big Data management

- Attivio is active in anti-fraud solutions

- Attivio embraces NoSQL

- Attivio knows about modernizing an organization’s data architecture

- Attivio is a business intelligence solution.

My reaction to these capabilities is twofold:

First, for a company with roots in Fast Search & Transfer-style software, Attivio has added a number of applications to basic content processing and information access. Attivio embodies the vision Fast Search articulated before the company ran into some “challenges” and sold itself to Microsoft in 2008. Fast Search, as I understood the vision, was a platform upon which information applications could be built. Attivio appears to be heading in that direction.

The second reaction is that Attivio is churning out capabilities which embody buzzwords, jargon, and trends. Like a fisherman in a bass boat, Attivio uses different lures in order to snag a decent-sized bass. I find it difficult to accept the assertion that a company rooted in search can deliver across the array of technical niches the blog posts reference.

The major takeaway for me was that Attivio has named a new Chief Revenue Officer whose job is to generate revenue from the company’s “data catalog” business. I learned from “Attivio Names New Chief Revenue Officer”:

Connon [the insider who took over the revenue job] sees his new role as a reflection of the growing demand for technology that can break down data silos and help successful companies answer, not just the question of “what” the data is reporting, but identify correlation and patterns to answer critical “why” questions. Connon is passionate when he talks about the value of Attivio’s newest technology solution—the Semantic Data Catalog–and its ability to unify a wide array of data for a diverse customer base. “The Semantic Data Catalog is not just for financial service industries. It’s truly a horizontal technology solution that can benefit companies in any industry with data—in other words, with any company, in any industry,” explains Connon. “Our established Cognitive Search and Insight technology provides the foundation for our Semantic Data Catalog to provide companies with a self-service, permission-based ability to locate, sort, and analyze key information across an unlimited number of data applications,” adds Connon.

For me, Attivio’s “momentum” in marketing has to be converted to sustainable revenue. My assumption is that almost every professional at a software / services company sells and generates revenue. When a company lags in revenue, will one person be able to generate revenue?

I don’t have an answer. Worth monitoring to learn if the Chief Revenue Officer can deliver the money.

Stephen E Arnold, October 24, 2016