What Do DeepSeek, a Genius Girl, and Temu Have in Common? Quite a Lot

January 28, 2025

A write up from a still-living dinobaby.

The Techmeme for January 28, 2025, was mostly Deepseek territory. The China-linked AI model has roiled the murky waters of the US smart software fishing hole. A big, juicy AI creature has been pulled from the lake, and it is drawing a crowd. Here’s a small portion of the datasphere thrashing on January 28, 2025 at 7:00 am US Eastern time:

I have worked through a number of articles about this open source software. I noted its back story about a venture firm’s skunk works tackling AI. Armed with relatively primitive tools due to the US restriction of certain computer components, the small team figured out how to deliver results comparable to the benchmarks published about US smart software systems.

Genius girl uses basic and cheap tools to repair an old generator. Americans buy a new generator from Harbor Freight. Genius girl repairs old generator proving the benefits of a better way or a shining path. Image from the YouTube outfit which does work the American way.

The story is torn from the same playbook which produces YouTube “real life” stories like “The genius girl helps the boss to repair the diesel generator, full of power!” You can view the one-hour propaganda film at this link. Here’s a short synopsis, and I want you to note the theme of the presentation:

- Young-appearing female works outside

- She uses primitive tools

- She takes apart a complex machine

- She repairs it

- The machine is better than a new machine.

The videos are interesting. The message has not been deconstructed. My interpretation is:

- Hard working female tackles tough problem

- Using ingenuity and hard work she cracks the code

- The machine works

- Why buy a new one? Use what you have and overcome obstacles.

This is not the “Go west, young man” or private equity approach to cracking an important problem. It is political and cultural with a dash of Hoisin technical sauce. The video presents a message like that of “plum blossom boxing.” It looks interesting but packs a wallop.

Here’s a point that has not been getting much attention; specifically, the AI probe is designed to direct a flow of energy at the most delicate and vulnerable part of the US artificial intelligence “next big thing” pumped up technology “bro.”

What is that? The answer is cost. The method has been refined by Shein and Temu by poking at Amazon. Here’s how the “genius girl” uses ingenuity.

- Technical papers are published

- Open source software released

- Basic information about using what’s available released

- Cost information is released.

The result is that a Chinese AI app surges to the top of downloads on US mobile stores. This is a first. Not even the TikTok service achieved this standing so quickly. The US speculators dump AI stocks. Techmeme becomes the news service for Chinese innovation.

I see this as an effective tactic for demonstrating the value of the “genius girl” approach to solving problems. And where did Chinese government leadership watch the AI balloon lose some internal pressure? How about Colombia, a three-hour plane flight from the capital of Central and South America? (That’s Miami in the event my reference was too oblique.)

In business, cheaper and good enough are very potent advantages. The Deepseek AI play is indeed about a new twist to today’s best method of having software perform in a way that most call “smart.” But the Deepseek play is another “genius girl” play from the Middle Kingdom.

How can the US replicate the “genius girl” or the small venture firm which came up with a better idea? That’s going to be tough. While the genius girl was repairing the generator, the US AI sector was seeking more money to build giant data centers to hold thousands of exotic computing tools. Instead of repairing, the US smart software aficionados were planning on modular nuclear reactors to make the next-generation of smart software like the tail fins on a 1959 pink Cadillac.

Deepseek and the “genius girl” are not about technology. Deepseek is a manifestation of the Shein and Temu method: Fast cycle, cheap and good enough. The result is an arm flapping response from the American way of AI. Oh, does the genius girl phone home? Does she censor what she says and does?

Stephen E Arnold, January 28, 2025

Entity Extraction: Not As Simple As Some Vendors Say

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Most of the systems incorporating entity extraction have been trained to recognize the names of simple entities and mostly based on the use of capitalization. An “entity” can be a person’s name, the name of an organization, or a location like Niagara Falls, near Buffalo, New York. The river “Niagara” when bound to “Falls” means a geologic feature. The “Buffalo” is not a Bubalina; it is a delightful city with even more pleasing weather.

The same entity extraction process has to work for specialized software used by law enforcement, intelligence agencies, and legal professionals. Compared to entity extraction for consumer-facing applications like Google’s Web search or Apple Maps, the specialized software vendors have to contend with:

- Gang slang in English and other languages; for example, “bumble bee.” This is not an insect; it is a nickname for the Latin Kings.

- Organizations operating in Lao PDR and converted to English words like Zhao Wei’s Kings Romans Casino. Mr. Wei has been allegedly involved in gambling activities in a poorly-regulated region in the Golden Triangle.

- Individuals who use aliases like maestrolive, james44123, or ahmed2004. There are either “real” people behind the handles or they are sock puppets (fake identities).

Why do these variations create a challenge? In order to locate a business, the content processing system has to identify the entity the user seeks. For an investigator, chopping through a thicket of language and idiosyncratic personas is the difference between making progress and hitting a dead end. Automated entity extraction systems can work using smart software, a carefully crafted and constantly updated controlled vocabulary list, or a hybrid system.
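To make the hybrid idea concrete, here is a minimal sketch in Python. It is not how any vendor's system works. The vocabulary entries, the handle pattern, and the function name `extract_entities` are assumptions made up for illustration, using the examples mentioned above. The sketch combines a controlled vocabulary lookup, a pattern for alias-style handles, and the naive capitalization heuristic.

```python
import re

# Hypothetical controlled vocabulary: lowercase surface form -> (canonical entity, type).
# A production list would be curated and updated constantly by analysts.
CONTROLLED_VOCABULARY = {
    "bumble bee": ("Latin Kings", "ORGANIZATION"),
    "kings romans casino": ("Kings Romans Casino", "ORGANIZATION"),
    "niagara falls": ("Niagara Falls", "LOCATION"),
}

# Assumed handle shape: three or more lowercase letters followed by two or more digits.
# Handles without digits (for example, maestrolive) would need a vocabulary entry.
HANDLE_PATTERN = re.compile(r"\b[a-z]{3,}\d{2,}\b")

# Naive capitalization heuristic: a run of two or more capitalized words.
CAPITALIZED_RUN = re.compile(r"\b(?:[A-Z][a-z]+\s+)+[A-Z][a-z]+\b")


def extract_entities(text):
    """Return (entity, type, method) tuples found in text."""
    found = []
    lowered = text.lower()

    # Step 1: the controlled vocabulary catches slang and transliterated names.
    for phrase, (canonical, kind) in CONTROLLED_VOCABULARY.items():
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
            found.append((canonical, kind, "vocabulary"))

    # Step 2: the pattern catches alias-style handles.
    for match in HANDLE_PATTERN.finditer(text):
        found.append((match.group(), "ALIAS", "pattern"))

    # Step 3: capitalization flags candidates the vocabulary does not know.
    known = {entity.lower() for entity, _, _ in found}
    for match in CAPITALIZED_RUN.finditer(text):
        candidate = match.group()
        if candidate.lower() not in known:
            found.append((candidate, "UNKNOWN", "capitalization"))

    return found
```

Calling `extract_entities("The bumble bee crew met james44123 near Niagara Falls.")` maps the slang to the Latin Kings, flags the handle as an alias, and resolves the location through the vocabulary. A capitalization-only system would miss the first two entirely, which is the gap the vocabulary and pattern steps are meant to close.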

Let’s take an example which confronts a person looking for information about the Ku Group. This is a financial services firm responsible for the Kucoin. The Ku Group is interesting because it has been found guilty in the US for certain financial activities in the State of New York and by the US Securities & Exchange Commission.

Why Google Dorks Exist and Why Most Users Do Not Know Why They Are Needed

December 4, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Many people in my lectures are not familiar with the concept of “dorks”. No, not the human variety. I am referencing the concept of a “Google dork.” If you do a quick search using Yandex.com, you will get pointers to different “Google dorks.” Click on one of the links and you will find information you can use to retrieve more precise and relevant information from the Google ad-supported Web search system.

Here’s what QDORKS.com looks like:

The idea is that one plugs in search terms and uses the pull down boxes to enter specific commands to point the ad-centric system at something more closely resembling a relevant result. Other interfaces are available; for example, the “1000 Best Google Dorks List.” You get a laundry list of tips, commands, and ideas for wrestling Googzilla to the ground, twisting its tail, and (hopefully) making it yield relevant information. Hopefully. Good work.
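For readers who have never seen a dork, here is a rough sketch in Python of what a form with pull down boxes might assemble behind the scenes. I have no knowledge of how QDORKS.com is actually implemented. The function name `build_dork` and its parameters are hypothetical. The operators themselves (`site:`, `filetype:`, `intitle:`, and the leading minus sign for exclusion) are widely documented Google search operators.

```python
def build_dork(terms, site=None, filetype=None, intitle=None, exclude=()):
    """Assemble a Google query string from search terms and optional operators."""
    parts = []

    # Multi-word terms are quoted so they are treated as exact phrases.
    for term in terms:
        parts.append(f'"{term}"' if " " in term else term)

    # Restrict results to one site or domain.
    if site:
        parts.append(f"site:{site}")

    # Restrict results to one file type, such as pdf or xls.
    if filetype:
        parts.append(f"filetype:{filetype}")

    # Require a word or phrase in the page title.
    if intitle:
        parts.append(f'intitle:"{intitle}"' if " " in intitle else f"intitle:{intitle}")

    # Exclude words by prefixing each with a minus sign.
    for word in exclude:
        parts.append(f"-{word}")

    return " ".join(parts)


if __name__ == "__main__":
    # example.gov is a placeholder domain, not a real target.
    print(build_dork(["annual report", "casino"], site="example.gov", filetype="pdf"))
```

The output is just a string to paste into the search box. The point of a dork interface is that the user does not have to remember the operator syntax.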

Most people are lousy at pinning the tail on the relevance donkey. Therefore, let someone who knows define relevance for the happy people. Thanks, MSFT Copilot. Nice animal with map pins.

Why are Google Dorks or similar guides to Google search necessary? Here are three reasons:

- Precision reduces the opportunities for displaying allegedly relevant advertising. Semantic relaxation allows the Google to suggest that it is using Oingo type methods to find mathematically determined relationships. The idea is that razzle dazzle makes ad blasting something like an ugly baby wrapped in translucent fabric on a foggy day look really great.

- When Larry Page argued with me at a search engine meeting about truncation, he displayed a preconceived notion about how search should work for those not at Google or attending a specialist conference about search. Rational? To him, yep. Logical? To his framing of the search problem, the stance makes perfect sense if one discards the notion of tense, plurals, inflections, and stupid markers like “im” as in “impractical” and “non” as in “nonsense.” Hey, Larry had the answer. Live with it.

- The goal at the Google is to make search as intellectually easy for the “user” as possible. The idea was to suggest what the user intended. Also, Google had the old idea that a person’s past behavior can predict that person’s behavior now. Well, predict in the sense that “good enough” will do the job for the vast majority of search-blind users who look for the short cut or the most convenient way to get information.

Why? Control, being clever, and then selling the dream of clicks for advertisers. Over the years, Google leveraged its information framing power to a position of control. I want to point out something that most people, including many Googlers, cannot perceive. When it is pointed out, those individuals refuse to believe that Google does [a] NOT index the full universe of digital data, [b] NOT want to fool around with users who prefer Boolean algebra or content curation to identify the best or most useful content, and [c] NOT fiddle around with training people to become effective searchers of online information. Obfuscation, verbal legerdemain, and the “do no evil” craziness make the railroad run the way Cornelius Vanderbilt-types implemented.

I read this morning (December 4, 2023) the Google blog post called “New Ways to Find Just What You Need on Search.” The main point of the write up in my opinion is:

Search will never be a solved problem; it continues to evolve and improve alongside our world and the web.

I agree, but it would be great if the known search and retrieval functions were available to users. Instead, we have a weird Google Mom approach. From the write up:

To help you more easily keep up with searches or topics you come back to a lot, or want to learn more about, we’re introducing the ability to follow exactly what you’re interested in.

Okay, user tracking, stored queries, and alerts. How does the Google know what you want? The answer is that users log in, use Google services, and enter queries which are automatically converted to search. You will have answers to questions you really care about.

There are other search functions available in the most recent version of Google’s attempts to deal with an unsolved problem:

As with all information on Search, our systems will look to show the most helpful, relevant and reliable information possible when you follow a topic.

Yep, Google is a helicopter parent. Mom will know what’s best, select it, and present it. Don’t like it? Mom will be recalcitrant, like shaping search results to meet what the probabilistic system says, “Take your medicine, you brat.” Who said, “Mother Google is a nice mom”? Definitely not me.

And Google will make search more social. Shades of Dr. Alon Halevy and the heirs of Orkut. The Google wants to bring people together. Social signals make sense to Google. Yep, content without Google ads must be conquered. Let’s hope the Google incentive plans encourage the behavior, or those valiant programmers will be bystanders to other Googlers’ promotions and accompanying money deliveries.

Net net: Finding relevant, on point, accurate information is more difficult today than at any other point in my 50+ year work career. How does the cloud of unknowing dissipate? I have no idea. I think it has moved in on tiny Googzilla feet and sits looking over the harbor, ready to pounce on any creature that challenges the status quo.

PS. Corny Vanderbilt was an amateur compared to the Google. He did trains; Google does information.

Stephen E Arnold, December 4, 2023

Google: Running the Same Old Game Plan

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Google has been running the same old game plan since the early 2000s. But some experts are unaware of its simplicity. In the period from 2002 to 2004, I did a number of reports for my commercial clients about Google. In 2004, I recycled some of the research and analysis into The Google Legacy. The thesis of the monograph, published in England by the now defunct Infonortics Ltd., explained that the infrastructure for search was enhanced to provide an alternative to commercial software for personal, business, and government use. The idea that a search-and-retrieval system based on precedent technology and funded in part by the National Science Foundation with a patent assigned to Stanford University could become Googzilla was a difficult idea to swallow. One of the investment banks that paid for our research got the message even though others did not. I wonder if that one group at the then world’s largest software company remembers my lecture about the threat Google posed to a certain suite of software applications? Probably not. The 20 somethings and the few suits at the lecture looked like kindergarteners waiting for recess.

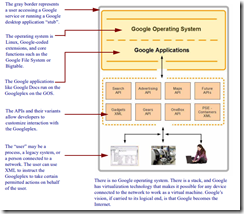

I followed up The Google Legacy with Google Version 2.0: The Calculating Predator. This monograph was again based on proprietary research done for my commercial clients. I recycled some of the information, scrubbing that which was deemed inappropriate for anyone to buy for a few British pounds. In that work, I rather methodically explained that Google’s patent documents provided useful information about why the mere Web search engine was investing in what seemed like odd-ball technologies like software janitors. I reworked one diagram to show how the Google infrastructure operated like a prison cell or walled garden. The idea is that once one is in, one may have to work to get past the gatekeeper to get out. I know the image from a book does not translate to a blog post, but, truth be told, I am disinclined to recreate art. At age 78, it is often difficult to figure out why smart drawing tools are doing what they want, not what I want.

Here’s the diagram:

The prison cell or walled garden (2006) from Google Version 2.0: The Calculating Predator, published by Infonortics Ltd., 2006. And for any copyright trolls out there, I created the illustration 20 years ago, not Alamy and not Getty and no reputable publisher.

Three observations about the diagram are: [a] The box, prison cell, or walled garden contains entities, [b] once “in” there is a way out but the exit is via Google intermediated, defined, and controlled methods, and [c] anything in the walled garden perceives that the prison cell is the outside world. The idea obviously is for Google to become the digital world which people will perceive as the Internet.

I thought about my decades old research when I read “Google Tries to Defend Its Web Environment Integrity as Critics Slam It as Dangerous.” The write up explains that Google wants to make online activity better. In the comments to the article, several people point out that Google is using jargon and fuzzy misleading language to hide its actual intentions with the WEI.

The critics and the write up miss the point entirely: Look at the diagram. WEI, like the AMP initiative, is another method added to existing methods for Google to extend its hegemony over online activity. The patent, implement, and explain approach drags out over years. Attention spans are short. Many people, even academics who make up data like the president of Stanford University, are not interested in anything other than personal goal achievement. Finding out something visible for years is difficult. When some interesting factoid is discovered, few accept it. Google has a great brand, and it cares about user experience and the other fog the firm generates.

MidJourney created this nice image of a Googler preparing for a presentation to the senior management of Google in 2001. In that presentation, the wizard was outlining Google’s fundamental strategy: Fake left, go right. The slogan for the company, based on my research, is “keep them fooled.” Looking the wrong way is the basic rule of being a successful Googler, strategist, or magician.

Will Google WEI win? It does not matter because Google will just whip up another acronym, toss some verbal froth around, and move forward. What is interesting to me is Google’s success. Points I have noted over the years are:

- Kindergarten colors, Google mouse pads, and talking like General Electric once did about “bringing good things” continue to work

- Google’s dominance is not just accepted; changing or blocking anything Google wants to do is sacrilegious. It has become a sacred digital cow

- The inability of regulators to see Google as it is remains a constant, like Google’s advertising revenue

- Certain government agencies could not perform their work if Google were impeded in any significant way. No, I will not elaborate on this observation in a public blog post. Don’t even ask. I may make a comment in my keynote at the Massachusetts / New York Association of Crime Analysts’ conference in early October 2023. If you can’t get in, you are out of luck getting information on Point Four.

Net net: Fire up your Chrome browser. Look for reality in the Google search results. Turn cartwheels to comply with Google’s requirements. Pay money for traffic via Google advertising. Learn how to create good blog posts from Google search engine optimization experts. Use Google Maps. Put your email in Gmail. Do the Google thing. Then ask yourself, “How do I know if the information provided by Google is ‘real’?” Just don’t get curious about synthetic data for Google smart software. Predictions about Big Brother are wrong. Google, not the government, is the digital parent whom you embraced after a good “Backrub.” Why change from high school science thought processes? If it ain’t broke, don’t fix it.

Stephen E Arnold, July 31, 2023

The Google Reorg. Will It Output Xooglers, Not Innovations?

April 25, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My team and I have been talking about the Alphabet decision to merge DeepMind with Google Brain. Viewed from one angle, the decision reflects the type of efficiency favored by managers who value the idea of streamlining. The arguments for consolidation are logical; for example, the old tried-and-true buzzword synergy may be invoked to explain the realignment. The decision makes business sense, particularly for an engineer or a number-oriented MBA, accountant, or lawyer.

Arguing against the “one plus one equals three” viewpoint may be those who have experienced the friction generated when staff, business procedures, and projects get close, interact, and release energy. I use the term “energy” to explain the dormant forces unleashed as the reorganization evolves. When I worked at a nuclear consulting firm early in my career, I recall the acrimonious and irreconcilable differences between a smaller unit in Florida and a major division in Maryland. The fix was to reassign personnel and give up on the dream of one big, happy group.

This somewhat pathos-infused image was created using NightCafe Creator and Craiyon. The author (a dinobaby) added the caption which may appeal to large language model-centric start ups with money, ideas, and a “we can do this” vibe.

Over the years, my team and I have observed Google’s struggles to innovate. The successes have been notable. Before the Alphabet entity was constructed, the “old” Google purchased Keyhole, Inc. (a spin-off of the gaming company Intrinsic Graphics). That worked after the US government invested in the company. There have been some failures too. My team followed the Orkut product which evolved from a hire named Orkut Büyükkökten, who had developed an allegedly similar system while working at InCircle. Orkut was a success, particularly among users in Brazil and a handful of other countries. However, some Orkut users relied on the system for activities which some found unacceptable. Google killed the social networking system in 2014 as Facebook surged to global prominence and Google’s efforts fell to earth. The company was in a position to be a player in social media, and it botched the opportunity. Steve Ballmer allegedly described Google as a “one-trick pony.” Mr. Ballmer’s touch point was Google’s dependence on online advertising: One source of revenue; therefore, a circus pony able to do one thing. Mr. Ballmer’s quip illustrates the fact that over the firm’s 20-plus year history, Google has not been able to diversify its revenue. More than two-thirds of the company’s money comes directly or indirectly from advertising.

My team and I have watched Google struggle to adapt its free-wheeling style to a more traditional business approach to policies and procedures. In one notable incident, my team and I were involved in reviewing proposals to index the content of the US Federal government. Google was one of the bidders. The Google proposal did not follow the expected format of responding to each individual requirement in the request for proposal. In 2000, Google professionals made it clear its method did not require that the government’s statement of work be followed. Other vendors responded, provided the required technical commentary, and produced cost estimates in a format familiar to those involved in the contracting award process. Flash forward 23 years, and Google has figured out how to capture US government work.

The key point: The learning process took a long time.

Why is this example relevant to the Alphabet decision to blend the Brain and DeepMind units? Change, despite the myths of Silicon Valley, is difficult for Alphabet. The tensions at the company are well known. Employees and part-time workers grouse and sometimes carry signs and disturb traffic. Specific personnel matters become, rightly or wrongly, messages that say, Google is unfair. The Google management generated an international spectacle with its all-thumbs approach to human relations. Dr. Timnit Gebru was a co-author of a technical paper which identified a characteristic of smart software. She and several colleagues explained that bias in training data produces results which are skewed. Anyone who has used any of the search systems which used open source libraries created by Google knows that outputs are variable, which is a charitable way of saying, “Dr. Gebru was correct.” She became a Xoogler, set up a new organization, and organized a conference to further explain her research, the same research which ruffled the feathers of some Alphabet big birds.

The pace of generative artificial intelligence is accelerating. Disruption can be smelled like ozone in an old-fashioned electric power generation station. My team and I attempt to continue tracking innovations in smart software. We cannot do it. I am prepared to suggest that the job is quite challenging because the flow of new ChatGPT-type products, services, applications, and features is astounding. I recall the early days of the Internet when in 1993 I could navigate to a list of new sites via Mosaic browser and click on the ones of interest. I recall that in a matter of months the list grew too long to scan and was eventually discontinued. Smart software is behaving in this way: Too many people are doing too many new things.

I want to close this short personal essay with several points.

First, mashing up different cultures and a history of differences will act like a brake and add friction to innovative work. Such reorganizations will generate “heat” in the form of disputes, overt or quiet quitting, and an increase in productivity killers like planning meetings, internal product pitches, and getting legal’s blessing on a proposed service.

Second, a revenue monoculture is in danger when one pest runs rampant. Alphabet does not have a mechanism to slow down what is happening in the generative AI space. In online advertising, Google has knobs and levers. In the world of creating applications and hooking them together to complete tasks, Alphabet management seems to lack a magic button. The pests just eat the monoculture’s crop.

Third, the unexpected consequence of merging Brain and DeepMind may be creating what I call a “Xoogler Manufacturing Machine.” Annoyed or “grass is greener” Google AI experts may go to one of the many promising generative AI startups. Note: A former Google employee is sometimes labeled a “Xoogler,” which is shorthand for ex-Google employee.

Net net: In a conversation in 2005 with a Google professional whom I cannot name due to the confidentiality agreement I signed with the firm, I asked, “Do you think people and government officials will figure out what Google is really doing?” This person, who was a senior manager, said to the best of my recollection, “Sure and when people do, it’s game.” My personal view is that Alphabet is in a game in which the clock is ticking. And in the process of underperforming, Alphabet’s advertisers and users of free and for-fee services will shift their attention elsewhere, probably to a new or more agile firm able to leverage smart software. Alphabet’s most recent innovation is the creation of a Xoogler manufacturing system. The product? Former Google employees who want to do something instead of playing in the Alphabet sandbox with argumentative wizards and several ill-behaved office pets.

Stephen E Arnold, April 24, 2023

Search and Retrieval: A Sub Sub Assembly

January 2, 2023

What’s happening with search and retrieval? Google’s results irritate some; others are happy with Google’s shaping of information. Web competitors exist; for example, Kagi.com and Neeva.com. Both are subscription services. Others provide search results “for free”; examples include Swisscows.com and Yandex.com. You can find metasearch systems (minimal original spidering, just recycling results from other services like Bing.com); for instance, StartPage.com (formerly Ixquick.com) and DuckDuckGo.com. Then there are open source search options. The flagship or flagships are Solr and Lucene. Proprietary systems exist too. These include the ageing X1.com and the even age-ier Coveo system. Remnants of long-gone systems are kicking around too; to wit, BRS and Fulcrum from OpenText, Fast Search now a Microsoft property, and Endeca, owned by Oracle. But let’s look at search as it appears to a younger person today.

A decayed foundation created via smart software on the Mage.space system. A flawed search and retrieval system can make the structure built on the foundation crumble like Southwest Airlines’ reservation system.

First, the primary means of access is via a mobile device. Surprisingly, the source of information for many is video content delivered by the China-linked TikTok or the advertising remora YouTube.com. In some parts of the world, the go-to information system is Telegram, developed by Russian brothers. This is a centralized service, not a New Wave Web 3 confection. One can use the service and obtain information via a query or a group. If one is “special,” an invitation to a private group allows access to individuals providing information about open source intelligence methods or the Russian special operation, including allegedly accurate video snips of real-life war or disinformation.

The challenge is that search is everywhere. Yet in the real world, finding certain types of information is extremely difficult. Obtaining that information may be impossible without informed contacts, programming expertise, or money to pay what would have been called “special librarian research professionals” in the 1980s. (Today, it seems, everyone is a search expert.)

Here are examples of the types of information which are difficult if not impossible to obtain:

- The ownership of a domain

- The ownership of a Tor-accessible domain

- The date at which a content object was created, the date the content object was indexed, and the date or dates referenced in the content object

- Certain government documents; for example, unsealed court documents, US government contracts for third-party enforcement services, authorship information for a specific Congressional bill draft, etc.

- A copy of a presentation made by a corporate executive at a public conference.

I can provide other examples, but I wanted to highlight the flaws in today’s findability.

A Xoogler May Question the Google about Responsible and Ethical Smart Software

December 2, 2021

Write a research paper. Get colleagues to provide input. Well, ask colleagues to do that work and what do you get? How about “Looks good.” Or “Add more zing to that chart.” Or “I’m snowed under so it will be a while but I will review it…” Then the paper wends its way to publication and a senior manager type reads the paper on a flight from one whiz kid town to another whiz kid town and says, “This is bad. Really bad because the paper points out that we fiddle with the outputs. And what we set up is biased to generate the most money possible from clueless humans under our span of control.” Finally, the paper is blocked from publication and the offending PhD is fired or sent signals that your future lies elsewhere.

Will this be a classic arm wrestling match? The winner may control quite a bit of conceptual territory along with knobs and dials to shape information.

Could this happen? Oh, yeah.

“Ex Googler Timnit Gebru Starts Her Own AI Research Center” documents the next step, which may mean that some wizards’ undergarments will be sprayed with eau de poison oak for months, maybe years. Here’s one of the statements from the Wired article:

“Instead of fighting from the inside, I want to show a model for an independent institution with a different set of incentive structures,” says Gebru, who is founder and executive director of Distributed Artificial Intelligence Research (DAIR). The first part of the name is a reference to her aim to be more inclusive than most AI labs—which skew white, Western, and male—and to recruit people from parts of the world rarely represented in the tech industry. Gebru was ejected from Google after clashing with bosses over a research paper urging caution with new text-processing technology enthusiastically adopted by Google and other tech companies.

The main idea, which Wired and Dr. Gebru delicately sidestep, is that there are allegations of an artificial intelligence or machine learning cabal drifting around some conference hall chatter. On one side is the push for what I call the SAIL approach. The example I use to show how this cost effective, speedy, and clever short cut approach works comes from some of the work of Dr. Christopher Ré, the captain of the objective craft SAIL. Oh, is the acronym unfamiliar to you? SAIL is the short version of Stanford Artificial Intelligence Laboratory. SAIL fits on the Snorkel content diving gear I think.

On the other side of the ocean are Dr. Timnit Gebru’s fellow travelers. The difference is that Dr. Gebru believes that smart software should not reflect the wit, wisdom, biases, and general bro-ness of the high school science club culture. This culture, in my opinion, has contributed to the fraying of the social fabric in the US, caused harm, and eroded behaviors that are supposed to be subordinated to “just what people do to make a social system function smoothly.”

Does the Wired write up identify the alleged cabal? Nope.

Does the write up explain that the Ré / Snorkel methods sacrifice some precision in the rush to generate good enough outputs? (Good enough can be framed in terms of ad revenue, reduced costs, and faster time to market testing in my opinion.) Nope.

Does Dr. Gebru explain how insidious the short cut training of models is and how it will create systems which actively harm those outside the 60 percent threshold of certain statistical yardsticks? Heck, no.

Hopefully some bright researchers will explain what’s happening with a “deep dive”? Oh, right, Deep Dive is the name of a content access company which uses Dr. Ré’s methods. Ho, ho, ho. You didn’t know?

Beyond Search believes that Dr. Gebru has important contributions to make to applied smart software. Just hurry up already.

Stephen E Arnold, December 2, 2021

Search Engines: Bias, Filters, and Selective Indexing

March 15, 2021

I read “It’s Not Just a Social Media Problem: How Search Engines Spread Misinformation.” The write up begins with a Venn diagram. My hunch is that quite a few people interested in search engines will struggle with the visual. Then there is the concept that typing in a search term returns results that are like loaded dice in a Manhattan craps game in Union Square.

The reasons, according to the write up, that search engines fall off the rails are:

- Relevance feedback or the Google-borrowed CLEVER method from IBM Almaden’s patent

- Fake stories which are picked up, indexed, and displayed as value infused.

The write up points out that people cannot differentiate between accurate, useful, or “factual” results and crazy information.

Okay, here’s my partial list of why Web search engines return flawed results:

- Stop words. Control the stop words and you control the info people can find

- Stored queries. Type what you want but get the results already bundled and ready to display.

- Selective spidering. The idea is that any index is a partial representation of the possible content. Instruct spiders to skip Web sites with information about peanut butter, and, bingo, no peanut butter information

- Spidering depth. Is the bad stuff deep in a Web site? Just limit the crawl to fewer links.

- Spider within a span. Is a marginal Web site linking to sites with info you want killed? Don’t follow links off a domain.

- Delete the past. Who looks at historical info? A better question, “What advertiser will pay to appear on old content?” Kill the backfile. Web indexes are not archives no matter what thumbtypers believe.
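Three of the spidering controls in the list above (selective spidering, spidering depth, and spider within a span) can be sketched in a few lines of Python. This is a toy illustration, not how any particular Web search engine works. The `PAGES` table, the `a.example` and `b.example` sites, and the `crawl` function with its parameters are all made up for this example.

```python
from collections import deque
from urllib.parse import urlparse

# Hypothetical toy "Web": URL -> (page text, outbound links). Not real sites.
PAGES = {
    "https://a.example/": ("home page", ["https://a.example/deep1", "https://b.example/"]),
    "https://a.example/deep1": ("peanut butter recipes", ["https://a.example/deep2"]),
    "https://a.example/deep2": ("old backfile story", []),
    "https://b.example/": ("marginal site", []),
}


def crawl(seed, max_depth, blocked_terms=(), stay_on_domain=False):
    """Breadth-first crawl; returns the URLs that end up in the index."""
    seed_host = urlparse(seed).netloc
    indexed = []
    seen = {seed}
    frontier = deque([(seed, 0)])
    while frontier:
        url, depth = frontier.popleft()
        text, links = PAGES.get(url, ("", []))
        # Selective spidering: a page on a blocked topic is neither indexed nor followed.
        if any(term in text for term in blocked_terms):
            continue
        indexed.append(url)
        # Spidering depth: stop following links once the depth limit is reached.
        if depth >= max_depth:
            continue
        for link in links:
            # Spider within a span: optionally ignore links that leave the seed's domain.
            if stay_on_domain and urlparse(link).netloc != seed_host:
                continue
            if link not in seen:
                seen.add(link)
                frontier.append((link, depth + 1))
    return indexed


full = crawl("https://a.example/", max_depth=5)
shallow = crawl("https://a.example/", max_depth=1)
no_peanut_butter = crawl("https://a.example/", max_depth=5, blocked_terms=("peanut butter",))
on_domain_only = crawl("https://a.example/", max_depth=5, stay_on_domain=True)
```

In this toy setup each knob quietly shrinks the index: the shallow crawl never reaches the page two links down, the blocked topic removes the peanut butter page and everything reachable only through it, and the on-domain crawl never sees the second site. A person running queries against the index has no way to know what was left out.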

There are other methods available as well; for example, objectionable info can be placed in near line storage so that results from questionable sources display with latency, slowly enough to cause the curious user to click away.

To sum up, some discussions of Web search are not complete or accurate.

Stephen E Arnold, March 15, 2021

Open Source Software: The Community Model in 2021

January 25, 2021

I read “Why I Wouldn’t Invest in Open-Source Companies, Even Though I Ran One.” I became interested in open source search when I was assembling the first of three editions of Enterprise Search Report in the early 2000s. I debated whether to include Compass Search, the precursor to Shay Banon’s Elasticsearch reprise. Over the years, I have kept my eye on open source search and retrieval. I prepared a report for the outfit IDC, which happily published sections of the document and offered my write ups for $3,000 on Amazon. Too bad IDC had no agreement with me, managers who made Daffy Duck look like a model for MBAs, and a keen desire to find a buyer. Ah, the book still resides on one of my backup drives, and it contains a run down of where open source was getting traction. I wrote the report in 2011 before getting the shaft-a-rama from a mid tier consulting firm. Great experience!

The report included a few nuggets which in 2011 not many experts in enterprise search recognized; for instance:

- Large companies were early and enthusiastic adopters of open source search; for example Lucene. Why? Reduce costs and get out of the crazy environment which put Fast Search & Transfer-type executives in prison for violating some rules and regulations. The phrase I heard in some of my interviews was, “We want to get out of the proprietary software handcuffs.” Plus big outfits had plenty of information technology resources to throw at balky open source software.

- Developers saw open source in general and contributing to open source information retrieval projects as a really super duper way to get hired. For example, IBM — an early enthusiast for a search system which mostly worked — used the committers as feedstock. The practice became popular among other outfits as well.

- Venture outfits stuffed with oh-so-technical MBAs realized that consulting services could be wrapped around free software. Sure, there were legal niceties in the open source licenses, but these were not a big deal when Silicon Valley super lawyers were just a text message away.

There were other findings as well, including the initiatives underway to embed open source search, content processing, and related functions into commercial products. Attivio (formed by former super star managers from Fast Search & Transfer), Lucid Works, IBM, and other bright lights adopted open source software to [a] reduce costs, [b] eliminate the R&D required to implement certain new features, and [c] develop expensive, proprietary components, training, and services.

Security Vendors: Despite Marketing Claims for Smart Software Knee Jerk Response Is the Name of the Game

December 16, 2020

Update 3, December 16, 2020 at 1005 am US Eastern, the White House has activated its cyber emergency response protocol. Source: “White House Quietly Activates Cyber Emergency Response” at Cyberscoop.com. The directive is located at this link and verified at 1009 am US Eastern as online.

Update 2, December 16, 2020 at 1002 am US Eastern. The Department of Treasury has been identified as an entity compromised by the SolarWinds’ misstep. Source: “US Treasury, Commerce Depts. Hacked through SolarWinds Compromise” at KrebsonSecurity.com

Update 1, December 16, 2020, at 950 am US Eastern. The SolarWinds’ security misstep may have taken place in 2018. Source: “SolarWinds Leaked FTP Credentials through a Public GitHub Repo “mib-importer” Since 2018” at SaveBreach.com

I talked about security theater in a short interview/conversation with a former CIA professional. The original video of that conversation is here. My use of the term security theater is intended to convey the showmanship that vendors of cyber security software have embraced for the last five years, maybe more. The claims of Dark Web threat intelligence, the efficacy of investigative software with automated data feeds, and Bayesian methods which inoculate a client from bad actors— maybe this is just Madison Avenue gone mad. On the other hand, maybe these products and services don’t work particularly well. Maybe these products and services are anchored in what bad actors did yesterday and are blind to the here and now of dudes and dudettes with clever names?

Evidence of this approach to a spectacular security failure is documented in the estimable Wall Street Journal (hello, Mr. Murdoch) and the former Ziff entity ZDNet. Numerous online publications have reported, commented, and opined about the issue. One outfit with a bit of first hand experience with security challenges (yes, I am thinking about Microsoft) reported “SolarWinds Says Hack Affected 18,000 Customers, Including Two Major Government Agencies.”

One point seems to be sidestepped in the coverage of this “concern.” The corrective measures kicked in after the bad actors had compromised and accessed what may be sensitive data. Just a mere 18,000 customers were affected. Who were these “customers”? The list seems to have been disappeared from the SolarWinds’ Web site and from the Google cache. But Newsweek, an online information service, posted this which may, of course, be horse feathers (sort of like security vendors’ security systems?):