Beyond Search About Page Update

November 9, 2011

After the carpet bombing by AtomicPR, I updated my About page. I wanted to make my policies clearer than ever. You can find the About page at this link.

The main point is that I did not design Beyond Search to be a news publication. If news turns up in the blog content stream, that’s an error. We rely on open source content and use it as a springboard for our commentary.

Beyond Search exists for three reasons:

First, I capture factoids, quotes, and thoughts in a chronological manner, seven days a week, three to seven stories a day. We have more than 6,700 content objects in the archive. I use this material for my for-fee columns, reports, and personal research. If someone reads a blog post and thinks I am doing news, get out of here. The blog was designed for me by me. I have two or three readers, but the only reader who counts is I.


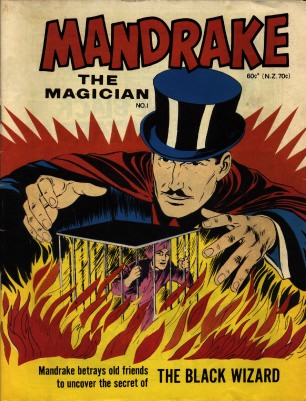

Test question: Which one bills for time and which one donates to better the world? Give up? Mother Teresa is on the right. The billing machine with the mustache is on the left. PR and marketing professionals, are you processing the time=money statement?

Second, if someone wants us to cover a particular technology topic, we will do it if the item is interesting to us. If we write something up, we charge for our time. We also sell ads at the top and side of the splash page content window. The reason? I pay humans to work on my research with me, and I put some, not all, just some of the information I process in the blog. I am not Mother Teresa, and I don’t have much interest in helping injured dogs or starving artists. Therefore, in my world my time equals money. Don’t like it? Don’t ask me to do something for you, your client, your mom’s recent investment, or a friend of a friend.

Third, the Beyond Search content links to high value, original information like the Search Wizards Speak series. Anyone in an academic or educational role can use my content without asking. If you are a failed home economics teacher now working as a search expert or an azure chip consultant pretending you know something about content analytics, you will have to get my permission to rip off, recycle, republish, or otherwise make what’s mine yours. I have been reasonable in allowing reuse of my content. There’s one person in Slovenia who actually translates my blog content into a language with many consonants. No problem. Just ask. Don’t ask? Well, I can get frisky.

A Coming Dust Up between Oracle and MarkLogic?

November 7, 2011

Is XML the solution to enterprise data management woes? Is XML a better silver bullet than taxonomy management? Will Oracle sit on the sidelines or joust with MarkLogic?

Last week, an outfit named AtomicPR sent me a flurry of news releases. I wrote to a chipper Atomic person, mentioning that I sell coverage and that the three news releases looked a lot like spam to me. No answer, of course.

A couple of years ago, we did some work for MarkLogic, a company focused on Extensible Markup Language or XML. I suppose that means AtomicPR can nuke me with marketing fluff. At age 67, getting nuked, whether by email or by aches and pains, is not my idea of fun.

Since August 2011, MarkLogic has been “messaging” me. The recent 2011 news releases explained that MarkLogic was hooking XML to the buzzword “big data.” I am not exactly sure what “big data” means, but that is neither here nor there.

In September 2011, I learned that MarkLogic had morphed into a search vendor. I was surprised. Maybe “amazed” is a more appropriate word. See Information Today’s interview with Ken Bado, formerly an Autodesk employee. (Autodesk makes “proven 3D software that accelerates better design.” Autodesk was the former employer of Carol Bartz when Autodesk was an engineering and architectural design software company. I have a difficult time keeping up with information management firms’ positioning statements. I refer to this as “fancy dancing” or “floundering” even though an azure chip consultant insists I really should use the word “foundering.” I love it when azure chip consultants and self appointed experts offer advice to my free blog.)

In a joust between Oracle and MarkLogic, which combatant will be on the wrong end of the pointy stick thing? When marketing goes off the rails, the horse could be killed. Is that one reason senior executives exit the field of battle? Is that one reason veterinarians haunt medieval re-enactments?

Trade Magazine Explains the New MarkLogic

I thought about MarkLogic when I read “MarkLogic Ties Its Database to Hadoop for Big Data Support.” The PCWorld story stated:

MarkLogic 5, which became generally available on Tuesday, includes a Hadoop connector that will allow customers to “aggregate data inside MarkLogic for richer analytics, while maintaining the advantages of MarkLogic indexes for performance and accuracy,” the company said.

A connector is a software widget that allows one system to access the information in another system. I know this is a vastly simplified explanation. Earlier this year, Palantir and i2 Group (now part of IBM) got into an interesting legal squabble over connectors. I believe I made the point in a private briefing that “connectors are a new battleground.” The MarkLogic story in PCWorld indicated that MarkLogic is chummy with Hadoop via connectors. I don’t think MarkLogic codes its own connectors. My recollection is that ISYS Search Software licenses some connectors to MarkLogic, but that deal may have gone south by now. And, MarkLogic is a privately held company funded, I believe, by Lehman Brothers, Sequoia Capital, and Tenaya Capital. I am not sure “open source” and these financial wizards are truly harmonized, but again I could be wrong, living in rural Kentucky and wasting my time in retirement writing blog posts.

The Perils of Searching in a Hurry

November 1, 2011

I read the Computerworld story “How Google Was Tripped Up by a Bad Search.” I assume that it is pretty close to events as the “real” reporter summarized them.

Let me say that I am not too concerned about the fact that Google was caught in a search trip wire. I am concerned with a larger issue, and one that is quite important as search becomes indexing, facets, knowledge, prediction, and apps. The case reported by Computerworld applies to much of “finding” information today.

Legal matters are rich with examples of big outfits fumbling a procedure or making an error under the pressure of litigation or even contemplating litigation. The Computerworld story describes an email which may be interpreted as having a bright LED to shine on the Java in Android matter. I found this sentence fascinating:

Lindholm’s computer saved nine drafts of the email while he was writing it, Google explained in court filings. Only to the last draft did he add the words “Attorney Work Product,” and only on the version that was sent did he fill out the “to” field, with the names of Rubin and Google in-house attorney Ben Lee.

Ah, the issue of versioning. How many content management experts have ignored this issue in the enterprise? When search systems index, does one want every version indexed or just the “real” version? And what is the “real” version? A person has to investigate and then make a decision. Software and azure chip consultants, governance and content management experts, and busy MBAs and contractors are often too busy to perform this work. Some may describe it as grunt work.
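To make the versioning point concrete, here is a minimal sketch of what a system has to do if it wants to index only the “real” version. It assumes each saved copy carries a thread identifier, a save time, and a flag marking the copy that was actually sent; the `Draft` record, its fields, and the rule “the sent copy wins, otherwise the newest draft” are hypothetical, chosen only for illustration. Someone still has to decide that the rule is right, which is exactly the judgment call described above.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Draft:
    # Hypothetical record for one saved copy of a document.
    thread_id: str       # groups all drafts of the same message
    saved_at: datetime   # when this copy was saved
    sent: bool           # True only for the copy that actually went out


def pick_real_version(drafts: list[Draft]) -> dict[str, Draft]:
    """Keep one 'real' version per thread: the sent copy if any, else the newest draft."""
    best: dict[str, Draft] = {}
    for d in drafts:
        current = best.get(d.thread_id)
        if current is None:
            best[d.thread_id] = d
            continue
        # Tuples compare element by element: a sent copy beats an unsent one,
        # and among copies with the same sent status the newer one wins.
        if (d.sent, d.saved_at) > (current.sent, current.saved_at):
            best[d.thread_id] = d
    return best
```

Every other draft is simply dropped by this rule, which is convenient for a search index and exactly the wrong behavior for a legal review that must see all of them.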

What I am considering is the confluence of people who assume “search” works, the lack of time Outlook and iCalendar “priority one” people face, and the reluctance to sit down and work through documents in a thorough manner. This is part of the “problem” with search, and software is not going to resolve the problem quickly, if ever.

Source: http://www.clipartguide.com/_pages/0511-1010-0617-4419.html

What struck me is how people in a hurry, assumptions about search, and legal procedures underscore a number of problems in findability. But the key paragraph in the write up, in my opinion, was:

It’s unclear exactly how the email drafts slipped through the net, and Google and two of its law firms did not reply to requests for comment. In a court filing, Google’s lawyers said their “electronic scanning tools” — which basically perform a search function — failed to catch the documents before they were produced, because the “to” field was blank and Lindholm hadn’t yet added the words “attorney work product.” But documents produced for opposing counsel should normally be reviewed by a person before they go out the door, said Caitlin Murphy, a senior product manager at AccessData, which makes e-discovery tools, and a former attorney herself. It’s a time-consuming process, she said, but it was “a big mistake” for the email to have slipped through.
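Google’s filing, as quoted, says the scanning tools missed the drafts because the “to” field was blank and the privilege label was absent. The sketch below is a hypothetical rule of that general kind, not Google’s actual tool. It assumes a document is flagged only if its text contains a marker phrase or its recipients include counsel. The marker list and the function name are illustrative assumptions. An early draft with no marker and no recipients passes straight through, which is why a human review step matters.

```python
# Hypothetical marker phrases; a real screen would use a reviewed list.
PRIVILEGE_MARKERS = ("attorney work product", "privileged")


def looks_privileged(doc: dict, counsel: set[str]) -> bool:
    """Flag a document if it carries a privilege marker or is addressed to counsel.

    `counsel` is expected to hold lower-case email addresses.
    """
    text = doc.get("body", "").lower()
    recipients = {r.lower() for r in doc.get("to", [])}
    has_marker = any(marker in text for marker in PRIVILEGE_MARKERS)
    addressed_to_counsel = bool(recipients & counsel)
    return has_marker or addressed_to_counsel
```

Run against the sent version of a message, the rule fires. Run against an earlier draft of the same message, one with the “to” field still empty and no label yet, it returns `False` and the draft is treated as producible.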

What did I think when I read this?

First, all the baloney (yep, the right word, folks) about search, facets, metadata, indexing, clustering, governance, and analytics underscores something I have been saying for a long, long time. Search is not working the way lots of people assume it does. You can substitute “eDiscovery,” “text mining,” or “metatagging” for search. The statement holds water for each.

The algorithms will work within limits, but the problem with search has to do with language. Software, no matter how sophisticated, gets fooled by missing data elements, versions, and words themselves. It is high time that the people yapping about how wonderful automated systems are stop and ask themselves this question, “Do I want to go to jail because I assumed a search or content processing system was working?” I know my answer.

Second, in the Computerworld write up, the user’s system dutifully saved multiple versions of the document. Okay, SharePoint lovers, here’s a question for you: Does your search system make clear which antecedent version is which and which document is the best and final version? We know from the Computerworld write up that the Google system did not make this distinction. My point is that the nifty sounding yap about how “findable” a document is remains mostly baloney. Azure chip consultants and investment banks can convince themselves and the widows from whom money is derived that a new search system works wonderfully. I think the version issue makes clear that most search and content processing systems still have problems with multiple instances of documents. Don’t believe me? Go look for the drafts of your last PowerPoint. Now, to whom did you email a copy? From whom did you get inputs? Which set of slides was the one on the laptop you used for the briefing? Which was the “correct” version of the presentation? If you cannot answer these questions, how will software?

Software and Smart Content

October 30, 2011

I was moving data from Point A to Point B yesterday, filtering junk that has marginal value. I scanned a news story from a Web site which covers information technology with a Canadian perspective. The story was “IBM, Yahoo turn to Montreal’s NStein to Test Search Tool.” In 2006, IBM was a pace-setter in search development cost control. The company was relying on the open source community’s Lucene technology, not the wild and crazy innovations from Almaden and other IBM research facilities. Web Fountain and jazzy XML methods were promising ways to make dumb content smart, but IBM needed a way to deliver bread-and-butter findability at a sustainable, acceptable cost. The result was OmniFind. When we tested the Yahoo OmniFind edition after it became available, I made a note to myself:

Installation was fine on the IBM server. Indexing seemed sluggish. Basic search functions generated a laundry list of documents. Ho hum.

Maybe this comment was unfair, but five years ago, there were arguably better search and retrieval systems. I was in the midst of the third edition of the Enterprise Search Report, long since bastardized by the azure chip crowd and the “real” experts. But we had a test corpus, lots of hardware, and an interest in seeing for ourselves how tough it was to get an enterprise search system up and running. Our impression was that most people would slam in the system, skip the fancy stuff, and move on to more interesting things such as playing Foosball.

Thanks to Adobe for making software that creates a need for Photoshop training. Source: http://www.practical-photoshop.com/PS2/pages/assign.html

Smart, Intelligent… Information?

In this blast from the past article, NStein’s product in 2006 was “an intelligent content management product used by media companies such as Time Magazine and the BBC, and a text mining tool called NServer.” The idea was to use search plus a value adding system to improve the enterprise user’s search experience.

The use of the word “intelligent” to describe a content processing system is nothing new. It reaches back through the decades to computer aided logistics and forward to Extensible Markup Language methods.

The idea of “intelligent” is a pregnant one, with a gestation period measured in decades.

Flash forward to the present. IBM markets OmniFind and a range of products which provide basic search as a utility function. NStein is a unit of OpenText, and it has been absorbed into a conglomerate with a number of search systems. The investment needed to update, enhance, and extend BASIS, BRS Search, NStein, and the other systems OpenText “sells” is a big number. “Intelligent content” has not been an OpenText buzzword for a couple of years.

The torch has been passed to conference organizers and a company called Thoora, which “combines aggregation, curation, and search for personalized news streams.” You can get some basic information in the TechCrunch article “Thoora Releases Intelligent Content Discovery Engine to the Public.”

In two separate teleconference calls last week (October 24 to 28, 2011), “intelligent content” came up. In one call, the firm explained that traditional indexing systems missed important nuances. By processing a wide range of content and querying a proprietary index of the content, the information derived from the content would be more findable. When a document was accessed, the content was “intelligent”; that is, the document contained value added indexing.

The second call focused on the importance of analytics. The content processing system would ingest a wide range of unstructured data, identify items of interest such as the name of a company, and use advanced analytics to make relationships and other important facets of the content visible. The documents were decomposed into components, and each of the components was “smart”. Again the idea is that the fact or component of information was related to the original document and to the processed corpus of information.

No problem.

Shift in Search

We are witnessing another one of those abrupt shifts in enterprise search. Here’s my working hypothesis. (If you harbor a lifelong love of marketing baloney, quit reading because I am gunning for this pressure point.)

Let’s face it. Enterprise search is just not revving the engines of the people in information technology or the chief financial officer’s office. Money pumped into search typically generates a large number of user complaints, security issues, and cost spikes. As content volume goes up, so do costs. The enterprise is not Google-land, and money is limited. The content is quite complex, and who wants to try to crack the nut of 21st century data flows with 1990s technology? Not I. So something hotter is needed.

Second, the hottest trends in “search” have nothing to do with search whatsoever. One example is conflating the interface with precision and recall. Sorry. Does not compute for me. The other angle is “mobile.” Sure, search will work when everything is monitored and “smart” software provides what a statistically appropriate method suggests will work “most” of the time. There is also the baloney about apps, which is little more than the gamification of what in many cases might better be served with a system that makes the user confront actual data, not an abstraction of data. What this means is that people are looking for a way to provide information access without having to grunt around in the messy innards of editorial policies, precision, recall, and other tasks that are intellectually rigorous in a way that Angry Birds interfaces for business intelligence are not.

Third, companies engaged in content access are struggling for revenue. Sure, the best of the search vendors have been purchased by larger technology companies. These acquisitions guarantee three things.

- The Wild West spirit of the innovative content processing vendors is essentially going to be stamped out. Creativity will be herded into the corporate killing pens, and the “team” will be rendered as meat products for a technology McDonald’s.

- The cash sinkholes that search vendors’ research programs once were will be filled with procedure manuals and forms. At a Fortune 1000 company, there is no money for blue sky problem solving to crack the tough problems in information retrieval. Cash can be better spent on things that may actually generate a return. After all, if the search vendors were so smart, why did most companies hit revenue ceilings and have to turn to acquisitions to generate growth? Of the firms unable to grow revenues, some just fiddled the books. Others had to get injections of cash like a senior citizen in the last six months of life in a care facility. So acquired companies are not likely to be hotbeds of innovation.

- The pricing mechanisms which search vendors have so cleverly hidden, obfuscated, and complexified will be tossed out the window. When a technology is a utility, then giant corporations will incorporate some of the technology in other products to make a sale.

What we have, therefore, is a search marketplace where the most visible and arguably successful companies have been acquired. The companies still in the marketplace now have to market like the Dickens and figure out how to cope with free open source solutions and giant acquirers who will just give away search technology.

Enterprise Search: The Floundering Fish!

October 27, 2011

I am thinking about another monograph on the topic of “enterprise search.” The subject seems to be a bit like the motion picture protagonist Jason. Every film ends with Jason apparently out of action. Then, six or nine months later, he’s back. Knives, chains, you name it.

The Landscape

The landscape of enterprise search is pretty much unchanged. I know that the folks who pulled off the billion dollar deals are different. These guys and gals have new Bimmers and maybe a private island or some other sign of wealth. But the technology of yesterday’s giants of enterprise search is pretty much unchanged. Whenever I say this, I get email from the chief technology officers at various “big name” vendors who tell me, “Our technology is constantly enhanced, refreshed, updated, revolutionized, reinvented, whatever.”

Source: http://www.goneclear.com/photos_2003.htm

The reality is that the original Big Five had and still have technology rooted in the mid to late 1990s. I provide some details in my various writings about enterprise search in the Enterprise Search Report, Beyond Search for the “old” Gilbane, Successful Enterprise Search Management, and my June 2011 The New Landscape of Search.

Former Stand Alone Champions of Search

For those of you who have forgotten, here’s a précis:

- Autonomy IDOL, Bayesian, mid 1990s via the 18th century

- Convera, shotgun marriage of “old” Excalibur and “less old” Conquest (which was a product of a former colleague of mine at Booz, Allen & Hamilton, back when it was a top tier consulting firm)

- Endeca, hybrid of Yahoo directory and Inktomi with some jazzy marketing, late 1990s

- Exalead. Early 2000 technology and arguably the best of this elite group of information retrieval technology firms. Exalead is now part of Dassault, the French engineering wizardry firm.

- Fast Search & Transfer, Norwegian university, late 1990s. Now part of Microsoft Corp.

- Fulcrum, now part of OpenText. Dates from the early 1990s and maybe retired. I have lost track.

- Google Search Appliance. Late 1990s technology in an appliance form. The product looks a bit like an orphan to me as Google chases the enterprise cloud. GSA was reworked because “voting” doesn’t help a person in a company find a document, but it seems to be a dead end of sorts.

- IBM Stairs III, recoded in Germany and then kept alive via the Search Manager product and the third-party BRS system, which is now part of the OpenText stable of search solutions. Dates from the mid 1970s. IBM now “loves” open source Lucene. Sort of.

- Oracle Text. Late 1980s via acquisition of Artificial Linguistics.

There are some other interesting and important systems, but these are of interest to dinosaurs like me, not the Gen X and Gen Y azure chip crowd or the “we don’t have any time” procurement teams. These systems are Inquire (supported forward and rearward truncation), Island Search (a useful on-the-fly summarizer from decades ago), and the much loved RECON and SDC Orbit engines. Ah, memories.

What’s important is that the big deals in the last couple of months have been for customers and opportunities to sell consulting and engineering services. The deals are not about search, information retrieval, findability, or information access. The purchasers will talk about the importance of these buzzwords, but in my opinion, the focus is on getting customers and selling them stuff.

Three points:

Enterprise Search Silliness

October 23, 2011

I am back in Kentucky and working through quite a stack of articles which have been sent to me to review. I don’t want to get phone calls from Gen X and Gen Y CEOs, chipper attorneys, and annoyed vulture capitalists, so I won’t do the gory details thing.

I do want to present my view of the enterprise search market. I finished the manuscript for “The New Landscape of Search”, published in June 2011, before the notable acquisitions which have taken place in the discombobulated enterprise search market. Readers of this blog know that I am not too fond of the words “information,” “search”, “enterprise”, “governance”, and a dozen or so buzzwords that Art History majors from Smith have invented in the sales job.

In this write up I want to comment on three topics:

- What’s the reason for the buy outs?

- The chase for the silver bullet which will allow a vendor to close a deal, shooting the competitors dead

- The vapidity of the analyses of the search market.

Same rules apply. Put your comments in the comments section of the blog. Please, do not call and want to “convince” me that a particular firm has the “one, true way”. Also, do not send me email with a friendly salutation like “Hi, Steve.” I am not in a “hi, Steve” mood due to my lousy vision and 67-year-old stamina. What little I have is not going to be applied to emails from people who want to give me a demo, a briefing, or some other Talmudic type of input. Not much magic in search.

What’s the Reason for the Buy Outs?

The reasons will vary by acquirer, but here’s my take on the deals we have been tracking and commenting upon to our paying clients. I had a former client want to talk with me about one of these deals. Surprise. I talk for money. Chalk that up to my age and the experience of the “something for nothing” mentality of search marketers.

The HP Autonomy deal was designed to snag a company with an alleged 20,000 licensees and close to $1.0 billion in revenue, though not a PricewaterhouseCoopers or Deloitte type of consulting revenue stream. HP wants to become more of a services company, and Autonomy’s packagers presented a picture that whipped HP’s Board and management into a frenzy. With the deal, HP gets a shot at services revenue, but there will be a learning curve. I think Meg Whitman of eBay Skype fame will have her hands full with Autonomy’s senior management. My hunch is that Mike Lynch and Andrew Kanter could run HP better than Ms. Whitman, but that’s my opinion.

HP gets a shot at selling higher margin engineering and consulting services. A bonus is the upsell opportunity to Autonomy’s customer base. Is there overlap? Will HP muff the bunny? Will HP’s broader challenges kill this reasonably good opportunity? Those answers appear in my HP Autonomy briefing which, gentle reader, costs money. And Oracle bought Endeca as a “me too” play.

Google and the Perils of Posting

October 21, 2011

I don’t want to make a big deal out of a simple human mistake from a button click. I just had eye surgery, and it is a miracle that I can [a] find my keyboard and [b] make any function on my computers work.

However, I did notice this item this morning and wanted to snag it before it magically disappeared due to mysterious computer gremlins. The item in question is “Last Week I Accidentally Posted”, via Google Plus at this URL. I apologize for the notation style, but Google Plus posts come with the weird use of the “+” sign, which is a killer when running queries on some search systems. Also, there is no title, which means this is more of a James Joyce type of writing than a standard news article or even a blog post from the addled goose in Harrod’s Creek.

To get some context, you can read my original commentary in “Google Amazon Dust Bunnies.” My focus in that write up is squarely on the battle between Google and Amazon, which I think is a more serious confrontation than the unemployed English teachers, aging hippies turned consultants, and the failed yet smarmy Web masters who have reinvented themselves as “search experts” think.

Believe me, Google versus Amazon is going to be interesting. If my research is on the money, the problems between Google and Amazon will escalate to and may surpass the tension that exists between Google and Oracle, Google and Apple, and Google and Viacom. (Well, Viacom may be different because that is a personal and business spat, not just big companies trying to grab the entire supply of apple pies in the cafeteria.)

In the Dust Bunnies write up, I focused on the management context of the information in the original post and the subsequent news stories. In this write up, I want to comment on four aspects of this second post about why Google and Amazon are both so good, so important, and so often misunderstood. If you want me to talk about the writer of these Google Plus essays, stop reading. The individual’s name which appears on the source documents is irrelevant.

1. Altering or Idealizing What Really Happened

I had a college professor, Dr. Philip Crane, who told us in history class in 1963, “When Stalin wanted to change history, he ordered history textbooks to be rewritten.” I don’t know if the anecdote is true or not. Dr. Crane went on to become a US congressman, and you know how reliable those folks’ public statements are. What we have in the original document and this apologia is a rewriting of history. I find this interesting because the author could use other methods to make the content disappear. My question, “Why not?” And, “Why revisit what was a pretty sophomoric tirade involving a couple of big companies?”

2. Suppressing Content with New Content

One of the quirks of modern indexing systems such as Baidu, Jike, and Yandex is that once content is in the index, it can persist. As more content on a particular topic accretes “around” an anchor document, the document becomes more findable. What I find interesting is that despite the removal of the original post, the secondary post continues to “hook” to discussions of that original post. In fact, the snippet I quoted in “Dust Bunnies” comes from a secondary source. I have noted and adapted to “good stuff” disappearing as a primary document. The only evidence of a document’s existence is then the secondary references. As these expand, the original item becomes more visible and more difficult to suppress. In short, the author of the apologia is ensuring the findability of the gaffe. Fascinating to me.
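A toy illustration of the “accretion” effect: if findability is approximated by counting how many other pages point at a document, a removed original keeps gaining score from every new page that discusses it. The inbound-reference count below is an assumption made for illustration only; Baidu, Jike, Yandex, and other engines use far richer signals, and the page names are hypothetical.

```python
from collections import Counter


def findability_scores(pages: dict[str, list[str]]) -> Counter:
    """Count how many distinct pages point at each target, whether or not the target still exists."""
    counts: Counter = Counter()
    for source, targets in pages.items():
        for target in set(targets):   # count each source at most once per target
            if target != source:      # ignore self-references
                counts[target] += 1
    return counts
```

In the usage that follows, the deleted original is not one of the crawled pages at all, yet it ends up with the highest score because the apology and two news stories all point at it.

```python
pages = {
    "apology": ["original"],
    "news1": ["original", "apology"],
    "news2": ["original"],
}
scores = findability_scores(pages)   # "original" scores 3, "apology" scores 1
```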

3. Amazon: A Problem for Google

Observations about Content Shaping

October 3, 2011

Writer’s Note: Stephen E Arnold can be surprising. He asked me to review the text of his keynote speech at ISS World Americas October 2011 conference, which is described as “America’s premier intelligence gathering and high technology criminal investigation conference.” Mr. Arnold has moved from government work to a life of semi retirement in Harrod’s Creek. I am one of the 20 somethings against whom he rails in his Web log posts and columns. Nevertheless, he continues to rely on my editorial skills, and I have to admit I find his approach to topics interesting and thought provoking. He asked me to summarize his keynote, which I attempted to do. If you have questions about the issues he addresses, he has asked me to invite you to write him at seaky2000 at yahoo dot com. Prepare to find a different approach to the content mechanisms he touches upon. (Yes, you can believe this write up.) If you want to register, point your browser at www.issworldtraining.com.— Andrea Hayden

Research results manipulation is not a topic that is new in the era of the Internet. Information has been manipulated by individuals in record keeping and researching for ages. People want to (and can) affect how and what information is presented. Information can also be manipulated not just by people, but by the accidents of numerical recipes.

However, even though this is not a new issue, information manipulation in this age is much more frequent than many believe, and the information we are trying to gather is much more accessible. I want to answer the question, “What do information analysts need to know about this interesting variant of disinformation?”

The volume of data in a digital environment means that algorithms or numerical recipes process content in digital form. The search and content processing vendors can acquire as much or as little content as the system administrator wishes.

In addition to this, most people don’t know that all of the leading search engines specify what content to acquire, how much content to process, and when to look for new content. This is where search engine optimization comes in. Boosting a ranking in a search result is believed to be an important factor for many projects, businesses, and agencies.

Intelligence professionals should realize that conforming to the Webmaster guidelines set forth by Web indexing services will result in a grade much like the scoring of an essay with a set rubric. Documents should conform to these set guidelines to result in a higher search result ranking. This works because most researchers rely on the relevance ranking to provide the starting point for research. Well-written content which conforms to the guidelines will then frame the research on what is or is not important. Such content can be shaped in a number of ways.

Lucid Imagination: Open Source Search Reaches for Big Data

September 30, 2011

We are wrapping up a report about the challenges “big data” pose to organizations. Perhaps the most interesting outcome of our research is that there are very few search and content processing systems which can cope with the digital information required by some organizations. Three examples merit listing before I comment on open source search and “big data”.

The first example is the challenge of filtering the information organizations need that is produced within the organization and by the organization’s staff, contractors, and advisors. We learned in the course of our investigation that the promises of processing updates to Web pages, price lists, contracts, sales and marketing collateral, and other routine information are largely unmet. One of the problems is that the disparate content types have different update and change cycles. The most widely used content management system based on our research results is SharePoint, and SharePoint is not able to deliver a comprehensive listing of content without significant latency. Fixes are available, but these are engineering tasks which consume resources. Cloud solutions do not fare much better, once again due to latency. The bottom line is that for information produced within an organization, employees are mostly unable to locate information without a manual double check. Latency is the problem. We did identify one system which delivered documented latency across disparate content types of 10 to 15 minutes. The solution is available from Exalead, but the other vendors’ systems were not able to match this performance in putting fresh, timely information produced within an organization in front of system users. Shocked? We were.
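The post does not say how latency was measured. One simple way to produce a per-content-type figure like “10 to 15 minutes” is sketched here. It assumes you log when each document changed and when the change became searchable, then take the median gap per content type. The event format and function name are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def latency_by_type(events: list[tuple[str, datetime, datetime]]) -> dict[str, timedelta]:
    """Median gap between a document change and its appearance in the index, per content type.

    Each event is (content_type, changed_at, indexed_at).
    """
    gaps: dict[str, list[float]] = {}
    for content_type, changed_at, indexed_at in events:
        gaps.setdefault(content_type, []).append((indexed_at - changed_at).total_seconds())
    return {t: timedelta(seconds=median(v)) for t, v in gaps.items()}
```

Reporting per content type, rather than one overall average, matters because, as noted above, price lists, contracts, and Web pages change on different cycles. A single fast content type can hide a slow one.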

Reducing latency in search and content processing systems is a major challenge. Vendors often lack the resources required to solve a “hard problem,” so “easy problems” are positioned as the key to improving information access. Is latency a popular topic? A few vendors do address the issue; for example, Digital Reasoning and Exalead.

Second, when organizations tap into content produced by third parties, the latency problem becomes more severe. There is the issue of the inefficiency and scaling of frequent index updates. But the larger problem is that once an organization “goes outside” for information, additional variables are introduced. In order to process the broad range of content available from publicly accessible Web sites or the specialized file types used by certain third-party content producers, connectors become a factor. Most search vendors obtain connectors from third parties. These work pretty much as advertised for common sources such as Lotus Notes. However, when one of the targeted Web sites, such as that of a commercial news service or a third-party research firm, makes a change, the content acquisition system cannot acquire content until the connectors are “fixed”. No problem as long as the company needing the information is prepared to wait. In my experience, broken connectors introduce yet another variable. Again, no problem unless critical information needed to close a deal is overlooked.
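Why a site change stalls acquisition is easiest to see in a toy connector. The sketch below is hypothetical: the `fetch_headlines` function, the `<h2 class="headline">` layout rule, and the sample pages are invented for illustration and do not describe any vendor's connector. It shows the dependency on a source's markup and one way to make breakage visible rather than silent.

```python
import re
from typing import List


class ConnectorBroken(Exception):
    """Raised when a source no longer matches the layout a connector expects."""


# Hypothetical layout rule for one source: headlines sit in <h2 class="headline">.
HEADLINE = re.compile(r'<h2 class="headline">(.*?)</h2>', re.DOTALL)


def fetch_headlines(html: str) -> List[str]:
    """Extract headlines, failing loudly if the expected markup has vanished."""
    found = [h.strip() for h in HEADLINE.findall(html)]
    if not found:
        # After a redesign an empty list would look like "no news today";
        # raising makes the broken connector visible instead.
        raise ConnectorBroken("expected headline markup not found")
    return found


before = '<h2 class="headline">Rates rise</h2><h2 class="headline">Merger talks</h2>'
after = '<div class="story-title">Rates rise</div>'
print(fetch_headlines(before))  # ['Rates rise', 'Merger talks']
try:
    fetch_headlines(after)
except ConnectorBroken as err:
    print("connector needs a fix:", err)
```

A production connector would need a better signal than an empty result, since a source can legitimately have nothing new; the point here is only that the connector's dependence on the source's layout is what breaks.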

Traditional Entity Extraction’s Six Weaknesses

September 26, 2011

Editor’s Note: This is an article written by Tim Estes, founder of Digital Reasoning, one of the world’s leading providers of technology for entity based analytics. You can learn more about Digital Reasoning at www.digitalreasoning.com.

Most university programming courses ignore entity extraction. Some professors talk about the challenges of identifying people, places, things, events, Social Security Numbers and leave the rest to the students. Other professors may have an assignment related to parsing text and detecting anomalies or bound phrases. But most of those emerging with a degree in computer science consign the challenge of entity extraction to the Miscellaneous file.

Entity extraction means processing text to identify, tag, and properly account for those elements that are the names of people, organizations, and locations, as well as numbers and expressions such as a telephone number, among other items. An entity can consist of a single word like Cher or a bound sequence of words like White House. Figuring out names is tough for several reasons. Many names exist in richly varied forms. You can find interesting naming conventions in street addresses in Madrid, Spain, and in the name of the owner of a falafel shop in Tripoli.
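For patterned entities such as telephone numbers or Social Security numbers, even a naive extractor gets some distance with regular expressions, while multi-word names like White House need a list of bound phrases. The sketch below is a deliberately simple, hypothetical illustration of that split. It is not Digital Reasoning's method, and the patterns assume U.S.-style formats only.

```python
import re
from typing import List, Tuple

# Hypothetical patterns for U.S.-style formats only.
PATTERNS = {
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-. ]\d{3}[-.]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical bound phrases: multi-word names treated as a single entity.
BOUND_PHRASES = {"White House": "LOCATION", "Cher": "PERSON"}


def extract(text: str) -> List[Tuple[str, str]]:
    """Return (label, surface form) pairs found by pattern match or phrase lookup."""
    hits = []
    for label, pattern in PATTERNS.items():
        hits.extend((label, m.group(0)) for m in pattern.finditer(text))
    for phrase, label in BOUND_PHRASES.items():
        # Word boundaries keep "Cher" from matching inside "Cherry".
        if re.search(r"\b" + re.escape(phrase) + r"\b", text):
            hits.append((label, phrase))
    return hits


print(extract("Call the White House at 202-456-1111; SSN 123-45-6789."))
# [('PHONE', '202-456-1111'), ('SSN', '123-45-6789'), ('LOCATION', 'White House')]
```

Rules like these cover the easy, well-formatted cases; richly varied names such as Spanish street addresses or transliterated Arabic names are exactly where they run out.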

Entities, as information retrieval experts have learned since the first DARPA conference on the subject in 1987, are quite important to certain types of content analysis. Digital Reasoning has been working for more than 11 years on entity extraction and related content processing problems. Entity-oriented analytics have become very important as companies cope with too much data, need to understand the meaning of that data and not just its statistics, and, finally, need to understand entities in context, which is critical to understanding code terms and similar usage.

I want to outline the six weaknesses of traditional entity extraction and describe Digital Reasoning’s patented, fully automated method. Let’s look at the weaknesses.

1 Prior Knowledge

Traditional entity extraction systems assume that the system will “know” about the entities. This information has been obtained via training or specialized knowledge bases. The idea is that the system processes content similar to what it will process when fully operational. When the system locates an entity, or a human “helps” the system locate one, the software will “remember” the entity. In effect, entity extraction assumes that the system either has a list of entities to identify and tag or that a human will interact with various parsing methods to “teach” the system about the entities. The obvious problem is that when a new entity appears and is mentioned only once, the system may not identify it.
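The prior-knowledge weakness is easy to demonstrate with a list-driven tagger. In this hypothetical sketch, the knowledge base, the `tag_known` function, and the company name “Zarvox Analytics” are all invented for illustration and do not describe any particular vendor's product. The tagger finds only what its list already contains, so a newcomer passes through untagged until someone adds it.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical knowledge base assembled during training or by a curator.
KNOWN_ENTITIES: Dict[str, str] = {"Exalead": "ORGANIZATION", "Madrid": "LOCATION"}


def tag_known(text: str, known: Dict[str, str]) -> List[Tuple[str, str]]:
    """Tag only the entities already in the knowledge base, in list order."""
    return [
        (name, label)
        for name, label in known.items()
        if re.search(r"\b" + re.escape(name) + r"\b", text)
    ]


text = "Exalead opened an office in Madrid; Zarvox Analytics announced a rival."
print(tag_known(text, KNOWN_ENTITIES))
# [('Exalead', 'ORGANIZATION'), ('Madrid', 'LOCATION')]  <- the newcomer is missed

# Only after someone "teaches" the system does the new name get tagged.
taught = {**KNOWN_ENTITIES, "Zarvox Analytics": "ORGANIZATION"}
print(tag_known(text, taught)[-1])
# ('Zarvox Analytics', 'ORGANIZATION')
```

The manual “teach” step in the last lines is also where the human cost discussed next enters the picture.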

2 Human Inputs

I have already mentioned the need for a human to interact with the system. The approach is widely used, even in the sophisticated systems associated with firms such as Hewlett Packard Autonomy and Microsoft Fast Search. The problem with relying on humans is a time and cost equation. As the volume of data to be processed goes up, more human time is needed to make sure the system is identifying and tagging correctly. In our era of data doubling every four months, the cost of coping with massive data flows makes human-intermediated entity identification impractical.
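The arithmetic behind “impractical” is worth spelling out. If data doubles every four months, volume grows by a factor of 2^(t/4) after t months: 8 times in a year, 64 times in two. The sketch below assumes hypothetical figures (100,000 documents a month today, a reviewer checking 200 documents an hour, 160 working hours per person per month) and shows how human review headcount tracks that curve.

```python
def growth_factor(months: float, doubling_months: float = 4.0) -> float:
    """Volume multiplier after `months` if data doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


def reviewers_needed(docs_per_month: float,
                     docs_per_hour: float = 200.0,
                     hours_per_person: float = 160.0) -> float:
    """People required to review a monthly document stream at a fixed pace."""
    return docs_per_month / docs_per_hour / hours_per_person


start = 100_000  # hypothetical documents per month today
for months in (0, 12, 24):
    volume = start * growth_factor(months)
    print(months, int(volume), reviewers_needed(volume))
```

Under these assumptions the loop reports about 3 reviewers today, 25 after a year, and 200 after two years. Whatever the real rates, headcount scales linearly with volume, and volume here grows exponentially.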

3 Slow Throughput

Most content processing systems talk about high performance, scalability, and massively parallel computing. The reality is that most of the subsystems required to manipulate content for the purpose of identifying, tagging, and performing other operations on entities are bottlenecks. What is the solution? Most vendors of entity extraction solutions push the problem back to the client. Most information technology managers solve performance problems by adding hardware to either an on-premises or a cloud-based solution. The problem is that adding hardware is at best a temporary fix. In the present era of big data, content volume will keep increasing, and the appetite for adding hardware lessens in a business climate characterized by financial constraints. Not surprisingly, entity extraction systems are often “turned off” because the client cannot afford the infrastructure required to deal with the volume of data to be processed. A great system that is too expensive to run introduces flaws into the analytic process.