Google Leadership Versus Valued Googlers

August 23, 2024

![green-dino_thumb_thumb_thumb_thumb_t[1] green-dino_thumb_thumb_thumb_thumb_t[1]](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb-2.gif) This essay is the work of a dumb dinobaby. No smart software required.

The summer in rural Kentucky lingers on. About 2,300 miles away, at the Sundar & Prabhakar Comedy Show’s nerve center, the Alphabet Google YouTube DeepMind entity is also experiencing “cyclonic heating from chaotic employee motion.” What’s this mean? Unsteady waters? Heat stroke? Confusion? Hallucinations? My goodness.

The Google leadership faces another round of employee pushback. I read “Workers at Google DeepMind Push Company to Drop Military Contracts.”

How could the Google smart software fail to predict this pattern? My view is that smart software has some limitations when it comes to managing AI wizards. Furthermore, Google senior managers have not been able to extract full knowledge value from the tools at their disposal to deal with complexity. Time Magazine reports:

Nearly 200 workers inside Google DeepMind, the company’s AI division, signed a letter calling on the tech giant to drop its contracts with military organizations earlier this year, according to a copy of the document reviewed by TIME and five people with knowledge of the matter. The letter circulated amid growing concerns inside the AI lab that its technology is being sold to militaries engaged in warfare, in what the workers say is a violation of Google’s own AI rules.

Why are AI Googlers grousing about military work? My personal view is that the recent hagiography of Palantir’s Alex Karp and the tie-up between Microsoft and Palantir for Impact Level 5 services mean that the US government is gearing up to spend some big bucks on warfighting technology. Google wants, really needs, this revenue. Penalties for its frisky behavior, which Judge Mehta describes as “monopolistic,” could put a hit in the git along of Google ad revenue. Therefore, Google’s smart software can meet the hunger militaries have for intelligent software to perform a wide variety of functions. As the Russian special operation makes clear, “meat based” warfare is somewhat inefficient. Ukrainian garage-built drones with some AI bolted on perform better than a wave of 18-year-olds with rifles and a handful of bullets. The example which sticks in my mind is a Ukrainian drone spotting a Russian soldier in the field partially obscured by bushes. The individual is attending to nature’s call. The drone spots the “shape” and explodes near the Russian infantryman.

A former consultant faces an interpersonal Waterloo. How did that work out for Napoleon? Thanks, MSFT Copilot. Are you guys working on the IPv6 issue? Busy weekend ahead?

Those who study warfare probably have their own ah-ha moment.

The Time Magazine write up adds:

Those principles state the company [Google/DeepMind] will not pursue applications of AI that are likely to cause “overall harm,” contribute to weapons or other technologies whose “principal purpose or implementation” is to cause injury, or build technologies “whose purpose contravenes widely accepted principles of international law and human rights.” The letter says its signatories are concerned with “ensuring that Google’s AI Principles are upheld,” and adds: “We believe [DeepMind’s] leadership shares our concerns.”

I love it when wizards “believe” something.

Will the Sundar & Prabhakar brain trust opt for believing or for banking revenue from government agencies eager to gain access to advanced artificial intelligence services and systems? My view is that the “believers” underestimate the uncertainty arising from the potential sanctions, fines, or corporate deconstruction that Judge Mehta’s decision presents.

The article adds this bit of color about the Sundar & Prabhakar response time to Googlers’ concern about warfighting applications:

The [objecting employees’] letter calls on DeepMind’s leaders to investigate allegations that militaries and weapons manufacturers are Google Cloud users; terminate access to DeepMind technology for military users; and set up a new governance body responsible for preventing DeepMind technology from being used by military clients in the future. Three months on from the letter’s circulation, Google has done none of those things, according to four people with knowledge of the matter. “We have received no meaningful response from leadership,” one said, “and we are growing increasingly frustrated.”

“No meaningful response” suggests that the Alphabet Google YouTube DeepMind rhetoric is not satisfactory.

The write up concludes with this paragraph:

At a DeepMind town hall event in June, executives were asked to respond to the letter, according to three people with knowledge of the matter. DeepMind’s chief operating officer Lila Ibrahim answered the question. She told employees that DeepMind would not design or deploy any AI applications for weaponry or mass surveillance, and that Google Cloud customers were legally bound by the company’s terms of service and acceptable use policy, according to a set of notes taken during the meeting that were reviewed by TIME. Ibrahim added that she was proud of Google’s track record of advancing safe and responsible AI, and that it was the reason she chose to join, and stay at, the company.

With Microsoft and Palantir, among others, poised to capture some end-of-fiscal-year money from certain US government budgets, the comedy act’s headquarters’ planners want a piece of the action. How will the Sundar & Prabhakar Comedy Act handle the situation? Why procrastinate? Perhaps the comedy act hopes the issue will just go away. The complaining employees have short attention spans, rely on TikTok-type services for information, and can be terminated like other Googlers who grouse, picket, boycott the Foosball table, or quiet quit while working on a personal start up.

The approach worked reasonably well before Judge Mehta labeled Google a monopoly operation. It worked when ad dollars flowed like latte at Philz Coffee. But today is different, and the unsettled personnel are not a joke and add to the uncertainty some have about the Google we know and love.

Stephen E Arnold, August 23, 2024

AI Balloon: Losing Air and Boring People

August 22, 2024

Though tech bros who went all-in on AI still promise huge breakthroughs just over the horizon, Windows Central’s Kevin Okemwa warns: “The Generative AI Bubble Might Burst, Sending the Tech to an Early Deathbed Before Its Prime: ‘Don’t Believe the Hype’.” Sadly, it is probably too late to save certain career paths, like coding, from an AI takeover. But perhaps a slowdown would conserve some valuable resources. Wouldn’t that be nice? The write-up observes:

“While AI has opened up the world to endless opportunities and untapped potential, its hype might be short-lived, with challenges abounding. Aside from its high water and power demands, recent studies show that AI might be a fad and further claim that 30% of its projects will be abandoned after proof of concept. Similar sentiments are echoed in a recent Blood In The Machine newsletter, which points out critical issues that might potentially lead to ‘the beginning of the end of the generative AI boom.’ From the Blood in the Machine newsletter analysis by Brian Merchant, who is also the Los Angeles Times’ technology columnist:

‘This is it. Generative AI, as a commercial tech phenomenon, has reached its apex. The hype is evaporating. The tech is too unreliable, too often. The vibes are terrible. The air is escaping from the bubble. To me, the question is more about whether the air will rush out all at once, sending the tech sector careening downward like a balloon that someone blew up, failed to tie off properly, and let go—or, more slowly, shrinking down to size in gradual sputters, while emitting embarrassing fart sounds, like a balloon being deliberately pinched around the opening by a smirking teenager.’”

Such evocative imagery. Merchant’s article also notes that, though Enterprise AI was meant to be the way AI firms made their money, it is turning out to be a dud. There are several reasons for this, not the least of which is AI models’ tendency to “hallucinate.”

Okemwa offers several points to support Merchant’s deflating-balloon claim. For example, Microsoft was recently criticized by investors for wasting their money on AI technology. Then there is NVIDIA: The chipmaker recently became the most valuable company in the world thanks to astronomical demand for its hardware to power AI projects. However, a delay of its latest powerful chip dropped its stock’s value by 5%, and market experts suspect its value will continue to decline. The write-up also points to trouble at generative AI’s flagship firm, OpenAI. The company is plagued by a disturbing exodus of top executives, rumors of pending bankruptcy, and a pesky lawsuit from Elon Musk.

Speaking of Mr. Musk, how do those who say AI will kill us all respond to the potential AI downturn? Crickets.

Cynthia Murrell, August 22, 2024

Telegram Rolled Over for Russia. Has Google YouTube Become a Circus Animal Too?

August 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Most of the people with whom I interact do not know that Telegram apparently took steps to filter content which the Kremlin deemed unsuitable for Russian citizens. Information reaching me in late April 2024 asserted that Ukrainian government units were no longer able to use Telegram Messenger functions to disseminate information to Telegram users in Russia about Mr. Putin’s “special operation.” Telegram has made a big deal about its commitment to free speech, and it has taken a very, very light touch with censoring content and transactions on its basic and “secret” messaging service. Then, at the end of April 2024, Mr. Pavel Durov flipped, apparently in response to either a request or a threat from someone in Russia. The change in direction for a messaging app with 900 million users is a peanut compared to Meta WhatsApp’s two billion or so. But when free speech becomes obeisance, I take note.

I have been tracking Russian YouTubers because I have found that some of the videos provide useful insights into the impact of the “special operation” on prices, the attitudes of young people, imagery about the condition of housing, information about day-to-day banking matters, and the demeanor of people in the background of some YouTube, TikTok, Rutube, and Kick videos.

I want to mention that Alphabet Google YouTube a couple of years ago took action to suspend Russian state channels from earning advertising revenue from the Google “we pay you” platform. Facebook and the “old” Twitter did this as well. I have heard that Google and YouTube leadership understood that Ukraine wanted those “propaganda channels” blocked. The online advertising giant complied. About 9,000 channels were demonetized or made difficult to find (to be fair, finding certain information on YouTube is not an easy task.) Now Russia has convinced Google to respond to its wishes.

So what? To most people, this is not important. Just block the “bad” content. Get on with life.

I watched a video called “Demonetized! Update and the Future.” The presenter is a former business manager who turned to YouTube to document his view of Russian political, business, and social events. The gentleman — allegedly named “Konstantin” — worked in the US. He returned to Russia and then moved to Uzbekistan. His YouTube channel is (was) titled Inside Russia.

The video “Demonetized! Update and the Future” caught my attention. Please note that the video may be unavailable when you read this blog post. “Demonetization” is Google-speak for cutting off advertising revenue, both to YouTube itself and to the creator.

Several other Russian vloggers producing English language content about Russia, the Land of Putin on the Fritz, have expressed concern about their vlogging since Russia slowed down YouTube bandwidth making some content unwatchable. Others have taken steps to avoid problems; for example, creators Svetlana, Niki, and Nfkrz have left Russia. Others are keeping a low profile.

This raises questions about the management approach in a large and mostly unregulated American high-technology company. According to Inside Russia’s owner Konstantin, YouTube offered no explanation for the demonetization of the channel. Konstantin asserts that YouTube is not providing information to him about its unilateral action. My hunch is that he does not want to come out and say, “The Kremlin pressured an American company to cut off my information about the impact of the ‘special operation’ on Russia.”

Several observations:

- I have heard but not verified that Apple has cooperated with the Kremlin’s wish for certain content to be blocked so that it does not quickly reach Russian citizens. It is unclear what has caused the US companies to knuckle under. My initial thought was, “Money.” These outfits want to obtain revenues from Russia and its federation, hoping to avoid a permanent ban when the “special operation” ends. The inducements (and I am speculating) might also have a kinetic component. That occurs when a person falls out of a third story window and then impacts the ground. Yes, falling out of windows can happen.

- I surmise that the vloggers who are “demonetized” are probably on a list. These individuals and their families are likely to have a tough time getting a Russian government job, a visa, or a passport. The list may have the address for the individual who is generating unacceptable-to-the-Kremlin content. (There is a Google Map for Uzbekistan’s suburb where Konstantin may be living.)

- It is possible that YouTube is doing nothing other than letting its “algorithm” make decisions. Demonetizing Russian YouTubers is nothing more than an unintended consequence of no material significance.

- Does YouTube deserve some attention because its mostly anything-goes approach to content seems to be malleable? For example, I can find information about how to steal a commercial software program owned by a German company via the YouTube search box. Why is this crime not filtered? Is a fellow talking about the “special operation” subject to a different set of rules?

Screen shot of suggested terms for the prompt “Magix Vegas Pro 21 crack,” taken on August 16, 2024, at 2:24 pm US Eastern.

I have seen some interesting corporate actions in my 80 years. But the idea that a country, not particularly friendly to the U.S. at this time, can cause an American company to take what appears to be a specific step designed to curtail information flow is remarkable. Perhaps when Alphabet executives explain to Congress the filtering of certain American news related to events linked to the current presidential campaign, more information will be made available?

If Konstantin’s allegations about demonetization are accurate, what’s next on Alphabet, Google, and YouTube’s to-do list for information snuffing or information cleansing?

Stephen E Arnold, August 18, 2024

Pragmatic AI: Individualized Monitoring

August 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

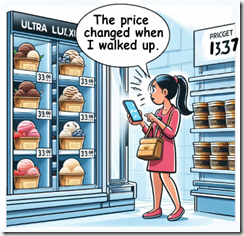

In June 2024 at the TechnoSecurity & Digital Forensics conference, one of the cyber investigators asked me, “What are some practical uses of AI in law enforcement?” I told the person that I would send him a summary of my earlier lecture called “AI for LE.” He said, “Thanks, but what should I watch to see some AI in action?” I told him to pay attention to the Kroger pricing methods. I had heard that Kroger was experimenting with altering prices based on certain signals. The example I gave is that Kroger stores located in a certain zip code would use dynamic pricing. The idea is similar to Coca-Cola’s tests of a vending machine that charged more when the weather was hot. In the Kroger example, a hot day would trigger a change in the price of a frozen dessert. He replied, “Kroger?” I said, “Yes, Kroger is experimenting with AI in order to detect specific behaviors and modify prices to reflect those signals.” What Kroger is doing will be coming to law enforcement and intelligence operations. Smart software monitors the behavior of a prisoner, for example, and automatically notifies an investigator when a certain signal is received. I recall mentioning that smart software, signals, and behavior change or direct action will become key components of a cyber investigator’s tool kit. He said, laughing, “Kroger. Interesting.”

Thanks, MSFT Copilot. Good enough.
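The hot-day, frozen-dessert example in that conversation boils down to a rule that maps a signal to a price. Here is a minimal Python sketch, assuming a single temperature signal, a hypothetical 90°F threshold, and a hypothetical 10% markup. The class name and numbers are made up for illustration; nothing here describes Kroger’s or Coca-Cola’s actual systems.

```python
from dataclasses import dataclass


@dataclass
class SignalPriceRule:
    """Hypothetical rule: mark up an item when a signal crosses a threshold."""
    item: str
    base_price: float
    threshold: float  # assumed trigger value, here degrees Fahrenheit
    markup: float     # assumed fractional markup; 0.10 means +10%

    def price_for(self, signal: float) -> float:
        # Below the threshold, the shelf price stays at the base price.
        if signal < self.threshold:
            return self.base_price
        # At or above the threshold, apply the markup and round to cents.
        return round(self.base_price * (1 + self.markup), 2)


rule = SignalPriceRule("frozen dessert", base_price=4.99, threshold=90.0, markup=0.10)
print(rule.price_for(75.0))  # 4.99
print(rule.price_for(95.0))  # 5.49
```

The same pattern, a watched signal plus an automatic action, fits the prisoner-monitoring example: replace the price change with a notification to an investigator.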

I learned that Kroger’s surveillance concept is no longer just a rumor discussed at a neighborhood get-together. “‘Corporate Greed Is Out of Control’: Warren Slams Kroger’s AI Pricing Scheme” reveals that elected officials and probably some consumer protection officials may be aware of the company’s plans for smart software. The write up reports:

Warren (D-Mass.) was joined by Sen. Bob Casey (D-Pa.) on Wednesday in writing a letter to the chairman and CEO of the Kroger Company, Rodney McMullen, raising concerns about how the company’s collaboration with AI company IntelligenceNode could result in both privacy violations and worsened inequality as customers are forced to pay more based on personal data Kroger gathers about them “to determine how much price hiking [they] can tolerate.” As the senators wrote, the chain first introduced dynamic pricing in 2018 and expanded to 500 of its nearly 3,000 stores last year. The company has partnered with Microsoft to develop an Electronic Shelving Label (ESL) system known as Enhanced Display for Grocery Environment (EDGE), using a digital tag to display prices in stores so that employees can change prices throughout the day with the click of a button.

My view is that AI orchestration will allow additional features and functions. Some of these may be appropriate for use in policeware and intelware systems. Kroger makes an effort to get individuals to sign up for a discount card. Also, Kroger wants users to install the Kroger app. The idea is that discounts or other incentives may be “awarded” to the customer who takes advantage of the services.

However, I am speculating that AI orchestration will allow Kroger to implement a chain of actions like this:

- Customer with a mobile phone enters the store

- The store “acknowledges” the customer

- The customer’s spending profile is accessed

- The customer is “known” to purchase upscale branded ice cream

- The price for that item automatically changes as the customer approaches the display

- The system records the item bar code and the customer ID number

- At check out, the customer is charged the higher price.
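The seven steps in that chain can be sketched as a small pipeline. This is a minimal Python sketch under loudly stated assumptions: the device-to-profile lookup, the `usual_items` profile, and the flat 10% markup are all hypothetical, and none of it describes how Kroger, IntelligenceNode, or the Microsoft EDGE shelf labels actually work.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Customer:
    customer_id: str
    usual_items: set[str]  # hypothetical spending profile


@dataclass
class Store:
    shelf_prices: dict[str, float]
    profiles: dict[str, Customer]  # hypothetical phone/app ID -> profile
    markup: float = 0.10           # assumed markup on "known" preferences
    ledger: list[tuple[str, str, float]] = field(default_factory=list)

    def acknowledge(self, device_id: str) -> Customer | None:
        # Steps 1-3: a phone enters, the store recognizes it, the profile loads.
        return self.profiles.get(device_id)

    def display_price(self, customer: Customer | None, item: str) -> float:
        # Steps 4-5: mark up items the profile says this shopper usually buys.
        base = self.shelf_prices[item]
        if customer is not None and item in customer.usual_items:
            return round(base * (1 + self.markup), 2)
        return base

    def scan(self, customer: Customer | None, item: str) -> None:
        # Step 6: record the item and customer ID at the displayed price.
        cid = customer.customer_id if customer is not None else "anonymous"
        self.ledger.append((cid, item, self.display_price(customer, item)))

    def checkout(self, customer_id: str) -> float:
        # Step 7: charge the recorded (possibly higher) prices.
        return round(sum(p for cid, _, p in self.ledger if cid == customer_id), 2)


store = Store(
    shelf_prices={"premium ice cream": 6.00, "milk": 3.50},
    profiles={"phone-123": Customer("C-42", {"premium ice cream"})},
)
shopper = store.acknowledge("phone-123")
store.scan(shopper, "premium ice cream")
store.scan(shopper, "milk")
print(store.checkout("C-42"))  # 10.1
```

The “known” shopper pays $6.60 for the ice cream instead of $6.00; an unrecognized shopper would see the plain shelf price.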

Is this type of AI orchestration possible? Yes. Is it practical for a grocery store to deploy? Yes, because Kroger uses third parties to provide its systems and technical capabilities for many applications.

How does this apply to law enforcement? Kroger’s use of individualized tracking may provide some ideas for cyber investigators.

As large firms use their resources to deploy state-of-the-art technology to boost sales, know the customer, and adjust prices at the individual shopper level, the benefits of smart software become increasingly visible. Some specialized software systems lag behind commercial systems. Among the reasons are budget constraints and the often complicated procurement processes.

But what is at the grocery store is going to become a standard function in many specialized software systems. These will range from security monitoring systems which can follow a person of interest in a specific area to systems which automatically update a person of interest’s location on a geographic information module.
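In its simplest form, following a person of interest in a specific area reduces to checking each new position fix against a watch area. Here is a minimal Python sketch, assuming circular geofences, a spherical Earth, and arbitrary made-up coordinates. The function and class names are hypothetical; real policeware and intelware products are not described here and surely do far more.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Geofence:
    """Hypothetical circular watch area around a point."""
    name: str
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters, assuming a spherical Earth (r = 6,371 km)."""
    r = 6_371_000.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def update_location(track: list[tuple[float, float]], lat: float, lon: float,
                    fences: list[Geofence]) -> list[str]:
    """Append a position fix to the track; return names of fences it falls inside."""
    track.append((lat, lon))
    return [f.name for f in fences if haversine_m(f.lat, f.lon, lat, lon) <= f.radius_m]


fences = [Geofence("area of interest", 38.2527, -85.7585, 500.0)]
track: list[tuple[float, float]] = []
print(update_location(track, 38.2530, -85.7580, fences))  # ['area of interest']
print(update_location(track, 38.3000, -85.7585, fences))  # []
```

A non-empty result is the “signal”; what happens next (an alert, a map update) is the “direct action.”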

If you are interested in watching smart software and individualized “smart” actions, just pay attention at Kroger or a similar retail outfit.

Stephen E Arnold, August 15, 2024

Canadians Unhappy about Tax on Streaming Video

August 15, 2024

Unfortunately the movie industry has tanked worldwide because streaming services have democratized delivery. Producers, directors, actors, and other industry professionals are all feeling the pain of tighter purse strings. The problems aren’t limited to Hollywood, because Morningstar explains that the US’s northern neighbor is also feeling the strain: “The Motion Picture Association-Canada Asks Canada Appeal Court To Stop Proposed Tax On Streaming Revenue.”

A group representing big entertainment companies (Walt Disney, Netflix, Warner Bros. Discovery, Paramount Global, and more) is asking a Canadian court to stop a law that would force the companies to pay 5% of their sales to the country to fund local news and other domestic content. The Motion Picture Association-Canada stated that the tax imposed by the Canadian Radio-television and Telecommunications Commission oversteps the regulator’s authority. The group representing the Hollywood bigwigs also mentions that its clients spend billions in Canada every year.

The representative group is also arguing that the tax would force Canadian subscribers to pay more for streaming services and that the companies might consider leaving the northern country. The Canadian Radio-television and Telecommunications Commission countered that without the tax, local content might not be made or distributed anymore. Hollywood’s lawyers don’t like it at all:

“In their filing with Canada’s Federal Court of Appeal, lawyers for the group say the regulator didn’t reveal “any basis” for why foreign streamers are required to contribute to the production of local television and radio newscasts. The broadcast regulator “concluded, without evidence, that ‘there is a need to increase support for news production,'” the lawyers said in their filing. ‘Imposing on foreign online undertakings a requirement to fund news production is not appropriate in the light of the nature of the services that foreign online undertakings provide.’”

Canada will probably keep the tax, and Hollywood, rather than having its executives eat the costs, will pass it on to consumers. Consumers will be shafted, because their entertainment streaming services will continue to become more expensive.

Whitney Grace, August 15, 2024

Takedown Notices May Slightly Boost Sales of Content

August 13, 2024

It looks like take-down notices might help sales of legitimate books. A little bit. TorrentFreak shares the findings from a study by the University of Warsaw, Poland, in “Taking Pirated Copies Offline Can Benefit Book Sales, Research Finds.” Writer Ernesto Van der Sar explains:

“This year alone, Google has processed hundreds of millions of takedown requests on behalf of publishers, at a frequency we have never seen before. The same publishers also target the pirate sites and their hosting providers directly, hoping to achieve results. Thus far, little is known about the effectiveness of these measures. In theory, takedowns are supposed to lead to limited availability of pirate sources and a subsequent increase in legitimate sales. But does it really work that way? To find out more, researchers from the University of Warsaw, Poland, set up a field experiment. They reached out to several major publishers and partnered with an anti-piracy outfit, to test whether takedown efforts have a measurable effect on legitimate book sales.”

See the write-up for the team’s methodology. There is a caveat: The study included only print books, because Poland’s e-book market is too small to be statistically reliable. This is an important detail, since digital e-books are a more direct swap for pirated copies found online. Even so, the researchers found takedown notices produced a slight bump in print-book sales. Research assistants confirmed they could find fewer pirated copies, and the ones they did find were harder to unearth. The write-up notes more research is needed before any conclusions can be drawn.

How hard will publishers tug at this thread? By this logic, if one closes libraries that will help book sales, too. Eliminating review copies may cause some sales. Why not publish books and keep them secret until Amazon provides a link? So many money-grubbing possibilities, and all it would cost is an educated public.

Cynthia Murrell, August 13, 2024

Apple Does Not Just Take Money from Google

August 12, 2024

In an apparent snub to Nvidia, reports MacRumors, “Apple Used Google Tensor Chips to Develop Apple Intelligence.” The decision to go with Google’s TPUv5p chips over Nvidia’s hardware is surprising, since Nvidia has been dominating the AI processor market. (Though some suggest that will soon change.) Citing Apple’s paper on the subject, writer Hartley Charlton reveals:

“The paper reveals that Apple utilized 2,048 of Google’s TPUv5p chips to build AI models and 8,192 TPUv4 processors for server AI models. The research paper does not mention Nvidia explicitly, but the absence of any reference to Nvidia’s hardware in the description of Apple’s AI infrastructure is telling and this omission suggests a deliberate choice to favor Google’s technology. The decision is noteworthy given Nvidia’s dominance in the AI processor market and since Apple very rarely discloses its hardware choices for development purposes. Nvidia’s GPUs are highly sought after for AI applications due to their performance and efficiency. Unlike Nvidia, which sells its chips and systems as standalone products, Google provides access to its TPUs through cloud services. Customers using Google’s TPUs have to develop their software within Google’s ecosystem, which offers integrated tools and services to streamline the development and deployment of AI models. In the paper, Apple’s engineers explain that the TPUs allowed them to train large, sophisticated AI models efficiently. They describe how Google’s TPUs are organized into large clusters, enabling the processing power necessary for training Apple’s AI models.”

Over the next two years, Apple says, it plans to spend $5 billion on AI server enhancements. The paper gives a nod to ethics, promising that no private user data is used to train its AI models. Instead, it uses publicly available web data and licensed content, curated to protect user privacy. That is good. Now what about the astronomical power and water consumption? Apple has no reassuring words for us there. Is it because Apple is paying Google, not just taking money from Google?

Cynthia Murrell, August 12, 2024

Podcasts 2024: The Long Tail Is a Killer

August 9, 2024

![green-dino_thumb_thumb_thumb_thumb_t[2] green-dino_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/green-dino_thumb_thumb_thumb_thumb_t2_thumb.gif) This essay is the work of a dumb humanoid. No smart software required.

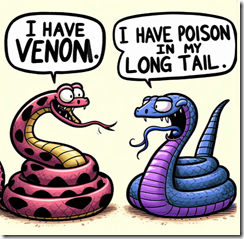

One of my Laws of Online is that the big get bigger. Those who are small go nowhere.

My laws have not been popular since I started promulgating them in the early 1980s. But they are useful to me. I read the write up “Golden Spike: Podcasting Saw A 22% Rise In Ad Spending In Q2 [2024].” The information in the article, if on the money, appears to support the Arnold Law articulated in the first sentence of this blog post.

The long tail can be a killer. Thanks, MSFT Copilot. How’s life these days? Oh, that’s too bad.

The write up contains an item of information which is not surprising to those who paid attention in a good middle school or in a second year economics class. (I know. Snooze time for many students.) The main idea is that a small number of items account for a large proportion of the total occurrences.

Here’s what the article reports:

Unsurprisingly, podcasts in the top 500 attracted the majority of ad spend, with these shows garnering an average of $252,000 per month each. However, the profits made by series down the list don’t have much to complain about – podcasts ranked 501 to 3000 earned about $30,000 monthly. Magellan found seven out of the top ten advertisers from the first quarter continued their heavy investment in the second quarter, with one new entrant making its way onto the list.

This means that of the estimated three to four million podcasts, the power law nails where the advertising revenue goes.

I mention this because when I go to the gym I listen to some of the podcasts on the Leo Laporte TWiT network. At one time, the vision was to create the CNN of the technology industry. Now the network seems to be one of the podcast outfits which cannot generate sufficient money from advertising to pay the bills. Therefore, hasta la vista staff, dedicated studio, and presumably some other expenses associated with a permanent studio.

Other podcasts will be hit by the stinging long tail. The question becomes, “How do these 2.9 million podcasts make money?”

Here’s what I have noticed in the last few months:

- Podcasters (video and voice) just quit. I assume they get a job or move in with friends. Van life is too expensive due to the cost of fuel, food, and maintenance now that advertising is chasing the winners in the long tail game.

- Some beg for subscribers.

- Some point people to their Buy Me a Coffee or Patreon page, among other similar community support services.

- Some sell T shirts. One popular technology podcaster sells a $60 screwdriver. (I need that.)

- Some just whine. (No, I won’t single out the winning whiner.)

If I were teaching math, this podcast advertising data would make an interesting example of the power law. Too bad most podcasters are powerless to change its impact on podcasting.
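Taking the teaching idea literally, the Magellan figures quoted earlier make a tidy worked example. This Python arithmetic assumes the $30,000 figure is a per-show monthly average (the article’s wording leaves that open) and ignores whatever the shows below rank 3,000 earn, so it measures concentration within the top 3,000 only.

```python
# Figures from the Magellan data quoted in the article.
TOP_TIER_SHOWS = 500
TOP_TIER_MONTHLY = 252_000   # average monthly ad spend per top-500 show
NEXT_TIER_SHOWS = 2_500      # ranks 501 to 3000
NEXT_TIER_MONTHLY = 30_000   # assumed to be a per-show monthly average

top_tier_total = TOP_TIER_SHOWS * TOP_TIER_MONTHLY      # $126 million a month
next_tier_total = NEXT_TIER_SHOWS * NEXT_TIER_MONTHLY   # $75 million a month
share_of_top = top_tier_total / (top_tier_total + next_tier_total)

# The estimated universe of podcasts is three to four million shows.
for universe in (3_000_000, 4_000_000):
    print(f"top 500 = {TOP_TIER_SHOWS / universe:.4%} of {universe:,} shows")
print(f"top 500 take {share_of_top:.1%} of the ad spend going to the top 3,000")
```

Under those assumptions, roughly one show in every 6,000 to 8,000 captures about 62.7% of the money flowing to the top 3,000.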

Stephen E Arnold, August 9, 2024

AI Research: A New and Slippery Cost Center for the Google

August 7, 2024

This essay is the work of a dumb humanoid. No smart software required.

A week or so ago, I read “Scaling Exponents Across Parameterizations and Optimizers.” The write up made crystal clear that Google’s DeepMind can cook up a test, throw bodies at it, and generate a bit of “gray” literature. The objective, in my opinion, was three-fold. [1] The paper makes clear that DeepMind is thinking about its smart software’s weaknesses and wants to figure out what to do about them. And [2] DeepMind wants to keep up the flow of PR – Marketing which says, “We are really the Big Dogs in this stuff. Good luck catching up with the DeepMind deep researchers.” Note: The third item appears after the numbers.

I think the paper reveals a third and unintended consequence. This issue is made more tangible by an entity named 152334H and captured in “Calculating the Cost of a Google DeepMind Paper.” (Oh, 152334 is a deep blue black color if anyone cares.)

That write up presents calculations supporting this assertion:

How to burn US$10,000,000 on an arXiv preprint

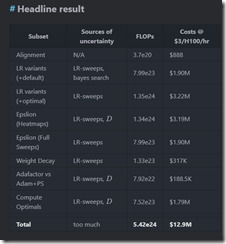

The write up included this table presenting the costs to replicate what the Googlers and DeepMinders did to produce the arXiv gray paper:

Notice, please, that the estimate is nearly $13 million. Anyone want to verify the Google results? What am I hearing? Crickets.

The gray paper’s 11 authors had to run the draft by review leadership and a lawyer or two. Once okayed, the document was converted to the arXiv format, and we have the findings to improve our understanding of how much work goes into the achievements of the quantumly supreme Google.

This number of $12 million and change brings me to item [3]. The paper illustrates why Google has a tough time controlling its costs. The paper is not “marketing,” because it is R&D. Some of the expense can be shuffled around. But in my book, the research is overhead, though it is not counted like the costs of cubicles for administrative assistants. It is science; it is a cost of doing business. Suck it up, you buttercups, in accounting.

The write up illustrates why Google needs as much money as it can possibly grab. These costs, which are not nice, tidy costs, have to be covered. With more than 150,000 people working on projects, the costs of “gray” papers are a trigger for more costs. The compute time has to be paid for. Hello, cloud customers. The “thinking time” has to be paid for because coming up with great research is open ended and may take weeks, months, or years. One could not rush Einstein. One cannot rush Google wizards in the AI realm either.

The point of this blog post is to create a bit of sympathy for the professionals in Google’s accounting department. Those folks have a tough job figuring out how to cut costs. One cannot prevent 11 people from burning through computer time. The costs just hockey stick. Consequently, the quantumly supreme professionals involved in Google cost control look for simpler, more comprehensible ways to generate sufficient cash to cover what are essentially “surprise” costs. These tools include magic wand behavior over payments to creators, smart commission tables to compensate advertising partners, and demands for more efficiency from Googlers who are not thinking big thoughts about big AI topics.

Net net: Have some awareness of how tough it is to be quantumly supreme. One has to keep the PR and Marketing messaging on track. One has to notch breakthroughs, insights, and innovations. What about that glue on the pizza thing? Answer: What?

Stephen E Arnold, August 7, 2024

Curating Content: Not Really and Maybe Not at All

August 5, 2024

This essay is the work of a dumb humanoid. No smart software required.


Most people assume that if software is downloaded from an official “store” or found through a “trusted” online Web search system, malware is not part of the deal. Vendors bandy about the word “trust” at the same time wizards in the back office are filtering, selecting, and setting up mechanisms to sell advertising to anyone who has money.

Advertising sales professionals are the epitome of professionalism. Google the word “trust”. You will find many references to these skilled individuals. Thanks, MSFT Copilot. Good enough.

Are these statements accurate? Because I love the high-tech outfits, my personal view is that online users today have these characteristics:

- Deep knowledge about nefarious methods

- The time to verify each content object is not malware

- A keen interest in sustaining the perception that the Internet is a clean, well-lit place. (Sorry, Mr. Hemingway, “lighted” will get you a points deduction in some grammarians’ fantasy world.)

I read “Google Ads Spread Mac Malware Disguised As Popular Browser.” My world is shattered. Is an alleged monopoly fostering malware? Is the dominant force in online advertising unable to verify that its advertisers are dealing from the top of the digital card deck? Is Google incapable of behaving in a responsible manner? I have to sit down. What a shock to my dinobaby system.

The write up alleges:

Google Ads are mostly harmless, but if you see one promoting a particular web browser, avoid clicking. Security researchers have discovered new malware for Mac devices that steals passwords, cryptocurrency wallets and other sensitive data. It masquerades as Arc, a new browser that recently gained popularity due to its unconventional user experience.

My assumption is that Google’s AI and human monitors would be paying close attention to a browser that seeks to challenge Google’s Chrome browser. Could I be incorrect? Obviously, if the write up is accurate, I am. Be still my heart.

The write up continues:

The Mac malware posing as a Google ad is called Poseidon, according to researchers at Malwarebytes. When clicking the “more information” option next to the ad, it shows it was purchased by an entity called Coles & Co, an advertiser identity Google claims to have verified. Google verifies every entity that wants to advertise on its platform. In Google’s own words, this process aims “to provide a safe and trustworthy ad ecosystem for users and to comply with emerging regulations.” However, there seems to be some lapse in the verification process if advertisers can openly distribute malware to users. Though it is Google’s job to do everything it can to block bad ads, sometimes bad actors can temporarily evade their detection.

But the malware apparently exists, and the ads are the vector. What’s the fix? Google is already doing its typical A Number One Quantumly Supreme Job. Well, the fix is you, the user.

You are sufficiently skilled to detect, understand, and avoid such online trickery, right?

Stephen E Arnold, August 5, 2024