A Business Opportunity for Some Failed VCs?

June 26, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

Do you want to open a T-shirt and baseball cap business featuring snappy quotes? If the answer is, “Yes,” I have a suggestion for you. Tucked into “Artificial Intelligence Is Not a Miracle Cure: Nobel Laureate Raises Questions about AI-Generated Image of Black Hole Spinning at the Heart of Our Galaxy” is this gem of a quotation:

“But artificial intelligence is not a miracle cure.”

The context: Reinhard Genzel, “an astrophysicist at the Max Planck Institute for Extraterrestrial Physics,” offered the observation after smart software happily generated images of a black hole. These are mysterious “things” which industrious wizards find amidst the numbers spewed by “telescopes.” Astrophysicists are discussing in an academic way exactly what the properties of a black hole are. One wing of the community has suggested that our universe exists within a black hole. Other wings offer equally interesting observations about these phenomena.

The write up explains:

an international team of scientists has attempted to harness the power of AI to glean more information about Sagittarius A* from data collected by the Event Horizon Telescope (EHT). Unlike some telescopes, the EHT doesn’t reside in a single location. Rather, it is composed of several linked instruments scattered across the globe that work in tandem. The EHT uses long electromagnetic waves — up to a millimeter in length — to measure the radius of the photons surrounding a black hole. However, this technique, known as very long baseline interferometry, is very susceptible to interference from water vapor in Earth’s atmosphere. This means it can be tough for researchers to make sense of the information the instruments collect.

The fix is to feed the data into a neural network and let the smart software solve the problem. It did, and generated the somewhat tough-to-parse images in the write up. To a dinobaby, one black hole image looks like another.

But the quote states what strikes me as a truism for 2025:

“But artificial intelligence is not a miracle cure.”

Those who have funded AI ventures are unlikely to buy a hat or T-shirt with this statement printed in bold letters.

Stephen E Arnold, June 26, 2025

Meeker Reveals the Hurdle the Google Must Get Over: Can Google Be Agile Again?

June 20, 2025

Just a dinobaby and no AI: How horrible an approach?

The hefty Meeker Report explains Google’s PR push, flood of AI announcements, and statements about advertising revenue. Fear may be driving the Googlers to be the Silicon Valley equivalent of Dan Aykroyd and Steve Martin’s “wild and crazy guys.” Google offers up the Sundar & Prabhakar Comedy Show. Similar? I think so.

I want to highlight two items from the 300-plus page PowerPoint deck. The document makes clear that one can create a lot of slides (foils) in six years.

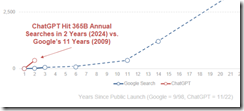

The first item is a chart on page 21. Here it is:

Note the tiny little line near the junction of the x and y axes. Now look at the red lettering:

ChatGPT hit 365 billion annual searches by Year since public launches of Google and Chat GPT — 1998 – 2025.

Let’s assume Ms. Meeker’s numbers are close enough for horseshoes. The slope of the ChatGPT search growth suggests that the Google is losing click traffic to Sam AI-Man’s ChatGPT. I wonder if Sundar & Prabhakar eat, sleep, worry, and think as the Code Red light flashes quietly in the Google lair? The light flashes: Sundar says, “Fast growth is not ours, brother.” Prabhakar responds, “The chart’s slope makes me uncomfortable.” Sundar says, “Prabhakar, please, don’t think of me as your boss. Think of me as a friend who can fire you.”

Now this quote from the top Googler on page 65 of the Meeker 2025 AI encomium:

The chance to improve lives and reimagine things is why Google has been investing in AI for more than a decade…

So why did Microsoft ace out Google with its OpenAI, ChatGPT deal in January 2023?

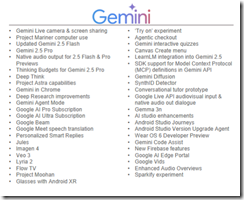

Ms. Meeker’s data suggest that Google is doing many AI projects; she names them for the period 5/19/25-5/23/25. Here’s a rundown from page 260 in her report:

And what did Microsoft, Anthropic, and OpenAI talk about in the same time period?

Google is an outputter of stuff.

Let’s assume Ms. Meeker is wildly wrong in her presentation of Google-related data. What’s going to happen if the legal proceedings against Google force divestment of Chrome or there are remediating actions required related to the Google index? The Google may be in trouble.

Let’s assume Ms. Meeker is wildly correct in her presentation of Google-related data. What’s going to happen if OpenAI, the open source AI push, and the clicks migrate from the Google to another firm? The Google may be in trouble.

Net net: Google, assuming the data in Ms. Meeker’s report are good enough, may be confronting a challenge it cannot easily resolve. The good news is that the Sundar & Prabhakar Comedy Show can be monetized on other platforms.

Is there some hard evidence? One can read about it in Business Insider. Well, ooops. Staff have allegedly been terminated due to a decline in Google traffic.

Stephen E Arnold, June 20, 2025

Move Fast, Break Your Expensive Toy

June 19, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

The weird orange newspaper online service published “Microsoft Prepared to Walk Away from High-Stakes OpenAI Talks.” (I quite like the Financial Times, but orange?) The big news is that a copilot may be creating tension in the cabin of the high-flying software company. The squabble has to do with? Give up? Money and power. Shocked? It is Sillycon Valley type stuff, and I think the squabble is becoming more visible. What’s next? Live streaming the face-to-face meetings?

A pilot and copilot engage in a friendly discussion about paying for lunch. The art was created by that outstanding organization OpenAI. Yes, good enough.

The orange service reports:

Microsoft is prepared to walk away from high-stakes negotiations with OpenAI over the future of its multibillion-dollar alliance, as the ChatGPT maker seeks to convert into a for-profit company.

Does this sound like a threat?

The squabbling pilot and copilot radioed into the control tower this burst of static filled information:

“We have a long-term, productive partnership that has delivered amazing AI tools for everyone,” Microsoft and OpenAI said in a joint statement. “Talks are ongoing and we are optimistic we will continue to build together for years to come.”

The newspaper online service added:

In discussions over the past year, the two sides have battled over how much equity in the restructured group Microsoft should receive in exchange for the more than $13bn it has invested in OpenAI to date. Discussions over the stake have ranged from 20 per cent to 49 per cent.

As a dinobaby observing the pilot and copilot navigate through the cloudy skies of smart software, it certainly looks as if the duo are arguing about who pays what for lunch when the big AI tie up glides to a safe landing. However, the introduction of a “nuclear option” seems dramatic. Will this option be a modest low yield neutron gizmo or a variant of the 1961 Tsar Bomba, which fried animals and lichen within a 35 kilometer radius and converted an island in the Arctic to a parking lot?

How important is Sam AI-Man’s OpenAI? The cited article reports this from an anonymous source (the best kind in my opinion):

“OpenAI is not necessarily the frontrunner anymore,” said one person close to Microsoft, remarking on the competition between rival AI model makers.

Which company kicked off what seems to be a rather snappy set of negotiations between the pilot and the copilot? The cited orange newspaper adds:

A Silicon Valley veteran close to Microsoft said the software giant “knows that this is not their problem to figure this out, technically, it’s OpenAI’s problem to have the negotiation at all”.

What could the squabbling duo do do do (a reference to Bing Crosby’s version of “I Love You” for those too young to remember the song’s hook or the Bingster for that matter):

- Microsoft could reach a deal, make some money, and grab the controls of the AI powered P-39 Airacobra training aircraft, and land without crashing at the Renton Municipal Airport

- Microsoft and OpenAI could fumble the landing and end up in Lake Washington

- OpenAI could bail out and hitchhike to the nearest venture capital firm for some assistance

- The pilot and copilot could just agree to disagree and sit at separate tables at the IHOP in Renton, Washington

One can imagine other scenarios, but the FT’s news story makes it clear that anonymous sources, threats, and a bit of desperation are now part of the Microsoft and OpenAI relationship.

Yep, money and control — business essentials in the world of smart software which seems to be losing its claim as the “next big thing.” Are those stupid red and yellow lights flashing at Microsoft and OpenAI as they are at Google?

Stephen E Arnold, June 19, 2025

Up for a Downer: The Limits of Growth… Baaaackkkk with a Vengeance

June 13, 2025

Just a dinobaby and no AI: How horrible an approach?

Where were you in 1972? Oh, not born yet. Oh, hanging out in the frat house or shopping with sorority pals? Maybe you were working at a big time consulting firm?

An outfit known as Potomac Associates slapped its name on a thought piece with some repetitive charts. The original work evolved from an outfit contributing big ideas. The Club of Rome lassoed William W. Behrens, Dennis and Donella Meadows, and Jørgen Randers to pound data into the then-state-of-the-art World3 model allegedly developed by Jay Forrester at MIT. (Were there graduate students involved? Of course not.)

The result of the effort was evidence that growth becomes unsustainable and everything falls down. Business, government systems, universities, etc. etc. Personally I am not sure why the idea that infinite growth with finite resources cannot last forever was a big deal. The idea seems obvious to me. I was able to get my little hands on a copy of the document courtesy of Dominique Doré, the super great documentalist at the company which employed my jejune and naive self. Who was I to think, “This book’s conclusion is obvious, right?” Was I wrong. The concept of hockey sticks that had handles to the ends of the universe was a shocker to some.

The book’s big conclusion is the focus of “Limits to Growth Was Right about Collapse.” Why? I think the realization is a novel one to those who watched their shares in Amazon, Google, and Meta zoom to the sky. Growth is unlimited, some believed. The write up in “The Next Wave,” an online newsletter or information service, happily quotes an update to the original Club of Rome document:

This improved parameter set results in a World3 simulation that shows the same overshoot and collapse mode in the coming decade as the original business as usual scenario of the LtG standard run.

Bummer. The kiddie story about Chicken Little had an acorn plop on its head. Chicken Little promptly proclaimed in a peer-reviewed academic paper with non-reproducible research and a YouTube video:

The sky is falling.

But keep in mind that the kiddie story is fiction. Humans are adept at survival. Maslow’s hierarchy of needs captures the spirit of the species. Will life as modern Chicken Littles perceive it end?

I don’t think so. Without getting too philosophical, I would point to Johann Gottlieb Fichte’s thesis, antithesis, synthesis as a reasonably good way to think about change (gradual and catastrophic). I am not into philosophy, so: When life gives you lemons, make lemonade. Then sell the business to a local food service company.

Collapse and its pal chaos create opportunities. The sky remains.

The cited write up says:

Economists get over-excited when anyone mentions ‘degrowth’, and fellow-travelers such as the Tony Blair Institute treat climate policy as if it is some kind of typical 1990s political discussion. The point is that we’re going to get degrowth whether we think it’s a good idea or not. The data here is, in effect, about the tipping point at the end of a 200-to-250-year exponential curve, at least in the richer parts of the world. The only question is whether we manage degrowth or just let it happen to us. This isn’t a neutral question. I know which one of these is worse.

See? Degrowth creates opportunities. Chicken Little was wrong when the acorn beaned her. The collapse will be just another chance to monetize. Today is Friday the 13th. Watch out for acorns and recycled “insights.”

Stephen E Arnold, June 13, 2025

Developers: Try to Kill ‘Em Off and They Come Back Like Giant Hogweeds

June 12, 2025

Just a dinobaby and no AI: How horrible an approach?

Developers (a category which probably extends to “coders” and “programmers”) have been an employee category of note for more than a half century. Even the esteemed Institute for Advanced Study enforced some boundaries between the “real” thinking mathematicians and the engineers who fooled around in the basement with a Stone Age computer.

Giant hogweeds can have negative impacts on humanoids who interact with them. Some say the same consequences ensue when accountants, lawyers, and MBAs engage in contact with programmers: Skin irritation and possibly blindness.

“The Recurring Cycle of ‘Developer Replacement’ Hype” addresses this boundary. The focus is on smart software which allegedly can do heavy-lifting programming. One of my team (Howard, the recipient of the old and forgotten Information Industry Association award for outstanding programming) is skeptical that AI can do what he does. I think that our work on the original MARS system, which chugged along on the AT&T IBM MVS installation in Piscataway in the 1980s, may have been a stretch for today’s coding wonders like Claude and ChatGPT. But who knows? Maybe these smart systems would have happily integrated the Information Dimensions database with MVS and allowed the newly formed Baby Bells to share certain data and “charge” one another for those bits? Trivial work now, I suppose, for one of today’s LLMs tuned to “do” code in the wonderful world of PL/1, Assembler, and the Basis “GO” instruction.

The write up points out that the tension between bean counters, MBAs and developers follows a cycle. Over time, different memes have surfaced suggesting that there was a better, faster, and cheaper way to “do” code than with programmers. Here are the “movements” or “memes” the author of the cited essay presents:

- No code or low code. The idea is that working in PL/1 or any other “language” can be streamlined with middleware between the human and the executables, the libraries, and the control instructions.

- The cloud revolution. The idea is that one just taps into really reliable and super secure services or micro services. One needs to hook these together and a robust application emerges.

- Offshore coding. The concept is simple: Code where it is cheap. The code just has to be good enough. The operative word is cheap. Note that I did not highlight secure, stable, extensible, and similar semi-desirable attributes.

- AI coding assistants. Let smart software do the work. Microsoft allegedly produces oodles of code with its smart software. Google is similarly thrilled with the idea that quirky wizards can be allowed to find their future elsewhere.

The essay’s main point is that despite the memes, developers keep cropping up like those pesky giant hogweeds.

The essay states:

Here’s what the "AI will replace developers" crowd fundamentally misunderstands: code is not an asset—it’s a liability. Every line must be maintained, debugged, secured, and eventually replaced. The real asset is the business capability that code enables. If AI makes writing code faster and cheaper, it’s really making it easier to create liability. When you can generate liability at unprecedented speed, the ability to manage and minimize that liability strategically becomes exponentially more valuable. This is particularly true because AI excels at local optimization but fails at global design. It can optimize individual functions but can’t determine whether a service should exist in the first place, or how it should interact with the broader system. When implementation speed increases dramatically, architectural mistakes get baked in before you realize they’re mistakes. For agency work building disposable marketing sites, this doesn’t matter. For systems that need to evolve over years, it’s catastrophic. The pattern of technological transformation remains consistent—sysadmins became DevOps engineers, backend developers became cloud architects—but AI accelerates everything. The skill that survives and thrives isn’t writing code. It’s architecting systems. And that’s the one thing AI can’t do.

I agree, but there are some things programmers can do that smart software cannot. Get medical insurance.

Stephen E Arnold, June 12, 2025

A SundAI Special: Who Will Get RIFed? Answer: News Presenters for Sure

June 1, 2025

Just a dinobaby and some AI: How horrible an approach?

Why would “real” news outfits dump humanoids for AI-generated personalities? For my money, there are three good reasons:

- Cost reduction

- Cost reduction

- Cost reduction

The bean counter has donned his Ivy League super smart financial accoutrements: Meta smart glasses, an Open AI smart device, and an Apple iPhone with the vaunted AI inside (sorry, Intel, you missed this trend). Unfortunately the “good enough” approach, like gradient descent, does not deal in reality. Sum those near misses and what do you get: Dead organic things. The method applies to flora and fauna, including humanoids with automatable jobs. Thanks, You.com, you beat the pants off Venice.ai, which simply does not follow prompts. A perfect solution for some applications, right?

My hunch is that many people (humanoids) will disagree. The counter arguments are:

- Human quantum behavior; that is, flubbing lines, getting into on-air spats, displaying annoyance standing in a rainstorm saying, “The wind velocity is picking up.”

- The cost of recruitment, training, health care, vacations, and pension plans (ho ho ho)

- The management hassle of having to attend meetings to talk about issues, become deciders, and (oh, no) accept responsibility for those decisions.

I read “The ‘White-Collar Bloodbath’ Is All Part of the AI Hype Machine.” I am not sure how fear creates an appetite for smart software. The push for smart software boils down to generating revenues. To achieve revenues one can create a new product or service like the iPhone or the original Google search advertising machine. But how often do those inventions toddle down the Information Highway? Not too often because most of the innovative new new next big things are smashed by a Meta-type tractor trailer.

The write up explains that layoff fears are not operable in the CNN dataspace:

If the CEO of a soda company declared that soda-making technology is getting so good it’s going to ruin the global economy, you’d be forgiven for thinking that person is either lying or fully detached from reality. Yet when tech CEOs do the same thing, people tend to perk up. ICYMI: The 42-year-old billionaire Dario Amodei, who runs the AI firm Anthropic, told Axios this week that the technology he and other companies are building could wipe out half of all entry-level office jobs … sometime soon. Maybe in the next couple of years, he said.

First, the killing jobs angle is probably easily understood and accepted by individuals responsible for “cost reduction.” Second, the ICYMI reference means “in case you missed it,” a bit of shorthand popular with those who are not yet 80-year-old dinobabies like me. Third, the source is a member of the AI leadership class. Listen up!

Several observations:

- AI hype is marketing. Money is at stake. Do stakeholders want their investments to sit mute and wait for the old “build it and they will come” pipe dream to manifest?

- Smart software does not have to be perfect; it needs to be good enough. Once it is good enough cost reductionists take the stage and employees are ushered out of specific functions. One does not implement cost reductions at random. Consultants set priorities, develop scorecards, and make some charts with red numbers and arrows pointing up. Employees are expensive in general, so some work is needed to determine which can be replaced with good enough AI.

- News, journalism, and certain types of writing along with customer “support”, and some jobs suitable for automation like reviewing financial data for anomalies are likely to be among the first to be subject to a reduction in force or RIF.

So where does that leave the neutral observer? On one hand, the owners of the money dumpster fires are promoting like crazy. These wizards have to pull rabbit after rabbit out of a hat. How does that get handled? Think P.T. Barnum.

Some AI bean counters, CFOs, and financial advisors dream about dumpsters filled with money burning. This was supposed to be an icon, but Venice.ai happily ignores prompt instructions and includes fruit next to a burning something against a wooden wall. Perfect for the good enough approach to news, customer service, and MBA analyses.

On the other hand, you have the endangered species, the “real” news people and others in the automatable corners of the “knowledge business.” These folks are doing what they can to impede the hyperbole machine of smart software people.

Who or what will win? Keep in mind that I am a dinobaby. I am going extinct, so smart software has zero impact on me other than making devices less predictable and resistant to my approach to “work.” Here’s what I see happening:

- Increasing unemployment for those lower on the “knowledge world” food chain. Sorry, junior MBAs at blue chip consulting firms. Make sure you have lots of money, influential parents, or a former partner at a prestigious firm as a mom or dad. Too bad for those studying to purvey “real” news. Junior college graduates working in customer support. Yikes.

- “Good enough” will replace excellence in work. This means that the air traffic controller situation is a glimpse of what deteriorating systems will deliver. Smart software will probably come to the rescue, but those antacid gobblers will be history.

- Increasing social discontent will manifest itself. To get a glimpse of the future, take an Uber from Cape Town to the airport. Check out the low income housing.

Net net: The cited write up is essentially anti-AI marketing. Good luck with that until people realize the current path is unlikely to deliver the pot of gold for most AI implementations. But cost reduction only has to show payoffs. Balance sheets do not reflect a healthy, functioning datasphere.

Stephen E Arnold, June 1, 2025

AI Can Do Your Knowledge Work But You Will Not Lose Your Job. Never!

May 30, 2025

The dinobaby wrote this without smart software. How stupid is that?

Ravical is going to preserve jobs for knowledge workers. Nevertheless, the company’s AI may complete 80% of the work in expert firms. No bean counter on earth would figure out that reducing humanoid workers would cut costs, eliminate the useless vacation scam, and chop the totally unnecessary health care plan. None.

The write up “Belgian AI Startup Says It Can Automate 80% of Work at Expert Firms” reports:

Joris Van Der Gucht, Ravical’s CEO and co-founder, said the “virtual employees” could do 80% of the work in these firms. “Ravical’s agents take on the repetitive, time-consuming tasks that slow experts down,” he told TNW, citing examples such as retrieving data from internal systems, checking the latest regulations, or reading long policies. Despite doing up to 80% of the work in these firms, Van Der Gucht downplayed concerns about the agents supplanting humans.

I believe this statement is 100 percent accurate. AI firms do not use excessive statements to explain their systems and methods. The article provides more concrete evidence that this replacement of humans is spot on:

Enrico Mellis, partner at Lakestar, the lead investor in the round, said he was excited to support the company in bringing its “proven” experience in automation to the booming agentic AI market. “Agentic AI is moving from buzzword to board-level priority,” Mellis said.

Several observations:

- Humans absolutely will be replaced, particularly those who cannot sell

- Bean counters will be among the first to point out that software, as long as it is good enough, will reduce costs

- Executives are judged on financial performance, not the quality of the work as long as revenues and profits result.

Will Ravical become the go-to solution for outfits engaged in knowledge work? No, but it will become a company that other agentic AI firms will watch closely. As long as the AI is good enough, humanoids without the ability to close deals will have plenty of time to ponder opportunities in the world of good enough, hallucinating smart software.

Stephen E Arnold, May 30, 2025

Real News Outfit Finds a Study Proving That AI Has No Impact in the Workplace

May 27, 2025

Just the dinobaby operating without Copilot or its ilk.

The “real news” outfit is the wonderful business magazine Fortune, now only $1 a month. Subscribe now!

The title of the write up catching my attention was “Study Looking at AI Chatbots in 7,000 Workplaces Finds ‘No Significant Impact on Earnings or Recorded Hours in Any Occupation.’” Out of the blocks this story caused me to say to myself, “This is another you-can’t-fire-human-writers proxy.”

Was I correct? Here are three snips, and I not only urge you to subscribe to Fortune but read the original article and form your own opinion. Another option is to feed it into an LLM which is able to include Web content and ask it to tell you about the story. If you are reading my essay, you know that a dinobaby plucks the examples, no smart software required, although as I creep toward 81, I probably should let a free AI do the thinking for me.

Here’s the first snip I captured:

Their [smart software or large language models] popularity has created and destroyed entire job descriptions and sent company valuations into the stratosphere—then back down to earth. And yet, one of the first studies to look at AI use in conjunction with employment data finds the technology’s effect on time and money to be negligible.

You thought you could destroy humans, you high technology snake oil peddlers (not the contraband Snake Oil popular in Hong Kong at this time). Think old-time carnival barkers.

Here’s the second snip about the sample:

focusing on occupations believed to be susceptible to disruption by AI

Okay, “believed” is the operative word. Who does the believing? A University of Chicago assistant professor of economics (Yay, Adam Smith. Yay, yay, Friedrich Hayek) and a graduate student. Yep, a time honored method: A graduate student.

Now the third snip which presents the rock solid proof:

On average, users of AI at work had a time savings of 3%, the researchers found. Some saved more time, but didn’t see better pay, with just 3%-7% of productivity gains being passed on to paychecks. In other words, while they found no mass displacement of human workers, neither did they see transformed productivity or hefty raises for AI-wielding super workers.

Okay, not much payoff from time savings. Okay, not much of a financial reward for the users. Okay, nobody got fired. I thought it was hard to terminate workers in some European countries.

After reading the article, I like the penultimate paragraph’s reminder that outfits like Duolingo and Shopify have begun rethinking the use of chatbots. Translation: You cannot get rid of human writers and real journalists.

Net net: A temporary reprieve will not stop the push to shift from expensive humans who want health care and vacations. That’s the news.

Stephen E Arnold, May 27, 2025

Behind Microsoft’s Dogged Copilot Push

May 20, 2025

Writer Simon Batt at XDA foresees a lot of annoyance in Windows users’ future. “Microsoft Will Only Get More Persistent Now that Copilot has Plateaued,” he predicts. Yes, Microsoft has failed to attract as many users to Copilot as it had hoped. It is as if users see through the AI hype. According to Batt, the company famous for doubling down on unpopular ideas will now pester us like never before. This can already be seen in the new way Microsoft harasses Windows 10 users. While it used to suggest every now and then such users purchase a Windows 11-capable device, now it specifically touts Copilot+ machines.

Batt suspects Microsoft will also relentlessly push other products to boost revenue. Especially anything it can bill monthly. Though Windows is ubiquitous, he notes, users can go years between purchases. Many of us, we would add, put off buying a new version until left with little choice. (Any XP users still out there?) He writes:

“When ChatGPT began to take off, I can imagine Microsoft seeing dollar signs when looking at its own assistant, Copilot. They could make special Copilot-enhanced devices (which make them money) that run Copilot locally and encourage people to upgrade to Copilot Pro (which makes them money) and perhaps then pay extra for the Office integration (which makes them money). But now that golden egg hasn’t panned out like Microsoft wants, and now it needs to find a way to help prop up the income while it tries to get Copilot off the ground. This means more ads for the Microsoft Store, more ads for its game store, and more ads for Microsoft 365. Oh, and let’s not forget the ads within Copilot itself. If you thought things were bad now, I have a nasty feeling we’re only just getting started with the ads.”

And they won’t stop, he expects, until most users have embraced Copilot. Microsoft may be creeping toward some painful financial realities.

Cynthia Murrell, May 20, 2025

Google Innovates: Another Investment Play. (How Many Are There Now?)

May 13, 2025

No AI, just the dinobaby expressing his opinions to Zillennials.

I am not sure how many investment, funding, and partnering deals Google has. But as the selfish only child says, “I want more, Mommy.” Is that Google’s strategy for achieving more AI dominance? The company has already suggested that it has won the AI battle. AI is everywhere even when one does not want it. But inferiority complexes have a way of motivating bright people to claim that they are winners only to wake at 3 am to think, “I must do more. Don’t hit me in the head, grandma.”

The write up “Google Launches New Initiative to Back Startups Building AI” describes a brilliant, never before implemented tactic. The idea is to shovel money at startups that are [a] Googley, [b] focus on AI’s cutting edge, and [c] can reduce Google’s angst ridden 3 am soul searching. (Don’t hit me in the head, grandma.)

The article says:

Google announced the launch of its AI Futures Fund, a new initiative that seeks to invest in startups that are building with the latest AI tools from Google DeepMind, the company’s AI R&D lab. The fund will back startups from seed to late stage and will offer varying degrees of support, including allowing founders to have early access to Google AI models from DeepMind, the ability to work with Google experts from DeepMind and Google Labs, and Google Cloud credits. Some startups will also have the opportunity to receive direct investment from Google.

This meets criterion [a] above. The firms have to embrace Google’s quantumly supreme DeepMind, state of the art, world beating AI. I interpret the need to pay people to use DeepMind as a hint that making something commercially viable is just outside the sharp claws of Googzilla. Therefore, just pay for those who will be Googley and use the quantumly supreme DeepMind AI.

The write up adds:

Google has been making big commitments over the past few months to support the next generation of AI talent and scientific breakthroughs.

This meets criterion [b] above. Google is paying to try to get the future to appear under the new blurry G logo. Will this work? Sure, just as it works for regular investment outfits. The hit ratio is hoped to be 17X or more. But in tough times, a 10X return is good. Why? Many people are chasing AI opportunities. The failure rate of new high technology companies remains high even with the buzz of AI. If Google has infinite money, it can indeed win the future. But if the search advertising business takes a hit or the Chrome data system has a groin pull, owning or “inventing” the future becomes a more difficult job for Googzilla.

Now we come to criterion [c], the inferiority complex and the need to meet grandma’s and the investors’ expectations. The write up does not spend much time on the psyches of the Google leadership. The write up points out:

Google also has its Google for Startups Founders Funds, which supports founders from an array of industries and backgrounds building companies, including AI companies. A spokesperson told TechCrunch in February that this year, the fund would start investing in AI-focused startups in the U.S., with more information to come at a later date.

The article does not address the psychology of Googzilla. That’s too bad because that psychology is what turns fuzzy G logos, impending legal penalties, intense competition from Sam AI-Man and every engineering student in China, and the self-serving, quantumly supreme type lingo into big picture windows into the inner Google.

Grandma, don’t hit any of those ever young leaders at Google on the head. It may do some psychological rewiring that may make you proud and some other people expecting even greater achievements in AI, self driving cars, relevant search, better-than-Facebook ad targeting, and more investment initiatives.

Stephen E Arnold, May 13, 2025