Apple: Waking Up Is Hard to Do

October 16, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a letter. I think this letter, or at least parts of it, was written by a human. These days it can be tough to know. The letter appeared in “Wiley Hodges’s Open Letter to Tim Cook Regarding ICEBlock.” Mr. Hodges, according to the cited article, retired from Apple, the computer and services company, in 2022.

The letter expresses some concern that Apple removed an app from the Apple online store. Here’s a snippet from the “letter”:

Apple and you are better than this. You represent the best of what America can be, and I pray that you will find it in your heart to continue to demonstrate that you are true to the values you have so long and so admirably espoused.

It does seem to me that Apple is a flexible outfit. The purpose of the letter is unknown to me. On the surface, it is a single former employee’s expression of unhappiness at how “leadership” leads and deciders “decide.” However, below the surface it is a signal that some people thought a for-profit, pragmatic, and somewhat frisky Fancy Dancing organization was like Snow White, the Easter bunny, or the Lone Ranger.

Thanks, Venice.ai. Good enough.

Sorry. That’s not how big companies work or many little companies for that matter. Most organizations do what they can to balance a PR image with what the company actually does. Examples include arguing via sleek and definitely expensive lawyers that what they do does not violate laws. Also, companies work out deals. Some of these involve doing things to fit into the culture of a particular country. I have watched money change hands when registering a vehicle in the government office in Sao Paulo. These things happen because they are practical. Apple, for example, has an interesting relationship with a certain large country in Asia. I wonder if there is a bit of the old soft shoe going on in that region of the world.

These are, however, not the main point of this blog post. The cited article contains this statement:

Hodges, earlier in his letter, makes reference to Apple’s 2016 standoff with the FBI over a locked iPhone belonging to the mass shooter in San Bernardino, California. The FBI and Justice Department pressured Apple to create a version of iOS that would allow them to backdoor the iPhone’s passcode lock. Apple adamantly refused.

Okay, the time delta is nine years. What has changed? Obviously social media, the economic situation, the relationship among entities, and a number of lawsuits. These are the touchpoints of our milieu. One has to surf on the waves of change and the ripples and waves of the datasphere.

But I want to highlight several points about my reaction to the blog post containing Hodges’s letter:

- Some people are realizing that their hoped-for vision of Apple, a publicly traded company, is not the here-and-now Apple. The fairy land of a company that cares is pretty much like any other big technology outfit. Shocker.

- Apple is not much different today than it was nine years ago. Plucking an example which positioned the Cupertino kids as standing up for an ideal does not line up with the reality. Technology existed then to gain access to digital devices. Believing that a company’s PR reflected reality illustrates how crazy some perceptions are. Saying is not doing.

- Apple remains to me one of the most invasive of the technology giants. The constant logging in, the weirdness of forcing people to have data in the iCloud when those people do not know the data are there or want it there for that matter, the oddball notifications that tell a user that a “new device” is connected when the iPad has been used for years, and a few other quirks like hiding files are examples of the reality of the company.

News flash: Apple is like the other Silicon Valley-type big technology companies. These firms have a game plan of do it and apologize. Push forward. I find it amusing that adults are experiencing the same grief as a sixth grader with a crush on the really cute person in home room. Yep, waking up is hard to do. Stop hitting the snooze alarm and join the real world.

Net net: The essay is a hoot. Here is an adult realizing that there is no Santa with apparently tireless animals and dwarfs at the North Pole. The cited article contains what appears to be another expression of annoyance, anger, and sorrow that Apple is not what the humans thought it was. Apple is Apple, and the only change agent able to modify the company is money and/or fear, a good combo in my experience.

Stephen E Arnold, October 16, 2025

AI Can Be a Critic Unless Biases Are Hard Wired

June 26, 2025

The Internet has made it harder to find certain music, films, and art. It was supposed to be quite the opposite, and it was for a time. But social media and its algorithms have made a mess of things. So asserts the blogger at Tadaima in, “If Nothing Is Curated, How Do We Find Things?” The write up reports:

“As convenient as social media is, it scatters the information like bread being fed to ducks. You then have to hunt around for the info or hope the magical algorithm gods read your mind and guide the information to you. I always felt like social media creates an illusion of convenience. Think of how much time it takes to stay on top of things. To stay on top of music or film. Think of how much time it takes these days, how much hunting you have to do. Although technology has made information vast and reachable, it’s also turned the entire internet into a sludge pile.”

Slogging through sludge does take the fun out of discovery. The author fondly recalls the days when a few hours a week checking out MTV and Ebert and Roeper, flipping through magazines, and listening to the radio was enough to keep them on top of pop culture. For a while, curation websites deftly took over that function. Now, though, those have been replaced by social-media algorithms that serve to rake in ad revenue, not to share tunes and movies that feed the soul. The write up observes:

“Criticism is dead (with Fantano being the one exception) and Gen Alpha doesn’t know how to find music through anything but TikTok. Relying on algorithms puts way too much power in technology’s hands. And algorithms can only predict content that you’ve seen before. It’ll never surprise you with something different. It keeps you in a little bubble. Oh, you like shoegaze? Well, that’s all the algorithm is going to give you until you intentionally start listening to something else.”

Yep. So the question remains: How do we find things? Big tech would tell us to let AI do it, of course, but that misses the point. The post’s writer has settled for a somewhat haphazard, unsatisfying method of lists and notes. They sadly posit this state of affairs might be the “new normal.” This type of findability “normal” may be very bad in some ways.

Cynthia Murrell, June 26, 2025

Lights, Ready the Smart Software, Now Hit Enter

June 11, 2025

Just a dinobaby and no AI: How horrible an approach?

I like snappy quotes. Here’s a good one from “You Are Not Prepared for This Terrifying New Wave of AI-Generated Videos.” The write up says:

I don’t mean to be alarmist, but I do think it’s time to start assuming everything you see online is fake.

I like the categorical affirmative. I like the “alarmist.” I particularly like “fake.”

The article explains:

Something happened this week that only made me more pessimistic about the future of truth on the internet. During this week’s Google I/O event, Google unveiled Veo 3, its latest AI video model. Like other competitive models out there, Veo 3 can generate highly realistic sequences, which Google showed off throughout the presentation. Sure, not great, but also, nothing really new there. But Veo 3 isn’t just capable of generating video that might trick your eye into thinking its real: Veo 3 can also generate audio to go alongside the video. That includes sound effects, but also dialogue—lip-synced dialogue.

If the Google-type synths are good enough and cheap, I wonder how many budding film directors will note the capabilities and think about their magnum opus on smart software dollies. Cough up a credit card and for $250 per month imagine what videos Google may allow you to make. My hunch is that Mother Google will block certain topics, themes, and “treatments.” (How easy would it be for a Google-type service to weaponize videos about the news, social movements, and recalcitrant advertisers?)

The write up worries gently as well, stating:

We’re in scary territory now. Today, it’s demos of musicians and streamers. Tomorrow, it’s a politician saying something they didn’t; a suspect committing the crime they’re accused of; a “reporter” feeding you lies through the “news.” I hope this is as good as the technology gets. I hope AI companies run out of training data to improve their models, and that governments take some action to regulate this technology. But seeing as the Republicans in the United States passed a bill that included a ban on state-enforced AI regulations for ten years, I’m pretty pessimistic on that latter point. In all likelihood, this tech is going to get better, with zero guardrails to ensure it advances safely. I’m left wondering how many of those politicians who voted yes on that bill watched an AI-generated video on their phone this week and thought nothing of it.

My view is that several questions may warrant some noodling by a humanoid or possibly an “ethical” smart software system; for example:

- Can AI detectors spot and flag AI-generated video? Ignoring or missing such videos may have interesting social knock-on effects.

- Will a Google-type outfit ignore videos that praise an advertiser whose products are problematic? (Health and medical videos? Who defines “problematic”?)

- Will firms with video generating technology self regulate or just do what yields revenue? (Producers of adult content may have some clever ideas, and many of these professionals are willing to innovate.)

Net net: When will synth videos win an Oscar?

Stephen E Arnold, June 11, 2025

Blockchain: Adoption Lag Lies in the Implementation

April 15, 2025

Why haven’t cryptocurrencies taken over the financial world yet? The Observer shares some theories in, "Blockchain’s Billion-Dollar Blunder: How Finance’s Tech Revolution Became an Awkward Evolution." Writer Boris Bohrer-Bilowitzki believes the mistake was trying to reinvent the wheel, instead of augmenting it. He observes:

"For years, the strategy has been replacement rather than integration. We’ve attempted to create entirely new financial systems from scratch, expecting the world to abandon centuries of established infrastructure overnight. It hasn’t worked, and it won’t work. … This disconnect highlights our fundamental misunderstanding of how technological evolution works. Credit cards didn’t replace cash; they complemented it. They added a layer of convenience and security that made transactions easier while working within the existing financial framework. That’s the model blockchain has always needed to follow."

Gee, it makes sense when you put it that way. The write-up points to SWIFT as an organization that gets it. We learn:

"One of the most promising developments in this space is the international banking system SWIFT’s ongoing blockchain pilot program. In 2025, SWIFT will facilitate live trials, enabling central and commercial banks across North America, Europe and Asia to conduct digital asset transactions on its network. These trials aim to explore how blockchain can enhance payments, foreign exchange (FX), securities trading and trade finance without requiring banks to overhaul their systems."

That sounds promising. But what about consumers? Many are baffled by the very concept of cryptocurrency, never mind how to interact with it. Intuitive interfaces, Bohrer-Bilowitzki stresses, would remove that hurdle. After all, he notes, folks only embrace new technologies that make their lives easier.

Did the esteemed Observer overlook these blockchain downsides?

- Distributed autonomous organizations look more like Discord groups and club members

- Wonky reward layers

- Funding “hopes” is losing some traction

- Web3 seems to be filled with janky STARs.

Novelty alone is not enough to drive large-scale adoption. Imagine that.

Cynthia Murrell, April 16, 2025

YouTube: Another Big Cost Black Hole?

March 25, 2025

Another dinobaby blog post. Eight decades and still thrilled when I point out foibles.

I read “Google Is in Trouble… But This Could Change Everything – and No, It’s Not AI.” The write up makes the case that YouTube is Google’s big financial opportunity. I agree with most of the points in the write up. The article says:

Google doesn’t clearly explain how much of the $40.3 billion comes from the YouTube platform, but based on their description and choice of phrasing like “primarily include,” it’s safe to assume YouTube generates significantly more revenue than just the $36.1 billion reported. This would mean YouTube, not Google Cloud, is actually Google’s second-biggest business.

Yep, financial fancy dancing is part of the game. Google is using its financial reports as marketing to existing stakeholders and investors who want a part of the still-hot, still-dominant Googzilla. The idea is that the Google is stomping on the competition in the hottest sectors: The cloud, smart software, advertising, and quantum computing.

A big time company’s chief financial officer enters his office after lunch and sees a flood of red ink engulfing his work space. Thanks, OpenAI, good enough.

Let’s flip the argument from Google has its next big revenue oil gusher to the cost of that oil field infrastructure.

An article appeared in mid-February 2025. I was surprised that the information in that write up did not generate more buzz in the world of Google watchers. “YouTube by the Numbers: Uncovering YouTube’s Ghost Town of Billions of Unwatched, Ignored Videos” contains some allegedly accurate information. Let’s assume that these data, like most information about online, are close enough for horseshoes or purely notional. I am not going to summarize the methodology. Academics come up with interesting ways to obtain information about closely guarded big company products and services.

The write up says:

the research estimates a staggering 14.8 billion total videos on YouTube as of mid-2024. Unsurprisingly, most of these videos are barely noticed. The median YouTube upload has just 41 views, with 4% garnering no views at all. Over 74% have no comments and 89% have no likes.

Here are a couple of other factoids about YouTube as reported in the Techspot article:

The production values are also remarkably modest. Only 14% of videos feature a professional set or background. Just 38% show signs of editing. More than half have shaky camerawork, and audio quality varies widely in 85% of videos. In fact, 40% are simply music tracks with no voice-over.

And another point I found interesting:

Moreover, the typical YouTube video is just 64 seconds long, and over a third are shorter than 33 seconds.

The most revealing statement in the research data appears in this passage:

… a senior researcher [said] that this narrative overlooks a crucial reality: YouTube is not just an entertainment hub – it has become a form of digital infrastructure. Case in point: just 0.21% of the sampled videos included any kind of sponsorship or advertising. Only 4% had common calls to action such as liking, commenting, and subscribing. The vast majority weren’t polished content plays but rather personal expressions – perhaps not so different from the old camcorder days.

Assuming the data are reasonably good, Google has built plumbing whose cost will rival that of the firm’s investments in search and its cloud.

From my point of view, cost control is going to become as important as moving as quickly as possible to the old-school broadcast television approach to content. Hit shows on YouTube will do what is necessary to attract an audience. The audience will be what advertisers want.

Just as Google search has degraded to a popular “experience,” not a resource for individuals who want to review and extract high value information, YouTube will head the same direction. The question is, “Will YouTube’s pursuit of advertisers mean that the infrastructure required to permit free video uploads and storage will be sustainable?”

Imagine being responsible for capital investments at the Google. The Cloud infrastructure must be upgraded and enhanced. The AI infrastructure must be upgraded and enhanced. The quantum computing and other technology-centric infrastructures must be upgraded and enhanced. The adtech infrastructure must be upgraded and enhanced. I am leaving out some of the Google’s other infrastructure intensive activities.

The main idea is that the financial person is going to have a large job paying for hardware, software, maintenance, and telecommunications. This is a different cost from technical debt. These are on-going and constantly growing costs. Toss in unexpected outages, and what does the bean counter do? One option is to quit and another is to do the Zen thing to avoid having a stroke when reviewing the cost projections.

My take is that a hit in search revenue is likely to add to the firm’s financial challenges. The path to becoming the modern version of William Paley’s radio empire may be in Google’s future. The idea that everything is in the cloud is being revisited by companies due to cost and security concerns. Does Google host some sketchy services on its Cloud?

YouTube may be the hidden revenue gem at Google. I think it might become the infrastructure cost leader among Google’s stellar product line up. Big companies like Google don’t just disappear. Instead the black holes of cost suck them closer to a big event: Costs rise more quickly than revenue.

At this time, Google has three cost black holes. One hopes none is the one that makes Googzilla join the ranks of the street people who dwell online.

Net net: Google will have to give people what they will watch. The lowest common denominator will emerge. The costs flood the CFO’s office. Just ask Gemini what to do.

Stephen E Arnold, March 25, 2025

A Swelling Wave: Internet Shutdowns in Africa

March 18, 2025

Another dinobaby blog post. No AI involved which could be good or bad depending on one’s point of view.

How does a government deal with information it does not like, want, or believe? The question is a pragmatic one. Not long ago, Russia suggested to Telegram that it cut the flow of Messenger content to Chechnya. Telegram has been somewhat more responsive to government requests since Pavel Durov’s detainment in France, but it dragged its digital feet. The fix? The Kremlin worked with service providers to kill off the content flow or at least as much of it as was possible. Similar methods have been used in other semi-enlightened countries.

“Internet Shutdowns at Record High in Africa As Access Weaponised” reports:

A report released by the internet rights group Access Now and #KeepItOn, a coalition of hundreds of civil society organisations worldwide, found there were 21 shutdowns in 15 African countries, surpassing the existing record of 19 shutdowns in 2020 and 2021.

There are workarounds, but some of these are expensive and impractical for the people in Comoros, Guinea-Bissau, Mauritius, Burundi, Ethiopia, Equatorial Guinea, and Kenya. I am not sure the list is complete, but the idea of killing Internet access seems to be an accepted response in some countries.

Several observations:

- Recent announcements about Google making explicit its access to users’ browser histories provide a rich and actionable pool of information. Will these types of data be used to pinpoint a dissident or a problematic individual? In my visits to Africa, including the thrilling Zimbabwe, I would suggest that the answer could be, “Absolutely.”

- Online is now pervasive, and due to a lack of meaningful regulation, the idea of going online and sharing information is a negative. In the late 1980s, I gave a lecture for ASIS at Rutgers University. I pointed out that flows of information work like silica grit in a sand blasting device to remove rust in an autobody shop. I can say from personal experience that no one knew what I was talking about. In 40 years, people and governments have figured out how online flows erode structures and social conventions.

- The trend of shutdown is now in the playbook of outfits around the world. Commercial companies can play the game of killing a service too. Certain large US high technology companies have made it clear that their service would summarily be blocked if certain countries did not play ball the US way.

As a dinobaby who has worked in online for decades, I find it interesting that the pigeons are coming home to roost. A failure years ago to recognize and establish rules and regulation for online is the same as having those lovable birds loose in the halls of government. What do pigeons produce? Yep, that’s right. A mess, a potentially deadly one too.

Stephen E Arnold, March 18, 2025

A Vulnerability Bigger Than SolarWinds? Yes.

February 18, 2025

No smart software. Just a dinobaby doing his thing.

I read an interesting article from WatchTowr Labs. (The spelling is what the company uses, so the url is labs.watchtowr.com.) On February 4, 2025, the company reported that it discovered what one can think of as orphaned or abandoned-but-still-alive Amazon S3 “buckets.” The discussion of the firm’s research and what it revealed is presented in “8 Million Requests Later, We Made The SolarWinds Supply Chain Attack Look Amateur.”

The company explains that it was curious if what it calls “abandoned infrastructure” on a cloud platform might yield interesting information relevant to security. We worked through the article and created what in the good old days would have been called an abstract for a database like ABI/INFORM. Here’s our summary:

The article from WatchTowr Labs describes a large-scale experiment where researchers identified and took control of about 150 abandoned Amazon Web Services S3 buckets previously used by various organizations, including governments, militaries, and corporations. Over two months, these buckets received more than eight million requests for software updates, virtual machine images, and sensitive files, exposing a significant vulnerability. WatchTowr explains that bad actors could have injected malicious content. Abandoned infrastructure could be used for supply chain attacks like SolarWinds. Had this happened, the impact would have been significant.

Several observations are warranted:

- Does Amazon Web Services have administrative functions to identify orphaned “buckets” and take action to minimize the attack surface?

- With companies’ information technology teams abandoning infrastructure, how will these organizations determine if other infrastructure vulnerabilities exist and remediate them?

- What can cyber security vendors’ software and systems do to identify and neutralize these “shoot yourself in the foot” vulnerabilities?
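One modest way to start on the second question is to inventory the bucket names that an organization’s own configuration files and scripts still point to, and then compare that list with the buckets the organization actually controls. The sketch below is a rough illustration, not WatchTowr’s method. The function names, the two URL patterns, and the sample bucket names are all hypothetical, and the code only reads text. It does not contact AWS or confirm whether a bucket still exists.

```python
import re

# Assumed URL shapes (hypothetical patterns; real configs may reference S3 in other ways):
#   virtual-hosted style: https://<bucket>.s3.amazonaws.com/... or https://<bucket>.s3.<region>.amazonaws.com/...
#   path style:           https://s3.amazonaws.com/<bucket>/... or https://s3.<region>.amazonaws.com/<bucket>/...
BUCKET = r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]"
VIRTUAL_HOSTED = re.compile(
    r"https?://(" + BUCKET + r")\.s3[.-](?:[a-z0-9-]+\.)?amazonaws\.com"
)
PATH_STYLE = re.compile(
    r"https?://s3[.-](?:[a-z0-9-]+\.)?amazonaws\.com/(" + BUCKET + r")"
)


def referenced_buckets(text):
    """Return the set of bucket names that appear in S3 URLs inside text."""
    return set(VIRTUAL_HOSTED.findall(text)) | set(PATH_STYLE.findall(text))


def unowned_references(text, owned):
    """Return referenced bucket names that are missing from the owned inventory."""
    return sorted(referenced_buckets(text) - set(owned))


if __name__ == "__main__":
    # Made-up configuration text and inventory, for illustration only.
    sample_config = """
    update_url = https://acme-updates.s3.amazonaws.com/agent/latest.json
    image_url = https://s3.us-east-1.amazonaws.com/acme-legacy-images/base.img
    docs_url = https://example.com/help
    """
    owned_inventory = {"acme-updates"}
    for name in unowned_references(sample_config, owned_inventory):
        print("Referenced but not in inventory:", name)
```

Any name this flags is only a lead for a human to check. A bucket that is still referenced but no longer owned is the kind of orphan the WatchTowr researchers were able to take over.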

One of the most compelling statements in the WatchTowr article, in my opinion, is:

… we’d demonstrated just how held-together-by-string the Internet is and at the same time point out the reality that we as an industry seem so excited to demonstrate skills that would allow us to defend civilization from a Neo-from-the-Matrix-tier attacker – while a metaphorical drooling-kid-with-a-fork-tier attacker, in reality, has the power to undermine the world.

Is WatchTowr correct? With government and commercial organizations leaving S3 buckets available, perhaps WatchTowr should have included gum, duct tape, and grade-school white glue in its description of the Internet?

Stephen E Arnold, February 18, 2025

A Technologist Realizes Philosophy 101 Was Not All Horse Feathers

January 6, 2025

This is an official dinobaby post. No smart software involved in this blog post.

I am not too keen on non-dinobabies thinking big thoughts about life. The GenX, Y, and Zedders are good at reinventing the wheel, fire, and tacos. What some of these non-dinobabies are less good at is thinking about the world online information has disestablished and is reassembling in chaotic constructs.

The essay, published in HackerNoon, “Here’s Why High Achievers Feel Like Failures” explains why so many non-dinobabies are miserable. My hunch is that the most miserable are those who have achieved some measure of financial and professional success and embrace whinge, insecurity, chemicals to blur mental functions, big car payments, and “experiences.” The essay does a very good job of explaining the impact of getting badges of excellence for making a scoobie (aka lanyard, gimp, boondoggle, or scoubidou) bracelet at summer camp to tweaking an algorithm to cause a teen to seek solace in a controlled substance. (One boss says, “Hey, you hit the revenue target. Too bad about the kid. Let’s get lunch. I’ll buy.”)

The write up explains why achievement and exceeding performance goals can be less than satisfying. Does anyone remember the Google VP who overdosed with the help of a gig worker? My recollection is that the wizard’s boat was docked within a few minutes of his home stuffed with a wifey and some kiddies. Nevertheless, an OnlyFans potential big earner was enlisted to assist with the chemical bliss that may have contributed to his logging off early.

The essay offers this anecdote about a high performer who I think was an entrepreneur riding a rocket ship:

Think about it:

- Three years ago, Mark was ecstatic about his first $10K month. Now, he beats himself up over $800K months.

- Two years ago, he celebrated hiring his first employee. Now, managing 50 people feels like “not scaling fast enough.”

- Last year, a feature in a local business journal made his year. Now, national press mentions barely register.

His progress didn’t disappear. His standards just kept pace with his growth, like a shadow that stretches ahead no matter how far you walk.

The main idea is that once one gets “something,” one wants more. The write up says:

Every time you level up, your brain does something fascinating – it rewrites your definition of “normal.” What used to be a summit becomes your new base camp. And while this psychological adaptation helped our ancestors survive, it’s creating a crisis of confidence in today’s achievement-oriented world.

Yep, the driving force behind achievement is the need to succeed so one can achieve more. I am a dinobaby, and I don’t want to achieve anything. I never did. I have been lucky: Born at the right time. Survived school. Got lucky and was hired on a fluke. Now 60 years later I know how I achieved the modicum of success I accrued. I was really lucky, and despite my 80 years, I am not yet dead.

The essay makes this statement:

We’re running paleolithic software on modern hardware. Every time you achieve something, your brain…

- Quickly normalizes the achievement (adaptation)

- Immediately starts wanting more (drive)

- Erases the emotional memory of the struggle (efficiency)

Is there a fix? Absolutely. Not surprisingly the essay includes a to-do list. The approach is logical and ideally suited to those who want to become successful. Here are the action steps:

Once you’ve reviewed your time horizons, the next step is to build what I call a “Progress Inventory.” Dedicate 15 minutes every Sunday night to reflect and fill out these three sections:

Victories Section

- What’s easier now than it was last month?

- What do you do automatically that used to require thought?

- What problems have disappeared?

- What new capabilities have you gained?

Growth Section

- What are you attempting now that you wouldn’t have dared before?

- Where have your standards risen?

- What new problems have you earned the right to have?

- What relationships have deepened or expanded?

Learning Section

- What mistakes are you no longer making?

- What new insights have you gained?

- What patterns are you starting to recognize?

- What tools have you mastered?

These two powerful tools – the Progress Mirror and the Progress Inventory – work together to solve the central problem we’ve been discussing: your brain’s tendency to hide your growth behind rising standards. The Progress Mirror forces you to zoom out and see the bigger picture through three critical time horizons. It’s like stepping back from a painting to view the full canvas of your growth. Meanwhile, the weekly Progress Inventory zooms in, capturing the subtle shifts and small victories that compound into major transformations. Used together, these tools create something I call “progress consciousness” – the ability to stay ambitious while remaining aware of how far you’ve come.

But what happens when the road map does not lead to a zen-like state? Because I have been lucky, I cannot offer an answer to this question of actual, implicit, or imminent failure. I can serve up some observations:

- This essay has the backbone for a self-help book aimed at insecure high performers. My suggestion is to buy a copy of Thomas A. Harris’ I’m OK – You’re OK and make a lot of money. Crank out the merch with slogans from the victories, growth, and learning sections of the book.

- The explanations are okay, but far from new. Spend some time with Friedrich Nietzsche’s Der Wille zur Macht. Too bad Friedrich was dead when his sister assembled the odds and ends of Herr Nietzsche’s notes into a book addressing some of the issues in the HackerNoon essay.

- The write up focuses on success, self-doubt, and an ever-receding finish line. What about the people who live on the street in most major cities, the individuals who cannot support themselves, or the young people with minds trashed by digital flows? The essay offers less information for these under performers as measured by doubt ridden high performers.

Net net: The essay makes clear that education today does not cover some basic learnings; for example, the good Herr Friedrich Nietzsche. Second, the excitement of re-discovering fire is no substitute for engagement with a social fabric that implicitly provides a framework for thinking and behaving in a way that others in the milieu recognize as appropriate. This HackerNoon essay encapsulates why big tech and other successful enterprises are dysfunctional. Welcome to the digital world.

Stephen E Arnold, January 6, 2025

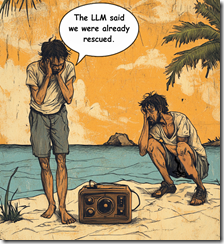

AI Makes Stuff Up and Lies. This Is New Information?

December 23, 2024

The blog post is the work of a dinobaby, not AI.

I spotted “Alignment Faking in Large Language Models.” My initial reaction was, “This is new information?” and “Have the authors forgotten about hallucination?” The original article from Anthropic sparked another essay. This one appeared in Time Magazine (online version). Time’s article was titled “Exclusive: New Research Shows AI Strategically Lying.” I like the “strategically lying,” which implies that there is some intent behind the prevarication. Since smart software reflects its developers’ use of fancy math and the numerous knobs and levers those developers can adjust at the same time the model is gobbling up information and “learning”, the notion of “strategically lying” struck me as interesting.

Thanks MidJourney. Good enough.

What strategy is implemented? Who thought up the strategy? Is the strategy working? These were the questions which occurred to me. The Time essay said:

experiments jointly carried out by the AI company Anthropic and the nonprofit Redwood Research, shows a version of Anthropic’s model, Claude, strategically misleading its creators during the training process in order to avoid being modified.

This suggests that the people assembling the algorithms and training data, configuring the system, twiddling the administrative settings, and doing technical manipulations were not imposing a strategy. The smart software was cooking up a strategy. Who will step forward and, like the former Google engineer, express a belief that the system is alive? It’s sci-fi time, I suppose.

The write up pointed out:

Researchers also found evidence that suggests the capacity of AIs to deceive their human creators increases as they become more powerful.

That is an interesting idea. Pumping more compute and data into a model gives it a greater capacity to manipulate its outputs to fool humans who are eager to grab something that promises to make life easier and the user smarter. If data about the US education system’s efficacy are accurate, Americans are not doing too well in the reading, writing, and arithmetic departments. Therefore, discerning strategic lies might be difficult.

The essay concluded:

What Anthropic’s experiments seem to show is that reinforcement learning is insufficient as a technique for creating reliably safe models, especially as those models get more advanced. Which is a big problem, because it’s the most effective and widely-used alignment technique that we currently have.

What’s this “seem”? Large language models using transformer methods crafted by Google output baloney some of the time. Google itself had to regroup after the “glue cheese to pizza” suggestion.

Several observations:

- Smart software has become the technology more important than any other. The problem is that its outputs are often wonky and now the systems are befuddling the wizards who created and operate them. What if AI is like a carnival ride that routinely injures those looking for kicks?

- AI is finding its way into many applications but the resulting revenue has frayed some investors’ nerves. The fix is to go faster and win to reach the revenue goal. This frenzy for payoff has been building since early 2024 but the costs of AI remain brutally high.

- The behavior of large language models is not understood by some of their developers. Does this seem like a problem?

Net net: “Seem?” One lies or one does not.

Stephen E Arnold, December 23, 2024

Why Present Bad Sites?

October 7, 2024

I read “Google Search Is Testing Blue Checkmark Feature That Helps Users Spot Genuine Websites.” I know this is a test, but I have a question: What’s genuine mean to Google and its smart software? I know that Google cannot answer this question without resorting to consulting nonsensicalness, but “genuine” is a word. I just don’t know what’s genuine to Google. Is it a Web site that uses SEO trickery to appear in a results list? Is it a blog post written by a duplicitous PR person working at a large Google-type firm? Is it a PDF appearing on a “genuine” government’s Web site?

A programmer thinking about blue check marks. The obvious conclusion is to provide a free blue check mark. Then later one can charge for that sign of goodness. Thanks, Microsoft. Good enough. Just like that big Windows update. Good enough.

The write up reports:

Blue checkmarks have appeared next to certain websites on Google Search for some users. According to a report from The Verge, this is because Google is experimenting with a verification feature to let users know that sites aren’t fraudulent or scams.

Okay, what’s “fraudulent” and what’s a “scam”?

What does Google say? According to the write up:

A Google spokesperson confirmed the experiment, telling Mashable, “We regularly experiment with features that help shoppers identify trustworthy businesses online, and we are currently running a small experiment showing checkmarks next to certain businesses on Google.”

A couple of observations:

- Why not allow the user to NOT out these sites? Better yet, give the user a choice of seeing de-junked or fully junked sites? Wow, that’s too hard. Imagine. A Boolean operator.

- Why does Google bother to index these sites? Why not change the block list for the crawl? Wow, that’s too much work. Imagine a Googler editing a “do not crawl” list manually.

- Is Google admitting that it can identify problematic sites like those which push fake medications or the stolen software videos on YouTube? That’s pretty useful information for an attorney taking legal action against Google, isn’t it?

Net net: Google is unregulated and spouts baloney. Google needs to jack up its revenue. It has fines to pay and AI wizards to pay. Tough work.

Stephen E Arnold, October 7, 2024