Japan Alleges Google Is a Monopoly Doing Monopolistic Things. What?

April 28, 2025

No AI, just the dinobaby himself.

The Google has been around a couple of decades or more. The company caught my attention, and I wrote three monographs for a now defunct publisher in a very damp part of England. These are now out of print, but their titles illustrate my perception of what I call affectionately Googzilla:

- The Google Legacy. I tried to explain why Google’s approach was going to define how future online companies built their technical plumbing. Yep, OpenAI in all its charm is a descendant of those smart lads, Messrs. Brin and Page.

- Google Version 2.0. I attempted to document the shift in technical focus from search relevance to a more invasive approach to using user data to generate revenue. The subtitle, I thought at the time, gave away the theme of the book: “The Calculating Predator.”

- Google: The Digital Gutenberg. I presented information about how Google’s “outputs,” from search results to more sophisticated content structures like profiles of people, places, and things, were preparing Google to reinvent publishing. I was correct because the new head of search (Prabhakar Version 2.0) is making little reports the big thing in search results. This will doom many small publications because Google just tells you what it wants you to know.

I wrote these monographs between 2002 and 2008. I must admit that my 300 page Enterprise Search Report sold more copies than my Google work. But I think my Google trilogy explained what Googzilla was doing. No one cared.

Now I learn “Japan orders Google to stop pushing smartphone makers to install its apps.”* Okay, a little slow on the trigger, but government officials in the land of the rising sun figured out that Google is doing what Google has been doing for decades.

Enlightenment arrives!

The article reports:

Japan has issued a cease-and-desist order telling Google to stop pressuring smartphone makers to preinstall its search services on Android phones. The Japan Fair Trade Commission said on Tuesday Google had unfairly hindered competition by asking for preferential treatment for its search and browser from smartphone makers in violation of the country’s anti-monopoly law. The antitrust watchdog said Google, as far back as July 2020, had asked at least six Android smartphone manufacturers to preinstall its apps when they signed the license for the American tech giant’s app store…

Google has been to this rodeo before. At the end of a legal process, Google will apologize, write a check, and move on down the road.

The question for me is, “How many other countries will see Google as a check writing machine?”

Quite a few in my opinion. The only problem is that these actions have taken many years to move from the thrill of getting a Google mouse pad to actual governmental action. (The best Google freebie was its flashing LED pin. Mine corroded and no longer flashed. I dumped it.)

Note for the * — Links to Microsoft “news” stories often go dead. Suck it up and run a query for the title using Google, of course.

Stephen E Arnold, April 28, 2025

Hey, We Know This Is Fake News: Sharing Secrets on Signal

April 24, 2025

Some government professionals allegedly blundered when they accidentally shared secret information via Signal with a reporter. The reporter, by the way, is not a person who wears a fedora with a command on it. To some, sharing close-hold information is an oopsie, but doing so with a non-hat wearing reporter is special. The BBC explained what the fallout will be from this mistake: “Why Is It A Problem If Yemen Strike Plans Shared On Signal?”

The Atlantic editor-in-chief Jeffrey Goldberg allegedly received close-hold information via a free messaging application. A government professional seemed to agree that the messages appeared to be authentic. Hey, a free application is a cost reducer. Plus, Signal is believed to be encrypted end to end and super secure to boot. Signal is believed to be the “whisper network” of Washington, D.C., an area known for its appropriate behavior and penchant for secrecy. (What was Wilbur Mills doing in the reflecting pool?)

While the messages are encrypted, bad actors (particularly those who may or may not be pals of the United States) allegedly can penetrate the Signal system. The Google Threat Intelligence Group noticed that Russia’s intelligence services have stepped up their hacking activities. Well, maybe or maybe not. Google is the leader in online advertising, but its “cyber security” expertise was acquired and may not be Googley yet.

The US government is not encouraging use of free messaging apps for sensitive information. That’s good. And the Pentagon is not too keen on a system not authorized to transmit non-public Department of Defense information. That’s good to know.

The whole sharing thing presents a potential downside for whoever is responsible for the misstep. The article says:

“Sensitive government communications are required to take place in a sealed-off room called a Sensitive Compartmentalized Information Facility (SCIF), where mobile phones are generally forbidden. The US government has other systems in place to communicate classified information, including the Joint Worldwide Intelligence Communications System (JWICS) and the Secret Internet Protocol Router (SIPR) network, which top government officials can access via specifically configured laptops and phones.”

Will there be consequences?

The article points out that the slip betwixt the cup and the lip may have violated two Federal laws:

“If confirmed, that would raise questions about two federal laws that require the preservation of government records: the Presidential Records Act and the Federal Records Act. "The law requires that electronic messages that take place on a non-official account are preserved, in some fashion, on an official electronic record keeping system," said Jason R Baron, a former director of litigation at the National Archives and Records Administration. Such regulations would cover Signal, he said.”

Hey, wasn’t the National Archives the agent interrupting normal business and holiday activities at a high profile resort residence in Florida recently? What does that outfit know about the best way to share sensitive information?

Whitney Grace, April 24, 2025

Israel Military: An Alleged Lapse via the Cloud

April 23, 2025

No AI, just a dinobaby watching the world respond to the tech bros.

Israel is one of the countries producing a range of intelware and policeware products. These have been adopted in a number of countries. Security-related issues involving software and systems in the country are on my radar. I noted the write up “Israeli Air Force Pilots Exposed Classified Information, Including Preparations for Striking Iran.” I do not know if the write up is accurate. My attempts to verify did not produce results which made me confident about the accuracy of the Haaretz article. Based on the write up, the key points seem to be:

- Another security lapse, possibly more severe than that which contributed to the October 2023 matter

- Classified information was uploaded to a cloud service, possibly Click Portal, associated with Microsoft’s Azure and the SharePoint content management system. Haaretz asserts: “… it [MSFT Azure SharePoint Click Portal] enables users to hold video calls and chats, create documents using Office applications, and share files.”

- Documents were possibly scanned using CamScanner, a Chinese mobile app rolled out in 2010. The app is available from the Russian version of the Apple App Store. A CamScanner app is available from the Google Play Store; however, I elected to not download the app.

Modern interfaces can confuse users. Lack of training rigor and dashboards can create a security problem for many users. Thanks, Open AI, good enough.

Haaretz’s story presents this information:

Officials from the IDF’s Information Security Department were always aware of this risk, and require users to sign a statement that they adhere to information security guidelines. This declaration did not prevent some users from ignoring the guidelines. For example, any user could easily find documents uploaded by members of the Air Force’s elite Squadron 69.

Regarding the China-linked CamScanner software, Haaretz offers this information:

… several files that were uploaded to the system had been scanned using CamScanner. These included a duty roster and biannual training schedules, two classified training presentations outlining methods for dealing with enemy weaponry, and even training materials for operating classified weapons systems.

Regarding security procedures, Haaretz states:

According to standard IDF regulations, even discussing classified matters near mobile phones is prohibited, due to concerns about eavesdropping. Scanning such materials using a phone is, all the more so, strictly forbidden…According to the Click Portal usage guidelines, only unclassified files can be uploaded to the system. This is the lowest level of classification, followed by restricted, confidential, secret and top secret classifications.

The military unit involved was allegedly Squadron 69 which could be the General Staff Reconnaissance Unit. The group might be involved in war planning and fighting against the adversaries of Israel. Haaretz asserts that other units’ sensitive information was exposed within the MSFT Azure SharePoint Click Portal system.

Several observations seem to be warranted:

- Overly complicated systems involving multiple products increase the likelihood of access control issues. Either operators are not well trained or the interfaces and options confuse an operator so errors result

- The training of those involved in sensitive information access and handling has to be made more rigorous despite the tendency to “go through the motions” and move on in many professionals undergoing specialized instruction

- The “brand” of Israel’s security systems and procedures has taken another hit with the allegations spelled out by Haaretz. October 2023 and now Squadron 69. This raises the question, “What else is not buttoned up and ready for inspection in the Israel security sector?”

Net net: I don’t want to accept this write up as 100 percent accurate. I don’t want to point the finger of blame at any one individual, government entity, or commercial enterprise. But security issues and Microsoft seem to be similar to ham and eggs and peanut butter and jelly from this dinobaby’s point of view.

Stephen E Arnold, April 23, 2025

The French Are Going After Enablers: Other Countries May Follow

April 16, 2025

Another post by the dinobaby. Judging by the number of machine-generated images of young female “entities” I receive, this 80-year-old must be quite fetching to some scammers with AI. Who knew?

Energized by the French judiciary’s ability to reason with Pavel Durov, the Paris Judicial Tribunal is going after what I call “enablers.” The term applies to the legitimate companies which make their online services available to customers. With the popularity of self-managed virtual machines, the online services firms receive an online order, collect a credit card, validate it, and let the remote customer set up and manage a computing resource.

Hey, the approach is popular and does not require expensive online service technical staff to do the handholding. Just collect the money and move forward. I am not sure the Paris Judicial Tribunal is interested in virtual anything. According to “French Court Orders Cloudflare to ‘Dynamically’ Block MotoGP Streaming Piracy”:

In the seemingly endless game of online piracy whack-a-mole, a French court has ordered Cloudflare to block several sites illegally streaming MotoGP. The ruling is an escalation of French blocking measures that began increasing their scope beyond traditional ISPs in the last few months of 2024. Obtained by MotoGP rightsholder Canal+, the order applies to all Cloudflare services, including DNS, and can be updated with ‘future’ domains.

The write up explains:

The reasoning behind the blocking request is similar to a previous blocking order, which also targeted OpenDNS and Google DNS. It is grounded in Article L. 333-10 of the French Sports Code, which empowers rightsholders to seek court orders against any outfit that can help to stop ‘serious and repeated’ sports piracy. This time, SECP’s demands are broader than DNS blocking alone. The rightsholder also requested blocking measures across Cloudflare’s other services, including its CDN and proxy services.

The approach taken by the French provides a framework which other countries can use to crack down on what seem to be legal online services. Many of these outfits expose one face to the public and regulators. Like the fictional Dr. Jekyll and Mr. Hyde, these online service firms make it possible for bad actors to perform a number of services to a special clientele; for example:

- Providing outlets for hate speech

- Hosting all or part of a Dark Web eCommerce site

- Allowing “roulette wheel” DNS changes for streaming sites distributing sports events

- Enabling services used by encrypted messaging companies whose clientele engages in illegal activity

- Hosting images of a controversial nature.

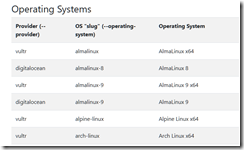

How can this be? Today’s technology makes it possible for an individual to do a search for a DMCA ignored advertisement for a service provider. Then one locates the provider’s Web site. Using a stolen credit card and the card owner’s identity, the bad actor signs up for a service from these providers:

This is a partial list of Dark Web hosting services compiled by SporeStack. Do you recognize the vendors Digital Ocean or Vultr? I recognized one.

These providers offer virtual machines and an API for interaction. With a bit of effort, the online providers have set up a vendor-customer experience that allows the online provider to say, “We don’t know what customer X is doing.” A cyber investigator has to poke around hunting for the “service” identified in the warrant in the hopes that the “service” will not be “gone.”

My view is that the French court may be ready to make life a bit less comfortable for some online service providers. The cited article asserts:

… the blockades may not stop at the 14 domain names mentioned in the original complaint. The ‘dynamic’ order allows SECP to request additional blockades from Cloudflare, if future pirate sites are flagged by French media regulator, ARCOM. Refusal to comply could see Cloudflare incur a €5,000 daily fine per site. “[Cloudflare is ordered to implement] all measures likely to prevent, until the date of the last race in the MotoGP season 2025, currently set for November 16, 2025, access to the sites identified above, as well as to sites not yet identified at the date of the present decision,” the order reads.

The US has a proposed site blocking bill as well.

But the French may continue to push forward using the “Pavel Durov action” as evidence that sitting on one’s hands and worrying about international repercussions is a waste of time. If companies like Amazon and Google operate in France, the French could begin tire kicking in the hopes of finding a bad wheel.

Mr. Durov believed he was not going to have a problem in France. He is a French citizen. He had the big time Kaminski firm represent him. He has lots of money. He has 114 children. What could go wrong? For starters, the French experience convinced him to begin cooperating with law enforcement requests.

Now France is getting some first hand experience with the enablers. Those who dismiss France as a land with too many different types of cheese may want to spend a few moments reading about French methods. Only one nation has some special French judicial savoir faire.

Stephen E Arnold, April 16, 2025

China Smart, US Dumb: The Fluid Mechanics Problem Solved

April 16, 2025

There are many puzzles that haven’t been solved, but with advanced technology and new ways of thinking some of them are finally getting answered. Two Chinese mathematicians working in the United States claim to have solved an old puzzle involving fluid mechanics, says the South China Morning Post: “Chinese Mathematicians Say They Have Cracked Century-Old Fluid Mechanics Puzzle.”

Fluid mechanics is a field of study used in engineering, and it is applied to aerodynamics, dam and bridge design, and hydraulic systems. The Chinese mathematicians are Deng Yu from the University of Chicago and Ma Xiao from the University of Michigan. They were joined by their international collaborator Zaher Hani, also of the University of Michigan. They published a paper to arXiv, a platform that posts research papers before they are peer reviewed. The team said they found a solution to “Hilbert’s sixth problem.”

What exactly did the mathematicians solve?

“At the intersection of physics and mathematics, researchers ask whether it is possible to establish physics as a rigorous branch of mathematics by taking microscopic laws as axioms and proving macroscopic laws as theorems. Axioms are mathematical statements that are assumed to be true, while a theorem is a logical consequence of axioms.

Hilbert’s sixth problem addresses that challenge, according to a post by Ma on Wednesday on Zhihu, a Quora-like Chinese online content platform.”

David Hilbert proposed this as one of twenty-three problems he presented in 1900 at the International Congress of Mathematicians. China is taking credit for these mathematicians and their work. China wants to point out how smart it is, while it likes to poke fun at the “dumb” United States. Let’s make our own point that these Chinese mathematicians are living and working in the United States.

Whitney Grace, April 16, 2025

The UK, the Postal Operation, and Computers

April 11, 2025

According to the Post Office Scandal, there’s a new amendment in Parliament that questions how machines work: “Proposed Amendment To Legal Presumption About The Reliability Of Computers.”

Journalist Tom Webb specializes in data protection and he informed author Nick Wallis about an amendment to the Data (Use and Access) Bill that is running through the British Parliament. The amendment questions:

“It concerns the legal presumption that “mechanical instruments” (which seems to be taken to include computer networks) are working properly if they look to the user like they’re working properly.”

Wallis has chronicled the problems associated with machines appearing to work properly since barrister Stephen Mason reported the issue to him. Barrister Mason is fighting on behalf of the British Post Office Scandal (which is another story) about this flawed thinking and its legal implication. Here’s more on what the problem is:

“Although the “mechanical instruments” presumption has never, to the best of my knowledge, been quoted in any civil or criminal proceedings involving a Subpostmaster, it has been said to effectively reverse the burden of proof on anyone who might be convicted using digital evidence. The logic being if the courts are going to assume a computer was working fine at the time an offence allegedly occurred because it looked like it was working fine, it is then down to the defendant to prove that it was not working fine. This can be extremely difficult to do (per the Seema Misra/Lee Castleton cases).”

The proposed amendment uses legal jargon to do the following:

“This amendment overturns the current legal assumption that evidence from computers is always reliable which has contributed to miscarriages of justice including the Horizon Scandal. It enables courts to ask questions of those submitting computer evidence about its reliability.”

This explanation means that a blinking light and a machine that is doing something do not prove the computer is working correctly. Remarkable.

Whitney Grace, April 11, 2025

China and AI: Moving Ahead?

April 10, 2025

There’s a longstanding rivalry between the United States and China. The rivalry extends to everything from government and the economy to GDP and technology. There have been some recent technology developments in this heated East and West rivalry, says The Independent in the article, “Has China Just Built The World’s First Human-Level AI?”

Deepseek is an AI start-up that’s been compared to OpenAI with its AI models. The clincher is that Deepseek’s models are more advanced than OpenAI’s because they perform better and use fewer resources. Another Chinese AI company claims it has made another technology breakthrough, and it’s called “Manus.” Manus is supposedly the world’s first fully autonomous AI agent that can perform complex tasks without human guidance. These tasks include creating a podcast, buying property, or booking travel plans.

Yichao Ji is the head of Manus’s AI development. He said that Manus is the next AI evolution and that it’s the beginning of artificial general intelligence (AGI). AGI is AI that rivals or surpasses human intelligence. Yichao Ji said:

“ ‘This isn’t just another chatbot or workflow, it’s a truly autonomous agent that bridges the gap between conception and execution,’ he said in a video demonstrating the AI’s capabilities. ‘Where other AI stops at generating ideas, Manus delivers results. We see it as the next paradigm of human-machine collaboration.’”

Meanwhile Dario Amodei’s company designed Claude, the ChatGPT rival, and he predicted that AGI would be available as soon as 2026. He wrote an essay in October 2024 with the following statement:

“ ‘It can engage in any actions, communications, or remote operations,’ he wrote, ‘including taking actions on the internet, taking or giving directions to humans, ordering materials, directing experiments, watching videos, making videos, and so on. It does all of these tasks with a skill exceeding that of the most capable humans in the world.’”

These are tasks that Manus can do, according to the AI’s Web site. However, when Manus was tested, users caught it making mistakes that most humans would spot.

Manus’s team is grateful for the insight into its AI’s flaws and will work to deliver a better AGI. The experts are viewing Manus with a more critical eye, because Manus is not delivering the same results as its American counterparts.

It appears that the US is still developing higher performing AI that will become the basis of AGI. Congratulations to the red, white, and blue!

Whitney Grace, April 10, 2025

Why Worry about TikTok?

March 21, 2025

We have smart software, but the dinobaby continues to do what 80 year olds do: Write the old-fashioned human way. We did give up clay tablets for a quill pen. Works okay.

I hope this news item from WCCF Tech is wildly incorrect. I have a nagging thought that it might be on the money. “Deepseek’s Chatbot Was Being Used By Pentagon Employees For At Least Two Days Before The Service Was Pulled from the Network; Early Version Has Been Downloaded Since Fall 2024” is the headline I noted. I find this interesting.

The short article reports:

A more worrying discovery is that Deepseek mentions that it stores data on servers in China, possibly presenting a security risk when Pentagon employees started playing around with the chatbot.

And adds:

… employees were using the service for two days before this discovery was made, prompting swift action. Whether the Pentagon workers have been reprimanded for their recent act, they might want to exercise caution because Deepseek’s privacy policy clearly mentions that it stores user data on its Chinese servers.

Several observations:

- This is a nifty example of an insider threat. I thought cyber security services blocked this type of to and fro from government computers on a network connected to public servers.

- The reaction time is either months (since the fall of 2024) or 48 hours. My hunch is that it is the months long usage of an early version of the Chinese service.

- Which “manager” is responsible? Sorting out which vendors’ software did not catch this and which individual’s unit dropped the ball will be interesting and probably unproductive. Is it in any authorized vendor’s interest to say, “Yeah, our system doesn’t look for phoning home to China but it will be in the next update if your license is paid up for that service”? Will a US government professional say, “Our bad”?

Net net: We have snow removal services that don’t remove snow. We have aircraft crashing in sight of government facilities. And we have Chinese smart software running on US government systems connected to the public Internet. Interesting.

Stephen E Arnold, March 21, 2025

An Intel Blind Spot in Australia: Could an October-Type Event Occur?

March 17, 2025

Yep, another dinobaby original.

I read a “real” news article (I think) in the UK Telegraph. The story “How Chinese Warships Encircled Australia without Canberra Noticing” surprised me. The write up reports:

In a highly unusual move, three Chinese naval vessels dubbed Task Group 107 – including a Jiangkai-class frigate, a Renhai-class cruiser and a Fuchi-class replenishment vessel – were conducting exercises in Australia’s exclusive economic zone.

The date was February 21, 2025. The ships were 300 miles from Australia. What’s the big deal?

According to the write up:

Anthony Albanese, Australia’s prime minister, downplayed the situation, while both the Australian Defence Force and the New Zealand Navy initially missed that the exercise was even happening.

Let me offer several observations based on what may be a mostly accurate “real” news report:

- Australia like Israel is well equipped with home grown and third-party intelware. If the write up’s content is accurate, none of these intelware systems provided signals about the operation before, during, and after the report of the live fire drill

- As a member of Five Eyes, a group about which I know essentially nothing, Australia has access to assorted intelligence systems, including satellites. Obviously the data were incomplete, ignored, or not available to analysts or Preligens-type systems. Note: Preligens is now owned by Safran

- What remediating actions are underway in Australia? To be fair, the “real” news outfit probably did not ask this question, but it seems a reasonable one to address. Someone was responsible, so what’s the fix?

Net net: Countries with sophisticated intelligence systems are getting some indications that these systems may not live up to the marketing hyperbole nor the procurement officials’ expectations of these systems. Israel suffered in many ways because of its 9/11 in October. One hopes that Australia can take this allegedly true incident involving China to heart and make changes.

Stephen E Arnold, March 17, 2025

FOGINT: France Gears Up for More Encrypted Message Access

March 12, 2025

Yep, another dinobaby original.

Buoyed by the success of the Pavel Durov litigation, France appears to be getting ready to pursue Signal, the Zuck WhatsApp, and the Switzerland-based Proton Mail. The actions seem to lie in the future. But those familiar with the mechanisms of French investigators may predict that information gathering began years ago. With ample documentation, the French legislators with communication links to the French government seem to be ready to require Pavel-ovian responses to requests for user data.

“France Pushes for Law Enforcement Access to Signal, WhatsApp, and Encrypted Email” reports:

An amendment to France’s proposed “Narcotraffic” bill, which is passing through the National Assembly in the French Parliament, will require tech companies to hand over decrypted chat messages of suspected criminals within 72 hours. The law, which aims to provide French law enforcement with stronger powers to combat drug trafficking, has raised concerns among tech companies and civil society groups that it will lead to the creation of “backdoors” in encrypted services that will be exploited by cyber criminals and hostile nation-states. Individuals that fail to comply face fines of €1.5m while companies risk fines of up to 2% of their annual world turnover if they fail to hand over encrypted communications demanded by French law enforcement.

The practical implications of these proposals are two-fold. First, the proposed legislation provides an alert to the identified firms that France is going to take action. The idea is that the services know what’s coming. The French investigators’ delight at recalcitrant companies proactively cooperating will probably be beneficial for the companies. Mr. Durov has learned that cooperation makes it possible for him to envision a future that does not include a stay at the overcrowded and dangerous prison just 16 kilometers from his hotel in Paris. The second is to keep up the momentum. Other countries have been indifferent to or unwilling to take on certain firms which have blown off legitimate requests for information about alleged bad actors. The French can be quite stubborn and have a bureaucracy that almost guarantees a less than amusing experience for the American outfits. The Swiss have experience in dealing with France, and I anticipate a quieter approach to Proton Mail.

The write up includes this statement:

opponents of the French law argue that breaking an encryption application that is allegedly designed for use by criminals is very different from breaking the encryption of chat apps, such as WhatsApp and Signal, and encrypted emails used by billions of people for non-criminal communications. “We do not see any evidence that the French proposal is necessary or proportional. To the contrary, any backdoor will sooner or later be exploited…

I think the statement is accurate. Information has a tendency to leak. But consider the impact on Telegram. That entity is in danger of becoming irrelevant because of France’s direct action against the Teflon-coated Russian Pavel Durov. Cooperation is not enough. The French action seems to put Telegram into a credibility hole, and it is not clear if the organization’s overblown crypto push can stave off user defection and slowing user growth.

Will the French law conflict with European Union and other EU states’ laws? Probably. My view is that the French will adopt the position, “C’est dommage en effet.” The Telegram “problem” is not completely resolved, but France is willing to do what other countries won’t. Is the French Foreign Legion operating in Ukraine? The French won’t say, but some of those Telegram messages are interesting. Oui, c’est dommage. Tip: Don’t fool around with a group of French Foreign Legion fellows even if you are wearing an EU flag T shirt and carrying a volume of EU laws, rules, regulations, and policies.

How will this play out? How would I know? I work in an underground office in rural Kentucky. I don’t think our local grocery store carries French cheese. However, I can offer a few tips to executives of the firms identified in the article:

- Do not go to France

- If you do go to France, avoid interactions with government officials

- If you must interact with government officials, make sure you have a French avocat or avocate lined up.

France seems so wonderful; it has great food; it has roads without billboards; and it has a penchant for direct action. Examples range from French Guiana to Western Africa. No, the “real” news doesn’t cover these activities. And executives of Signal and the Zuckbook may want to consider their travel plans. Avoid the issues Pavel Durov faces and may have resolved this calendar year. Note the word “may.”

Stephen E Arnold, March 12, 2025