Historical Revisionism: Twitter and Wikipedia

March 24, 2021

I wish I could recall the name of the slow-talking, wild-eyed professor who lectured about Mr. Stalin’s desire to have the history of the Soviet Union modified. The tendency was evident early in his career: Ioseb Besarionis dze Jughashvili became Stalin, so fiddling with received wisdom verified by Ivory Tower types should come as no surprise.

Now we have Google and the right to be forgotten. As awkward as deleting pointers to content may be, digital information invites “reeducation”.

I learned in “Twitter to Appoint Representative to Turkey” that the extremely positive social media outfit will interact with the country’s government. The idea is to make sure content is just A-Okay. Changing tweets for money is a pretty good idea. Coordinating the filtering of information with a nation state is an even better one. But Apple and China seem to be finding a path forward. Maybe Apple in Russia will be a similar success.

A much more interesting approach to shaping reality is alleged in “Non-English Editions of Wikipedia Have a Misinformation Problem.” Wikipedia has a stellar track record of providing fact-rich, neutral information, I believe. This “real news” story states:

The misinformation on Wikipedia reflects something larger going on in Japanese society. These WWII-era war crimes continue to affect Japan’s relationships with its neighbors. In recent years, as Japan has seen an increase in the rise of nationalism, then–Prime Minister Shinzo Abe argued that there was no evidence of Japanese government coercion in the comfort women system, while others tried to claim the Nanjing Massacre never happened.

I am interested in these examples because each provides some color to one of my information “laws”. I have dubbed these “Arnold’s Precepts of Online Information.” Here’s the specific law which provides a shade tree for these examples:

Online information invites revisionism.

Stated another way, when “facts” are online, these are malleable, shapeable, and subjective.

When one runs a query on swisscows.com and then the same query on bing.com, ask:

Are these services indexing the same content?

The answer for me is, “No.” Filters, decisions about what to index, and update calendars shape the reality depicted online. Primary sources are a fine idea, but when those sources are shaped as well, what does one do?
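One rough way to put a number on the swisscows.com versus bing.com question is to save the top result URLs each service returns for the same query and measure how much the two lists overlap. The sketch below assumes the URLs have already been copied by hand; the lists are hypothetical placeholders, and Jaccard similarity (shared URLs divided by all distinct URLs) is just one reasonable yardstick, not anything either service publishes.

```python
# Compare the result sets two search services return for the same query.
# The URL lists below are hypothetical placeholders; paste in real results.

def normalize(url):
    """Lowercase and drop a trailing slash so trivial variants match."""
    return url.strip().lower().rstrip("/")

def jaccard(results_a, results_b):
    """Shared URLs divided by all distinct URLs (0.0 = disjoint, 1.0 = identical)."""
    a = {normalize(u) for u in results_a}
    b = {normalize(u) for u in results_b}
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

service_a = [
    "https://example.org/history/",
    "https://example.com/archive",
    "https://example.net/essay",
]
service_b = [
    "https://example.org/history",
    "https://example.edu/lecture",
    "https://example.net/essay",
]

only_in_a = {normalize(u) for u in service_a} - {normalize(u) for u in service_b}
print(f"Overlap: {jaccard(service_a, service_b):.2f}")  # Overlap: 0.50
print("Only in A:", sorted(only_in_a))
```

A score well below 1.0 for the same query is one concrete sign that the two services are not indexing (or not surfacing) the same content.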

The answer is like one of those Borges stories. Deleting and shaping content is more environmentally friendly than burning written records. A Python script works with less smoke.

Stephen E Arnold, March 24, 2021

GenX Indexing: Newgen Designs ID Info Extraction Software

March 9, 2021

As COVID-19 vaccines roll out, countries are discussing vaccination documentation and how to include it with other identification paperwork. The World Health Organization does have some standards for vaccination documentation, but they are not universally applied. Adding yet another document for international travel makes things even more confusing. News Patrolling has a headline about new software that could make extracting ID information easier: “Newgen Launches AI And ML-Based Identity Document Extraction And Redaction Software.”

Newgen Software, which provides low-code digital automation platforms, developed new ID extraction software: Intelligent IDXtract. Intelligent IDXtract extracts required information from identity documents and allows organizations to use the information for multiple purposes across industries. These include KYC verification, customer onboarding, and employee information management.

The write up describes how Intelligent IDXtract works:

“Intelligent IDXtract uses a computer vision-based cognitive model to identify the presence, location, and type of one or more entities on a given identity document. The entities can include a person’s name, date of birth, unique ID number, and address, among others. The software leverages artificial intelligence and machine learning, powered by computer vision techniques and rule-based capabilities, to extract and redact entities per business requirements.”
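The “rule-based capabilities” half of that description can be illustrated with a small sketch. This is not Newgen’s code and skips the computer vision step entirely: it assumes the document has already been converted to text by OCR, and the field labels, formats, and patterns are hypothetical. Each rule finds one entity and records where it sits, so the same positions can drive redaction.

```python
import re

# Hypothetical OCR output from an ID card; labels and formats are assumptions.
OCR_TEXT = """NAME: JANE Q SAMPLE
DOB: 1985-04-12
ID NO: X1234567
ADDRESS: 12 EXAMPLE ST, SPRINGFIELD"""

# One rule (regular expression) per entity type. These patterns only fit the
# sample above; a real system would need rules or models per document template.
RULES = {
    "name": re.compile(r"^NAME:\s*(?P<value>.+)$", re.MULTILINE),
    "date_of_birth": re.compile(r"^DOB:\s*(?P<value>\d{4}-\d{2}-\d{2})$", re.MULTILINE),
    "id_number": re.compile(r"^ID NO:\s*(?P<value>[A-Z]\d{7})$", re.MULTILINE),
    "address": re.compile(r"^ADDRESS:\s*(?P<value>.+)$", re.MULTILINE),
}

def extract(text):
    """Return {entity: (value, start, end)} for every rule that matches."""
    found = {}
    for entity, pattern in RULES.items():
        match = pattern.search(text)
        if match:
            found[entity] = (match.group("value"), match.start("value"), match.end("value"))
    return found

def redact(text, entities, keep=()):
    """Mask each extracted value with asterisks unless its entity is in keep."""
    chars = list(text)
    for entity, (_, start, end) in entities.items():
        if entity not in keep:
            chars[start:end] = "*" * (end - start)  # same length, so offsets stay valid
    return "".join(chars)

entities = extract(OCR_TEXT)
print(entities["date_of_birth"][0])  # 1985-04-12
print(redact(OCR_TEXT, entities, keep=("name",)))
```

Keeping the character offsets alongside each value is the design choice that lets extraction and redaction share one pass over the document.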

The key features of the software include seamless integration with business applications, entity recognition and localization, language-independent localization and redaction of entities, trainable machine learning for customized models, automatic recognition, interpretation, and location, and support for image capture variations.

Hopefully Intelligent IDXtract will streamline processes that require identity documentation as well as vaccine documentation.

Whitney Grace, March 9, 2021

The Semantic Web Identity Crisis? More Like Intellectual Cotton Candy?

February 22, 2021

“The Semantic Web Identity Crisis: In Search of the Trivialities That Never Were” is a 5,700-word essay about confusion. The write up asserts that those engaged in Semantic Web research have an “ill defined sense of identity.” What I liked about the essay is its admission that semantic progress has been made but that moving from the first 80 percent of the journey through the last 20 percent is going to be difficult. I would add that making the Semantic Web “work” may be impossible.

The write up explains:

In this article, we make the case for a return to our roots of “Web” and “semantics”, from which we as a Semantic Web community—what’s in a name—seem to have drifted in search for other pursuits that, however interesting, perhaps needlessly distract us from the quest we had tasked ourselves with. In covering this journey, we have no choice but to trace those meandering footsteps along the many detours of our community—yet this time around with a promise to come back home in the end.

Does the write up “come back home”?

In order to succeed, we will need to hold ourselves to a new, significantly higher standard. For too many years, we have expected engineers and software developers to take up the remaining 20%, as if they were the ones needing to catch up with us. Our fallacy has been our insistence that the remaining part of the road solely consisted of code to be written. We have been blind to the substantial research challenges we would surely face if we would only take our experiments out of our safe environments into the open Web. Turns out that the engineers and developers have moved on and are creating their own solutions, bypassing many of the lessons we already learned, because we stubbornly refused to acknowledge the amount of research needed to turn our theories into practice. As we were not ready for the Web, more pragmatic people started taking over.

From my point of view, it looks as if the Semantic Web thing is like a flashy yacht with its rudders and bow thrusters stuck in one position. The boat goes in circles. That would drive the passengers and crew bonkers.

Stephen E Arnold, February 22, 2021

Online Axiom: Distorted Information Is Part of the Datasphere

January 28, 2021

I read a 4,300-word post called “Nextdoor Is Quietly Replacing the Small-Town Paper” about an online social network aimed at “neighbors.” Yep, just like the one Mr. Rogers lived in for 31 years.

A world that exists only in upscale communities, populated by down-home folks with money and alarm systems.

The write up explains:

Nextdoor is an evolution of the neighborhood listserv for the social media age, a place to trade composting tips, offer babysitting services, or complain about the guy down the street who doesn’t clean up his dog’s poop. Like many neighborhood listservs, it also has increasingly well-documented issues with racial profiling, stereotyping of the homeless, and political ranting of various stripes, including QAnon. But Nextdoor has gradually evolved into something bigger and more consequential than just a digital bulletin board: In many communities, the platform has begun to step into roles once filled by America’s local newspapers.

As I read this, I recalled that Google wants to set up its own news operation in Australia even as the GOOG signs deals with independent publishers. Maybe the mom-and-pop online advertising company should target Nextdoor. Imagine the Google Local ads which could be hosed into this service. Plus, Nextdoor already disappears certain posts and features one of the wonkiest interfaces for displaying comments and locating items offered for free or for sale. Google-ize it?

The article gathers some examples of how the at-homers use Nextdoor to communicate. Information, disinformation, and misinformation complement quasi-controversial discussions. But if one gets too frisky, then the “seed” post is deleted from public view.

I have pointed out in my lectures (when I was giving them, before the Covid thing) that local and personal information is a goldmine for a number of commercial and government entities.

If you know zero about Nextdoor, check out the long, long article hiding happily behind a “register to read” paywall. On the other hand, sign up and check out the service.

Google, if you were a good neighbor, you would be looking at taking Nextdoor to Australia to complement the new play of “Google as a news publisher.” A “real” news outfit. Maybe shaped information is an online “law” describing what’s built into interactions which are not intermediated?

Stephen E Arnold, January 28, 2021

Mobile and Social Media Users: Check Out the Utility of Metadata

January 15, 2021

Policeware vendors once commanded big, big bucks to match a person of interest to a location. Over the last decade, prices have come down. Some useful products cost a fraction of the industrial-strength, incredibly clumsy tools. If you are thinking about the hassle of manipulating data in IBM or Palantir products, you are in the murky field of prediction. I have not named the products which I think are the winners of this particular race.

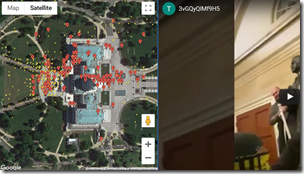

Source: https://thepatr10t.github.io/yall-Qaeda/

The focus of this write up is the useful information derived from the deplatformed Parler social media outfit. An enterprising individual named Patri10tic performed the sort of trick which Geofeedia made semi-famous. You can check the map placing specific Parler users in particular locations based on their messages at the source link above. What’s the time frame? The unusual protest at the US Capitol.

The point of this short post is different. I want to highlight several points:

- Metadata can be more useful than the content of a particular message or voice call.

- Metadata can be mapped through time, creating a nifty path of an individual’s movements.

- Metadata can be cross-correlated with other data. (If you attended one of my Amazon policeware lectures, the cross-correlation figures prominently.)

- Metadata can be analyzed in more than two dimensions.
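The second and third points can be made concrete with a few lines of code. The sketch below is a hypothetical illustration, not the Patri10tic method or any vendor’s product: the records are made up (not taken from the Parler data), and the thresholds of 100 meters and 10 minutes are arbitrary assumptions. Sorting one account’s records by time yields a movement path; comparing records across accounts in space and time is a simple form of cross correlation, with time serving as the dimension beyond latitude and longitude.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt

# Made-up metadata records: (account, ISO timestamp, latitude, longitude).
# Illustrative only; not taken from the Parler data.
POSTS = [
    ("user_a", "2021-01-06T12:05:00", 40.0000, -80.0000),
    ("user_a", "2021-01-06T09:10:00", 40.0100, -80.0300),
    ("user_b", "2021-01-06T12:07:00", 40.0001, -80.0002),
    ("user_a", "2021-01-06T14:30:00", 40.0200, -80.0500),
]

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres, treating the Earth as a sphere."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))

def paths(posts):
    """Group records by account and sort each group by time: a movement path."""
    by_account = defaultdict(list)
    for account, ts, lat, lon in posts:
        by_account[account].append((datetime.fromisoformat(ts), lat, lon))
    return {account: sorted(points) for account, points in by_account.items()}

def co_located(posts, max_km=0.1, max_gap=timedelta(minutes=10)):
    """Cross-correlate accounts: pairs seen within max_km and max_gap of each other."""
    points = [(a, datetime.fromisoformat(t), lat, lon) for a, t, lat, lon in posts]
    hits = []
    for i, (a1, t1, lat1, lon1) in enumerate(points):
        for a2, t2, lat2, lon2 in points[i + 1:]:
            close_in_time = abs(t1 - t2) <= max_gap
            close_in_space = haversine_km(lat1, lon1, lat2, lon2) <= max_km
            if a1 != a2 and close_in_time and close_in_space:
                hits.append((a1, a2, min(t1, t2)))
    return hits

for account, path in paths(POSTS).items():
    print(account, [(t.strftime("%H:%M"), lat, lon) for t, lat, lon in path])
print(co_located(POSTS))
```

Nothing here requires more than a laptop and the standard library, which is the point about cost made below.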

To sum up, I want to remind journalists that this type of data detritus has enormous value. That is the reason third parties attempt to bundle data together and provide authorized users with access to them.

What’s this have to do with policeware? From my point of view, almost anyone can replicate, from a laptop at an outdoor table near a coffee shop, what systems costing seven figures a year or more do.

Policeware vendors want to charge a lot. The Parler analysis demonstrates that there are many uses for low- or zero-cost geo manipulations.

Stephen E Arnold, January 15, 2021

Semantic Scholar: Mostly Useful Abstracting

December 4, 2020

A new search engine specifically tailored to scientific literature uses a highly trained algorithm. MIT Technology Review reports, “An AI Helps You Summarize the latest in AI” (and other computer science topics). Semantic Scholar generates tl;dr sentences for each paper on an author’s page. Literally—they call each summary, and the machine-learning model itself, “TLDR.” The work was performed by researchers at the Allen Institute for AI and the University of Washington’s Paul G. Allen School of Computer Science & Engineering.

AI-generated summaries are either extractive, picking a sentence out of the text to represent the whole, or abstractive, generating a new sentence. Obviously, an abstractive summary would be more likely to capture the essence of a whole paper—if it were done well. Unfortunately, due to limitations of natural language processing, most systems have relied on extractive algorithms. This model, however, may change all that. Writer Karen Hao tells us:
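To make the extractive side of that distinction concrete, here is a deliberately simple sketch. It is not how TLDR works (TLDR is abstractive and transformer-based); it is a classic word-frequency heuristic, and the stopword list and sample text are assumptions for illustration. Whatever it returns is a sentence copied verbatim from the input, which is exactly the limitation abstractive models try to overcome.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list; an assumption, not a standard list.
STOPWORDS = {"a", "an", "and", "in", "is", "it", "of", "on", "our", "the", "to", "we"}

def content_words(sentence):
    """Lowercase alphabetic tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]

def extractive_summary(text):
    """Return the existing sentence whose content words are most frequent overall."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    return max(sentences, key=score)

TEXT = (
    "We study summarization of scientific papers. "
    "Summarization models compress long papers into short summaries. "
    "Our lab is in Seattle."
)
print(extractive_summary(TEXT))  # We study summarization of scientific papers.
```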

“How they did it: AI2’s abstractive model uses what’s known as a transformer—a type of neural network architecture first invented in 2017 that has since powered all of the major leaps in NLP, including OpenAI’s GPT-3. The researchers first trained the transformer on a generic corpus of text to establish its baseline familiarity with the English language. This process is known as ‘pre-training’ and is part of what makes transformers so powerful. They then fine-tuned the model—in other words, trained it further—on the specific task of summarization. The fine-tuning data: The researchers first created a dataset called SciTldr, which contains roughly 5,400 pairs of scientific papers and corresponding single-sentence summaries. To find these high-quality summaries, they first went hunting for them on OpenReview, a public conference paper submission platform where researchers will often post their own one-sentence synopsis of their paper. This provided a couple thousand pairs. The researchers then hired annotators to summarize more papers by reading and further condensing the synopses that had already been written by peer reviewers.”

The team went on to add a second dataset of 20,000 papers and their titles. They hypothesized that, as titles are themselves a kind of summary, this would refine the model further. They were not disappointed. The resulting summaries average 21 words to summarize papers that average 5,000 words, a compression of 238 times. Compare this to the next best abstractive option at 36.5 times and one can see TLDR is leaps ahead. But are these summaries as accurate and informative? According to human reviewers, they are even more so. We may just have here a rare machine learning model that has received enough training on good data to be effective.
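The compression figure follows directly from the two averages the article reports; the second ratio is simply our own arithmetic comparing TLDR with the next best abstractive option cited above:

```latex
\text{compression} = \frac{\text{average paper length}}{\text{average summary length}}
  = \frac{5000\ \text{words}}{21\ \text{words}} \approx 238,
\qquad
\frac{238}{36.5} \approx 6.5
```

In other words, TLDR squeezes papers roughly six and a half times harder than the runner-up.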

The Semantic Scholar team continues to refine the software, training it to summarize other types of papers and to reduce repetition. They also aim to have it summarize multiple documents at once—good for researchers in a new field, for example, or policymakers being briefed on a complex issue. Stay tuned.

Cynthia Murrell, December 4, 2020

Smarsh Acquires Digital Reasoning

November 26, 2020

On its own website, communications technology firm Smarsh crows, “Smarsh Acquires Digital Reasoning, Combining Global Leadership in Artificial Intelligence and Machine Learning with Market Leading Electronic Communications Archiving and Supervision.” It is worth noting that Digital Reasoning was founded by a college philosophy senior, Tim Estes, who saw the future in machine learning back in 2000. First it was a search system, then an intelligence system, and now part of an archiving system. The company has been recognized among Fast Company’s Most Innovative Companies for AI and recently received the Frost & Sullivan Product Leadership Award in the AI Risk Surveillance Market. Smarsh was smart to snap it up. The press release tells us:

“The transaction brings together the leadership of Smarsh in digital communications content capture, archiving, supervision and e-discovery, with Digital Reasoning’s leadership in advanced AI/ML powered analytics. The combined company will enable customers to spot risks before they happen, maximize the scalability of supervision teams, and uncover strategic insights from large volumes of data in real-time. Smarsh manages over 3 billion messages daily across email, social media, mobile/text messaging, instant messaging and collaboration, web, and voice channels. The company has unparalleled expertise in serving global financial institutions and US-based wealth management firms across both the broker-dealer and registered investment adviser (RIA) segments.”

Dubbing the combined capabilities “Communications Intelligence,” Smarsh’s CEO Brian Cramer promises Digital Reasoning’s AI and machine learning contributions will help clients better manage risk and analyze communications for more profitable business intelligence. Estes adds:

“In this new world of remote work, a company’s digital communications infrastructure is now the most essential one for it to function and thrive. Smarsh and Digital Reasoning provide the only validated and complete solution for companies to understand what is being said in any digital channel and in any language. This enables them to quickly identify things like fraud, racism, discrimination, sexual harassment, and other misconduct that can create substantial compliance risk.”

See the write-up for its list of the upgraded platform’s capabilities. Smarsh was founded in 2001 by financial services professional Stephen Marsh (or S. Marsh). The company has made Gartner’s list of Leaders in Enterprise Information Archiving for six years running, among other accolades. Smarsh is based in Portland, Oregon, and maintains offices in several major cities worldwide.

Our take? Search plus very dense visualization could not push some government applications across the warfighting finish line. Smarsh on!

Cynthia Murrell, November 26, 2020

AI Tech Used to Index and Search Joint Pathology Center Archive

November 23, 2020

The US’s Joint Pathology Center is the proud collector of the world’s largest group of preserved human tissue samples. Now, with help from University of Waterloo’s KIMIA Lab in Ontario, Canada, the facility will soon be using AI to index and search its digital archive of samples. ComputerUser announces the development in, “Artificial Intelligence Search Technology Will Be Used to Help Modernize US Federal Pathology Facility.”

As happy as we are to see the emergence of effective search solutions, we are also tickled by the names KIMIA used: the image search engine is commercialized under the name Lagotto, and the image retrieval tech is dubbed Yottixel. The write-up tells us:

“Yottixel will be used to enhance biomedical research for infectious diseases and cancer, enabling easier data sharing to facilitate collaboration and medical advances. The JPC is the leading pathology reference centre for the US federal government and part of the US Defense Health Agency. In the last century, it has collected more than 55 million glass slides and 35 million tissue block samples. Its data spans every major epidemic and pandemic, and was used to sequence the Spanish flu virus of 1918. It is expected that the modernization also helps to better understand and fight the COVID-19 pandemic. … Researchers at Waterloo have obtained promising diagnostic results using their AI search technology to match digital images of tissue samples in suspected cancer cases with known cases in a database. In a paper published earlier this year, a validation project led by Kimia Lab achieved accurate diagnoses for 32 kinds of cancer in 25 organs and body parts.”
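The “match a new case against known cases” idea described above is a form of content-based image retrieval. The sketch below is a generic nearest-neighbor illustration, not Yottixel’s actual method: it assumes each slide has already been reduced to a short feature vector (real systems derive far larger representations from the images), and the archive entries and labels are made up. Search ranks archived cases by cosine similarity; a suggested diagnosis is a majority vote over the closest matches.

```python
from collections import Counter
from math import sqrt

# Hypothetical archive: each slide reduced to a tiny feature vector plus its
# confirmed diagnosis. Vectors and labels are made up for illustration.
ARCHIVE = [
    ([0.9, 0.1, 0.0], "carcinoma"),
    ([0.8, 0.2, 0.1], "carcinoma"),
    ([0.1, 0.9, 0.2], "benign"),
    ([0.0, 0.8, 0.3], "benign"),
    ([0.2, 0.1, 0.9], "lymphoma"),
]

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def search(query, archive, k=3):
    """Return the k archived cases most similar to the query vector."""
    return sorted(archive, key=lambda case: cosine(query, case[0]), reverse=True)[:k]

def suggest_diagnosis(query, archive, k=3):
    """Majority vote over the diagnoses of the k nearest matches."""
    votes = Counter(label for _, label in search(query, archive, k))
    return votes.most_common(1)[0][0]

new_case = [0.85, 0.15, 0.05]
print([label for _, label in search(new_case, ARCHIVE, k=2)])
print(suggest_diagnosis(new_case, ARCHIVE))
```

A linear scan like this is fine for a toy; an archive of tens of millions of slides would need an approximate nearest-neighbor index instead.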

Short for the Laboratory for Knowledge Inference in Medical Image Analysis, KIMIA Lab focuses on mass image data in medical archives using machine learning schemes. Established in 2013 and hosted by the University of Waterloo’s Faculty of Engineering, the program trains students and hosts international visiting scholars.

Cynthia Murrell, November 23, 2020

Defeating Facial Recognition: Chasing a Ghost

August 12, 2020

The article hedges. Check the title: “This Tool Could Protect Your Photos from Facial Recognition.” Notice the “could”. The main idea is that people do not want their photos analyzed and indexed with the name, location, state of mind, and other index terms. I am not so sure, but the write up explains with that “could” coloring the information:

The software is not intended to be just a one-off tool for privacy-loving individuals. If deployed across millions of images, it would be a broadside against facial recognition systems, poisoning the accuracy of the data sets they gather from the Web.

So facial recognition = bad. Screwing up facial recognition = good.

There’s more:

“Our goal is to make Clearview go away,” said Dr Ben Zhao, a professor of computer science at the University of Chicago.

Okay, a company is a target.

How’s this work:

Fawkes converts an image — or “cloaks” it, in the researchers’ parlance — by subtly altering some of the features that facial recognition systems depend on when they construct a person’s face print.
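The cloaking idea can be illustrated with a toy. This is not the Fawkes code: the “feature extractor” below is a made-up linear function, the image is four numbers, and the per-pixel cap `eps` is a crude stand-in for Fawkes’ notion of a change too subtle to notice. Projected gradient descent nudges the image so its face print drifts toward someone else’s while no pixel moves by more than `eps`.

```python
def features(weights, pixels):
    """Hypothetical linear 'face print': one weighted sum per feature."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cloak(weights, pixels, target, eps=0.05, step=0.01, iters=200):
    """Projected gradient descent toward a target face print, |change| <= eps per pixel."""
    delta = [0.0] * len(pixels)
    for _ in range(iters):
        current = [p + d for p, d in zip(pixels, delta)]
        residual = [f - t for f, t in zip(features(weights, current), target)]
        # Gradient of ||W x - t||^2 with respect to x is 2 * W^T (W x - t).
        grad = [2 * sum(row[i] * r for row, r in zip(weights, residual))
                for i in range(len(pixels))]
        # Step downhill, then clip every pixel change back into [-eps, eps].
        delta = [max(-eps, min(eps, d - step * g)) for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(pixels, delta)]

W = [[1.0, 0.5, 0.0, -0.5], [0.0, 1.0, 1.0, 0.0]]  # made-up extractor
original = [0.2, 0.4, 0.6, 0.8]                    # made-up "photo"
target = features(W, [0.3, 0.3, 0.7, 0.7])         # someone else's face print
cloaked = cloak(W, original, target)
print(sq_dist(features(W, original), target), sq_dist(features(W, cloaked), target))
```

The small step size keeps each update below the point where this quadratic objective could overshoot, so the distance to the target shrinks monotonically.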

Several observations:

- In the event of a problem like the explosion in Lebanon, maybe facial recognition can identify some of those killed.

- Law enforcement may find that narrowing a pool of suspects to a smaller group enhances an investigative process.

- Identifying unknown individuals “could” add precision to Covid contact tracing.

- Applying the technology to differentiate “false” positives from “true” positives in some medical imaging activities may help with certain medical diagnoses.

My concern is that technical write ups are often little more than social polemics. Examining the upside and downside of an innovation is important. Converting a technical process into a quest to “kill” a company, a concept, or an application of technical processes is not helpful in DarkCyber’s view.

Stephen E Arnold, August 12, 2020

Twitter: Another Almost Adult Moment

August 7, 2020

Indexing is useful. Twitter seems to be recognizing this fact. “Twitter to Label State-Controlled News Accounts” reports:

The company will also label the accounts of government-linked media, as well as “key government officials” from China, France, Russia, the UK and US. Russia’s RT and China’s Xinhua News will both be affected by the change. Twitter said it was acting to provide people with more context about what they see on the social network.

This explicit index term, long overdue, may help some tweeters figure out where certain stories originate.

Twitter, a particularly corrosive social media system, has avoided adult actions. The firm’s security was characterized in a recent DarkCyber video as a clown car operation. No words were needed. The video showed a clown car.

Several questions from the DarkCyber team:

- When will Twitter verify user identities, thus eliminating sock puppet accounts? Developers of freeware manage this type of registration and verification process, not perfectly but certainly better than some other organizations’.

- When will Twitter recognize that a tiny percentage of its tweeters account for the majority of the messages and implement a Twitch-like system to generate revenue from these individuals? Pay-per-use can be implemented in many ways; so can begging for dollars. Either way, Twitter gets an identification point which may have other functions.

- When will Twitter innovate? The service is valuable because a user or sock puppet can automate content regardless of its accuracy. Twitter has been the same for a number of Internet years. Dogs do age.

Is Twitter, for whatever reason, stuck in the management mentality of a high school science club which attracts good students, just not the whiz kids who are starting companies and working for Google type outfits from their parents’ living room?

Stephen E Arnold, August 7, 2020