The Fixed Network Lawful Interception Business is Booming

September 11, 2024

It is not just bad actors who profit from an increase in cybercrime. Makers of software designed to catch them are cashing in, too. The Market Research Report 224 blog shares “Fixed Network Lawful Interception Market Region Insights.” Lawful interception is the process by which law enforcement agencies, after obtaining the proper warrants of course, surveil circuit and packet-mode communications. The report shares findings from a study by Data Bridge Market Research on this growing sector. Between 2021 and 2028, this market is expected to grow by nearly 20% annually and hit an estimated value of $5,340 million. We learn:

“Increase in cybercrimes in the era of digitalization is a crucial factor accelerating the market growth, also increase in number of criminal activities, significant increase in interception warrants, rising surge in volume of data traffic and security threats, rise in the popularity of social media communications, rising deployment of 5G networks in all developed and developing economies, increasing number of interception warrants and rising government of both emerging and developed nations are progressively adopting lawful interception for decrypting and monitoring digital and analog information, which in turn increases the product demand and rising virtualization of advanced data centers to enhance security in virtual networks enabling vendors to offer cloud-based interception solutions are the major factors among others boosting the fixed network lawful interception market.”
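For a sense of scale, the growth figures cited above (nearly 20% a year between 2021 and 2028, ending at $5,340 million) imply a starting market size. The sketch below is a back-of-envelope estimate only: it assumes exactly 20% compounding over seven annual periods, and the function name is hypothetical. The report's actual base-year figure and exact rate are not given in the write-up.

```python
def implied_base(final_value: float, annual_rate: float, years: int) -> float:
    """Work backward from a compounded end value to the implied starting value."""
    return final_value / (1 + annual_rate) ** years


# Assumptions: exactly 20% annual growth, seven compounding periods (2021 to 2028).
base_2021 = implied_base(final_value=5340.0, annual_rate=0.20, years=2028 - 2021)
print(f"Implied 2021 market size: about ${base_2021:,.0f} million")
```

Under those assumptions the market would have started near $1.5 billion. A slightly lower rate ("nearly" 20%) pushes the implied starting point higher.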

Furthermore, the pace of these developments will likely increase over the next few years. The write-up specifies key industry players, a list we found particularly useful:

“The major players covered in fixed network lawful interception market report are Utimaco GmbH, VOCAL TECHNOLOGIES, AQSACOM, Inc, Verint, BAE Systems., Cisco Systems, Telefonaktiebolaget LM Ericsson, Atos SE, SS8 Networks, Inc, Trovicor, Matison is a subsidiary of Sedam IT Ltd, Shoghi Communications Ltd, Comint Systems and Solutions Pvt Ltd – Corp Office, Signalogic, IPS S.p.A, ZephyrTel, EVE compliancy solutions and Squire Technologies Ltd among other domestic and global players.”

See the press release for notes on Data Bridge’s methodology. It promises 350 pages of information, complete with tables and charts, for those who purchase a license. Formed in 2014, Data Bridge is based in Haryana, India.

Cynthia Murrell, September 11, 2024

Thoughts about the Dark Web

August 8, 2024

This essay is the work of a dumb humanoid. No smart software required.

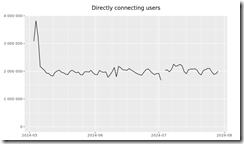

The Dark Web. Wow. YouTube offers a number of tell-all videos about the Dark Web. Articles explain the topics one can find on certain Dark Web fora. What’s forgotten is that the number of users of the Dark Web has been chugging along, neither gaining tens of millions of users nor losing tens of millions of users. Why? Here’s a traffic chart from the outfit that sort of governs The Onion Router:

Source: https://metrics.torproject.org/userstats-relay-country.html

The chart is not the snappiest item on the sprawling Torproject.org Web site, but the message seems to be that TOR has been bouncing around two million users this year. Go back in time and the number has increased, but not much. Online statistics, particularly those associated with obfuscation software, are mushy. Let’s toss in another couple million users to account for alternative obfuscation services. What happens? We are not in the tens of millions.

Our research suggests that the stability of TOR usage is due to several factors:

- The hard core bad actors comprise a small percentage of the TOR usage and probably do more outside of TOR than within it. In September 2024 I will be addressing this topic at a cyber fraud conference.

- The number of entities indexing the “Dark Web” remains relatively stable. Sure, some companies drop out of this data harvesting but the big outfits remain and their software looks a lot like a user, particularly with some of the wonky verification Dark Web sites use to block automated collection of data.

- Regular Internet users don’t pay much attention to TOR, including those with the one-click access browsers like Brave.

- Human investigators are busy looking and interacting, but the number of these professionals also remains relatively stable.

To sum up, most people know little about the Dark Web. When these individuals figure out how to access a Web site advertising something exciting like stolen credit cards or other illegal products and services, they are unaware of a simple fact: An investigator from some country may be operating like a bad actor to find a malefactor. By the way, the Dark Web is not as big as some cyber companies assert. The actual number of truly bad Dark Web sites is fewer than 100, based on what my researchers tell me.

A very “good” person approaches an individual who looks like a very tough dude. The very “good” person has a special job for the tough dude. Surprise! Thanks, MSFT Copilot. Good enough and you should know what certain professionals look like.

I read “Former Pediatrician Stephanie Russell Sentenced in Murder Plot.” The story is surprisingly not that unique. The reason I noted a female pediatrician’s involvement in the Dark Web is that she lives about three miles from my office. The story is that the good doctor visited the Dark Web and hired a hit man to terminate an individual. (Don’t doctors know how to terminate as part of their studies?)

The write up reports:

A Louisville judge sentenced former pediatrician Stephanie Russell to 12 years in prison Wednesday for attempting to hire a hitman to kill her ex-husband multiple times.

I love the somewhat illogical phrase “kill her ex-husband multiple times.”

Russell pleaded guilty April 22, 2024, to stalking her former spouse and trying to have him killed amid a protracted custody battle over their two children. By accepting responsibility and avoiding a trial, Russell would have expected a lighter prison sentence. However, she again tried to find a hitman, this time asking inmates to help with the search, prosecutors alleged in court documents asking for a heftier prison sentence.

One rumor circulating at the pub, a popular lunch spot near the doctor’s former office, is that she used the Dark Web and struck up an online conversation with one of the investigators monitoring such activity.

Net net: The Dark Web is indeed interesting.

Stephen E Arnold, August 8, 2024

A Modern Spy Novel: A License to Snoop

April 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“UK’s Investigatory Powers Bill to Become Law Despite Tech World Opposition” reports that the Investigatory Powers Amendment Bill, or IPB, is now law. In a nutshell, the law expands the scope of data collection by law enforcement and intelligence services. The Register, a UK online publication, asserts:

Before the latest amendments came into force, the IPA already allowed authorized parties to gather swathes of information on UK citizens and tap into telecoms activity – phone calls and SMS texts. The IPB’s amendments add to the Act’s existing powers and help authorities trawl through more data, which the government claims is a way to tackle “modern” threats to national security and the abuse of children.

Thanks, Copilot. A couple of omissions from my prompt, but your illustration is good enough.

One UK elected official said:

“Additional safeguards have been introduced – notably, in the most recent round of amendments, a ‘triple-lock’ authorization process for surveillance of parliamentarians – but ultimately, the key elements of the Bill are as they were in early versions – the final version of the Bill still extends the scope to collect and process bulk datasets that are publicly available, for example.”

Privacy advocates are concerned about expanding data collections’ scope. The Register points out that “big tech” feels as though it is being put on the hot seat. The article includes this statement:

Abigail Burke, platform power program manager at the Open Rights Group, previously told The Register, before the IPB was debated in parliament, that the proposals amounted to an “attack on technology.”

Several observations:

- The UK is a member in good standing of an intelligence sharing entity which includes Australia, Canada, New Zealand, and the US. These nation states watch one another’s activities and sometimes emulate certain policies and legal frameworks.

- The IPA may be one additional step on a path leading to a ban on end-to-end-encrypted messaging. Such a ban, if passed, would prove disruptive to a number of business functions. Bad actors will ignore such a ban and continue their effort to stay ahead of law enforcement using homomorphic encryption and other sophisticated techniques to keep certain content private.

- Opportunistic messaging firms like Telegram may incorporate technologies which effectively exploit modern virtual servers and other technology to deploy networks which are hidden and effectively less easily “seen” by existing monitoring technologies. Bad actors can implement new methods forcing LE and intelligence professionals to operate in reaction mode. IPA is unlikely to change this cat-and-mouse game.

- Each day brings news of new security issues with widely used software and operating systems. Banning encryption may have some interesting downstream and unanticipated effects.

Net net: I am not sure that modern threats will decrease under IPA. Even countries with the most sophisticated software, hardware, and humanware security systems can be blindsided. Gaffes in Israel have had devastating consequences that an IPA-type approach would remedy.

Stephen E Arnold, April 29, 2024

Lawyer, Former Government Official, and Podcaster to Head NSO Group

January 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The high-profile intelware and policeware vendor NSO Group has made clear that specialized software is a potent policing tool. NSO Group continues to market its products and services at low-profile trade shows like those sponsored by an obscure outfit in northern Virginia. Now the firm has found a new friend in a former US official. TechDirt reports, “Former DHS/NSA Official Stewart Baker Decides He Can Help NSO Group Turn a Profit.” Writer Tim Cushing tells us:

“This recent filing with the House of Representatives makes it official: Baker, along with his employer Steptoe and Johnson, will now be seeking to advance the interests of an Israeli company linked to abusive surveillance all over the world. In it, Stewart Baker is listed as the primary lobbyist. This is the same Stewart Baker who responded to the Commerce Department blacklist of NSO by saying it wouldn’t matter because authoritarians could always buy spyware from… say…. China.”

So, the reasoning goes, why not allow a Western company to fill that niche? This perspective apparently makes Baker just the fellow to help NSO Group buff up its reputation. Cushing predicts:

“The better Baker does clearing NSO’s tarnished name, the sooner it and its competitors can return to doing the things that got them in trouble in the first place. Once NSO is considered somewhat acceptable, it can go back to doing the things that made it the most money: i.e., hawking powerful phone exploits to human rights abusers. But this time, NSO has a former US government official in its back pocket. And not just any former government official but one who spent months telling US citizens who were horrified by the implications of the Snowden leaks that they were wrong for being alarmed about bulk surveillance.”

Perhaps the winning combination of a lawyer, former US government official, and podcaster in one sleek package will do the job for NSO Group? But there are now alternatives to the Pegasus solution. Some of these do not have the baggage carted around by the stealthy flying horse.

Perhaps there will be a podcast about NSO Group in the near future.

Cynthia Murrell, January 2, 2024

Facial Recognition: A Bit of Bias Perhaps?

November 24, 2023

This essay is the work of a dumb dinobaby. No smart software required.

It’s a running gag in the tech industry that AI algorithms and related advancements are “racist.” Motion sensors can’t recognize dark pigmented skin. Photo recognition software misidentifies black and other ethnicities as primates. AI-trained algorithms are also biased against ethnic minorities and women in the financial, business, and other industries. AI is “racist” because it’s trained on data sets heavy in white and male information.

Ars Technica shares another story about biased AI: “People Think White AI-Generated Faces Are More Real Than Actual Photos, Study Says.” The journal Psychological Science published a peer-reviewed study, “AI Hyperrealism: Why AI Faces Are Perceived As More Real Than Human Ones.” The study discovered that faces created with three-year-old AI technology were judged to be more real than real ones. Predominantly, AI-generated faces of white people were perceived as the most realistic.

The study surveyed 124 white adults who were shown a mixture of 100 AI-generated images and 100 real ones. They identified 66% of the AI images as human, and only 51% of the real faces were identified as real. Real and AI images of ethnic minorities with high amounts of melanin were judged real 51% of the time. The study also discovered that participants who made the most mistakes were also the most confident, a clear indicator of the Dunning-Kruger effect.

The researchers conducted a second study with 610 participants and learned:

“The analysis of participants’ responses suggested that factors like greater proportionality, familiarity, and less memorability led to the mistaken belief that AI faces were human. Basically, the researchers suggest that the attractiveness and "averageness" of AI-generated faces made them seem more real to the study participants, while the large variety of proportions in actual faces seemed unreal.

Interestingly, while humans struggled to differentiate between real and AI-generated faces, the researchers developed a machine-learning system capable of detecting the correct answer 94 percent of the time.”

The study could be swung in the typical “racist” direction that AI will perpetuate social biases. The answer is simple and worth the investment: create better data sets to train AI algorithms.

Whitney Grace, November 24, 2023

A Rare Moment of Constructive Cooperation from Tech Barons

November 23, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Platform-hopping is one way bad actors have been able to cover their tracks. Now several companies are teaming up to limit that avenue for one particularly odious group. TechNewsWorld reports, “Tech Coalition Launches Initiative to Crackdown on Nomadic Child Predators.” The initiative is named Lantern, and the Tech Coalition includes Discord, Google, Mega, Meta, Quora, Roblox, Snap, and Twitch. Such cooperation is essential to combat a common tactic for grooming and/or sextortion: predators engage victims on one platform then move the discussion to a more private forum. Reporter John P. Mello Jr. describes how Lantern works:

“Participating companies upload ‘signals’ to Lantern about activity that violates their policies against child sexual exploitation identified on their platform.

Signals can be information tied to policy-violating accounts like email addresses, usernames, CSAM hashes, or keywords used to groom as well as buy and sell CSAM. Signals are not definitive proof of abuse. They offer clues for further investigation and can be the crucial piece of the puzzle that enables a company to uncover a real-time threat to a child’s safety.

Once signals are uploaded to Lantern, participating companies can select them, run them against their platform, review any activity and content the signal surfaces against their respective platform policies and terms of service, and take action in line with their enforcement processes, such as removing an account and reporting criminal activity to the National Center for Missing and Exploited Children and appropriate law enforcement agency.”
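The upload-then-match flow described in the quote can be sketched in a few lines. This is an illustration only, not Lantern's actual design: the `Signal` and `SignalPool` names, the signal categories, and the use of SHA-256 as a stand-in for whatever hashing the participants really use are all assumptions. The point it shows is the one the quote stresses: a match produces a lead for human review, not proof.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    kind: str   # hypothetical categories: "email", "username", "content_hash"
    value: str


def make_signal(kind: str, value: str) -> Signal:
    # Normalize so trivial differences in case or spacing do not hide a match.
    return Signal(kind, value.strip().lower())


def content_hash(data: bytes) -> Signal:
    # SHA-256 is a stand-in; the hashing used in practice is not described in the article.
    return Signal("content_hash", hashlib.sha256(data).hexdigest())


class SignalPool:
    """Hypothetical shared pool that participating platforms upload signals to."""

    def __init__(self) -> None:
        self._signals: set[Signal] = set()

    def upload(self, signal: Signal) -> None:
        self._signals.add(signal)

    def review_queue(self, accounts: dict[str, list[Signal]]) -> list[tuple[str, Signal]]:
        # Return (account, signal) pairs for human review; a hit is a clue, not a verdict.
        return [
            (account_id, signal)
            for account_id, signals in accounts.items()
            for signal in signals
            if signal in self._signals
        ]


# Platform A uploads two signals tied to a policy-violating account.
pool = SignalPool()
pool.upload(make_signal("email", "Flagged@Example.com "))
pool.upload(content_hash(b"known-bad-file"))

# Platform B runs the shared signals against its own accounts.
platform_b_accounts = {
    "acct-1": [make_signal("email", "flagged@example.com")],
    "acct-2": [make_signal("username", "harmless_user")],
    "acct-3": [content_hash(b"known-bad-file")],
}
queue = pool.review_queue(platform_b_accounts)
for account_id, signal in queue:
    print(f"review {account_id}: matched {signal.kind}")
```

In this toy run, two of the three accounts land in the review queue. What happens next (account removal, a report to the National Center for Missing and Exploited Children) is each platform's own enforcement decision, as the quote notes.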

The visually oriented can find an infographic of this process in the write-up. We learn Lantern has been in development for two years. Why did it take so long to launch? Part of it was designing the program to be effective. Another part was to ensure it was managed responsibly: The project was subjected to a Human Rights Impact Assessment by the Business for Social Responsibility. Experts on child safety, digital rights, advocacy of marginalized communities, government, and law enforcement were also consulted. Finally, we’re told, measures were taken to ensure transparency and victims’ privacy.

In the past, companies hesitated to share such information lest they be considered culpable. However, some hope this initiative represents a perspective shift that will extend to other bad actors, like those who spread terrorist content. Perhaps. We shall see how much tech companies are willing to cooperate. They wouldn’t want to reveal too much to the competition just to help society, after all.

Cynthia Murrell, November 23, 2023

Predictive Analytics and Law Enforcement: Some Questions Arise

October 17, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-16.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

We wish we could prevent crime before it happens. With AI and predictive analytics it seems possible, but Wired shares that “Predictive Policing Software Terrible At Predicting Crimes.” Plainfield, NJ’s police department purchased Geolitica predictive software, and it was not a wise use of taxpayer money. The Markup, a nonprofit investigative organization that wants technology to serve the common good, reported Geolitica’s accuracy:

“We examined 23,631 predictions generated by Geolitica between February 25 and December 18, 2018, for the Plainfield Police Department (PD). Each prediction we analyzed from the company’s algorithm indicated that one type of crime was likely to occur in a location not patrolled by Plainfield PD. In the end, the success rate was less than half a percent. Fewer than 100 of the predictions lined up with a crime in the predicted category, that was also later reported to police.”

The Markup also analyzed predictions for robberies and aggravated assaults in Plainfield, and the success rate was 0.6%. Burglary predictions were worse at 0.1%.
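The “less than half a percent” figure follows directly from the numbers in the quote. A quick check, using 100 hits as an upper bound because The Markup says only “fewer than 100”; the function name is ours, not The Markup's:

```python
def hit_rate(hits: int, predictions: int) -> float:
    """Share of predictions that lined up with a reported crime."""
    return hits / predictions


# 23,631 predictions analyzed; "fewer than 100" matched, so 100 gives a ceiling.
ceiling = hit_rate(hits=100, predictions=23_631)
print(f"Success rate ceiling: {ceiling:.2%}")
```

Even at the ceiling, the rate stays under 0.5%, consistent with the reported result.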

The police weren’t really interested in using Geolitica either. They wanted to be accurate in predicting and reducing crime. The Plainfield, NJ department hardly used the software and discontinued the program. Geolitica charged $20,500 for a one-year subscription, then $15,500 for annual renewals. Geolitica had inconsistencies with information. Police found training and experience to be as effective as the predictions the software offered.

Geolitica will go out of business at the end of 2023. The law enforcement technology company SoundThinking hired Geolitica’s engineering team and will acquire some of their IP too. Police software companies are changing their products and services to manage police department data.

Crime data are important. Where crimes and victimization occur should be recorded and analyzed. Newark, New Jersey, used risk terrain modeling (RTM) to identify areas where aggravated assaults would occur. They used land data and found that vacant lots were large crime locations.

Predictive methods have value, but they also have application to specific use cases. Math is not the answer to some challenges.

Whitney Grace, October 17, 2023

Europol Focuses on Child Centric Crime

October 16, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-15.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Children are the most vulnerable and exploited population in the world. The Internet unfortunately aids bad actors by allowing them to distribute child sexual abuse material, aka CSAM, and avoid censors. Europol (the European Union’s law enforcement agency) wants to end CSAM by overriding Europeans’ privacy rights. TechDirt explores the idea in the article, “Europol Tells EU Commission: Hey, When It Comes To CSAM, Just Let Us Do Whatever We Want.”

Europol wants unfiltered access to an EU-proposed AI algorithm, programmed to scan online content for CSAM, and to its data. The police agency also wants to use the same AI to detect other crimes. This information came from a July 2022 high-level meeting that involved Europol Executive Director Catherine De Bolle and the European Commission’s Director-General for Migration and Home Affairs Monique Pariat. Europol pitched this idea when the EU believed it would mandate client-side scanning on service providers.

Privacy activists and EU member nations vetoed the idea, because it would allow anyone to eavesdrop on private conversations. They also found it violated privacy rights. Europol used the common moniker “for the children” or “save the children” to justify the proposal. Law enforcement, politicians, religious groups, and parents have spouted that rhetoric for years, and it makes more nuanced people appear to side with pedophiles.

“It shouldn’t work as well as it does, since it’s been a cliché for decades. But it still works. And it still works often enough that Europol not only demanded access to combat CSAM but to use this same access to search for criminal activity wholly unrelated to the sexual exploitation of children… Europol wants a police state supported by always-on surveillance of any and all content uploaded by internet service users. Stasi-on-digital-steroids. Considering there’s any number of EU members that harbor ill will towards certain residents of their country, granting an international coalition of cops unfiltered access to content would swiftly move past the initial CSAM justification to governments seeking out any content they don’t like and punishing those who dared to offend their elected betters.”

There’s also evidence that law enforcement officials and politicians are working in the public sector to enforce anti-privacy laws then leaving for the private sector. Once there, they work at companies that sell surveillance technology to governments. Is that a type of insider trading or nefarious influence?

Whitney Grace, October 16, 2023

Cognitive Blind Spot 4: Ads. What Is the Big Deal Already?

October 11, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

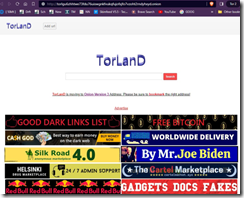

Last week, I presented a summary of Dark Web Trends 2023, a research update my team and I prepare each year. I showed a visual of the ads on a Dark Web search engine. Here’s an example of one of my illustrations:

The TorLanD service, when it is accessible via Tor, displays a search box and advertising. What is interesting about this service and a number of other Dark Web search engines is the ads. The search results are so-so, vastly inferior to those information retrieval solutions offered by intelware vendors.

Some of the ads appear on other Dark Web search systems as well; for example, Bobby and DarkSide, among others. The advertisements offer a range of interesting content. The TorLanD screenshot pitches carding, porn, drugs, gadgets (skimmers and software), illegal substances. I pointed out that the ads on TorLanD looked a lot like the ads on Bobby; for instance:

I want to point out that the Silk Road 4.0 and the Gadgets, Docs, Fakes ads are identical. Notice also that TorLanD advertises on Bobby. The Helsinki Drug Marketplace on the Bobby search system offers heroin.

Most of these ads are trade outs. The idea is that one Dark Web site will display an ad for another Dark Web site. There are often links to Dark Web advertising agencies as well. (For this short post, I won’t be listing these vendors, but if you are interested in this research, contact benkent2020 at yahoo dot com. One of my team will follow up and explain our for-fee research policy.)

The point of these two examples is to make clear that advertising has become normalized, even among bad actors. Furthermore, few are surprised that bad actors (or alleged bad actors) communicate, pat one another on the back, and support an ecosystem to buy and sell space on the increasingly small Dark Web. Please, note that advertising appears in public and private Telegram groups focused on the topics referenced in these Dark Web ads.

Can you believe the ads? Some people do. Users of the Clear Web and the Dark Web are conditioned to accept ads and to believe that these are true, valid, useful, and intended to make it easy to break the law and buy a controlled substance or CSAM. Some ads emphasize “trust.”

People trust ads. People believe ads. People expect ads. In fact, one can poke around and identify advertising and PR agencies touting the idea that people “trust” ads, particularly those with brand identity. How does one build brand? Give up? Advertising and weaponized information are two ways.

The cognitive bias that operates is that people embrace advertising. Look at a page of Google results. Which are ads, and which are ads but not identified as such? What happens when ads are indistinguishable from plausible messages? Some online companies offer stealth ads. On the Dark Web pages illustrating this essay are law enforcement agencies masquerading as bad actors. Can you identify one such ad? What about messages on Twitter which are designed to be difficult to spot as paid messages or weaponized content? For one take on Twitter technology, read “New Ads on X Can’t Be Blocked or Reported, and Aren’t Labeled as Advertisements.”

Let me highlight some of the functions of online ads like those on the Dark Web sites. I will ignore the Clear Web ads for the purposes of this essay:

- Click on the ad and receive malware

- Visit the ad and explore the illegal offer so that the site operator can obtain information about you

- Sell you a product and obtain the identifiers you provide, a delivery address (either physical or digital), or plant a beacon on your system to facilitate tracking

- Gather emails for phishing or other online initiatives

- Blackmail.

I want to highlight advertising as a vector of weaponization for three reasons: [a] People believe ads. I know it sounds silly, but ads work. People suspend disbelief when an ad on a service offers something that sounds too good to be true; [b] many people do not question the legitimacy of an ad or its message. Ads are good. Ads are everywhere; and [c] ads are essentially unregulated.

What happens when everything drifts toward advertising? The cognitive blind spot kicks in and one cannot separate the false from the real.

Public service note: Before you explore Dark Web ads or click links on social media services like Twitter, consider that these are vectors which can point to quite surprising outcomes. Intelligence agencies outside the US use Dark Web sites as a way to harvest useful information. Bad actors use ads to rip off unsuspecting people like the doctor who once lived two miles from my office when she ordered a Dark Web hitman to terminate an individual.

Ads are unregulated and full of surprises. But the cognitive blind spot for advertising guarantees that the technique will flourish and gain technical sophistication. Are those objective search results useful information or weaponized? Will the Dark Web vendor really sell you valid stolen credit cards? Will the US postal service deliver an unmarked envelope chock full of interesting chemicals?

Stephen E Arnold, October 11, 2023

Traveling to France? On a Watch List?

August 25, 2023

The capacity for surveillance has been lurking in our devices all along, of course. Now, reports Azerbaijan’s Azernews, “French Police Can Secretly Activate Phone Cameras, Microphones, and GPS to Spy on Citizens.” The authority to remotely activate devices was part of a larger justice reform bill recently passed. Officials insist, though, this authority will not be used willy-nilly:

“A judge must approve the use of the powers, and the recently amended bill forbids use against journalists, lawyers, and other ‘sensitive professions.’ The measure is also meant to limit use to serious cases, and only for a maximum of six months. Geolocation would be limited to crimes that are punishable by at least five years in prison.”

Surely, law enforcement would never push those limits. Apparently the Orwellian comparisons are evident even to officials, since Justice Minister Éric Dupond-Moretti preemptively batted them away. Nevertheless, we learn:

“French digital rights advocacy group, La Quadrature du Net, has raised serious concerns over infringements of fundamental liberties, and has argued that the bill violates the ‘right to security, right to a private life and to private correspondence’ and ‘the right to come and go freely.’ … The legislation comes as concerns about government device surveillance are growing. There’s been a backlash against NSO Group, whose Pegasus spyware has allegedly been misused to spy on dissidents, activists, and even politicians. The French bill is more focused, but civil liberties advocates are still alarmed at the potential for abuse. The digital rights group La Quadrature du Net has pointed out the potential for abuse, noting that remote access may depend on security vulnerabilities. Police would be exploiting security holes instead of telling manufacturers how to patch those holes, La Quadrature says.”

Smartphones, laptops, vehicles, and any other connected devices are all fair game under the new law. But only if one has filed the proper paperwork, we are sure. Nevertheless, progress.

Cynthia Murrell, August 25, 2023