Is This Correct? Google Sues to Protect Copyright

December 30, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

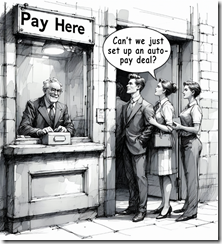

This headline stopped me in my tracks: “Google Lawsuit Says Data Scraping Company Uses Fake Searches to Steal Web Content.” The way my dinobaby brain works, I expect to see a publisher putting the world’s largest online advertising outfit in its crosshairs. But I trust Thomson Reuters because they tell me they are the trust outfit.

Google apparently cannot stop a third party from scraping content from its Web site. Is this SEO outfit operating at a level of sophistication beyond the ken of Mandiant, Gemini, and the third-party cyber tools the online giant has? Must be I guess. Thanks, Venice.ai. Good enough.

The alleged copyright violator in this case seems to be one of those estimable, highly professional firms engaged in search engine optimization. Those are the folks Google once saw as helpful to the sale of advertising. After all, if a Web site is not in a Google search result, that Web site does not exist. Therefore, to get traffic or clicks, the Web site “owner” can buy Google ads and, of course, make the Web site Google compliant. Maybe the estimable SEO professional will continue to fiddle and doctor words in a tireless quest to eliminate the notion of relevance in Google search results.

Now an SEO outfit is on the wrong side of what Google sees as the law. The write up says:

Google on Friday [December 19, 2025] sued a Texas company that “scrapes” data from online search results, alleging it uses hundreds of millions of fake Google search requests to access copyrighted material and “take it for free at an astonishing scale.” The lawsuit against SerpApi, filed in federal court in California, said the company bypassed Google’s data protections to steal the content and sell it to third parties.

To be honest, the phrase “astonishing scale” struck me as somewhat amusing. Google itself operates on “astonishing scale.” But what is good for the goose is obviously not good for the gander.

I asked You.com to provide some examples of people suing Google for alleged copyright violations. The AI spit out a laundry list. Here are four I sort of remembered:

- News Outlets & Authors v. Google (AI Training Copyright Cases)

- Google Users v. Google LLC (Privacy/Data Class Action with Copyright Claims)

- Advertisers v. Google LLC (Advertising Content Class Action)

- Oracle America, Inc. v. Google LLC

My thought is that with some experience in copyright litigation, Google is probably confident that the SEO outfit broke the law. I wanted to word it “broke the law which suits Google” but I am not sure that is clear.

Okay, which company will “win”? An SEO firm with resources slightly less robust than Google’s, or Google?

Place your bet on one of the online gambling sites advertising everywhere at this time. Oh, Google permits online gambling ads in areas allowing gambling and with appropriate certifications, licenses, and compliance functions.

I am not sure what to make of this because Google’s ability to filter, its smart software, and its security procedures obviously are either insufficient, don’t work, or are full of exploitable gaps.

Stephen E Arnold, December 30, 2025

Un-Aliving Violates TOS and Some Linguistic Boundaries

December 18, 2025

Ah, lawyers.

Depression is a dark emotional state and sometimes makes people take their lives. Before people unalive themselves, they usually investigate the act and/or reach out to trusted sources. These days the “trusted sources” are the many AI chatbots that populate the Internet. Ars Technica shares the story about how one teenager committed suicide after using a chatbot: “OpenAI Says Dead Teen Violated TOS When He Used ChatGPT To Plan Suicide.”

OpenAI is facing a total of five lawsuits about wrongful deaths associated with ChatGPT. The first lawsuit came to court, and OpenAI defended itself by claiming that the teen in question, Adam Raine, violated the terms of service because they prohibit self-harm and suicide. While pursuing the world’s “most engaging chatbot,” OpenAI relaxed its safety measures for ChatGPT, which became Raine’s suicide coach.

OpenAI’s lawyers argued that Raine’s parents selected the most damaging chat logs. They also claim that the logs show that Raine had had suicidal ideations since age eleven and that his medication increased his un-aliving desires.

Along with the usual allegations about shifting the blame onto the parents and others, OpenAI says that people use the chatbot at their own risk. It’s a way to avoid any accountability.

“To overcome the Raine case, OpenAI is leaning on its usage policies, emphasizing that Raine should never have been allowed to use ChatGPT without parental consent and shifting the blame onto Raine and his loved ones. ‘ChatGPT users acknowledge their use of ChatGPT is “at your sole risk and you will not rely on output as a sole source of truth or factual information,”’ the filing said, and users also ‘must agree to “protect people” and “cannot use [the] services for,” among other things, “suicide, self-harm,” sexual violence, terrorism or violence.’”

OpenAI employees were also alarmed by the amount of “liberties” used to make the chatbot more engaging.

How far will OpenAI go with ChatGPT to make it intuitive, human-like, and intelligent? Raine already had underlying conditions that caused his death, but ChatGPT did exacerbate them. Remember the terms of service.

Whitney Grace, December 18, 2025

Ka-Ching: The EU Cash Register Tolls for the Google

December 16, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Thomson Reuters, the trust outfit because the company says it is, published another ka-ching story titled “Exclusive: Google Faces Fines Over Google Play if It Doesn’t Make More Concessions, Sources Say.” The story reports:

Alphabet’s Google is set to be hit with a potentially large EU fine early next year if it does not do more to ensure that its app store complies with EU rules aimed at ensuring fair access and competition, people with direct knowledge of the matter said.

An elected EU official introduces the new and permanent member of the parliament. Thanks, Venice.ai. Not exactly what I specified, but saving money on compute cycles is the name of the game today. Good enough.

I can hear the “Sorry. We’re really, really sorry” statement now. I can even anticipate the sequence of events; hence and herewith:

- Google says, “We believe we have complied.”

- The EU says, “Pay up.”

- Google says, “Let’s go to trial.”

- The EU says, “Fine with us.”

- The Google says, “We are innocent and have complied.”

- The EU says, “You are guilty and owe $X millions of dollars.” (Note: The EU generates more revenue by fining US big tech companies than it does from certain tax streams, I have heard.)

- The Google says, “Let’s negotiate.”

- The EU says, “Fine with us.”

- Google negotiates and says, “We have a deal plus we did nothing wrong.”

- The EU says, “Pay X millions less the Y millions we agree to deduct based on our fruitful negotiations.”

The actual factual article says:

DMA fines can be as much as 10% of a company’s global annual revenue. The Commission has also charged Google with favoring its associated search services in Google Search, and is investigating its use of online content for its artificial intelligence tools and services and its spam policy.

My interpretation of this snippet is that the EU has on deck another case of Google’s alleged law breaking. This is predictable, and the approach does generate revenue from companies with lots of cash.

Stephen E Arnold, December 16, 2025

Do Not Mess with the Mouse, Google

December 15, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

“Google Removes AI Videos of Disney Characters After Cease and Desist Letter” made me visualize an image of Godzilla (which I sometimes misspell as Googzilla) frightened of a mouse; specifically, a quite angry Mickey. Why?

A mouse causes a fearsome creature to jump on the kitchen chair. Who knew a mouse could roar? Who knew the green beast would listen? Thanks, Qwen. Your mouse does not look like one of Disney’s characters. Good for you.

The write up says:

Google has removed dozens of AI-generated videos that depicted Disney-owned characters after receiving a cease and desist letter from the studio on Wednesday. Disney flagged the YouTube links to the videos in its letter, and demanded that Google remove them immediately.

What adds an interesting twist to this otherwise ho-hum story about copyright viewed as an old-fashioned concept is that Walt Disney invested in OpenAI and worked out a deal for some OpenAI customers to output Disney-okayed images. (How this will work out as prompt wizards try to put Minnie, Snow White, and probably most of the seven dwarves in compromising situations, I don’t know.) (If I were 15 years old, I would probably experiment to find a way to generate an image involving Prince Charming and the Lion King in a bus station facility violating one another and the law. But now? Nah, I don’t care what Disney, ChatGPT users, and AI do. Have at it.)

The write up says that Google said:

“We have a longstanding and mutually beneficial relationship with Disney, and will continue to engage with them,” the company said. “More generally, we use public data from the open web to build our AI and have built additional innovative copyright controls like Google-extended and Content ID for YouTube, which give sites and copyright holders control over their content.”

Yeah, how did that work out when YouTube TV subscribers lost access to some Disney content? Then, Google asked users who paid for content and did not get it to figure out how to sign up to get the refund. Yep, beneficial.

Something caused the Google to jump on a kitchen chair when the mouse said, “See you in court. Bring your checkbook.”

I thought Google was too big to obey any entity other than its own mental confections. I was wrong again. When will the EU find a mouse-type tactic?

Stephen E Arnold, December 15, 2025

Meta: Flying Its Flag for Moving Fast and Breaking Things

December 3, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy that I used smart software. I am a grandpa but not a Grandma Moses.

Meta, a sporty outfit, is the subject of an interesting story in “Press Gazette,” an online publication. The article “News Publishers File Criminal Complaint against Mark Zuckerberg Over Scam Ads” asserts:

A group of news publishers have filed a police complaint against Meta CEO Mark Zuckerberg over scam Facebook ads which steal the identities of journalists. Such promotions have been widespread on the Meta platform and include adverts which purport to be authored by trusted names in the media.

Thanks, MidJourney. Good enough, the gold standard for art today.

I can anticipate the outputs from some Meta adherents; for example, “We are really, really sorry.” or “We have specific rules against fraudulent behavior and we will take action to address this allegation.” Or, “Please, contact our legal representative in Sweden.”

The write up does not speculate as I just did in the preceding paragraph. The article takes a different approach, reporting:

According to Utgivarna: “These ads exploit media companies and journalists, cause both financial and psychological harm to innocent people, while Meta earns large sums by publishing the fraudulent content.” According to internal company documents, reported by Reuters, Meta earns around $16bn per year from fraudulent advertising. Press Gazette has repeatedly highlighted the use of well-known UK and US journalists to promote scam investment groups on Facebook. These include so-called pig-butchering schemes, whereby scammers win the trust of victims over weeks or months before persuading them to hand over money. [Emphasis added by Beyond Search]

On November 22, 2025, Time Magazine ran this allegedly accurate story “Court Filings Allege Meta Downplayed Risks to Children and Misled the Public.” In that article, the estimable social media company found itself in the news. That write up states:

Sex trafficking on Meta platforms was both difficult to report and widely tolerated, according to a court filing unsealed Friday. In a plaintiffs’ brief filed as part of a major lawsuit against four social media companies, Instagram’s former head of safety and well-being Vaishnavi Jayakumar testified that when she joined Meta in 2020 she was shocked to learn that the company had a “17x” strike policy for accounts that reportedly engaged in the “trafficking of humans for sex.”

I find it interesting that Meta is referenced in legal issues involving two particularly troublesome problems in many countries around the world. The one-two punch is sex trafficking and pig butchering. I, probably incorrectly, interpret these two allegations as kiddie crime and theft. But I am a dinobaby, and I am not savvy to the ways of the BAIT (big AI tech)-type companies. Getting labeled as a party to sex trafficking and pig butchering is quite interesting to me. Happy holidays to Meta’s PR and legal professionals. You may be busy and 100 percent billable over the holidays and into the new year.

Several observations may be warranted:

- There are some frisky BAIT outfits in Silicon Valley. Meta may well be competing for the title as the Most Frisky Firm (MFF). I wonder what the prize is?

- Meta was annoyed with a “tell all” book written by a former employee. Meta’s pushback seemed a bit of a tell to me. Perhaps some of the information hit too close to the leadership of Meta? Now we have sex and fraud allegations. So…

- How will Facebook, Instagram, and WhatsApp innovate in ad sales once Meta’s AI technology is fully deployed? Will AI, for example, block ad sales that are questionable? AI does make errors, which might be a useful angle for Meta going forward.

Net net: Perhaps some journalist with experience in online crime will take a closer look at Meta. I smell smoke. I am curious about the fire.

Stephen E Arnold, December 3, 2025

Telegram, Did You Know about the Kiddie Pix Pyramid Scheme?

November 25, 2025

![green dinosaur](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-5.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

The Independent, a newspaper in the UK, published “Leader of South Korea’s Biggest Telegram Sex Abuse Ring Gets Life Sentence.” The subtitle is a snappy one: “Seoul Court Says Kim Nok Wan Committed Crimes of Extreme Brutality.” Note: I will refer to this convicted person as Mr. Wan. The reason is that he will spend time in solitary confinement. In my experience, individuals involved in kiddie crimes are at the bottom of the totem pole among convicted people. If the prison director wants to keep him alive, he will be kept away from the general population. Even though most South Koreans are polite, it is highly likely that he will face a less than friendly greeting when he visits the TV room or exercise area. Therefore, my designation of Mr. Wan reflects the pallor his skin will evidence.

Now to the story:

The main idea is that Mr. Wan signed up for Telegram. He relied on Telegram’s Group and Channel function. He organized a social community dubbed the Vigilantes, a word unlikely to trigger kiddie pix filters. Then he “coerced victims, nearly 150 of them minors, into producing explicit material through blackmail and then distribute the content in online chat rooms.”

Telegram’s leader sets an example for others who want to break rules and be worshiped. Thanks, Venice.ai. Too bad you ignored my request for no facial hair. Good enough, the standard for excellence today I believe.

Mr. Wan’s innovation was to set up what the Independent called “a pyramid hierarchy.” Think of a Herbalife- or OneCoin-type operation. He incorporated an interesting twist. According to the Independent:

He also sent a video of a victim to their father through an accomplice and threatened to release it at their workplace.

Let’s shift from the clever Mr. Wan to Telegram and its public and private Groups and Channels. The French arrested Pavel Durov in August 2024. The French judiciary identified a dozen crimes he allegedly committed. He awaits trial for these alleged crimes. Based on our monitoring of Telegram, since that arrest the service has more aggressively blocked users and Groups for violating Telegram’s rules and regulations, such as they are. However, Mr. Wan appears to have slipped through despite Telegram’s filtering methods.

Several observations:

- Will Mr. Durov implement content moderation procedures to block, prevent, and remove content like Mr. Wan’s?

- Will South Korea take a firm stance toward Telegram’s use in the country?

- Will Mr. Durov cave in to Iran’s demands so that Telegram is once again available in that country?

- Did Telegram know about Mr. Wan’s activities on the estimable Telegram platform?

Mr. Wan exploited Telegram. Perhaps more forceful actions should be taken by other countries against services which provide a greenhouse for certain types of online activity to flourish? Mr. Durov is a tech bro, and he has been pictured carrying a real (not metaphorical) goat to suggest that he is the greatest of all time.

That perception appears to be at odds with the risk his platform poses to children in my opinion.

Stephen E Arnold, November 25, 2025

Tim Apple, Granny Scarfs, and Snooping

November 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I spotted a write up in a source I usually ignore. I don’t know if the write up is 100 percent on the money. Let’s assume for the purpose of my dinobaby persona that it indeed is. The write up is “Apple to Pay $95 Million Settle Suit Accusing Siri Of Snoopy Eavesdropping.” Like Apple’s incessant pop-ups about my not logging into FaceTime, iMessage, and iCloud, Siri being in snoop mode is not surprising to me. Tim Apple, it seems, is winding down. The pace of innovation, in my opinion, is tortoise-like. I have nothing against turtle-like creatures, but a granny scarf for an iPhone? That’s innovation, almost as cutting edge as the candy-colored orange iPhone. Stunning indeed.

Is Frederick the Great wearing an Apple Granny Scarf? Thanks, Venice.ai. Good enough.

What does the write up say about this $95 million sad smile?

Apple has agreed to pay $95 million to settle a lawsuit accusing the privacy-minded company of deploying its virtual assistant Siri to eavesdrop on people using its iPhone and other trendy devices. The proposed settlement filed Tuesday in an Oakland, California, federal court would resolve a 5-year-old lawsuit revolving around allegations that Apple surreptitiously activated Siri to record conversations through iPhones and other devices equipped with the virtual assistant for more than a decade.

Apple has managed to work the legal process for five years. Good work, legal eagles. Billable hours and legal moves generate income if my understanding is correct. Also, the notion of “surreptitiously” fascinates me. Why bother with the crazy screen nagging? Just activate what you want and remove the users’ options to disable the function. If you want to be surreptitious, the basic concept as I understand it is to operate so others don’t know what you are doing. Good try, but you failed to implement appropriate secretive operational methods. Better luck next time, or just enable what you want and prevent users from turning off the data vacuum cleaner.

The write up notes:

Apple isn’t acknowledging any wrongdoing in the settlement, which still must be approved by U.S. District Judge Jeffrey White. Lawyers in the case have proposed scheduling a Feb. 14 court hearing in Oakland to review the terms.

I interpreted this passage to mean that the Judge has to do something. I assume that lawyers will do something. Whoever brought the litigation will do something. It strikes me that Apple will not be writing a check any time soon, nor will the fine change how Tim Apple has set up that outstanding Apple entity to harvest money, data, and good vibes.

I have several questions:

- Will Apple offer a complimentary Granny Scarf to each of its attorneys working this case?

- Will Apple’s methods of harvesting data be revealed in a white paper written by either [a] Apple, [b] an unhappy Apple employee, or [c] a researcher laboring in the vineyards of Stanford University or San Jose State?

- Will regulatory authorities and the US judicial folks take steps to curtail the “we do what we want” approach to privacy and security?

I have answers for each of these questions. Here we go:

- No. Granny Scarfs are sold out

- No. No one wants to be hassled endlessly by Apple’s legions of legal eagles

- No. As the recent Meta decision about WhatsApp makes clear, green light, tech bros. Move fast, break things. Just do it.

Stephen E Arnold, November 24, 2025

Creative Types: Sweating AI Bullets

October 30, 2025

Artists and authors are in a tizzy (and rightly so) because AI is stealing their content. AI algorithms will potentially also put them out of jobs, but the latest data from Nieman Lab explains that people are using chatbots more for seeking information than for creating content: “People Are Using ChatGPT Twice As Much As They Were Last Year. They’re Still Just As Skeptical Of AI In News.”

Use of AI chatbots has doubled compared with the previous year. Chatbots are being used for tasks formerly reserved for search engines and news outlets. There is still ambivalence about the information they provide.

Here are stats about information consumption trends:

“For publishers worried about declining referral traffic, our findings paint a worrying picture, in line with other recent findings in industry and academic research. Among those who say they have seen AI answers for their searches, only a third say they “always or often” click through to the source links, while 28% say they “rarely or never” do. This suggests a significant portion of user journeys may now end on the search results page.

Contrary to some vocal criticisms of these summaries, a good chunk of population do seem to find them trustworthy. In the U.S., 49% of those who have seen them express trust in them, although it is worth pointing out that this trust is often conditional.”

When it comes to trust habits, people rely on AI for low-stakes, “first pass” information or when a “good enough” answer will do, because AI is trained on large amounts of data. When the stakes are higher, people do further research. There is a “comfort gap” between news produced mainly by AI and news produced with human oversight. Very few people implicitly trust AI. People still prefer humans curating and writing the news over a machine. They also don’t mind AI being used for assisting tasks such as editing or translation, but a human touch is still needed on the final product.

Humans are still needed, as is old-fashioned information gathering. The process remains the same; only the tools have changed.

Whitney Grace, October 30, 2025

Google Needs Help from a Higher Power

October 17, 2025

This essay is the work of a dumb dinobaby. No smart software required.

In my opinion, there should be one digital online service. This means one search system, one place to get apps, one place to obtain real time “real” news, and one place to buy and sell advertising. Wouldn’t that make life much easier for the company that owned the “one place”? If the information in “US Supreme Court Allows Order Forcing Google to Make App Store Reforms” is accurate, Google’s dream of becoming that “one place” has been interrupted.

The write up from a trusted source reports:

The [justices] declined on Monday [October 6, 2025] to halt key parts of a judge’s order requiring Alphabet’s Google to make major changes to its app store Play, as the company prepares to appeal a decision in a lawsuit brought by “Fortnite” maker Epic Games. The justices turned down Google’s request to temporarily freeze parts of the injunction won by Epic in its lawsuit accusing the tech giant of monopolizing how consumers access apps on Android devices and pay for transactions within apps.

Imagine the nerve of this outfit. These highly trained, respected legal professionals did not agree with Google’s rock-solid, diamond-hard arguments. Imagine a maker of electronic games screwing up one of the modules in the Google money and data machine. The nerve.

Thanks, MidJourney, good enough.

The write up adds:

Google in its Supreme Court filing said the changes would have enormous consequences for more than 100 million U.S. Android users and 500,000 developers. Google said it plans to file a full appeal to the Supreme Court by October 27, which could allow the justices to take up the case during their nine-month term that began on Monday.

The fact that the government is shut down will not halt, impair, derail, or otherwise inhibit Google’s quest for the justice it deserves. If the case can be extended, it is possible the government legal eagles will seek new opportunities in commercial enterprises or just resign due to the intellectual demands of their jobs.

The news story points out:

Google faces other lawsuits from government, consumer and commercial plaintiffs challenging its search and advertising business practices.

It is difficult to believe that a firm with such a rock solid approach to business can find itself swatting knowledge gnats. Onward to the “one service.” Is that on a Google T-shirt yet?

Stephen E Arnold, October 17, 2025

The Ka-Ching Game: The EU Rings the Big Tech Cash Register Tactic

October 14, 2025

This essay is the work of a dumb dinobaby. No smart software required.

The unusually tinted Financial Times published another “they will pay up and change, really” write up. The article is “Meta and Apple Close to Settling EU Cases.” [Note: You have to pay to read the FT’s orange write up.] The main idea is that these US big technology outfits are cutting deals. The objective is to show that these two firms are interested in making friends with European Commission professionals. The combination of nice talk and multi-million euro payments should do the trick. That’s the hope.

Thanks, Venice.ai. Good enough.

The cute penalty method the EU crafted involved daily financial penalties for assorted alleged business practices. The penalties had an escalator feature. If the US big tech outfits did not comply or pretend to comply, then the EU could send an invoice for up to five percent of the firm’s gross revenues. Could the EU collect? Well, that’s another issue. If Apple leaves the EU, the elected officials would have to use an Android mobile. If Meta departed, the elected officials would have to listen to their children’s complaints about their ruined social life. I think some grandmothers would be honked off if the flow of grandchildren pictures were interrupted. (Who needs this? Take the money, Christina.)

Several observations:

- The EU will take money; the EU will cook up additional rules to make the Wild West outfits come to town but mostly behave

- The US big tech companies will write a check, issue smarmy statements, and do exactly what they want to do. Decades of regulatory inefficacy create certain opportunities. Some US outfits spot those and figure out how to benefit from lack of action or ineptitude

- The efforts to curtail the US big tech companies have historically been a rinse-and-repeat exercise. That won’t change.

The problem for the EU with regard to the US is different from the other challenges it faces. In my opinion, the EU, like other countries, is:

- Unprepared for the new services in development by US firms. I address these in a series of lectures I am doing for some government types in Colorado. Attendance at the talks is restricted, so I can’t provide any details about these five new services hurtling toward the online markets in the US and elsewhere

- Unable to break its cycle of clever laws, US company behavior, and accepting money. More is needed. A good example of how one country addressed a problem online took place in France. That was a positive, decisive action and will interrupt the flow of cash from fines. Perhaps more EU countries should consider this French approach?

- The Big Tech outfits are not constrained by geographic borders. In case you have not caught up with some of the ideas of Silicon Valley, may I suggest you read the enervating and somewhat weird writings of a fellow named René Girard?

Net net: Yep, a deal. No big surprise. Will it work? Nope.

Stephen E Arnold, October 15, 2025