Another Small Victory for OpenAI Against Authors

March 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

For those following the fight between human content creators and AI firms, score one for the algorithm engineers. TorrentFreak reports, “Court Dismisses Authors’ Copyright Infringement Claims Against OpenAI.” At issue is generative AI’s practice of feeding on humans’ work, without compensation, in order to mimic it. Multiple suits have been filed by record labels, writers, and visual artists. Reporter Ernesto Van der Sar writes:

“Several of the lawsuits filed by book authors include a piracy component. The cases allege that tech companies, including Meta and OpenAI, used the controversial Books3 dataset to train their models. The Books3 dataset was created by AI researcher Shawn Presser in 2020, who scraped the library of ‘pirate’ site Bibliotik. The general vision was that the plaintext collection of more than 195,000 books, which is nearly 37GB in size, could help AI enthusiasts build better models. The vision wasn’t wrong; large text archives are great training material for Large Language Models, but many authors disapprove of their works being used in this manner, without permission or compensation.”

A large group of rights holders have a football team. Those big folks are chasing the small but feisty opponent down the field. Which team will score? Thanks, MSFT Copilot. Keep up the good enough work.

Is that so unreasonable? Maybe not, but existing copyright law did not foresee this situation. We learn:

“After reviewing input from both sides, California District Judge Araceli Martínez-Olguín ruled on the matter. In her order, she largely sides with OpenAI. The vicarious copyright infringement claim fails because the court doesn’t agree that all output produced by OpenAI’s models can be seen as a derivative work. To survive, the infringement claim has to be more concrete.”

The plaintiffs are not out of moves, however. They can still file an amended complaint. But unless updated legislation is passed in the meantime, they may just be rebuffed again. So all they need is for Congress to act quickly to protect artists from tech firms. Any day now.

Cynthia Murrell, March 12, 2024

NSO Group: Pegasus Code Wings Its Way to Meta and Mr. Zuckerberg

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

NSO Group’s senior managers and legal eagles will have an opportunity to become familiar with an okay Brazilian restaurant and a waffle shop. That lovable leader of Facebook, Instagram, Threads, and WhatsApp may have put a stick in the now-ageing digital bicycle doing business as NSO Group. The company’s mark is Pegasus, which is a flying horse. Pegasus’s dad was Poseidon, and his mom was the knockout Gorgon Medusa, who did some innovative hair treatments. The mythical Pegasus helped out other gods until Zeus stepped in and acted with extreme prejudice. Quite a myth.

Poseidon decides to kill the mythical Pegasus, not for its software, but for its getting out of bounds. Thanks, MSFT Copilot. Close enough.

Life imitates myth. “Court Orders Maker of Pegasus Spyware to Hand Over Code to WhatsApp” reports that the hand over decision:

is a major legal victory for WhatsApp, the Meta-owned communication app which has been embroiled in a lawsuit against NSO since 2019, when it alleged that the Israeli company’s spyware had been used against 1,400 WhatsApp users over a two-week period. NSO’s Pegasus code, and code for other surveillance products it sells, is seen as a closely and highly sought state secret. NSO is closely regulated by the Israeli ministry of defense, which must review and approve the sale of all licenses to foreign governments.

NSO Group hired former DHS and NSA official Stewart Baker to fix up NSO Group’s gyro compass. Mr. Baker is a podcaster and is affiliated with the law firm Steptoe and Johnson. For more color about Mr. Baker, please scan “Former DHS/NSA Official Stewart Baker Decides He Can Help NSO Group Turn A Profit.”

A decade ago, Israel’s senior officials might have been able to prevent a social media company from getting a copy of the Pegasus source code. Not anymore. Israel’s home-grown intelware technology simply did not thwart, prevent, or warn about the Hamas attack in the autumn of 2023. If NSO Group were battling in court with Harris Corp. or Textron, I would not worry. Mr. Zuckerberg’s companies are not directly involved with national security technology. From what I have heard at conferences, Mr. Zuckerberg’s commercial enterprises are responsive to law enforcement requests when a bad actor uses Facebook for an allegedly illegal activity. But Mr. Zuckerberg’s managers are really busy with higher priority tasks. Some folks engaged in investigations of serious crimes must be patient. Presumably the investigators can pass their time scrolling through #Shorts. If the Guardian’s article is accurate, now those Facebook employees can learn how Pegasus works. Will any of those learnings stick? One hopes not.

Several observations:

- Companies which make specialized software guard their systems and methods carefully. Well, that used to be true.

- The reorganization of NSO Group has not lowered the firm’s public relations profile. NSO Group can make headlines, which may not be desirable for those engaged in national security.

- Disclosure of the specific Pegasus systems and methods will get a warm, enthusiastic reception from those who exchange ideas for malware and related tools on private Telegram channels, Dark Web discussion groups, or via one of the “stealth” communication services which pop up like mushrooms after rain in rural Kentucky.

Will the software Pegasus be terminated? I remain concerned that source code revealing how to perform certain tasks may lead to downstream, unintended consequences. Specialized software companies try to operate with maximum security. Now Pegasus may be flying away unless another legal action prevents this.

Where is Zeus when one needs him?

Stephen E Arnold, March 7, 2024

A Xoogler Explains AI, News, Inevitability, and Real Business Life

February 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an essay providing a tiny bit of evidence that one can take the Googler out of the Google, but that Xoogler still retains some Googley DNA. The item appeared in the Bezos bulldozer’s estimable publication with the title “The Real Wolf Menacing the News Business? AI.” Absolutely. Obviously. Who does not understand that?

A high-technology sophist explains the facts of life to a group of listeners who are skeptical about artificial intelligence. The illustration was generated after three tries by Google’s own smart software. I love the miniature horse and the less-than-flattering representation of a sales professional. That individual looks like one who would be more comfortable eating the listeners than convincing them about AI’s value.

The essay contains a number of interesting points. I want to highlight three and then, as I quite enjoy doing, I will offer some observations.

The author is a Xoogler who served from 2017 to 2023 as the senior director of news ecosystem products. I quite like the idea of a “news ecosystem.” But ecosystems, as some who follow the impact of man on environments know, can be destroyed or pushed to the edge of catastrophe. In the aftermath of devastation coming from indifferent decision makers, greed-fueled entrepreneurs, or rhinoceros poachers, landscapes are often transformed.

First, the essay writer argues:

The news publishing industry has always reviled new technology, whether it was radio or television, the internet or, now, generative artificial intelligence.

I love the word “revile.” It suggests that ignorant individuals are unable to grasp the value of certain technologies. I also like the very clever use of the word “always.” Categorical affirmatives make the world of zeros and ones so delightfully absolute. We’re off to a good start, I think.

Second, we have a remarkable argument which invokes another zero and one type of thinking. Consider this passage:

The publishers’ complaints were premised on the idea that web platforms such as Google and Facebook were stealing from them by posting — or even allowing publishers to post — headlines and blurbs linking to their stories. This was always a silly complaint because of a universal truism of the internet: Everybody wants traffic!

I love those universal truisms. I think some at Google honestly believe that their insights, perceptions, and beliefs are the One True Path Forward. Confidence is good, but the implication that a universal truism exists strikes me as information about a psychological and intellectual aberration. Consider this truism offered by my uneducated great grandmother:

Always get a second opinion.

My great grandmother used the logically troublesome word “always.” The idea seems reasonable, but the action may not be possible. Does Google get second opinions when it decides to kill one of its services, modify algorithms in its ad brokering system, or reorganize its contentious smart software units? “Always” opens the door to many issues.

Publishers (I assume “all” publishers) want traffic. May I demonstrate the frailty of the Xoogler’s argument? I publish a blog called Beyond Search. I have done this since 2008. I do not care if I get traffic or not. My goal was and remains to present commentary about the antics of high-technology companies and related subjects. Why do I do this? First, I want to make sure that my views about such topics as Google search exist. Second, I have set up my estate so the content will remain online long after I am gone. I am a publisher, and I don’t want traffic, or at least the type of traffic that Google provides. One exception causes an argument like the Xoogler’s to be shown as false, even if it is self-serving.

Third, the essay points its self-righteous finger at “regulators.” The essay suggests that elected officials pursued “illegitimate complaints” from publishers. I noted this passage:

Prior to these laws, no one ever asked permission to link to a website or paid to do so. Quite the contrary, if anyone got paid, it was the party doing the linking. Why? Because everybody wants traffic! After all, this is why advertising businesses — publishers and platforms alike — can exist in the first place. They offer distribution to advertisers, and the advertisers pay them because distribution is valuable and seldom free.

Repetition is okay, but I am able to recall one of the key arguments in this Xoogler’s write up: “Everybody wants traffic.” Since it is false, I am not sure the essay’s argumentative trajectory is on the track of logic.

Now we come to the guts of the essay: Artificial intelligence. What’s interesting is that AI magnetically pulls regulators back to the casino. Smart software companies face techno-feudalists in a high-stakes game. I noted this passage about anchoring statements via verification and just training algorithms:

The courts might or might not find this distinction between training and grounding compelling. If they don’t, Congress must step in. By legislating copyright protection for content used by AI for grounding purposes, Congress has an opportunity to create a copyright framework that achieves many competing social goals. It would permit continued innovation in artificial intelligence via the training and testing of LLMs; it would require licensing of content that AI applications use to verify their statements or look up new facts; and those licensing payments would financially sustain and incentivize the news media’s most important work — the discovery and verification of new information — rather than forcing the tech industry to make blanket payments for rewrites of what is already long known.

Who owns the casino? At this time, I would suggest that lobbyists and certain non-governmental entities exert considerable influence over some elected and appointed officials. Furthermore, some AI firms are moving as quickly as reasonably possible to convert interest in AI into revenue streams with moats. The idea is that if regulations curtail AI companies, consumers would not be well served. No 20-something wants to read a newspaper. That individual wants convenience and, of course, advertising.

Now several observations:

- The Xoogler author believes in AI going fast. The technology serves users / customers what they want. The downsides are bleats and shrieks from an outmoded sector; that is, those engaged in news.

- The logic of the technologist is not the logic of a person who prefers nuances. The broad statements are false to me, for example. But to the Xoogler, these are self-evident truths. Get with our program or get left to sleep on cardboard in the street.

- The schism smart software creates is palpable. On one hand, there are those who “get it.” On the other hand, there are those who fight a meaningless battle with the inevitable. There’s only one problem: Technology is not delivering better, faster, or cheaper social fabrics. Technology seems to have some downsides. Just ask a journalist trying to survive on YouTube earnings.

Net net: The attitude of the Xoogler suggests that one cannot shake the sense of being right, entitlement, and logic associated with a Googler even after leaving the firm. The essay makes me uncomfortable for two reasons: [1] I think the author means exactly what is expressed in the essay. News is going to be different. Get with the program or lose big time. And [2] the attitude is one which I find destructive because technology is assumed to “do good.” I am not too sure about that because the benefits of AI are not known and neither are AI’s downsides. Plus, there’s the “everybody wants traffic.” Monopolistic vendors of online ads want me to believe that obvious statement is ground truth. Sorry. I don’t.

Stephen E Arnold, February 13, 2024

Hewlett Packard and Autonomy: Search and $4 Billion

February 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

More than a decade ago, Hewlett Packard acquired Autonomy plc. Autonomy was one of the first companies to deploy what I call “smart software.” The system used Bayesian methods, still quite new to many in the information retrieval game in the 1990s. Autonomy kept its method in a black box assigned to a company from which Autonomy licensed the functions for information processing. Some experts in smart software overlook BAE Systems’ activity in the smart software game. That effort began in the late 1990s if my memory is working this morning. Few “experts” today care, but the dates are relevant.

Between the date Autonomy opened for business in 1996 and HP’s decision to purchase the company for about $11 billion in 2011, there was ample evidence that companies engaged in enterprise search and allied businesses like legal work processes or augmented magazine advertising were selling for much less. Most of the companies engaged in enterprise search simply went out of business after burning through their funds; for example, Delphes and Entopia. Others sold at what I thought were inflated or generous prices; for example, Vivisimo to IBM for about $28 million and Exalead to Dassault for 135 million euros.

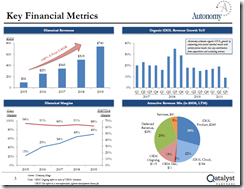

Then along comes HP and its announcement that it purchased Autonomy for a staggering $8 billion. I attended a search-related event when one of the presenters showed this PowerPoint slide:

The idea was that Autonomy’s systems generated multiple lines of revenue, including a cloud service. The key fact on the presentation was that the search-and-retrieval unit was not the revenue rocket ship. Autonomy had shored up its search revenue by acquisition; for example, Soundsoft, Virage, and Zantaz. The company also experimented with bundling software, services, and hardware. But the Qatalyst slide depicted a rosy future because of Autonomy management’s vision and business strategy.

Did I believe the analysis prepared by Frank Quattrone’s team? I accepted some of the comments about the future, and I was skeptical about others. In the period from 2006 to 2012, it was becoming increasingly difficult to overcome some notable failures in enterprise search. The poster child for the problems was Fast Search & Transfer. In a nutshell, Fast Search retreated from Web search, shutting down its Google competitor AllTheWeb.com. The company’s engaging founder John Lervik told me that the future was enterprise search. But some Fast Search customers were slow in paying their bills because of the complexity of tailoring the Fast Search system to a client’s particular requirements. I recall being asked to comment about how to get the Fast Search system to work because my team used it for the FirstGov.gov site (now USA.gov) when the Inktomi solution was no longer viable due to procurement rule changes. Fast Search worked, but it required the same type of manual effort that the Vivisimo system required. Search-and-retrieval for an organization is not a one-size-fits-all thing, a fact Google learned with the spectacular failure of its truly misguided Google Search Appliance product.

Fast Search ended with an investigation related to financial missteps, and Microsoft stepped in during 2008 and bought the company for about $1.2 billion. I thought that was a wild and crazy number, but I was one of the lucky people who managed to get Fast Search to work and knew that most licensees would not have the resources or talent I had at my disposal. Working for the White House has some benefits, particularly when Fast Search for the US government was part of its tie up with AT&T. Thank goodness for my counterpart Ms. Coker. But $1.2 billion for Fast Search? That was absolutely bonkers from my point of view. There were better and cheaper options, but Microsoft did not ask my opinion until after the deal was closed.

Everyone in the HP Autonomy matter keeps saying the same thing like an old-fashioned 78 RPM record stuck in a groove. Thanks, MSFT Copilot. You produced the image really “fast.” Plus, it is good enough like most search systems.

What is the Reuters’ news story adding to this background? Nothing. The reason is that the news story focuses on one factoid: “HP Claims $4 Billion Losses in London Lawsuit over Autonomy Deal.” Keep in mind that HP paid $11 billion for Autonomy plc. Keep in mind that was 10 times what Microsoft paid for Fast Search. Now HP wants $4 billion. Stripping away everything but enterprise search, I could accept that HP could reasonably pay $1.2 billion for Autonomy. But $11 billion made Microsoft’s purchase of Fast Search less nutso. Because, despite technical differences, Autonomy and Fast Search were two peas in a pod. The similarities were significant. The differences were technical. Neither company was poised to grow as rapidly as their stakeholders envisioned.

When open source search options became available, these quickly became popular. Today if one wants serviceable search-and-retrieval for an enterprise application one can use a Lucene / Solr variant or pick one of a number of other viable open source systems.

But HP bought Autonomy and overpaid. Furthermore, Autonomy had potential, but the vision of Mike Lynch and the resources of HP were needed to convert the promise of Autonomy into a diversified information processing company. Autonomy could have provided high value solutions to the health and medical market; it could have become a key player in the policeware market; it could have leveraged its legal software into a knowledge pipeline for eDiscovery vendors to license and build upon; and it could have expanded its opportunities to license Autonomy stubs into broader OpenText enterprise integration solutions.

But what did HP do? It muffed the bunny. Mr. Lynch exited and set up a promising cyber security company and spent the rest of his time in courts. The Reuters’ article states:

Following one of the longest civil trials in English legal history, HP in 2022 substantially won its case, though a High Court judge said any damages would be significantly less than the $5 billion HP had claimed. HP’s lawyers argued on Monday that its losses resulting from the fraud entitle it to about $4 billion.

If I were younger and had not written three volumes of the Enterprise Search Report and a half dozen books about enterprise search, I would write about the wild and crazy years for enterprise search, its hits, its misses, and its spectacular failures (Yes, Google, I remember the Google Search Appliance quite well.) But I am a dinobaby.

The net net is HP made a poor decision and now years later it wants Mike Lynch to pay for HP’s lousy analysis of the company, its management missteps within its own Board of Directors, and its decision to pay $11 billion for a company in a sector in which at the time simply being profitable was a Herculean achievement. So this dinobaby says, “Caveat emptor.”

Stephen E Arnold, February 12, 2024

Regulators Shift into Gear to Investigate an AI Tie Up

January 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Solicitors, lawyers, and avocats want to mark the anniversary of the AI big bang. About one year ago, Microsoft pushed Google into hitting its Code Red button. Investment firms, developers, and wild-eyed entrepreneurs knew smart software was the real deal, not a digital file of a cartoon like that NFT baloney. In the last 12 months, AI went from jargon and eliciting yawns to the treasure map to the fabled city of El Dorado (even if it was a suburb of Grants, New Mexico). Google got the message quickly. The lawyers? Well, not too quickly.

Regulators look through the technological pile of 2023 gadgets. Despite being last year’s big thing, the law makers and justice deciders move into action mode. Exciting. Thanks, MSFT Copilot Bing thing. Good enough.

“EU Joins UK in Scrutinizing OpenAI’s Relationship with Microsoft” documents what happens when lawyers — after decades of inaction — wake to do something constructive. Social media gutted the fabric of many cultural norms. AI isn’t going to be given a 20 year free pass. No way.

The write up reports:

Antitrust regulators in the EU have joined their British counterparts in scrutinizing Microsoft’s alliance with OpenAI.

What will happen now? Here’s my short list of actions:

- Legal eagles on both sides of the Atlantic will begin grooming their feathers in order to be selected to deal with the assorted forms, filings, hearings, and advisory meetings. Some of the lawyers will call Ferrari to make sure they are eligible to buy a supercar; others may cast an eye on an impounded oligarch-linked yacht. Yep, big bucks ahead.

- Microsoft and OpenAI will let loose a platoon of humanoid art history and business administration majors. These professionals will create a wide range of informative explainers. Smart software will be pressed into duty, and I anticipate some smart automation to provide Teflon to the flow of digital documentation.

- Firms, possibly some based in the EU and a few bold souls in the US, will present information making clear that competition is a good thing. Governments must regulate smart software.

- Entities hostile to the EU and the US will also output information or disinformation. Which is what depends on one’s perspective.

In short, 2024 will be an interesting year because one of the major threats to the Google could be converted to the digital equivalent of a eunuch in an Assyrian ruler’s court. What will this mean? Google wins. Unanticipated consequence? Absolutely.

Stephen E Arnold, January 19, 2024

AI Inventors Barred from Patents. For Now

January 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

For anyone wondering whether an AI system can be officially recognized as a patent inventor, the answer in two countries is no. Or at least not yet. We learn from The Fashion Law, “UK Supreme Court Says AI Cannot Be Patent Inventor.” Inventor Stephen Thaler pursued two patents on behalf of DABUS, his AI system. After the UK’s Intellectual Property Office, High Court, and the Court of Appeal all rejected the applications, the intrepid algorithm advocate appealed to the highest court in that land. The article reveals:

“In the December 20 decision, which was authored by Judge David Kitchin, the Supreme Court confirmed that as a matter of law, under the Patents Act, an inventor must be a natural person, and that DABUS does not meet this requirement. Against that background, the court determined that Thaler could not apply for and/or obtain a patent on behalf of DABUS.”

The court also specified the patent applications now stand as “withdrawn.” Thaler also tried his luck in the US legal system but met with a similar result. So is it the end of the line for DABUS’s inventor ambitions? Not necessarily:

“In the court’s determination, Judge Kitchin stated that Thaler’s appeal is ‘not concerned with the broader question whether technical advances generated by machines acting autonomously and powered by AI should be patentable, nor is it concerned with the question whether the meaning of the term ‘inventor’ ought to be expanded … to include machines powered by AI ….’”

So the legislature may yet allow AIs into the patent application queues. Will being a “natural person” soon become unnecessary to apply for a patent? If so, will patent offices increase their reliance on algorithms to handle the increased caseload? Then machines would grant patents to machines. Would natural people even be necessary anymore? Once a techno feudalist with truckloads of cash and flocks of legal eagles pulls up to a hearing, rules can become — how shall I say it? — malleable.

Cynthia Murrell, January 17, 2024

eBay: Still Innovating and Serving Customers with Great Ideas

January 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I noted “eBay to Pay $3 Million after Couple Became the Target of Harassment, Stalking.” If true, the “real” news report is quite interesting. The CBS professionals report:

“eBay engaged in absolutely horrific, criminal conduct. The company’s employees and contractors involved in this campaign put the victims through pure hell, in a petrifying campaign aimed at silencing their reporting and protecting the eBay brand,” Levy [a US attorney] said. “We left no stone unturned in our mission to hold accountable every individual who turned the victims’ world upside-down through a never-ending nightmare of menacing and criminal acts.”

MSFT Copilot could not render Munsters and one of their progeny opening a box. But the image is “good enough,” which is the modern way to define excellence. Well done, MSFT.

In what could have been a skit in the now-defunct “The Munsters”, allegedly some eBay professionals packed up “live spiders, cockroaches, a funeral wreath and a bloody pig mask.” The box was shipped to a couple of people who posted about the outstanding online flea market eBay on social media. A letter, coffee, or Zoom were not sufficient for the exceptional eBay executives. Why Zoom when one can bundle up some cockroaches and put them in a box? Go with the insects, right?

I noted this statement in the “real” news story:

seven people who worked for eBay’s Safety and Security unit, including two former cops and a former nanny, all pleaded guilty to stalking or cyberstalking charges.

Those posts were powerful indeed. I wonder if eBay considered hiring the people to whom the Munster fodder was sent. Individuals with excellent writing skills and the agility to evoke strong emotions are in demand in some companies.

A civil trial is scheduled for March 2025. The story has legs, maybe eight of them just like the allegedly alive spiders in the eBay gift box. Outstanding management decision making appears to characterize the eBay organization.

Stephen E Arnold, January 16, 2024

Google, There Goes Two Percent of 2022 Revenues. How Will the Company Survive?

January 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

True or false: Google owes $5 billion US. I am not sure, but the headline in Metro makes the number a semi-factoid. So let’s see what could force Googzilla to transfer the equivalent of less than two percent of Google’s alleged 2022 revenues. Wow. That will be painful for the online advertising giant. Well, fire some staff; raise ad rates; and boost the cost of YouTube subscriptions. Will the GOOG survive? I think so.

An executive ponders a court order to pay the equivalent of two percent of 2022 revenues for unproven alleged improper behavior. But the court order says, “Have a nice day.” I assume the court is sincere. Thanks, MSFT Copilot Bing thing. Good enough.

“Google Settles $5,000,000,000 Claim over Searches for Intimate and Embarrassing Things” reports:

Google has agreed to settle a US lawsuit claiming it secretly tracked millions of people who thought they were browsing privately through its Incognito Mode between 2016 and 2020. The claim was seeking at least $5 billion in damages, including at least $5,000 for each user affected. Ironically, the terms of the settlement have not been disclosed, but a formal agreement will be submitted to the court by February 24.

My thought is that Google’s legal eagles will not be partying on New Year’s Eve. These fine professionals will be huddling over their laptops, scrolling for-fee legal databases, and using Zoom (the Google video service is a bit of a hassle) to discuss ways to [a] delay, [b] deflect, [c] deny, and [d] dodge the obviously [a] fallacious, [b] foul, [c] false, [d] flimsy, and [e] flawed claims that Google did anything improper.

Hey, incognito means what Google says it means, just like the “unlimited” data claims from wireless providers. Let’s not get hung up on details. Just ask the US regulatory authorities.

For you and me, we need to read Google’s terms of service, check our computing device’s security settings, and continue to live in a Cloud of Unknowing. The allegations that Google mapping vehicles did Wi-Fi sniffing? Hey, these assertions are [a] fallacious, [b] foul, [c] false, [d] flimsy, and [e] flawed. Tracking users? Op. cit., gentle reader.

Never has a commercial enterprise been subjected to so many [a] unwarranted, [b] unprovable, [c] unacceptable, and [d] unnecessary assertions. Here’s my take: [a] The Google is innocent; [b] the GOOG is misunderstood, [c] Googzilla is a victim. I ticked a, b, and c.

Stephen E Arnold, January 1, 2024

The American Way: Loose the Legal Eagles! AI, Gray Lady, AI.

December 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

With the demands of the holidays, I have been remiss in commenting upon the festering legal sores plaguing the “real” news outfits. Advertising is tough to sell. Readers want some stories, not every story. Subscribers churn. The dead tree version of “real” news turns yellow in the windows of the shrinking number of bodegas, delis, and coffee shops interested in losing floor space to “real” news displays.

A youthful senior manager enters Dante’s fifth circle of Hades, the Flaming Legal Eagles Nest. Beelzebub wishes the “real” news professional good luck. Thanks, MSFT Copilot, I encountered no warnings when I used the word “Dante.” Good enough.

Google may be coming out of the dog training school with some slightly improved behavior. The leash does not connect to a shock collar, but maybe the courts will curtail some of the firm’s more interesting behaviors. The Zuckbook and X.com are news shy. But the smart software outfits are ripping the heart out of “real” news. That hurts, and someone is going to pay.

Enter the legal eagles. The target is AI or smart software companies. The legal eagles say, “AI, gray lady, AI.”

How do I know? Navigate to “New York Times Sues OpenAI, Microsoft over Millions of Articles Used to Train ChatGPT.” The write up reports:

The New York Times has sued Microsoft and OpenAI, claiming the duo infringed the newspaper’s copyright by using its articles without permission to build ChatGPT and similar models. It is the first major American media outfit to drag the tech pair to court over the use of stories in training data.

The article points out:

However, to drive traffic to its site, the NYT also permits search engines to access and index its content. "Inherent in this value exchange is the idea that the search engines will direct users to The Times’s own websites and mobile applications, rather than exploit The Times’s content to keep users within their own search ecosystem." The Times added it has never permitted anyone – including Microsoft and OpenAI – to use its content for generative AI purposes. And therein lies the rub. According to the paper, it contacted Microsoft and OpenAI in April 2023 to deal with the issue amicably. It stated bluntly: "These efforts have not produced a resolution."

I think this means that the NYT used online search services to generate visibility, access, and revenue. However, it did not expect, understand, or consider that when a system indexes content, that content is used for other search services. Am I right? A doorway works two ways. The NYT wants it to work one way only. I may be off base, but the NYT is aggrieved because it did not understand the direction of AI research which has been chugging along for 50 years.

What do smart systems require? Information. Where do companies get content? From online sources accessible via a crawler. How long has this practice been chugging along? Since the early 1990s, even earlier if one considers text and command line only systems. Plus the NYT tried its own online service and failed. Then it hooked up with LexisNexis, only to pull out of the deal because the “real” news was worth more than LexisNexis would pay. Then the NYT spun up its own indexing service. Next the NYT dabbled in another online service. Plus the outfit acquired About.com. (Where did those writers get that content? I know the answer, but does the Gray Lady remember?)

Now with the success of another generation of software which the Gray Lady overlooked, did not understand, or blew off because it was dealing with high school management methods in its newsroom — now the Gray Lady has let loose the legal eagles.

What do I make of the NYT and online? Here are the conclusions I reached working on the Business Dateline database and then as an advisor to one of the NYT’s efforts to distribute the “real” news to hotels and steam ships via facsimile:

- Newspapers are not very good at software. Hey, those Linotype machines were killers, but the XyWrite software and subsequent online efforts have demonstrated remarkable ways to spend money and progress slowly.

- The smart software crowd is not in touch with the thought processes of those in senior management positions in publishing. When the groups try to find common ground, arguments over who pays for lunch are more common than a deal.

- Legal disputes are expensive. Many of those engaged reach some type of deal before letting a judge or a jury decide which side is the winner. Perhaps the NYT is confident that a jury of its peers will find the evil AI outfits guilty of a range of heinous crimes. But maybe not? Is the NYT a risk taker? Who knows. But the NYT will pay some hefty legal bills as it rushes to do battle.

Net net: I see the NYT’s efforts following a basic game plan. Ask for money. Learn that the money offered is less than the value the NYT slaps on its “real” news. The smart software outfit does what it has been doing. The NYT takes legal action. The lawyers engage. As the fees stack up, the idea that a deal is needed makes sense.

The NYT will do a deal, declare victory, and go back to creating “real” news. Sigh. Why? Microsoft has more money and can tie up the matter in court until Hell freezes over, in my opinion. If the Gray Lady prevails, chalk up a win. But the losers can just up their cash offer, and the Gray Lady will smile a happy smile.

Stephen E Arnold, December 29, 2023

A Grade School Food Fight Could Escalate: Apples Could Become Apple Sauce

December 25, 2023

This essay is the work of a dumb dinobaby. No smart software required.

A squabble is blowing up into a court fight. “Beeper vs Apple Battle Intensifies: Lawmakers Demand DOJ Investigation” reports:

US senators have urged the DOJ to probe Apple’s alleged anti-competitive conduct against Beeper.

Apple killed a messaging service in the name of protecting apple pie, mom, love, truth, justice, and the American way. Ooops, sorry. That’s something from the Superman comix.

“You squashed my apple. You ruined my lunch. You ruined my life. My mommy will call your mommy, and you will be in trouble,” says the older, more mature child. The principal appears and points out that screeching is not comely. Thanks, MSFT Copilot. Close enough for horseshoes.

The article said:

The letter to the DOJ is signed by Minnesota Senator Amy Klobuchar, Utah Senator Mike Lee, Congressman Jerry Nadler, and Congressman Ken Buck. They have urged the law enforcement body to investigate “whether Apple’s potentially anti-competitive conduct against Beeper violates US antitrust laws.” Apple has been constantly trying to block Beeper Mini and Beeper Cloud from accessing iMessage. The two Beeper messaging apps allow Android users to interact with iPhone users through iMessage — an interoperability Apple has been opposed to for a long time now.

As if law enforcement did not have enough to think about. Now an alleged monopolist is engaged in a grade school cafeteria spat with a younger, much smaller entity. By golly, that big outfit is threatened by the jejune, immature, and smaller service.

How will this play out?

- A payday for Beeper when Apple makes the owners of Beeper an offer that would be tough to refuse. Big piles of money can alter one’s desire to fritter away one’s time in court.

- The dust up spirals upwards. What if the attitude toward Apple’s approach to its competitors becomes a crusade to encourage innovation in a tough environment for small companies? Containment may be difficult.

- The jury decision against Google may kindle more enthusiasm for another probe of Apple and its posture in some tricky political situations; for example, the iPhone in China, the non-repairability issues, and Apple’s mesh of inter-connected services which may be seen as digital barriers to user choice.

In 2024, Apple may find that some government agencies are interested in the fruit growing on the company’s many trees.

Stephen E Arnold, December 25, 2023