Antitrust: Can Google Dodge Guilt Again?

October 9, 2025

The US Department of Justice brought an antitrust case against Google, and Alphabet Inc. got away with a slap on the wrist. John Polonis via Medium shared the details and his opinion in “Google’s Antitrust Escape And Tech’s Uncertain Future.” The Department of Justice can’t claim a victory in this case because its most aggressive proposals to curtail Google’s power will not be implemented.

The court did impose restrictions that ban exclusivity deals and require data sharing, but that’s all. It’s also nothing like the antitrust outcome of the Microsoft case in the 2000s. The judge behind the decision, Amit Mehta, approached the remedies with a dose of humility:

“Judge Mehta also exercised humility when forcing Google to share data. Google will need to share parts of its search index with competitors, but it isn’t required to share other data related to those results (e.g., the quality of web pages). The reason for so much humility? Artificial intelligence. The judge emphasized Google’s new reality; how much harder it must fight to keep up with competitors who are seizing search queries that Google previously monopolized across smartphones and browsers.

Google can no longer use its financial clout like it did when it was the 900 pound gorilla of search. It’s amazing how much can change between the filing of an antitrust case and adjudication (generative AI didn’t even exist!).”

Google is now free to go hog wild with its AI projects without regulation. Unlike Microsoft after its antitrust litigation, Google has not lost any competitive edge, and it is free to do whatever it wants.

Polonis makes a very accurate point:

“The message is clear. Unless the government uncovers smoking gun evidence of deliberate anticompetitive intent — the kind of internal emails and memos that doomed Microsoft in the late 1990s (“cut off Netscape’s air supply”) — judges are reluctant to impose the most extreme remedies. Courts want narrow, targeted fixes that minimize unnecessary disruption. And the remedies should be directly tied to the anticompetitive conduct (which is why Judge Mehta focused so heavily on exclusivity agreements).”

Big Tech has a barrier-free sandbox in which to experiment and conduct AI business deals. Judge Mehta’s decision has shaped society in ways we can’t predict; even AI doesn’t know the future yet. What will the US judicial process deliver in Google’s advertising legal dust up? We know Google can write checks to make problems go away. Will this work again for this estimable firm?

Whitney Grace, October 8, 2025

Big Tech Group Think: Two Examples

October 3, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Do the US tech giants do group think? Let’s look at two recent examples of the behavior and then consider a few observations.

First, navigate to “EU Rejects Apple Demand to Scrap Landmark Tech Rules.” The thrust of the write up is that Apple is not happy with the European Union’s digital competition law, the Digital Markets Act (DMA). Why? The EU is not keen on Apple’s business practices. Sure, people in the EU use Apple products and services, but the data hoovering makes some of those devoted Apple lovers nervous. Apple’s position is that the EU is annoying.

Thanks, Midjourney. Good enough.

The write up says:

“Apple has simply contested every little bit of the DMA since its entry into application,” retorted EU digital affairs spokesman Thomas Regnier, who said the commission was “not surprised” by the tech giant’s move.

Apple wants to protect its revenue, its business models, and its scope of operation. My interpretation of the legal spat: Governments are annoying and should not interfere with a US company of Apple’s stature.

Second, take a look at the Verge story “Google Just Asked the Supreme Court to Save It from the Epic Ruling.” The idea is that the online store restricts what a software developer can do. Forget that the Google Play Store provides access to some sporty apps. A bit of spice is the difficulty one has posting reviews of certain Play Store apps. And refunds for apps that don’t work? Yeah, no problemo.

The write up says:

… [Google] finally elevated its Epic v. Google case, the one that might fracture its control over the entire Android app ecosystem, to the Supreme Court level. Google has now confirmed it will appeal its case to the Supreme Court, and in the meanwhile, it’s asking the Court to press pause one more time on the permanent injunction that would start taking away its control.

It is observation time:

- The two technology giants are not happy with legal processes designed to enforce rules, regulations, and laws. The fix is to take the approach of a five year old, “I won’t clean up my room.”

- The group think appears to operate on the premise that US outfits of a certain magnitude should not be hassled like Gulliver by Lilliputians wearing robes, blue suits, and maybe a powdered wig or hair extenders.

- The approach of the two companies strikes me, a definite non-lawyer, as identical.

Therefore, the mental processes of these two companies appear to be aligned. Is this part of the mythic Silicon Valley “way”? Is it a consequence of spending time on Highway 101 or the Foothill Expressway thinking big thoughts? Is the approach the petulance that goes with superior entities encountering those who cannot get with the program?

My view: After decades of doing whatever, some outfits believe that type of freedom is the path to enlightenment, control, and money. Reinforced behaviors lead to what sure looks like group think to me.

Stephen E Arnold, October 3, 2025

Google and Its End Game

October 1, 2025

No smart software involved. Just a dinobaby’s work.

I read “In Court Filing, Google Concedes the Open Web Is in Rapid Decline.” The write up reveals that change is causing the information highway to morph into a stoplight-choked Dixie Highway. The article states:

Google says that forcing it to divest its AdX marketplace would hasten the demise of wide swaths of the web that are dependent on advertising revenue. This is one of several reasons Google asks the court to deny the government’s request.

Yes, so much depends upon the Google, just as William Carlos Williams observed in his poem “The Red Wheelbarrow.” I have modified the original to reflect the Googley era, which is now emerging for everyone, including Ars Technica, to see:

so much depends upon the Google, glazed with data beside the chicken regulators.

The cited article notes:

As users become increasingly frustrated with AI search products, Google often claims people actually love AI search and are sending as many clicks to the web as ever. Now that its golden goose is on the line, the open web is suddenly “in rapid decline.” It’s right there on page five of the company’s September 5 filing…

Not only does Google say this, but the company has also been actively building the infrastructure for Google to become the “Internet.” No way, you say.

Sorry, way. Here’s what’s been going on since the initial public offering:

- Attract traffic and monetize access to that traffic via ads

- Increased data collection for marketing and “mining” for nuggets; that is, user behavior and related information

- Little by little, force “creators,” Web site developers, partners, and users to just let Google provide access to the “information” Google provides.

Smart software, which can recreate certain Web site content, is just one more technology that allows Google to extend its control over its users, its advertisers, and its partners.

Courts in the US have essentially hit pause on traffic lights controlling the flows of Google activity. Okay, Google has to share some information. How long will it take for “information” to be defined, adjudicated, and resolved?

The European Union is printing out invoices for Google to pay for assorted violations. Guess what? That’s the cost of doing business.

Net net: The Google will find a way to monetize its properties, slap taxes at key junctions, and shape information in ways that its competitors wish they could.

Yes, there is a new Web or Internet. It’s Googley. Adapt and accept. Feel free to get Google out of your digital life. Have fun.

Stephen E Arnold, October 3, 2025

xAI Sues OpenAI: Former Best Friends Enable Massive Law Firm Billings

September 30, 2025

This essay is the work of a dumb dinobaby. No smart software required.

What lucky judge will handle the new dust up between two tech bros? What law firms will be able to hire some humans to wade through documents? What law firm partners will be able to buy that Ferrari of their dreams? What selected jurors will have an opportunity to learn or at least listen to information about smart software? I don’t think Court TV will cover this matter 24×7. I am not sure what smart software is, and the two former partners are probably going to explain it in somewhat similar ways. I mean, as former partners, these two Silicon Valley luminaries shared ideas, some Philz coffee, and probably a meal or two at a joint similar to the Anchovy Bar. California rolls for the two former pals.

When two Silicon Valley high-tech elephants fight, the lawyers begin billing. Thanks, Venice.ai. Good enough.

“xAI Sues OpenAI, Alleging Massive Trade Secret Theft Scheme and Poaching” makes it clear that the former BFFs are taking their beef to court. The write up says:

Elon Musk’s xAI has taken OpenAI to court, alleging a sweeping campaign to plunder its code and business secrets through targeted employee poaching. The lawsuit, filed in federal court in California, claims OpenAI ran a “coordinated, unlawful campaign” to misappropriate xAI’s source code and confidential data center strategies, giving it an unfair edge as Grok outperformed ChatGPT.

After I read the story, I have to confess that I am not sure exactly what allegedly happened. I think three loyal or semi-loyal xAI (Grok) types interviewed at OpenAI. As part of the conversations, valuable information was appropriated from xAI and delivered to OpenAI. Elon (Tesla) Musk asserts that xAI was damaged. xAI wants its information back. Plus, xAI wants the data deleted, payment of legal fees, etc. etc.

What I find interesting about this type of dust up is that if it goes to court, the “secret” information may be discussed and possibly described in detail by those crack Silicon Valley real “news” reporters. The hassle between i2 Ltd. and that fast-tracker Palantir Technologies began with some promising revelations. But the lawyers worked out a deal and the bulk of the interesting information was locked away.

My interpretation of this legal spat is probably going to make some lawyers wince and informed individuals wrinkle their foreheads. So be it.

- Mr. Musk is annoyed, and this lawsuit may be a clear signal that OpenAI is outperforming xAI and Grok in the court of consumer opinion. Grok is interesting, but ChatGPT has become the shorthand way of saying “artificial intelligence.” OpenAI is spending big bucks as ChatGPT becomes a candidate for word of the year.

- The deal between or among OpenAI, Nvidia, and a number of other outfits probably pushed Mr. Musk to summon his attorneys. Nothing ruins an executive’s day more effectively than a big buck lawsuit and the opportunity to pump out information about how one firm harmed another.

- OpenAI and its World Network are moving forward. What’s problematic for Mr. Musk in my opinion is that xAI wants to do a similar type of smart cloud service. That’s annoying. To be fair, Google, Meta, and BlueSky are in this same space too. But OpenAI is the outfit that Mr. Musk has identified as a really big problem.

How will this work out? I have no idea. The legal spat will be interesting to follow if it actually moves forward. I can envision a couple of years of legal work for the lawyers involved in this issue. Perhaps someone will actually define what artificial intelligence is and exactly how something based on math and open source software becomes a flash point? When Silicon Valley titans fight, the lawyers get to bill and bill a lot.

Stephen E Arnold, September 30, 2025

Google Is Going to Race Penske in Court!

September 15, 2025

Written by an unteachable dinobaby. Live with it.

How has smart software affected the Google? On the surface, we have the Code Red klaxons. Google presents big-time financial results, so the sirens are drowned out by the cheers for big bucks. We have Google dodging problems with the Android and Chrome snares, so the sounds are like little chicks peeping in the eventide.

----

FYI: The Penske Outfits

- Penske Corporation itself focuses on transportation, truck leasing, automotive retail, logistics, and motorsports.

- Penske Media Corporation (PMC), a separate entity led by Jay Penske, owns major media brands like Rolling Stone and Billboard.

----

What’s actually going on is different, if the information in “Rolling Stone Publisher Sues Google Over AI Overview Summaries” is accurate. [Editor’s note: I love the over-over lingo, don’t you?] The write up states:

Google has insisted that its AI-generated search result overviews and summaries have not actually hurt traffic for publishers. The publishers disagree, and at least one is willing to go to court to prove the harm they claim Google has caused. Penske Media Corporation, the parent company of Rolling Stone and The Hollywood Reporter, sued Google on Friday over allegations that the search giant has used its work without permission to generate summaries and ultimately reduced traffic to its publications.

Site traffic metrics are an interesting discipline. What exactly are the log files counting? Automated pings, clicks, views, downloads, etc.? Google is the big gun in traffic, and it has legions of SEO people who are more like cheerleaders for making sites Googley, doing the things that Google wants, and pitching Google advertising to get sort of reliable traffic to a Web site.

The SEO crowd is busy inventing new types of SEO. Now one wants one’s weaponized content to turn up as a link, snippet, or footnote in an AI output. Heck, some outfits are pitching to put ads on the AI output page because money is the name of the game. Pay enough and the snippet or summary of the answer to the user’s prompt may contain a pitch for that item of clothing or electronic gadget one really wants to acquire. Psychographic ad matching is marvelous.

The write up points out that an outfit I thought was into auto racing and truck rentals but is now a triple threat in publishing has a different take on the traffic referral game. The write up says:

Penske claims that in recent years, Google has basically given publishers no choice but to give up access to its content. The lawsuit claims that Google now only indexes a website, making it available to appear in search, if the publisher agrees to give Google permission to use that content for other purposes, like its AI summaries. If you think you lose traffic by not getting clickthroughs on Google, just imagine how bad it would be to not appear at all.

Google takes a different position, probably baffled why a race car outfit is grousing. The write up reports:

A spokesperson for Google, unsurprisingly, said that the company doesn’t agree with the claims. “With AI Overviews, people find Search more helpful and use it more, creating new opportunities for content to be discovered. We will defend against these meritless claims.” Google Spokesperson Jose Castaneda told Reuters.

Gizmodo, the source for the cited article about the truck rental outfit, has done some original research into traffic. I quote from the cited article:

Just for kicks, if you ask Google Gemini if Google’s AI Overviews are resulting in less traffic for publishers, it says, “Yes, Google’s AI Overview in search results appears to be resulting in less traffic for many websites and publishers. While Google has stated that AI Overviews create new opportunities for content discovery, several studies and anecdotal reports from publishers suggest a negative impact on traffic.”

I have some views on this situation, and I herewith present them to you:

- Google is calm on the outside but in crazy mode internally. The Googlers are trying to figure out how to keep revenues growing as referral traffic and online advertising undergo some modest change. Is the glacier calving? Yep, but it is modest because a glacier is big and the calf is small.

- The SEO intermediaries at the Google are communicating like Chatty Cathies to the SEO innovators. The result will be a series of shotgun marriages among the lucrative ménage à trois of Google’s ad machine, search engine optimization professionals, and advertising services firms in order to lure advertisers to a special private island.

- The bean counters at Google are looking at their MBA course materials, exam notes for CPAs, and reading books about forensic accounting in order to make the money furnaces at Google hot using less cash as fuel. This, gentle reader, is a very, very difficult task. At another time, a government agency might be curious about the financial engineering methods, but at this time, attention is directed elsewhere I presume.

Net net: This is a troublesome point. Google has lots of lawyers and probably more cash to spend on fighting the race car outfit and its news publications. Did you know that the race outfit owns the definitive publication about heavy metal as well as Billboard magazine?

Stephen E Arnold, September 15, 2025

Google: The EC Wants Cash, Lots of Cash

September 15, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

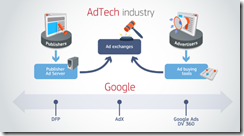

The European Commission is not on the same page as the judge involved in the Google case. Googzilla is having a bit of a vacay because Android and Chrome are still able to attend the big data party. But the EC? Not invited and definitely not welcome. “Commission Fines Google €2.95 Billion over Abusive Practices in Online Advertising Technology” states:

The European Commission has fined Google €2.95 billion for breaching EU antitrust rules by distorting competition in the advertising technology industry (‘adtech’). It did so by favouring its own online display advertising technology services to the detriment of competing providers of advertising technology services, advertisers and online publishers. The Commission has ordered Google (i) to bring these self-preferencing practices to an end; and (ii) to implement measures to cease its inherent conflicts of interest along the adtech supply chain. Google has now 60 days to inform the Commission about how it intends to do so.

The news release includes a third-grade-level diagram, presumably to make sure that American legal eagles who may not read at the same grade level as their European counterparts can figure out the scheme. Here it is:

For me, the main point is that Google is in the middle. This racks up what crypto cronies call “gas fees” or service charges. Whatever happens, Google gets some money, and no other diners are allowed in the Mountain View giant’s dining hall.

There are explanations and other diagrams in the cited article. The main point is clear: The EC is not cowed by the Googlers or their rationalizations and explanations about how much good the firm does.

Stephen E Arnold, September 15, 2025

Here Is a Happy Thought: The Web Is Dead

September 12, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.

I read “One of the Last, Best Hopes for Saving the Open Web and a Free Press Is Dead.” If the headline is insufficiently ominous, how about the subtitle:

The Google ruling is a disaster. Let the AI slop flow and the writers, journalists and creators get squeezed.

The write up says:

Google, of course, was one of the worst actors. It controlled (and still controls) an astonishing 90% of the search engine market, and did so not by consistently offering the best product—most longtime users recognize the utility of Google Search has been in a prolonged state of decline—but by inking enormous payola deals with Apple and Android phone manufacturers to ensure Google is the default search engine on their products.

The subject is the US federal court’s ruling that Google must share data. Google’s other activities are just ducky. The write up presents this comment:

The only reason that OpenAI could even attempt to do anything that might remotely be considered competing with Google is that OpenAI managed to raise world-historic amounts of venture capital. OpenAI has raised $60 billion, a staggering figure, but also a sum that still very much might not be enough to compete in an absurdly capital intensive business against a decadal search monopoly. After all, Google drops $60 billion just to ensure its search engine is the default choice on a single web browser for three years. [Note: The SAT word “decadal” sort of means over 10 years. The Google has been doing search control for more than 20 years, but “more than 20 years” is not sufficiently erudite, I guess.]

The point is that competition at these dollar amounts means that elephants are fighting. Guess what loses? The grass, the ants, and the earthworms.

The write up concludes:

The 2024 ruling that Google was an illegal monopoly was a glimmer of hope at a time when platforms were concentrating ever more power, Silicon Valley oligarchy was on the rise, and it was clear the big tech cartels that effectively control the public internet were more than fine with overrunning it with AI slop. That ruling suggested there was some institutional will to fight against the corporate consolidation that has come to dominate the modern web, and modern life. It proved to be an illusion.

Several observations are warranted:

- Money talks; common sense walks

- AI is having dinner at the White House; the legal eagles involved in this high-profile matter got the message

- I was not surprised; the author was surprised and somewhat annoyed that the Internet is dead.

The US mechanisms remind me of how my father described government institutions in Campinas, Brazil, in the 1950s: Carry contos and distribute them freely. [Note: A conto was 1,000 cruzeiros at the time. Today the word applies to 1,000 reais.]

Stephen E Arnold, September 12, 2025

Google Does Its Thing: Courts Vary in their Views of the Outfit

September 10, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.

I am not sure I understand how the US legal system or any other legal system works. A legal procedure headed by a somewhat critical judge has allowed the Google to keep on doing what it is doing: Selling ads, collecting personal data, and building walled gardens even if they encroach on a kiddie playground.

However, at the same time, the Google was found to be a bit too frisky in its elephantine approach to business.

The first example is that Google was found liable for collecting user data after users had disabled data collection. The details of this gross misunderstanding of how the superior thinkers at Google interpreted assorted guidelines and user settings appear in “Jury Slams Google Over App Data Collection to Tune of $425 Million.” Now to me that sounds like a lot of money. To the Google, it is a cash flow issue which can be addressed by negotiation, slow administrative response, and consulting firm speak. The write up says:

Google attorney Benedict Hur of Cooley LLP told jurors Google “certainly thought” it had permission to access the data. He added that Google lets users know it will continue to collect certain types of data, even if they toggle off web activity.

Quite an argument.

The other write up with some news about Google behavior is “France Fines Google, Shein Record Sums over Cookie Law Violations.” I found this passage in the write up interesting:

France’s data protection watchdog CNIL on Wednesday fined Google €325 million ($380 million) and fast-fashion retailer Shein €150 million ($175 million) for violating cookie rules. The record penalties target two platforms with tens of millions of French users, marking among the heaviest sanctions the regulator has imposed.

Several observations are warranted:

- Google is manifesting behavior similar to the China-linked outfit Shein. Who is learning from whom?

- Some courts find Google problematic; other courts think that Google is just doing okay Googley things.

- A showdown may occur from outside the United States if a nation state just gets fed up with Google doing exactly whatever it wants.

I wonder if anyone at Google is thinking about hassling the French judiciary in the remainder of 2025 and into 2026. If so, it may be instructive to recall how the French judiciary addressed Telegram, a 13-year-old case of digital Toxic Epidermal Necrolysis. Pavel Durov, Telegram’s founder, was arrested, interrogated for four days, and must report to French authorities every couple of weeks. His legal matter is moving through a judicial system noted for its methodical and red-tape-choked processes.

Fancy a nice dinner in Paris, Google?

Stephen E Arnold, September 10, 2025

Google Monopoly: A Circle Still Unbroken

September 9, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.

I am no lawyer, and I am not sure how the legal journey will unfold for the Google. I assume Google is still a monopoly. Google, however, is not happy with the recent court decision that appears to be a light tap on Googzilla’s snout. The sky is not falling, and no errant piece of space junk has collided with the Mountain View campus.

I did notice a post on the Google blog with a cute URL. The words “outreach-initiatives,” “public policy,” and “DOJ search decision” speak volumes to me.

The post carries this Google title, well, a Googley command:

Read our statement on today’s decision in the case involving Google Search

Okay, snap to it. The write up instructs:

Competition is intense and people can easily choose the services they want. That’s why we disagree so strongly with the Court’s initial decision in August 2024 on liability.

Okay, no em dashes, so Gemini did not write the sentence, although it may contain some words rarely associated with Googley things. These are words like “easily choose.” Hey, I thought Google was a monopoly. The purpose of the construct is to take steps to narrow choice. The Chicago stockyards used fences, guides, and designated killing areas. But the cows don’t have a choice. The path is followed and the hammer drops. Thonk.

The write up adds:

Now the Court has imposed limits on how we distribute Google services, and will require us to share Search data with rivals. We have concerns about how these requirements will impact our users and their privacy, and we’re reviewing the decision closely.

The logic is pure blue chip consultant with a headache. I like the use of the word “imposed.” Does Google impose anything on its users, for instance, irrelevant search results, filtered YouTube videos, or the roll-up of user-generated information in Google services? Of course not. A Google user can easily choose which videos to view on YouTube. A person looking for information can easily choose to access Web content on another Web search system. Just use Bing, Ecosia, or Phind. I like “easily.”

What strikes me is the command language and the huffiness about the decision.

Wow, I love Google. Is it a monopoly? Definitely not Android or Chrome. Ads? I don’t know. Probably not.

Stephen E Arnold, September 9, 2025

Bending Reality or Creating a Question of Ownership and Responsibility for Errors

September 3, 2025

No AI. Just a dinobaby working the old-fashioned way.

The Google has many busy digital beavers working in the superbly managed organization. The BBC, however, seems to be agitated about what may be a truly insignificant matter: Ownership of substantially altered content and responsibility for errors introduced into digital content.

“YouTube secretly used AI to Edit People’s Videos. The Results Could Bend Reality” reports:

In recent months, YouTube has secretly used artificial intelligence (AI) to tweak people’s videos without letting them know or asking permission.

The BBC ignores a couple of issues that struck me as significant if (please note the “if”) the assertion about YouTube altering content belonging to another entity is accurate. I will address these after some more BBC goodness.

I noted this statement:

the company [Google] has finally confirmed it is altering a limited number of videos on YouTube Shorts, the app’s short-form video feature.

Okay, the Google digital beavers are beavering away.

I also noted this passage attributed to Samuel Woolley, the Dietrich chair of disinformation studies at the University of Pittsburgh:

“You can make decisions about what you want your phone to do, and whether to turn on certain features. What we have here is a company manipulating content from leading users that is then being distributed to a public audience without the consent of the people who produce the videos…. “People are already distrustful of content that they encounter on social media. What happens if people know that companies are editing content from the top down, without even telling the content creators themselves?”

Here are the issues I thought about after reading the BBC’s write up:

- If Google changes a video (improvements, enhancements, AI additions, whatever), will Google “own” the resulting content? My thought is that if Google can make more money by using AI to create a “fair use” argument, it will. How long will it take a court (assuming these are still functioning) to figure out whether Google or the individual content creator is the copyright holder?

- When, not if, Google’s AI introduces some type of error, is Google responsible or is it the creator’s problem? My hunch is that Google’s attorneys will argue that it provides a content creator with a free service. See the Terms of Service for YouTube and stop complaining.

- What if a content creator hits a home run and Google’s AI “learns” from it and then outputs content via its assorted AI processes? Will Google be able to deplatform the original creator and just use the content as a way to make money without paying the home-run-hitting YouTube creator?

Perhaps the BBC would like to consider how these tiny “experiments” can expand until they shift the monetization methods further in favor of the Google. Maybe the BBC has not done so because it doesn’t think these types of thoughts. The Google, based on my experience, is indeed having these types of “what if” talks in a sterile room with whiteboards and brilliant Googlers playing with their mobile devices or snacking on goodies.

Stephen E Arnold, September 3, 2025