Japan Alleges Google Is a Monopoly Doing Monopolistic Things. What?

April 28, 2025

No AI, just the dinobaby himself.

The Google has been around a couple of decades or more. The company caught my attention, and I wrote three monographs for a now-defunct publisher in a very damp part of England. These are now out of print, but their titles illustrate my perception of what I affectionately call Googzilla:

- The Google Legacy. I tried to explain why Google’s approach was going to define how future online companies built their technical plumbing. Yep, OpenAI in all its charm is a descendant of those smart lads, Messrs. Brin and Page.

- Google Version 2.0. I attempted to document the shift in technical focus from search relevance to a more invasive approach to using user data to generate revenue. The subtitle, I thought at the time, gave away the theme of the book: “The Calculating Predator.”

- Google: The Digital Gutenberg. I presented information about how Google’s “outputs,” from search results to more sophisticated content structures like profiles of people, places, and things, were preparing Google to reinvent publishing. I was correct because the new head of search (Prabhakar Version 2.0) is making little reports the big thing in search results. This will doom many small publications because Google just tells you what it wants you to know.

I wrote these monographs between 2002 and 2008. I must admit that my 300-page Enterprise Search Report sold more copies than my Google work. But I think my Google trilogy explained what Googzilla was doing. No one cared.

Now I learn “Japan orders Google to stop pushing smartphone makers to install its apps.”* Okay, a little slow on the trigger, but government officials in the land of the rising sun figured out that Google is doing what Google has been doing for decades.

Enlightenment arrives!

The article reports:

Japan has issued a cease-and-desist order telling Google to stop pressuring smartphone makers to preinstall its search services on Android phones. The Japan Fair Trade Commission said on Tuesday Google had unfairly hindered competition by asking for preferential treatment for its search and browser from smartphone makers in violation of the country’s anti-monopoly law. The antitrust watchdog said Google, as far back as July 2020, had asked at least six Android smartphone manufacturers to preinstall its apps when they signed the license for the American tech giant’s app store…

Google has been to this rodeo before. At the end of a legal process, Google will apologize, write a check, and move on down the road.

The question for me is, “How many other countries will see Google as a check writing machine?”

Quite a few in my opinion. The only problem is that these actions have taken many years to move from the thrill of getting a Google mouse pad to actual governmental action. (The best Google freebie was its flashing LED pin. Mine corroded and no longer flashed. I dumped it.)

Note for the * — Links to Microsoft “news” stories often go dead. Suck it up and run a query for the title using Google, of course.

Stephen E Arnold, April 28, 2025

FOGINT: Pavel Durov Responds to His Problems without Crisis PR Inputs

April 22, 2025

Before the US National Cyber Crime Conference, possibly significant news emerged about Telegram.

In my Telegram lecture at the NCCC 2025, I don’t talk about the psychological and financial impact Pavel Durov experienced as a result of his interaction with the French government. He was greeted at a Paris airport and detained. He talked with French officials. He hired Kaminski and his associates to represent him in the legal matter. Within a few weeks of being confined to France (although not in St. Denis or the Maison d’arrêt de Fleury-Mérogis), he seemed to be showing interest in providing some government authorities with Telegram “user names” or made-up handles and phone numbers (some real and some obtained through services providing temporary phone numbers). Mr. Durov, via indirect communication methods, seemed to indicate that he was doing what he had always done: following the rules of the jurisdictions in which Telegram operated. Then, without going to the French equivalent of a trial, Mr. Durov was allowed to return to Dubai, United Arab Emirates, one of the countries that have granted him citizenship.

Upon his return, he took some interesting actions:

- He began fiddling with some knobs and dials in order to get the TONcoin out of its doldrums

- He ramped up the marketing activities of the TON Foundation. (Please keep in mind that Telegram and its proxy say they do not do marketing. The Telegram entities also do not do personnel or content control.) Telegram and the Open Network Foundation (aka TON Foundation or ONF) are “there is no there there” or virtual outfits making do with coffee shop meetings, Telegram Messenger interactions, and maybe some face-to-face activity in a rented temporary space.

- He started talking on CNN, for example.

From one of my team, I learned a couple of new “facts” this morning (April 22, 2025, 6 am US Eastern time). This individual reported:

Telegram has never shared private messages. The source is https://news.az/news/t-elegram-has-never-shared-private-messages-durov-says. Now there are two types of messages on Telegram Messenger. First, there are the default messages. These are encrypted from sender to the Telegram command-and-control system. There the messages are decrypted and processed. Then the messages are re-encrypted and forwarded to the recipient who opens them. The second type of message is the “secret” message, which requires that the recipient be on Telegram when the sender creates and sends the message. That message is forwarded by the command-and-control system to the recipient. These “secret” messages remain encrypted for their digital journey. What Telegram shares with law enforcement is the IP address and the phone number of the sender. I will leave it to you to consider the “value” to Telegram’s command-and-control center of logging the metadata for messages and what happens when an investigator receives a bogus or temporary mobile number.
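The two message paths described above can be modeled in a toy Python sketch. It is a hypothetical model of that description, not Telegram’s MTProto code: the party names, the `Leg` type, and the helper functions are invented for illustration, and no real cryptography is performed. The point is narrow. In the default mode the server is among the parties able to read content; in the secret mode it is not, but it still handles both legs and so can see routing metadata.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Leg:
    """One leg of a message's trip, plus the parties able to decrypt that leg."""
    src: str
    dst: str
    key_holders: frozenset[str]


def route(mode: str) -> list[Leg]:
    """Toy route for the two message types (hypothetical model, not Telegram's code)."""
    if mode == "default":
        # Leg 1 is encrypted for the server, which decrypts and processes the
        # message, then re-encrypts it for the recipient on leg 2.
        return [
            Leg("sender", "server", frozenset({"sender", "server"})),
            Leg("server", "recipient", frozenset({"server", "recipient"})),
        ]
    if mode == "secret":
        # One end-to-end ciphertext travels both legs; the server only relays it.
        e2e = frozenset({"sender", "recipient"})
        return [Leg("sender", "server", e2e), Leg("server", "recipient", e2e)]
    raise ValueError(f"unknown mode: {mode!r}")


def can_read_content(mode: str) -> set[str]:
    """Parties holding a key for at least one leg can read the plaintext."""
    readers: set[str] = set()
    for leg in route(mode):
        readers |= leg.key_holders
    return readers


def sees_metadata(mode: str) -> set[str]:
    """Every party that handles a leg sees routing metadata (who, when, which IP),
    whether or not it can decrypt the content."""
    parties: set[str] = set()
    for leg in route(mode):
        parties |= {leg.src, leg.dst}
    return parties


for mode in ("default", "secret"):
    print(mode, sorted(can_read_content(mode)), sorted(sees_metadata(mode)))
```

Running it shows the server dropping out of the “can read” set for secret messages while remaining in the “sees metadata” set for both modes.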

A second item he shared with me this morning is that Pavel Durov continues to find himself at the edge of chaos with Russia. According to Meduza.io:

Russia slaps Telegram with another multi-million-ruble fine for refusing to take down ‘prohibited content’.

Several observations may be warranted:

- For a company that does not “do” marketing, Pavel Durov has been a busy and willing marketer on behalf of the Telegram operation. In fact, he seems to be sending out the message, “We cooperate with law enforcement.” Then he tries to make clear that he doesn’t reveal too much when Telegram cooperates with investigators.

- Two possibilities: [a] Mr. Durov, a Russian citizen, may be working with certain Russian officials to create the appearance of a problem with Russia in order to reduce the pressure from France, or [b] Russia is acting independently to let Mr. Durov know he is a person of interest to the Kremlin. In short, which is it? Is Mr. Durov an asset for a certain country or is he a problem for a certain country in which some of his family and possibly some of his “core developers” reside? Uncomfortable either way I think.

- Telegram and its crypto play are not enjoying significant TONcoin upsides. The TONcoin is not the would-be high flier, Hamster Kombat home run it was prior to Mr. Durov’s arrest.

What’s clear is that Mr. Durov’s legal problems in France have been resolved. What is Mr. Durov’s relationship with the Russian government? Fuzzy stuff.

Net net: Mr. Durov’s recent actions appear to be signals that suggest Telegram is going to have to pull a rabbit from someone’s hoodie.

Stephen E Arnold, April 22, 2025

JudyRecords: Is It Back or Did It Never Go Away?

April 22, 2025

Believe it or not, no smart software. Just a dumb and skeptical dinobaby.

I was delighted to see that JudyRecords is back online. Here’s what the service says as of April 19, 2025:

Judyrecords is a 100% free nationwide search engine that lets you instantly search hundreds of millions of United States court cases and lawsuits. judyrecords has over 100x more cases than Google Scholar and 10x more cases than PACER, the official case management system of the United States federal judiciary. As of Jul 2022, judyrecords now features free full-text search of all United States patents from 1/1/1976 to 07/01/2022, over 8.1 million patents in total.

My thought is that lawyers, law students, and dinobabies like me will find the service quite useful.

The JudyRecords Web site adds:

The first 500K results are displayed instead of just the first 2K.

- murder – 926K cases

- fraud – 2.1 million cases

- burglary – 3.7 million cases

- assault – 8.2 million cases

Most people don’t realize that the other “free” search engines limit the number of hits shown to the user. The old-fashioned ideas of precision and recall are not operative with most of the people whom I encounter. At the Googleplex, precision and recall are treated like a snappy joke when the Sundar & Prabhakar Comedy Show appears in a major venue such as a courtroom.

If you want to control the results, JudyRecords provides old-fashioned and definitely unpopular methods such as Boolean logic. I can visualize the GenZs rolling their eyes and mouthing, “Are you crazy, old man?”
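JudyRecords’ own query syntax is not spelled out here, so what follows is a generic sketch of Boolean retrieval, not its implementation: a tiny inverted index with AND, OR, and NOT over invented case summaries. The helper names (`build_index`, `postings`, `and_`, `or_`, `not_`) and the sample documents are hypothetical.

```python
from __future__ import annotations

import re


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase word to the set of document IDs that contain it."""
    index: dict[str, set[str]] = {}
    for doc_id, text in docs.items():
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            index.setdefault(term, set()).add(doc_id)
    return index


def postings(index: dict[str, set[str]], term: str) -> set[str]:
    # Exact word match only: no stemming, so "dismiss" and "dismissed" differ.
    return set(index.get(term.lower(), set()))


def and_(*sets: set[str]) -> set[str]:
    return set.intersection(*sets) if sets else set()


def or_(*sets: set[str]) -> set[str]:
    return set.union(*sets) if sets else set()


def not_(universe: set[str], excluded: set[str]) -> set[str]:
    return universe - excluded


# Hypothetical case summaries, invented for illustration.
docs = {
    "case-1": "Wire fraud indictment; motion to dismiss denied",
    "case-2": "Mail fraud complaint dismissed",
    "case-3": "Burglary and assault charges",
    "case-4": "Securities fraud class action",
}
index = build_index(docs)
everything = set(docs)

# fraud AND (wire OR mail) AND NOT dismissed
hits = and_(
    postings(index, "fraud"),
    or_(postings(index, "wire"), postings(index, "mail")),
    not_(everything, postings(index, "dismissed")),
)
print(sorted(hits))  # ['case-1']; every match is returned, nothing is capped
```

The exact-match behavior is deliberate in this sketch: it shows why a searcher who controls the query gets predictable results, and why a missing stem or synonym quietly lowers recall.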

Please, check out JudyRecords because the outstanding management visionaries at LexisNexis, Thomson Reuters, and other “professional” publishers will be taking a look themselves.

Stephen E Arnold, April 22, 2025

Kiddie Loving Google and Data Hoovering

April 22, 2025

If you do not have kids or grandkids in school, you may have missed Google’s very successful foray into K-12 education. Google’s “Workspace for Education” tools are free to schools, but is the company providing them purely from a sense of civic duty? Of course not. Bloomberg Law reports, “Google Hit with Lawsuit over Data Collection on School Kids.” Apparently, US schools did not learn from Denmark’s 2022 ban on Google Workspace in its schools. Or they decided savings and convenience trumped student privacy and parental consent. Writer Isaiah Poritz tells us:

“Google LLC is unlawfully using its products, ubiquitous in K-12 education, to secretly gather information about school age children, substituting the consent of the school for that of parents, a proposed class action filed in California federal court said Monday. The tech giant collects not only traditional education records ‘but thousands of data points that span a child’s life,’ and ‘neither students nor their parents have agreed to this arrangement,’ according to the US District Court for the Northern District of California complaint.”

This is a significant breach, if true, considering almost 70% of K-12 schools in the US use these tools. We also learn:

“The company doesn’t disclose that it embeds hidden tracking technology in its Chrome browser that creates a child’s unique digital ‘fingerprint,’ the plaintiffs said. The fingerprint allows Google ‘to track a child even when she or her school administrator has disabled cookies or is using technologies designed to block third-party cookies.’ The suit said Google has failed to obtain parental consent to take school children’s personal data. ‘Instead, Google relies on the consent of school personnel alone,’ the complaint said. ‘But school personnel do not have authority to provide consent in lieu of parents.’”

No, they do not. Or they shouldn’t. It seems like parents’ rights groups should have something to say about this. Perhaps they are too busy policing library shelves. The suit alleges Google is both selling students’ data to third parties and using it for its own targeted advertising. We note it would also be very easy, if the firm is so inclined, to build up a profile of a student who later creates a Google account which is then mapped onto that childhood data.

Naturally, Google denies the suit’s allegations. Of course, our favorite company does.

Cynthia Murrell, April 22, 2025

Google: Keep a Stiff Upper Lip and Bring Fiat Currency Other Than Dollars

April 17, 2025

No AI, just the dinobaby himself.

Poor Googzilla. After decades of stomping through virtual barriers, those lovers of tea, fried fish, and cricket have had enough. I think some UK government officials have grown tired of, as Monty Python said:

“...what I object to is you automatically treat me as an inferior...” “Well, I am KING.”

“Google Faces £5bn UK Lawsuit” reports:

The lawsuit alleges that the tech giant has abused its dominant market position to prevent both existing and potential competitors from entering the general search and search advertising markets, thereby allowing Google to impose supra-competitive advertising prices. The lawsuit seeks compensation for thousands of UK advertisers impacted by the company’s actions.

Will Googzilla trample this pesky lawsuit the way cinematic cities fell to its grandfather, Godzilla?

Key allegations include Google’s contracts with smartphone manufacturers and network operators that mandate the pre-installation of Google Search and the Chrome browser on Android devices. The suit also highlights Google’s agreement with Apple, under which it pays to remain the default search engine on iPhones. Plaintiffs argue that these practices have made Google the only practical platform for online search advertising.

I know little about the UK, but I did work for an outfit on Clarendon Terrace, adjacent to Buckingham Palace, for a couple of years. I figured out that the US and UK government officials were generally cooperative, but there were some interesting differences.

Obviously there is the Monty Python canon. Another point of differentiation is a tendency to keep a stiff upper lip and then bang!

The wonderful Google and its quantumly supreme approach to business may be approaching one of those bang moments. Will Google’s solicitors prevail?

I am not very good at fancy probability and nifty gradient descent calculations. I would suggest that Googzilla bring a checkbook or a valid cargo container filled with an acceptable fiat currency. Pounds, euros, or Swiss francs are probably acceptable at this particular point in business history.

Oh, that £5bn works out to about 5.4 billion Swiss francs.

Stephen E Arnold, April 17, 2025

The French Are Going After Enablers: Other Countries May Follow

April 16, 2025

Another post by the dinobaby. Judging by the number of machine-generated images of young female “entities” I receive, this 80-year-old must be quite fetching to some scammers with AI. Who knew?

Energized by the French judiciary’s ability to reason with Pavel Durov, the Paris Judicial Tribunal is going after what I call “enablers.” The term applies to the legitimate companies which make their online services available to customers. With the popularity of self-managed virtual machines, the online services firms receive an online order, collect a credit card, validate it, and let the remote customer set up and manage a computing resource.

Hey, the approach is popular and does not require expensive online service technical staff to do the handholding. Just collect the money and move forward. I am not sure the Paris Judicial Tribunal is interested in virtual anything. According to “French Court Orders Cloudflare to ‘Dynamically’ Block MotoGP Streaming Piracy”:

In the seemingly endless game of online piracy whack-a-mole, a French court has ordered Cloudflare to block several sites illegally streaming MotoGP. The ruling is an escalation of French blocking measures that began increasing their scope beyond traditional ISPs in the last few months of 2024. Obtained by MotoGP rightsholder Canal+, the order applies to all Cloudflare services, including DNS, and can be updated with ‘future’ domains.

The write up explains:

The reasoning behind the blocking request is similar to a previous blocking order, which also targeted OpenDNS and Google DNS. It is grounded in Article L. 333-10 of the French Sports Code, which empowers rightsholders to seek court orders against any outfit that can help to stop ‘serious and repeated’ sports piracy. This time, SECP’s demands are broader than DNS blocking alone. The rightsholder also requested blocking measures across Cloudflare’s other services, including its CDN and proxy services.

The approach taken by the French provides a framework which other countries can use to crack down on what seem to be legal online services. Many of these outfits expose one face to the public and regulators. Like the fictional Dr. Jekyll and Mr. Hyde, these online service firms make it possible for bad actors to provide a number of services to a special clientele; for example:

- Providing outlets for hate speech

- Hosting all or part of a Dark Web eCommerce site

- Allowing “roulette wheel” DNS changes for streaming sites distributing sports events

- Enabling services used by encrypted messaging companies whose clientele engages in illegal activity

- Hosting images of a controversial nature.

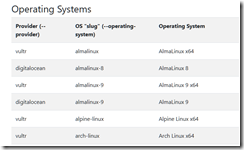

How can this be? Today’s technology makes it possible for an individual to do a search for a DMCA ignored advertisement for a service provider. Then one locates the provider’s Web site. Using a stolen credit card and the card owner’s identity, the bad actor signs up for a service from these providers:

This is a partial list of Dark Web hosting services compiled by SporeStack. Do you recognize the vendors Digital Ocean or Vultr? I recognized one.

These providers offer virtual machines and an API for interaction. With a bit of effort, the online providers have set up a vendor-customer experience that allows the online provider to say, “We don’t know what customer X is doing.” A cyber investigator has to poke around hunting for the “service” identified in the warrant in the hopes that the “service” will not be “gone.”

My view is that the French court may be ready to make life a bit less comfortable for some online service providers. The cited article asserts:

… the blockades may not stop at the 14 domain names mentioned in the original complaint. The ‘dynamic’ order allows SECP to request additional blockades from Cloudflare, if future pirate sites are flagged by French media regulator, ARCOM. Refusal to comply could see Cloudflare incur a €5,000 daily fine per site. “[Cloudflare is ordered to implement] all measures likely to prevent, until the date of the last race in the MotoGP season 2025, currently set for November 16, 2025, access to the sites identified above, as well as to sites not yet identified at the date of the present decision,” the order reads.
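For a sense of scale, here is the arithmetic under one stated assumption: Cloudflare refuses to block all 14 domains named in the original complaint, and the €5,000 daily fine applies per site as the cited article describes. Any additional domains flagged by ARCOM under the “dynamic” order would add to this figure.

$$
14 \text{ sites} \times 5{,}000 \text{ EUR per site per day} = 70{,}000 \text{ EUR per day}
$$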

The US has a proposed site-blocking bill as well.

But the French may continue to push forward using the “Pavel Durov action” as evidence that sitting on one’s hands and worrying about international repercussions is a waste of time. If companies like Amazon and Google operate in France, the French could begin tire kicking in the hopes of finding a bad wheel.

Mr. Durov believed he was not going to have a problem in France. He is a French citizen. He had the big time Kaminski firm represent him. He has lots of money. He has 114 children. What could go wrong? For starters, the French experience convinced him to begin cooperating with law enforcement requests.

Now France is getting some first-hand experience with the enablers. Those who dismiss France as a land with too many different types of cheese may want to spend a few moments reading about French methods. Only one nation has some special French judicial savoir faire.

Stephen E Arnold, April 16, 2025

Meta a Great Company Lately?

April 10, 2025

Sorry, no AI used to create this item.

Despite Google’s attempt to flood the zone with AI this and AI that, Meta kept popping up in my newsfeed this morning (April 10, 2025). I pushed past the super confidential information from the US District Court for the Northern District of California (an amazing and typically incoherent extract of super confidential information) and focused on a non-fiction author.

The Zuck – NSO Group dust up does not make much of a factoid that Wikipedia describes in considerable detail. The encyclopedia entry is “Onavo.” In a nutshell, Facebook acquired a company which used techniques not widely known to obtain information about users of an encrypted app. According to Wikipedia, Facebook was aware of Onavo before it purchased the company in 2013. My thought is that someone in the Facebook organization learned about other Israeli specialized software firms. Given the high profile NSO Group had as a result of its participation in certain intelligence-related conferences and the relatively small community of specialized software developers in Israel, Facebook may have learned about the Big Kahuna, NSO Group. My personal view is that Facebook and probably more than a couple of curious engineers learned how specialized software purpose-built to cope with mobile phone data worked and were more than casually aware of its systems and methods.

The Meta – NSO Group dust up is an interesting case. Perhaps someday someone will write up how the Zuck precipitated a trial which, to an outsider, looks like a confused government-centric firm facing a teenager with a grudge. Will this legal matter turn a playground-type argument about who is on whose team into an international kidney stone for the specialized software sector? For now, I want to pick up the Meta thread and talk about Washington, DC.

The Hill, an interesting publication about interesting institutions, published “Whistleblower Tells Senators That Meta Undermined U.S. Security, Interests.” The author, Sarah Wynn-Williams, is a former Zucker who worked as the director of global public policy at Facebook. If memory serves me, she labored at the estimable firm when Zuck was undergoing a political awakening.

The Hill reports:

Wynn-Williams told Hawley’s panel that during her time at Meta: “Company executives lied about what they were doing with the Chinese Communist Party to employees, shareholders, Congress and the American public,” according to a copy of her remarks. Her most explosive claim is that she witnessed Meta executives decide to provide the Chinese Communist Party with access to user data, including the data of Americans. And she says she has the “documents” to back up her accusations.

After the Zuck attempted to block, prevent, thwart, or delete Ms. Wynn-Williams’ book Careless People: A Cautionary Tale of Power, Greed, and Lost Idealism from seeing the light of a Kindle, I purchased the book. Silicon Valley tell-alls are usually somewhat entertaining. It is a mark of distinction for Ms. Wynn-Williams that she crafted a non-fiction write up that made me downright uncomfortable. Too much information about body functions and allegations about sharing information with a country not getting likes from too many people in certain Washington circles made me queasy. Dinobabies are often sensitive creatures unless they grow up to be Googzillas.

The Hill says:

Wynn-Williams testified that Meta started briefing the Chinese Communist party as early as 2015, and provided information about critical emerging technologies and artificial intelligence. “There’s a straight line you can draw from these briefings to the recent revelations that China is developing AI models for military use,” she said.

“But isn’t open source AI software the future?” a voice in my head said.

What adds some zip to the appearance is this factoid from the article:

Wynn-Williams has filed a shareholder resolution asking the company’s board to investigate its activity in China and filed whistleblower complaints with the Securities and Exchange Commission and the Department of Justice.

I find it fascinating that on the West Coast, Facebook is unhappy with intelware being used on a Zuck-purchased service to obtain information about alleged persons of interest. About the same time, on the East Coast, a former Zucker is asserting that the estimable social media company buddied up to a nation-state not particularly supportive of American interests.

Assuming that the Northern District court case is “real” and “actual factual” and that Ms. Wynn-Williams’ statements are “real” and “actual factual,” what can one hypothesize about the estimable Meta outfit? Here are my thoughts:

- Meta generates little windstorms of controversy. It doesn’t need to flood the zone with Google-style “look at us” revelations. Meta just stirs up storms.

- On the surface, Meta seems to have an interesting public posture. On one hand, the company wants to bring people together for good, etc. etc. On the other, the company could be seen as annoyed that another company used its acquired service to do data collection at odds with Meta’s own pristine approach to information.

- The tussles are not confined to tiny spaces. The West Coast matter concerns what I call intelware. When specialized software is no longer “secret,” the entire sector gets a bit of an uncomfortable feeling. Intelware is a global issue. Meta’s approach is in my opinion spilling outside the courtroom. The East Coast matter is another bigly problem. I suppose allegations of fraternization with a nation-state less than thrilled with the US approach to life could be seen as “small.” I think Ms. Wynn-Williams has a semi-large subject in focus.

Net net: [a] NSO Group cannot avoid publicity which could have an impact on a specialized software sector that should have remained in a file cabinet labeled “Secret.” [b] Ms. Wynn-Williams could have avoided sharing what struck me as confidential company information and some personal stuff as well. The book is more than a tell-all; it is a summary of what could be alleged intentional anti-US activity. [c] Online seems to be the core of innovation, finance, politics, and big money. Just forty-five years ago, when I gave talks about the impact of online information, I called myself the Data Bunny and, believe it or not, wore white bunny rabbit ears for a cheap laugh and to make the technical information more approachable. Today many know online has impact. It has gone from a technical oddity used by fewer than 5,000 people to the disruption of the specialized software sector by a much-loved organization chock full of Zuckers.

Stephen E Arnold, April 10, 2025

Original Research: Not-So-Original Assertions about Content Appropriation

April 2, 2025

No AI. Just a dinobaby sharing an observation about younger managers and their innocence.

The Social Science Research Council published the 30-plus page report “Beyond Public Access in LLM Pre-Training Data.” The subtitle reveals the principal finding: “Non-Public Book Content in OpenAI’s Models.”

The write up states:

Using a legally obtained dataset of 34 copyrighted O’Reilly Media books, we apply the DE-COP membership inference attack method to investigate whether OpenAI’s large language models were trained on copyrighted content without consent. Our AUROC scores show that GPT-4o, OpenAI’s more recent and capable model, demonstrates strong recognition of paywalled O’Reilly book content (AUROC = 82%), compared to OpenAI’s earlier model GPT-3.5 Turbo. In contrast, GPT-3.5 Turbo shows greater relative recognition of publicly accessible O’Reilly book samples. GPT-4o Mini, as a much smaller model, shows no knowledge of public or non-public O’Reilly Media content when tested (AUROC ≈ 50%). Testing multiple models, with the same cutoff date, helps us account for potential language shifts over time that might bias our findings. These results highlight the urgent need for increased corporate transparency regarding pre-training data sources as a means to develop formal licensing frameworks for AI content training.

I want to mention that the DE-COP method provides one way to determine whether a specific data record was part of the training dataset for a machine learning model. The SSRC’s research suggests that, as OpenAI enhanced its models, it used non-public content. Smaller (less capable) OpenAI models seemed to know less about the test set; the bigger models knew more.
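AUROC, the metric the quoted report relies on, can be read as a ranking probability. Assume each passage gets a single recognition score from a DE-COP-style quiz (for example, how often a model picks the verbatim passage over paraphrases) and that passages are split into a group that might be in the training data and a control group that cannot be, such as text published after the model’s cutoff. A minimal sketch of the computation follows; the function name and the scores are hypothetical, and this is not the report’s code.

```python
from __future__ import annotations


def auroc(member_scores: list[float], nonmember_scores: list[float]) -> float:
    """Probability that a randomly chosen suspected-member passage outscores a
    randomly chosen control passage; ties count as half a win. This pairwise
    form equals the Mann-Whitney U statistic divided by the number of pairs."""
    if not member_scores or not nonmember_scores:
        raise ValueError("both groups need at least one score")
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))


# Hypothetical per-passage scores, invented for illustration.
suspected = [0.9, 0.6, 0.4]
control = [0.5, 0.3]
print(round(auroc(suspected, control), 3))  # 0.833 (5 of 6 pairs won)
print(auroc([0.5, 0.5], [0.5, 0.5]))        # 0.5: the scores carry no signal
```

Read this way, an AUROC near 50 percent (the GPT-4o Mini result) means the scores do not separate the two groups at all, while 82 percent means a suspected passage outranks a control passage roughly 82 percent of the time.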

The write up concludes that developers of smart software are sucking down data without obtaining permission or entering into licensing deals with publishers, copyright holders, or entities working on content not yet available as public content like this dinobaby blog post.

To sum up: The not-so-original assertion that certain organizations just take content and ignore rights appears to be accurate, at least in this set of research. OpenAI accelerates. Speed is a positive. Breaking speed limits is believed to be no big deal.

A bit of a flashback: Over 40 years ago, I participated in a series of meetings to license the right to create abstracts of original articles from more than 1000 different publications. Our efforts were successful. Most publishers of academic business and management publications knew zero about online information services. The idea of summarizing an eight-page article about executive compensation was unfamiliar to most of the people with whom our organization interacted over a period of years. Nevertheless, the owner of the database unit of the Courier Journal & Louisville Times Company insisted that we get signed agreements. The process was time-consuming and expensive. Some outfits had representatives, so a negotiation went through layers of bureaucracy. Other publishers wanted a sit-down meeting and a dog-and-pony show to explain why we wanted to boil down a 600-word article to about 150 words. There were worries about the impact of online access. There were worries about control of editorial content. There were worries about signing away rights. We couldn’t assuage worries, but we chugged along. The reputations of the Courier Journal and Barry Bingham Jr. helped, but our small database operation had to follow the rules.

If the data in the cited document are accurate, the attitude of Barry Bingham Jr. would not have gained much traction at OpenAI. Instead of my getting a pat on the head for setting up the licensing deals with the able help of other Courier Journal professionals, I would have gotten kicked on the shin or worse.

Several observations:

- Observing content ownership remains important despite the acceleration toward illegal behaviors in the online sector

- Using show-and-tells and explaining the benefits of participating in digital innovations remains important. Knowledge minimizes anger and other negative reactions to pre-emptive behavior

- Managing the process is as important as demonstrating an unwillingness to conform to industry, social, and legal norms.

Let’s assume that the findings in the SSRC report are off by 50 percent. Does that change what appears to be a strong possibility of some fancy dancing at the OpenAI disco?

My conclusion is that taking short cuts is part of the Silicon Valley approach of doing whatever and just saying, “Wow, I am sorry.”

I asked ChatGPT 4 via You.com this question:

Should I create an online product and just use content from the Harvard Business Review without permission?

Here’s the response:

No, you should not create an online product using content from the Harvard Business Review (HBR) without permission. Doing so would likely violate copyright laws and HBR’s usage policies. Here’s why:

HBR’s Copyright and Usage Policies

- HBR Material is Copyrighted: Harvard Business Review explicitly states that its content is protected by copyright, and no part of its publications may be reproduced without permission.

- Usage Restrictions: HBR has strict policies regarding how its content can be used. For example, even in academic settings, HBR articles cannot be freely distributed unless done through specific channels like creating course packs via "Harvard Business Publishing for Educators".

- Permission is Required: If you want to use HBR content, you must obtain explicit permission. This includes reproducing, distributing, or using their material in any form.

Legal and Ethical Implications

- Copyright Infringement: Using HBR content without permission would likely constitute copyright infringement, which can result in legal action, fines, or other penalties.

- Ethical Concerns: Beyond legal issues, using someone else’s intellectual property without authorization is unethical and could harm your reputation or business.

What You Should Do Instead

- Seek Permission: Contact Harvard Business Review to request permission to use their content. They may have licensing options available for your intended use.

- Create Original Content: Instead of using HBR’s material, consider creating your own original content inspired by their ideas (but without copying their text or proprietary materials).

- Use Public Domain or Licensed Content: Look for content that is in the public domain or available under a license (e.g., Creative Commons) that allows commercial use.

Conclusion

Using HBR content without permission is not only illegal but also against their explicit policies. To avoid legal and ethical issues, either seek proper licensing or create your own original material.

In this case, OpenAI’s AI may be more intelligent than some people engaged in content acquisition at the firm. But I am a dinobaby. I try to follow the social, legal, and ethical rules my parents, who had only high school educations, taught me. I am a dinobaby. A sad dinobaby.

Stephen E Arnold, April 2, 2025

Dog Whistle Only Law Firm Partners Can Hear: More Profits, Bigger Bonuses!

March 21, 2025

Dinobaby, here. No smart software involved unlike some outfits. I did use Sam AI-Man’s art system to produce the illustration in the blog post.


Truth be told, we don’t do news. The write ups in my “placeholder” blog are my way to keep track of interesting items. Some of these I never include in my lectures. Some find their way into my monographs. The FOGINT stuff: Notes for my forthcoming monograph about Telegram, the Messenger mini app, and that lovable marketing outfit, the Open Network Foundation. If you want to know more, write benkent2020 at yahoo dot com. Some slacker will respond whilst scrolling Telegram Groups and Channels for interesting items.

Thanks, Sam AI-Man.

But this write-up is an exception. This is a post about an article in the capitalist tool. (I have always liked the ring of the slogan. I must admit when I worked in the Big Apple, I got a kick out of Malcolm Forbes revving his Harley at the genteel biker bar. But the slogan and the sound of the Hog? Unforgettable.)

What is causing me to stop my actual work to craft a blog post at 7 am on March 21, 2025? This article in Forbes Magazine. You know, the capitalist tool. Like a vise grip for Peruvian prison guards, I think.

“Risk Or Revolution: Will AI Replace Lawyers?” sort of misses the main point of smart software and law firms. I will address the objective of big-time law firms in a moment, but I want to look at what Hessie Jones, the strategist or stratagiste maybe, has to say:

Over the past few years, a growing number of legal professionals have embraced AI tools to boost efficiency and reduce costs. According to recent figures, nearly 73% of legal experts now plan to incorporate AI into their daily operations. 65% of law firms agree that "effective use of generative AI will separate the successful and unsuccessful law firms in the next five years."

Talk about leading the witness. “Who is your attorney?” The person in leg cuffs and an old-fashioned straitjacket says, “Mr. Gradient Descent, your honor.”

The judge, a savvy fellow who has avoided social media criticism, says, “Approach the bench.”

Silence.

The write up says:

Afolabi [a probate lawyer, a graduate of Osgoode Law School, York University in Canada] who holds a master’s from the London School of Economics, describes the evolution of legal processes over the past five years, highlighting the shift from paper-based systems to automated ones. He explains that the initial client interaction, where they tell a story and paint a picture remains crucial. However, the method of capturing and analyzing this information has changed significantly. "Five years ago, that would have been done via paper. You’re taking notes," Afolabi states, "now, there’s automation for that." He emphasizes that while the core process of asking questions remains, it’s now "the machine asking the questions." Automation extends to the initial risk analysis, where the system can contextualize the kind of issues and how to best proceed. Afolabi stresses that this automation doesn’t replace the lawyer entirely: "There’s still a lawyer there with the clients, of course."

Okay, the human lawyer, not the Musk-envisioned Grok 3 android robot, will approach the bench. Well, someday.

Now the article’s author delivers the payoff:

While concerns about AI’s limitations persist, the consensus is clear: AI-driven services like Capita can make legal services more affordable and accessible without replacing human oversight.

After finishing this content marketing write up, I had several observations:

- The capitalist tool does not point out the entire purpose of the original Forbes: knock out Fortune Magazine and deliver information that will make a reader money.

- The article ignores the reality that smart software fiddling with word probabilities makes errors. Whether it was made-up cases like Michael Cohen’s brush with AI or telling me that a Telegram-linked outfit did not host a conference in Dubai, those mistakes might add some friction to smart software speeding down the information highway.

- Lawyers will use AI to cut costs and speed billing cycles. In my opinion, lawyers don’t go to jail. Their clients do.

Let’s imagine the hog-riding Malcolm at his desk pondering great thoughts like this:

“It’s so much easier to suggest solutions when you don’t know too much about the problem.”

The problem for law firms will be solved by smart software; that is, reducing costs. Keep in mind, lawyers don’t go to jail that often. The AI hype train has already pulled into the legal profession. Will the result be better lawyering? I am not sure, because once a judge or jury makes a decision, the survey pool is split 50-50.

But those bonuses? Now that’s what AI can deliver. (Imagine the sound of a dog whistle with an AI logo, please.)

PS. If you are an observer of blue-chip consulting firms, the same payoff logic applies. Both species have evolved to hear the more-money frequency.

Stephen E Arnold, March 21, 2025

A NewPlay for Google and Huawei: A Give and Go?

March 14, 2025

Time for Google to get smarmy in court. We learn from TorrentFreak, "Google Must Testify as LaLiga Demands Criminal Liability for ‘Piracy Profits’." Writer Andy Maxwell summarizes:

"A court in Murcia, Spain, has ordered Google to testify in a criminal case concerning IPTV app, NewPlay. Football league LaLiga, whose matches were allegedly offered illegally through the app, previously called for the directors of Google, Apple, and Huawei to face criminal charges. LaLiga criticized the companies for failing to disable copies of NewPlay already installed on users’ devices. Google and Huawei must now testify as ‘profit-making participants’ in an alleged piracy scheme."

See the write-up for the twists and turns of the case thus far. The key point: Removing NewPlay from app stores was not enough for LaLiga. The league demands that the companies reach in and remove the app from devices it already inhabits. The court agrees. We learn:

"The court order required Google, Apple, and Huawei to disable or delete NewPlay to prevent future use on users’ mobile devices. That apparently didn’t happen. Unhappy with the lack of compliance, in 2024 LaLiga called on the investigating judge to punish the tech companies’ directors. LaLiga says that the installed NewPlay apps still haven’t been remotely disabled but given the precedent that may set, LaLiga seems unlikely to let the matter go without a fight. With support from Telefónica, Mediapro and rights group EGEDA, LaLiga wants to hold Google and Huawei responsible for pirated content reportedly made available via the NewPlay app."

So now the court is requiring reps from Google and Huawei to appear and address this partial compliance. Why not Apple, too? That is a mystery, reports Maxwell. He also wonders about the bigger picture. The NewPlay website is still up, he notes, though both its internal and external links are currently disabled. Besides, at least one other app exists that appears to do the same thing. Will the court become embroiled in a long-term game of IPTV whack-a-mole? Google, it seems, is a magnet for the courts.

Cynthia Murrell, March 14, 2025