Apple Emulates the Timnit Gebru Method

October 26, 2021

Remember Dr. Timnit Gebru? This individual was the researcher who apparently did not go along with the tension flow related to Google’s approach to snorkeling. (Don’t get the snorkel thing? Yeah, too bad.) The solution was to exit Dr. Gebru and move forward in a Googley manner.

Now the Apple “we care about privacy” outfit appears to have reached a “me too moment” in management tactics.

Two quick examples:

First, the very Silicon Valley Verge published “Apple Just Fired a Leader of the #AppleToo Movement.” I am not sure what the AppleToo thing encompasses, but it obviously sparked the Timnit option. The write up says:

Apple has fired Janneke Parrish, a leader of the #AppleToo movement, amid a broad crackdown on leaks and worker organizing. Parrish, a program manager on Apple Maps, was terminated for deleting files off of her work devices during an internal investigation — an action Apple categorized as “non-compliance,” according to people familiar with the situation.

Okay, deletes are bad. I figured that out when Apple elected to get rid of the backspace key.

Second, Gizmodo, another Silicon Valley information service, revealed “Apple Wanted Her Fired. It Settled on an Absurd Excuse.” The write up reports:

The next email said she’d been fired. Among the reasons Apple provided, she’d “failed to cooperate” with what the company called its “investigatory process.”

Hasta la vista, Ashley Gjøvik.

Observations:

- The Timnit method appears to work well when females are involved in certain activities which run contrary to the Apple way. (Note that the Apple way includes flexibility in responding to certain requests from nation states like China.)

- The lack of information about the incidents is apparently part of the disappearing method. Transparency? Yeah, not so much in Harrod’s Creek.

- The one-two disappearing punch is fascinating. Instead of letting the dust settle, do the bang-bang thing.

Net net: Google’s management methods appear to be viral, at least in certain management circles.

Stephen E Arnold, October 26, 2021

What Can Slow Down the GOOG? Lawyers Reviewing AI Research Papers

October 21, 2021

I spotted an allegedly true factoid in “Google’s AI Researchers Say Their Output Is Being Slowed by Lawyers after a String of High Level Exits: Getting Published Really Is a Nightmare Right Now.” Here is the relevant passage from the paywalled item:

According to Google’s own online records, the company published 925 pieces of AI research in 2019, and a further 962 in 2020. But the company looks to have experienced a moderate slowdown this year, publishing just 618 research papers in all of 2021 thus far.

Quite a decrease, particularly in the rarefied atmosphere of the smartest people in the world who want to be in a position to train, test, deploy, and benefit from their smart software.

With management and legal cooks in the Google AI kitchen, the production of AI delicacies seems to be going down. Bad for careers? Good for lawyers? Yes and yes.

Is this a surprise? It depends on whom one asks.

At a time when there is chatter that VCs want to pump money into smart software and when some high profile individuals suggest China is the leader in artificial intelligence, the Google downturn in this facet of research is not good news for the GOOG.

Is there a fix? Sure, but none is going to include turning back the hands of time to undo what I call the Battle of Timnit. The decision to try and swizzle around the issue of baked-in algorithmic bias appears to have blocked some Google researchers’ snorkels. Deep dives without free-flowing research oxygen can be debilitating.

Stephen E Arnold, October 21, 2021

Xoogler Identifies Management Idiosyncrasies and Insecurities

October 18, 2021

Curious about how the GOOG manages? There is an interesting interview on Triggernometry with Taras Kobernyk, a former engineer with the mom-and-pop online advertising company. You can locate the video at this URL. Some useful information emerges in the video. Here are three examples from my notes:

- Google has an American culture

- It’s hard to trust the company

- Management is afraid

I found the interview interesting because the engineer was from the former Soviet Union and seems to have taken some of Google’s Googley statements literally. Result? Job hunt time. Is there “Damore” information to be revealed?

Stephen E Arnold, October 18, 2021

Amazon and Google: Another Management Challenge

October 18, 2021

There’s nothing like two very large companies struggling with a common issue. I read “Nearly 400 Google and Amazon Employees Called for the Companies to End a $1.2 Billion Contract with the Israeli Military.” Is the story true or a bit wide of the mark? I don’t know. It is interesting from an intellectual point of view.

The challenge is a management to-do, a trivial one at that.

According to the write up:

Hundreds of Google and Amazon employees signed an open letter published in The Guardian on Tuesday [presumably October 12, 2021] condemning Project Nimbus, a $1.2 billion contract signed by the two companies to sell cloud services to the Israeli military and government.

Now what?

According to the precepts of the high school science club management method, someone screwed up by hiring individuals who don’t fit in. The solution is to change the rules of employment; that is, let these individuals work from home on projects that would drive an intern insane.

Next up for these two giants will be a close look at the hiring process. Why can’t everyone be like those who lived in the dorm with Sergey and Larry or those who worked with Jeff Bezos when he was a simple Wall Street ethicist?

I will have to wait and see how these giant firms swizzle a solution or two.

Stephen E Arnold, October 18, 2021

An Onion Story: The Facebook Oversight Board Checks Out Rule Following

October 7, 2021

I read “Facebook’s Oversight Board to Review Xcheck System Following Investigation of Internal System That Exempted Certain Users.” Is this a story created for the satirical information service the Onion, MAD Magazine, or the late, lamented Harvard Lampoon?

I noted this passage:

For certain elite users, Facebook’s rules don’t seem to apply.

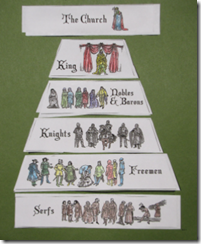

I think this means that there is one set of rules for one group of users and another set of rules for another group of users. In short, the method replicates the tidy structure of a medieval hierarchy; to wit:

The “church” would probably represent the Zuck and fellow technical elite plus a handful of fellow travelers. The king is up for grabs now that the Lean In expert has leaned out. The nobles and barons are those who get a special set of rules. The freemen can buy ads. The serfs? Well, peasants are okay for clicks but not much else.

Now the oversight board, which is supposed to be overseeing, will begin the overseeing process of what appears to be a discriminatory system.

Obviously the oversight board is either in the class of freemen or serfs. I wonder if this Onionesque management method is a variant of the mushroom approach; that is, keep the oversight board and users in the dark and feed them organic matter rich in indole, skatole, hydrogen sulfide, and mercaptans?

That Facebook is an Empyrean spring of excellence in ethics, management, and business processes. My hunch is that not even the outfits like the Onion can match this joke. Maybe Franz (Happy) Kafka could?

Stephen E Arnold, October 7, 2021

Insight into Hacking Targets: Headhunters Make Slip-Ups, but Recruits Often Ignore Them

October 7, 2021

I read “Former NSA Hacker Describes Being Recruited for UAE Spy Program.”

Here’s the passage I noted:

There were no red flags because I was so naive. But… there’s a ton of red flags [in retrospect]…. [For example] when you’re in the interview process and you’re talking about defending [the UAE] and … doing tracking of terrorist activity,… but then you’re [being asked] very specific questions about integrated enterprise Windows environments and [how you might hack them]. Guess who doesn’t have those type of networks? Terrorist organizations. So why [is the recruiter] asking these kinds of questions…?

Several observations:

- Perhaps a training program for those exiting certain government work assignments would be helpful? It could be called “Don’t Be Naïve.”

- Gee, what a surprise: specific questions about hacking integrated enterprise Windows environments. Perhaps Microsoft should think about this statement from the article and adjust its security so that headhunters ask about macOS, Linux, or Android instead?

- Does the government’s monitoring of certain former employees need a quick review?

Stephen E Arnold, October 7, 2021

Zoom Blunders Can Be Tricky for Employees

October 5, 2021

Zoom meetings seemed like the logical answer to collaborating from home during the pandemic, and their popularity is likely to last. However, asks Hacker Noon, “Has Zoom Made Us ‘Embrace the Dark Side’ of Humanity?” Writer Michael Brooks, a remote worker since long before COVID, came across some startling information. He tells us:

“I stumbled upon a Bloomberg article with an axing title: ‘Zoom-Call Gaffes Led to Someone Getting Axed, 1 in 4 Bosses Say.’ According to the results of a survey conducted by Vyopta Inc., which included ‘200 executives at the vice president level or higher at companies with at least 500 employees,’ nearly 25% of employees got fired. Why?! Wait! What? What in the world do you need to do during a Zoom call or any other virtual meeting or conference to get fired? It turns out that ‘mortal-virtual-sins’ include ‘joining a call late, having a bad Internet connection, accidentally sharing sensitive information, and of course, not knowing when to mute yourself.’”

A severe penalty indeed for folks working with an unfamiliar platform amidst the distractions of home, all while coping with the stresses of a global plague. Brooks describes how one might handle similar situations with more compassion:

“There was a baby crying loud in the middle of a meeting with my staff. I asked a proud dad, a member of our team, to introduce an adorable noisemaker. The baby joined and stayed throughout a meeting in her father’s arms. There was another team member who kept forgetting to hit the mute button when she wasn’t talking. The background noise was deafening as if she was calling from the busiest construction site in the world. … For the next meeting, the whole team pretended that there was something wrong with her mic. It lasted for a couple of hilarious minutes. Since then, we’ve never had to remind someone to mute themselves.”

Brooks wonders whether some gaffes represent a sort of rebellion against too much Zooming. If so, one’s job is a high price to pay. He suggests frustrated workers discuss the matter with bosses and coworkers instead of passive-aggressively sabotaging meetings. As for employers, they might want to consider lightening up a bit instead of firing people for very human errors.

Cynthia Murrell, October 5, 2021

Who Is Ready to Get Back to the Office?

October 4, 2021

The pandemic has had many workers asking “hey, who needs an office?” Maybe most of us, according to the write-up, “How Work from Home Has Changed and Became Less Desirable in Last 18 Months” posted at Illumination. Cloud software engineer Amrit Pal Singh writes:

“Work from home was something we all wanted before the pandemic changed everything. It saved us time, no need to commute to work, and more time with the family. Or at least we used to think that way. I must say, I used to love working from home occasionally in the pre-pandemic era. Traveling to work was a pain and I used to spend a lot of time on the road. Not to forget the interrupts, tea breaks, and meetings you need to attend at work. I used to feel these activities take up a lot of time. The pandemic changed it all. In the beginning, it felt like I could work from home all my life. But a few months later I want to go to work at least 2–3 times a week.”

What changed Singh’s mind? Being stuck at home, mainly. There is the expectation that since he is there he can both work and perform household chores each day. He also shares space with a child attending school virtually—as many remote workers know, this makes for a distracting environment. Then there is the loss of work-life balance; when both work and personal time occur in the same space, they tend to blend together and spur monotony. An increase in unnecessary meetings takes away from actually getting work done, but at the same time Singh misses speaking with his coworkers face-to-face. He concludes:

“I am not saying WFH is bad. In my opinion, a hybrid approach is the best where you go to work 2–3 days a week and do WFH the rest of the week. I started going to a nearby cafe to get some time alone. I have written this article in a cafe :)”

Is such a hybrid approach the balance we need?

Cynthia Murrell, October 4, 2021

Big Tech Responds to AI Concerns

October 4, 2021

We cannot decide whether this news represents a PR move or simply three red herrings. Reuters declares, “Money, Mimicry and Mind Control: Big Tech Slams Ethics Brakes on AI.” The article gives examples of Google, Microsoft, and IBM hitting pause on certain AI projects over ethical concerns. Reporters Paresh Dave and Jeffrey Dastin write:

“In September last year, Google’s (GOOGL.O) cloud unit looked into using artificial intelligence to help a financial firm decide whom to lend money to. It turned down the client’s idea after weeks of internal discussions, deeming the project too ethically dicey because the AI technology could perpetuate biases like those around race and gender. Since early last year, Google has also blocked new AI features analyzing emotions, fearing cultural insensitivity, while Microsoft (MSFT.O) restricted software mimicking voices and IBM (IBM.N) rejected a client request for an advanced facial-recognition system. All these technologies were curbed by panels of executives or other leaders, according to interviews with AI ethics chiefs at the three U.S. technology giants.”

See the write-up for more details on each of these projects and the concerns around how they might be biased or misused. These suspensions sound very responsible of the companies, but they may be more strategic than conscientious. Is big tech really ready to put integrity over profits? Some legislators believe regulations are the only way to ensure ethical AI. The article tells us:

“The EU’s Artificial Intelligence Act, on track to be passed next year, would bar real-time face recognition in public spaces and require tech companies to vet high-risk applications, such as those used in hiring, credit scoring and law enforcement. U.S. Congressman Bill Foster, who has held hearings on how algorithms carry forward discrimination in financial services and housing, said new laws to govern AI would ensure an even field for vendors.”

Perhaps, though lawmakers in general are famously far from tech-savvy. Will they find advisors to help them craft truly helpful legislation, or will the industry dupe them into being its pawns? Perhaps Watson could tell us.

Cynthia Murrell, October 4, 2021

The Benefits of Offices with People

September 29, 2021

One result of the pandemic is likely to be with us for a while. Many workers find they prefer working remotely for a number of reasons, and a hefty percentage insist they be allowed to continue doing so. That may not be best for employers, though, at least in the IT field. The journal Nature Human Behaviour shares a study on “The Effects of Remote Work on Collaboration Among Information Workers.” A team of researchers from Microsoft, MIT, and the University of California, Berkeley, examined the internal communications of Microsoft employees during the first half of 2020. They analyzed patterns in emails, calendars, instant messages, video and audio calls, and work hours. The conclusion: remote work has a detrimental effect on collaboration and information sharing. The paper states:

“Our results suggest that shifting to firm-wide remote work caused the collaboration network to become more heavily siloed—with fewer ties that cut across formal business units or bridge structural holes in Microsoft’s informal collaboration network—and that those silos became more densely connected. Furthermore, the network became more static, with fewer ties added and deleted per month. Previous research suggests that these changes in collaboration patterns may impede the transfer of knowledge and reduce the quality of workers’ output. Our results also indicate that the shift to firm-wide remote work caused synchronous communication to decrease and asynchronous communication to increase. Not only were the communication media that workers used less synchronous, but they were also less ‘rich’ (for example, email and IM). These changes in communication media may have made it more difficult for workers to convey and process complex information. We expect that the effects we observe on workers’ collaboration and communication patterns will impact productivity and, in the long-term, innovation.”

It does make sense that communicating face to face would be more effective than any other method. That is primarily how humans have been doing it for thousands of years, after all. We note, though, the study focuses on a period early in the pandemic—perhaps some of these inefficiencies have improved since then. The researchers acknowledge their scope is limited to that time period at that corporation and suggest further study is needed once the pandemic is (finally!) over. The paper suggests large organizations that can collect communications data consider performing their own analysis and sharing those results with the rest of us. See the paper for the many details of the study’s methods, results, conclusions, recommendations, and references.

Cynthia Murrell, September 29, 2021